Count Distinct with PostgreSQL

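Given the use case (the same count distinct is reused by many queries that differ only in the description filter), it is better to split the code into two steps.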

Create a table month_brand based on the following query:

select to_char(transaction_date, 'YYYY-MM') as yyyymm
      ,brand
      ,count(distinct unique_mem_id) as count_distinct_unique_mem_id
from source.table
group by yyyymm
        ,brand
;

Join month_brand with your source table on both month and brand:

select t.transaction_date, t.brand, t.Description, t.Amount,
       t.Amount / m.count_distinct_unique_mem_id as newval
from source.table as t
join month_brand as m
  on m.yyyymm = to_char(t.transaction_date, 'YYYY-MM')
 and m.brand = t.brand
where t.description ilike '%Criteria%'
;
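The two steps above can be tried end to end on a small data set. The following is only a sketch under stated assumptions: it uses Python's built-in sqlite3 module as a stand-in for PostgreSQL, so strftime('%Y-%m', ...) replaces to_char(..., 'YYYY-MM') and like replaces ilike (SQLite's like is case-insensitive for ASCII). The table name transactions, the function newval_by_join and the sample rows are hypothetical and not part of the original question.

```python
import sqlite3

# Hypothetical sample data: (transaction_date, brand, description, amount, unique_mem_id)
SAMPLE_ROWS = [
    ("2016-01-05", "A", "Criteria one", 100.0, 1),
    ("2016-01-09", "A", "other", 50.0, 1),
    ("2016-01-20", "A", "criteria two", 60.0, 2),
    ("2016-01-11", "B", "Criteria three", 30.0, 3),
    ("2016-02-02", "A", "Criteria four", 80.0, 1),
]


def newval_by_join(rows, pattern):
    """Precompute distinct counts per (month, brand), then join and filter."""
    con = sqlite3.connect(":memory:")
    con.execute(
        "create table transactions ("
        "transaction_date text, brand text, description text, "
        "amount real, unique_mem_id integer)"
    )
    con.executemany("insert into transactions values (?, ?, ?, ?, ?)", rows)

    # Step 1 (run once): one row per (month, brand) with its distinct member count.
    con.execute(
        """
        create table month_brand as
        select strftime('%Y-%m', transaction_date) as yyyymm,
               brand,
               count(distinct unique_mem_id) as count_distinct_unique_mem_id
        from transactions
        group by 1, 2
        """
    )

    # Step 2 (run per filter): join on month AND brand, then apply the filter.
    result = con.execute(
        """
        select t.transaction_date, t.brand, t.description,
               t.amount / m.count_distinct_unique_mem_id as newval
        from transactions as t
        join month_brand as m
          on m.yyyymm = strftime('%Y-%m', t.transaction_date)
         and m.brand = t.brand
        where t.description like ?
        order by t.transaction_date, t.brand
        """,
        (pattern,),
    ).fetchall()
    con.close()
    return result


if __name__ == "__main__":
    for row in newval_by_join(SAMPLE_ROWS, "%criteria%"):
        print(row)
```

In this sample, brand A has two distinct members in 2016-01, so its January amounts are divided by 2 even though one of that month's rows does not match the filter. Only step 2 needs to be repeated for each new description pattern.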

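count(distinct ...) cannot be used as a window function here, so use a two-phase solution instead:

- number the rows within each (month, brand, unique_mem_id) group, so every duplicated unique_mem_id gets row numbers 1, 2, ...

- count only the rows with row_number = 1, which gives one count per distinct unique_mem_id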

select *
into temp.table
from (SELECT transaction_date, brand, Description, Amount,
             (Amount / count(case rn when 1 then unique_mem_id end) over (partition by to_char(transaction_date, 'YYYY-MM'), brand)) as newval
      FROM (SELECT transaction_date, brand, Description, Amount, unique_mem_id,
                   row_number() over (partition by to_char(transaction_date, 'YYYY-MM'), brand, unique_mem_id) as rn
            FROM source.table
           ) as numbered
     ) as scored
WHERE Description ilike '%Criteria%'
;
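To see that counting only the rows with rn = 1 behaves like a distinct count per partition, the query can be run on a few rows. This is a sketch under assumptions, not the exact PostgreSQL statement: it uses Python's built-in sqlite3 module (window functions require SQLite 3.25 or later), strftime('%Y-%m', ...) in place of to_char, and like in place of ilike. The table name transactions, the function newval_by_row_number and the sample rows are hypothetical.

```python
import sqlite3

# Hypothetical sample data: (transaction_date, brand, description, amount, unique_mem_id)
SAMPLE_ROWS = [
    ("2016-01-05", "A", "Criteria one", 100.0, 1),
    ("2016-01-09", "A", "other", 50.0, 1),
    ("2016-01-20", "A", "criteria two", 60.0, 2),
    ("2016-01-11", "B", "Criteria three", 30.0, 3),
    ("2016-02-02", "A", "Criteria four", 80.0, 1),
]


def newval_by_row_number(rows, pattern):
    """Emulate count(distinct ...) over (...) with row_number() plus a filtered count."""
    con = sqlite3.connect(":memory:")
    con.execute(
        "create table transactions ("
        "transaction_date text, brand text, description text, "
        "amount real, unique_mem_id integer)"
    )
    con.executemany("insert into transactions values (?, ?, ?, ?, ?)", rows)

    result = con.execute(
        """
        select transaction_date, brand, description, newval
        from (select transaction_date, brand, description,
                     -- phase 2: count one row (rn = 1) per distinct member
                     amount / count(case rn when 1 then unique_mem_id end)
                              over (partition by strftime('%Y-%m', transaction_date),
                                                 brand) as newval
              from (select transaction_date, brand, description, amount,
                           unique_mem_id,
                           -- phase 1: number the rows of each member per month and brand
                           row_number() over (partition by strftime('%Y-%m', transaction_date),
                                                           brand, unique_mem_id) as rn
                    from transactions
                   ) as numbered
             ) as scored
        where description like ?
        order by transaction_date, brand
        """,
        (pattern,),
    ).fetchall()
    con.close()
    return result


if __name__ == "__main__":
    for row in newval_by_row_number(SAMPLE_ROWS, "%criteria%"):
        print(row)
```

The description filter sits in the outermost query on purpose: the window count is computed over all rows of the month and brand first, and only afterwards are the non-matching rows dropped.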

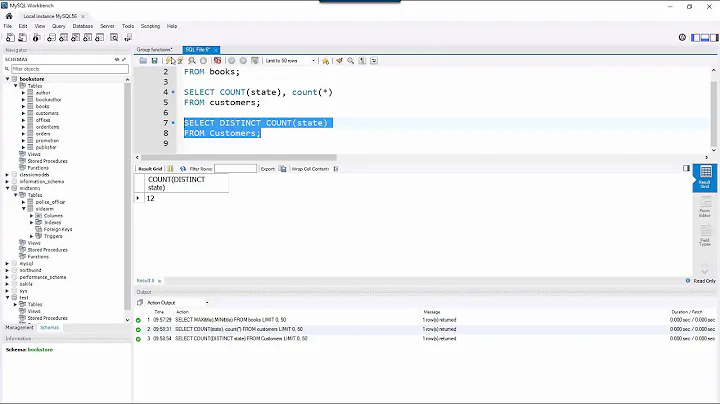

Related videos on Youtube

Author: ZJAY

Updated on November 02, 2022

Comments

ZJAY over 1 year

I am trying to change the count DISTINCT portion of the query below, because the current query returns the error "WINDOW definition is not supported". What is the most seamless way to do this calculation using PostgreSQL? Thank you.

SELECT transaction_date, brand, Description, Amount, newval
into temp.table
FROM (SELECT transaction_date, brand, Description, Amount,
             (Amount / count(DISTINCT unique_mem_id) over (partition by to_char(transaction_date, 'YYYY-MM'), brand)) as newval
      FROM source.table
     )
WHERE DESCRIPTION iLIKE '%Criteria%';

Nikhil over 7 years

Can you give sample input and output?

David דודו Markovitz over 7 years

Hi @ZJAY, please take a look at the solution.

ZJAY over 7 years

The solution returned: ERROR: column "unique_mem_id" does not exist in derived_table1

David דודו Markovitz over 7 years

If unique_mem_id cannot be null, then there is no need to select it in the inner query, and it should be replaced in the count with any constant, e.g. 1.

ZJAY over 7 years

Thank you Dudu, very helpful. One final question: I will run this query 80-100 times a day, each time with a different WHERE DESCRIPTION iLIKE '%_%' (the final part of the query). The query runs across hundreds of millions of rows, so it is inefficient to recompute the part that does not change (the count distinct is the same across all queries). Is there a way to run the count distinct portion once, perhaps into a separate table, while preserving the results I am seeking? The key is to match each relevant row to the correct YYYY-MM without running the partition in each query. Thanks!

David דודו Markovitz over 7 years

I'll be available to answer tomorrow.

David דודו Markovitz over 7 years

@ZJAY, P.S. do you have something in the source table that is guaranteed to be unique?

David דודו Markovitz over 7 years

@ZJAY, just verifying: a column called unique_mem_id is not unique?

David דודו Markovitz over 7 years

@ZJAY, you could and should separate the code into 2 queries (I'll edit my answer), but in any case, why are you running the code 100 times? Why not retrieve all requested descriptions in a single run?

David דודו Markovitz over 7 years

@ZJAY, P.S. since this is a correct answer to your question, please mark it as such. Thanks.