Create DataFrame from case class


Solution 1

To be able to use the implicit conversion to a DataFrame, you have to import `spark.implicits._`, like this:

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession
  .builder
  .appName("test")
  .getOrCreate()

import spark.implicits._
```

This way the conversion should work.

If you are using the Spark shell, this is not needed, because the Spark session is already created (as `spark`) and its implicit conversions are already imported.
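A minimal standalone sketch of this fix, assuming the application is launched from an IDE (hence the `local[*]` master) and using the hypothetical object name `CreateDataFrameExample`. It also moves the case class out of `main`: a case class local to a method generally cannot get the `TypeTag` that encoder derivation relies on, so keeping it at top level is the safer layout.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Defined at top level rather than inside main, so the compiler can supply the
// TypeTag that spark.implicits._ needs to derive an Encoder for it.
case class Employee(Name: String, Age: Int, Designation: String, Salary: Int, ZipCode: Int)

object CreateDataFrameExample {
  // Turns a local Seq of case class instances into a DataFrame.
  // The implicits belong to a specific SparkSession, so they are imported from it.
  def employees(spark: SparkSession): DataFrame = {
    import spark.implicits._
    Seq(Employee("Anto", 21, "Software Engineer", 2000, 56798)).toDF()
  }

  def main(args: Array[String]): Unit = {
    // master("local[*]") is an assumption for running from an IDE;
    // omit it when the master is passed to spark-submit instead.
    val spark = SparkSession
      .builder
      .appName("test")
      .master("local[*]")
      .getOrCreate()

    employees(spark).show()
    spark.stop()
  }
}
```

The helper takes the session as a parameter so the import happens wherever a `SparkSession` value is in scope; importing directly in `main` right after `getOrCreate()` works the same way.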

Solution 2

There is no issue with the piece of code you copied from the shared link; as the error shows, the problem lies elsewhere. Here is the result of running an exact copy of the code:

```scala
case class Employee(Name: String, Age: Int, Designation: String, Salary: Int, ZipCode: Int)

val EmployeesData = Seq(Employee("Anto", 21, "Software Engineer", 2000, 56798))

val Employee_DataFrame = EmployeesData.toDF

Employee_DataFrame.show()
```

```
Employee_DataFrame:org.apache.spark.sql.DataFrame = [Name: string, Age: integer ... 3 more fields]
+----+---+-----------------+------+-------+
|Name|Age|      Designation|Salary|ZipCode|
+----+---+-----------------+------+-------+
|Anto| 21|Software Engineer|  2000|  56798|
+----+---+-----------------+------+-------+
```
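For comparison, a sketch that builds the same DataFrame without the `toDF` implicit, using `SparkSession.createDataFrame`, which accepts a local `Seq` of case class instances and derives the schema from the case class. The object name `CreateDataFrameWithoutImplicits` and the `local[*]` master are assumptions for running it standalone.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Kept at top level so a TypeTag is available for schema derivation.
case class Employee(Name: String, Age: Int, Designation: String, Salary: Int, ZipCode: Int)

object CreateDataFrameWithoutImplicits {
  // No spark.implicits._ import is needed here: createDataFrame takes the
  // Seq directly and infers column names and types from the case class fields.
  def employees(spark: SparkSession): DataFrame =
    spark.createDataFrame(Seq(Employee("Anto", 21, "Software Engineer", 2000, 56798)))

  def main(args: Array[String]): Unit = {
    // local[*] is an assumption for running outside spark-submit.
    val spark = SparkSession.builder.appName("test").master("local[*]").getOrCreate()
    employees(spark).show()
    spark.stop()
  }
}
```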


Author: Jill Clover

Updated on June 04, 2022

Comments


Jill Clover almost 2 years

I have read other related questions, but I have not found the answer.

I want to create a

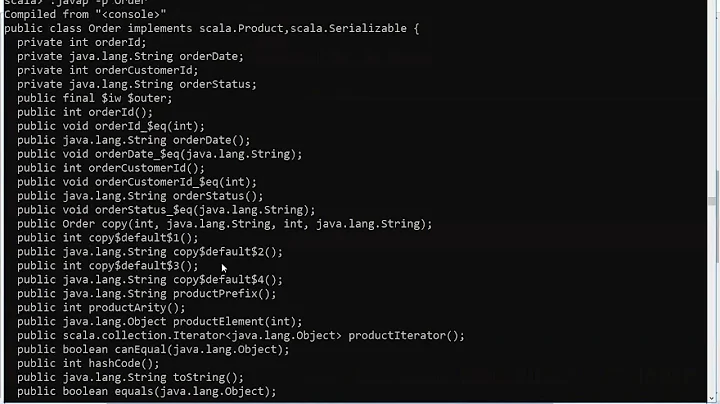

DataFramefrom a case class in Spark 2.3. Scala 2.11.8.Code

```scala
package org.XXX

import org.apache.spark.sql.SparkSession

object Test {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession
      .builder
      .appName("test")
      .getOrCreate()

    case class Employee(Name: String, Age: Int, Designation: String, Salary: Int, ZipCode: Int)

    val EmployeesData = Seq(Employee("Anto", 21, "Software Engineer", 2000, 56798))
    val Employee_DataFrame = EmployeesData.toDF

    spark.stop()
  }
}
```

Here is what I tried in spark-shell:

```scala
case class Employee(Name: String, Age: Int, Designation: String, Salary: Int, ZipCode: Int)
val EmployeesData = Seq(Employee("Anto", 21, "Software Engineer", 2000, 56798))
val Employee_DataFrame = EmployeesData.toDF
```

Error:

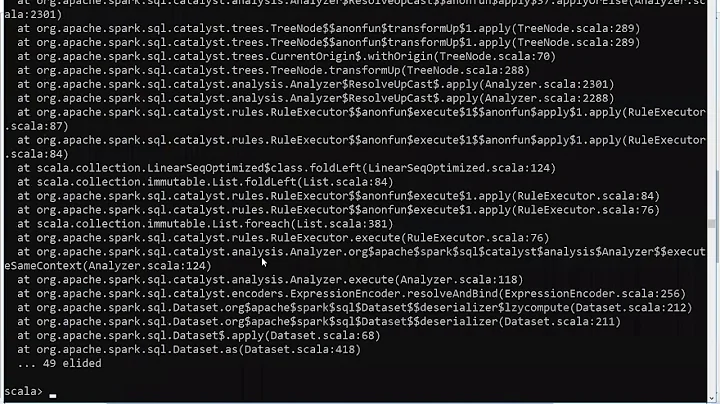

```
java.lang.VerifyError: class org.apache.spark.sql.hive.HiveExternalCatalog overrides final method alterDatabase.(Lorg/apache/spark/sql/catalyst/catalog/CatalogDatabase;)V
  at java.lang.ClassLoader.defineClass1(Native Method)
  at java.lang.ClassLoader.defineClass(ClassLoader.java:763)
  at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
  at java.net.URLClassLoader.defineClass(URLClassLoader.java:467)
  at java.net.URLClassLoader.access$100(URLClassLoader.java:73)
  at java.net.URLClassLoader$1.run(URLClassLoader.java:368)
  at java.net.URLClassLoader$1.run(URLClassLoader.java:362)
  at java.security.AccessController.doPrivileged(Native Method)
  at java.net.URLClassLoader.findClass(URLClassLoader.java:361)
  at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
  at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)
  at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
  at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog$lzycompute(HiveSessionStateBuilder.scala:53)
  at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:52)
  at org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1.<init>(HiveSessionStateBuilder.scala:69)
  at org.apache.spark.sql.hive.HiveSessionStateBuilder.analyzer(HiveSessionStateBuilder.scala:69)
  at org.apache.spark.sql.internal.BaseSessionStateBuilder$$anonfun$build$2.apply(BaseSessionStateBuilder.scala:293)
  at org.apache.spark.sql.internal.BaseSessionStateBuilder$$anonfun$build$2.apply(BaseSessionStateBuilder.scala:293)
  at org.apache.spark.sql.internal.SessionState.analyzer$lzycompute(SessionState.scala:79)
  at org.apache.spark.sql.internal.SessionState.analyzer(SessionState.scala:79)
  at org.apache.spark.sql.execution.QueryExecution.analyzed$lzycompute(QueryExecution.scala:57)
  at org.apache.spark.sql.execution.QueryExecution.analyzed(QueryExecution.scala:55)
  at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:47)
  at org.apache.spark.sql.Dataset.<init>(Dataset.scala:172)
  at org.apache.spark.sql.Dataset.<init>(Dataset.scala:178)
  at org.apache.spark.sql.Dataset$.apply(Dataset.scala:65)
  at org.apache.spark.sql.SparkSession.createDataset(SparkSession.scala:470)
  at org.apache.spark.sql.SQLContext.createDataset(SQLContext.scala:377)
  at org.apache.spark.sql.SQLImplicits.localSeqToDatasetHolder(SQLImplicits.scala:228)
```

gasparms almost 6 years

The code is OK. Where are you running it: spark-shell or an IDE? Could you add information about how you initialize the SparkSession, and which Scala version you have?
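A small diagnostic sketch for answering these questions from code. It prints the Spark and Scala versions on the classpath and the location each catalog class would be loaded from. It looks the `.class` files up as resources instead of loading them, so it still runs when loading `HiveExternalCatalog` itself fails. A `VerifyError` saying a class "overrides final method" means that class was compiled against a different version of its parent class than the one found at runtime, so differing Spark versions in the printed jar paths would point at the cause. The object name `VersionCheck` and the helper `locate` are hypothetical.

```scala
object VersionCheck {
  // Returns where a class file would be loaded from (usually a jar URL),
  // without loading or verifying the class itself.
  def locate(className: String): Option[String] = {
    val resource = className.replace('.', '/') + ".class"
    Option(getClass.getClassLoader.getResource(resource)).map(_.toString)
  }

  def main(args: Array[String]): Unit = {
    // SPARK_VERSION comes from spark-core; versionNumberString from scala-library.
    println(s"Spark version: ${org.apache.spark.SPARK_VERSION}")
    println(s"Scala version: ${scala.util.Properties.versionNumberString}")

    // The parent catalog class (spark-catalyst) and the Hive subclass (spark-hive).
    Seq(
      "org.apache.spark.sql.catalyst.catalog.ExternalCatalog",
      "org.apache.spark.sql.hive.HiveExternalCatalog"
    ).foreach { name =>
      println(s"$name -> ${locate(name).getOrElse("not found on classpath")}")
    }
  }
}
```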


Midiparse almost 6 years

Yes, the error you are getting seems unrelated to me.



Mátyás Kuti-Kreszács over 4 years

However, the error suggests that you mixed up some dependencies. Check the versions of your dependencies and whether they are compatible with each other.
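In an sbt project, one way to rule out such a mismatch is to pin every Spark module to a single version value. A minimal `build.sbt` sketch, assuming Scala 2.11.8 and Spark 2.3.0 (the question only says "Spark 2.3") and assuming the cluster supplies Spark at runtime, hence the `provided` scope. Adjust both versions to match your installation.

```scala
// build.sbt
// Assumed versions: Scala 2.11.8 (from the question) and Spark 2.3.0.
scalaVersion := "2.11.8"

// A single value for every Spark module, so spark-core, spark-sql (which
// pulls in spark-catalyst) and spark-hive cannot drift apart.
val sparkVersion = "2.3.0"

libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-core" % sparkVersion % "provided",
  "org.apache.spark" %% "spark-sql"  % sparkVersion % "provided",
  "org.apache.spark" %% "spark-hive" % sparkVersion % "provided"
)
```

With `provided`, Spark is not bundled into the application jar, so the versions above should match what `spark-submit` or `spark-shell` actually loads on the cluster.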