CUDA atomicAdd for doubles definition error

That flavor of atomicAdd is a new method introduced for compute capability 6.0. You may keep your previous implementation of other compute capabilities guarding it using macro definition

#if !defined(__CUDA_ARCH__) || __CUDA_ARCH__ >= 600

#else

<... place here your own pre-pascal atomicAdd definition ...>

#endif

This macro named architecture identification macro is documented here:

5.7.4. Virtual Architecture Identification Macro

The architecture identification macro

__CUDA_ARCH__is assigned a three-digit value string xy0 (ending in a literal 0) during each nvcc compilation stage 1 that compiles for compute_xy.This macro can be used in the implementation of GPU functions for determining the virtual architecture for which it is currently being compiled. The host code (the non-GPU code) must not depend on it.

I assume NVIDIA did not place it for previous CC to avoid conflict for users defining it and not moving to Compute Capability >= 6.x. I would not consider it a BUG though, rather a release delivery practice.

EDIT: macro guard was incomplete (fixed) - here a complete example.

#if !defined(__CUDA_ARCH__) || __CUDA_ARCH__ >= 600

#else

__device__ double atomicAdd(double* a, double b) { return b; }

#endif

__device__ double s_global ;

__global__ void kernel () { atomicAdd (&s_global, 1.0) ; }

int main (int argc, char* argv[])

{

kernel<<<1,1>>> () ;

return ::cudaDeviceSynchronize () ;

}

Compilation with:

$> nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2016 NVIDIA Corporation

Built on Wed_May__4_21:01:56_CDT_2016

Cuda compilation tools, release 8.0, V8.0.26

Command lines (both successful):

$> nvcc main.cu -arch=sm_60

$> nvcc main.cu -arch=sm_35

You may find why it works with the include file: sm_60_atomic_functions.h, where the method is not declared if __CUDA_ARCH__ is lower than 600.

Related videos on Youtube

kalj

Currently developing financial software with FIS. Previously did a PhD in Scientific Computing with specialization in High-Performance Computing.

Updated on September 16, 2022Comments

-

kalj over 1 year

kalj over 1 yearIn previous versions of CUDA, atomicAdd was not implemented for doubles, so it is common to implement this like here. With the new CUDA 8 RC, I run into troubles when I try to compile my code which includes such a function. I guess this is due to the fact that with Pascal and Compute Capability 6.0, a native double version of atomicAdd has been added, but somehow that is not properly ignored for previous Compute Capabilities.

The code below used to compile and run fine with previous CUDA versions, but now I get this compilation error:

test.cu(3): error: function "atomicAdd(double *, double)" has already been definedBut if I remove my implementation, I instead get this error:

test.cu(33): error: no instance of overloaded function "atomicAdd" matches the argument list argument types are: (double *, double)I should add that I only see this if I compile with

-arch=sm_35or similar. If I compile with-arch=sm_60I get the expected behavior, i.e. only the first error, and successful compilation in the second case.Edit: Also, it is specific for

atomicAdd-- if I change the name, it works well.It really looks like a compiler bug. Can someone else confirm that this is the case?

Example code:

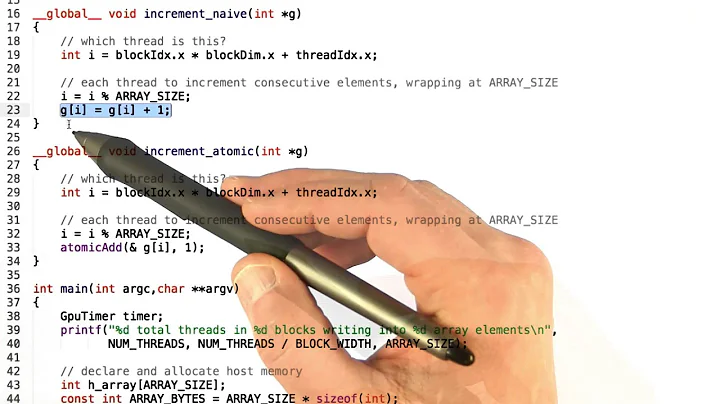

__device__ double atomicAdd(double* address, double val) { unsigned long long int* address_as_ull = (unsigned long long int*)address; unsigned long long int old = *address_as_ull, assumed; do { assumed = old; old = atomicCAS(address_as_ull, assumed, __double_as_longlong(val + __longlong_as_double(assumed))); } while (assumed != old); return __longlong_as_double(old); } __global__ void kernel(double *a) { double b=1.3; atomicAdd(a,b); } int main(int argc, char **argv) { double *a; cudaMalloc(&a,sizeof(double)); kernel<<<1,1>>>(a); cudaFree(a); return 0; }

Edit: I got an answer from Nvidia who recognize this problem, and here is what the developers say about it:

The sm_60 architecture, that is newly supported in CUDA 8.0, has native fp64 atomicAdd function. Because of the limitations of our toolchain and CUDA language, the declaration of this function needs to be present even when the code is not being specifically compiled for sm_60. This causes a problem in your code because you also define a fp64 atomicAdd function.

CUDA builtin functions such as atomicAdd are implementation-defined and can be changed between CUDA releases. Users should not define functions with the same names as any CUDA builtin functions. We would suggest you to rename your atomicAdd function to one that is not the same as any CUDA builtin functions.

-

kangshiyin almost 8 yearsLooks like a bug in CUDA 8 RC to me. It seems the native double atomicAdd() works only with sm_60 but also can be seen with sm_35. Maybe you could solve this by renaming your own version.

-

kalj almost 8 years@Eric Yes, renaming resolves it. Post edited to include this.

kalj almost 8 years@Eric Yes, renaming resolves it. Post edited to include this.

-

-

kalj almost 8 yearsI may not keep it using the same name, as that results in the first of the errors above, "function ... has already been defined". How is it not a bug to give a completely unnecessary error, with a very confusing message?

kalj almost 8 yearsI may not keep it using the same name, as that results in the first of the errors above, "function ... has already been defined". How is it not a bug to give a completely unnecessary error, with a very confusing message? -

Florent DUGUET almost 8 years@kalj, you may keep the same name, but guarded by

__CUDA_ARCH__. If your declaration is guarded by this macro you should not have the errors listed above. Moreover, this will leave your code with some consistence and clarity. Truely, whether it is a bug or an API support choice is more opinion than technical statement. Choose whichever, but NVIDIA will get the last word on it. -

kalj almost 8 yearsPerhaps I am not understanding what you mean? If I add

kalj almost 8 yearsPerhaps I am not understanding what you mean? If I add#if ( __CUDA_ARCH__ < 600 )and#endifaround the whole function definition in my snippet above, and compile with -arch=sm_35, I still get exactly the same error. And why should it change -- the if case evaluates to true and I get identical code to the first case? -

Florent DUGUET almost 8 years@kalj, my macro test was indeed incomplete (that is fixed). I provided a complete example wich compiles using CUDA 8.0 RC on linux Ubuntu 16.04.

-

kalj almost 8 yearsOkay, now this makes sense, and solves the problem. However, I still feel that this is not av very nice behavior from the compiler. The developers do recognize this if not as a bug, but still as an issue. See my edit of the question.

kalj almost 8 yearsOkay, now this makes sense, and solves the problem. However, I still feel that this is not av very nice behavior from the compiler. The developers do recognize this if not as a bug, but still as an issue. See my edit of the question. -

Kriegalex almost 7 yearsHow do you prevent custom pre-pascal atomicAdd(double, double) to hide the atomicAdd(float, float) CUDA function (device_atomic_function.hpp) on systems >=CC2.X && < CC6.X ?

-

Florent DUGUET almost 7 years@Kriegalex, I would assume the C++ compiler to mitigate between float and double types.

-

Kriegalex almost 7 years@FlorentDUGUET it was a thing of namespaces, atomicAdd(double, double) should be outside the namespace, otherwise you have to provide yourself the float version

-

AdrianO almost 7 yearsI'm confused about the if pragma statement -- if I want to overwrite

AdrianO almost 7 yearsI'm confused about the if pragma statement -- if I want to overwriteatomicAdd(double*, double)only pre sm_60 and for CUDA <8.0, shouldn't this read#if defined(__CUDA_ARCH__) && __CUDA_ARCH__ >= 600with the same followingif-structure as you said? -

Kolay.Ne almost 4 years@FlorentDUGUET, why have you edited your answer (replaced

Kolay.Ne almost 4 years@FlorentDUGUET, why have you edited your answer (replaced#if condition ... #endifwith#if !condition #else ... #endif)? The if-endif version didn't work for me (I guess, it was always true, regardless of architecture), and if-else-endif did, but I wonder if you could tell me why???