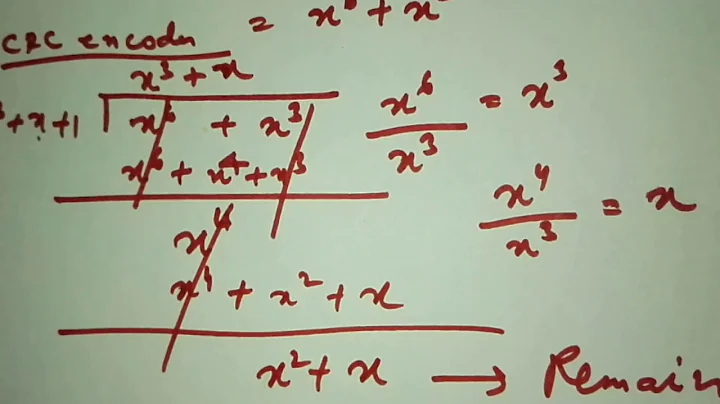

Data Length vs CRC Length

Solution 1

It's not a research topic. It's really well understood: http://en.wikipedia.org/wiki/Cyclic_redundancy_check

The math is pretty simple. An 8-bit CRC boils all messages down to one of 256 values. If your message is more than a few bytes long, the probability that multiple messages share the same hash value keeps going up.

A 16-bit CRC, similarly, gives you one of the 65,536 available hash values. What are the odds of any two messages having one of these values?

A 32-bit CRC gives you about 4 billion available hash values.
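To put numbers on the three widths above, here is a minimal sketch. The helper name `crc_value_count` is made up for illustration, and the "1 in N" figure assumes the two messages are unrelated, so their check values behave like independent uniform draws.

```python
def crc_value_count(width_bits: int) -> int:
    """Number of distinct check values an r-bit CRC can produce."""
    return 1 << width_bits


for width in (8, 16, 32):
    values = crc_value_count(width)
    # For two unrelated messages, the chance of identical check values
    # is roughly one in the number of available values.
    print(f"{width}-bit CRC: {values:,} possible values, "
          f"about 1 in {values:,} chance that two unrelated messages match")
```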

From the Wikipedia article: "maximal total blocklength is equal to 2**r − 1". That's in bits. You don't need to do much research to see that 2**9 - 1 is 511 bits. Using CRC-8, multiple messages longer than 64 bytes will have the same CRC checksum value.

Solution 2

The effectiveness of a CRC is dependent on multiple factors. You not only need to select the SIZE of the CRC but also the GENERATING POLYNOMIAL to use. There are complicated and non-intuitive trade-offs depending on:

- The expected bit error rate of the channel.

- Whether the errors tend to occur in bursts or tend to be spread out (burst is common)

- The length of the data to be protected - maximum length, minimum length and distribution.

The paper Cyclic Redundancy Code Polynomial Selection For Embedded Networks, by Philip Koopman and Tridib Chakravarty, published in the proceedings of the 2004 International Conference on Dependable Systems and Networks, gives a very good overview and makes several recommendations. It also provides a bibliography for further reading.

http://www.ece.cmu.edu/~koopman/roses/dsn04/koopman04_crc_poly_embedded.pdf

Solution 3

The choice of CRC length versus file size is mainly relevant in cases where one is more likely to have an input which differs from the "correct" input by three or fewer bits than to have one which is massively different. Given two inputs which are massively different, the probability of a false match will be about 1/256 with most forms of 8-bit check value (including CRC), 1/65536 with most forms of 16-bit check value (including CRC), etc. The advantage of CRC comes from its treatment of inputs which are very similar.
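The 1/256 figure for unrelated inputs can be checked exhaustively on a small case. The sketch below is an illustration, not something from the answer itself: it assumes a plain bitwise CRC-8 with generator 0x07 (x^8 + x^2 + x + 1), zero initial value and no final XOR. The helper name `crc8` and the reference message are arbitrary choices.

```python
from collections import Counter

POLY = 0x07  # assumed generator x^8 + x^2 + x + 1 (top bit implicit)


def crc8(data: bytes, poly: int = POLY) -> int:
    """Bitwise CRC-8, MSB first, zero initial value, no final XOR."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


# Count how many of the 65,536 possible two-byte messages map to each CRC value.
counts = Counter(crc8(value.to_bytes(2, "big")) for value in range(1 << 16))

# Fraction of all two-byte messages that share a check value with one
# arbitrary reference message (the reference itself is included in the count).
reference = b"\x12\x34"
false_match_fraction = counts[crc8(reference)] / (1 << 16)
print(false_match_fraction)
```

Every check value is hit equally often here, so a message picked at random matches the reference's CRC with the same probability whichever reference is used.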

With an 8-bit CRC whose polynomial generates two periods of length 128, the fraction of single, double, or triple bit errors in a packet shorter than that which go undetected won't be 1/256--it will be zero. Likewise with a 16-bit CRC of period 32768, using packets of 32768 bits or less.

If packets are longer than the CRC period, however, then a double-bit error will go undetected if the distance between the erroneous bits is a multiple of the CRC period. While that might not seem like a terribly likely scenario, a CRC8 will be somewhat worse at catching double-bit errors in long packets than at catching "packet is totally scrambled" errors. If double-bit errors are the second most common failure mode (after single-bit errors), that would be bad. If anything that corrupts some data is likely to corrupt a lot of it, however, the inferior behavior of CRCs with double-bit errors may be a non-issue.
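The double-bit case can be reproduced in a few lines. This is a sketch under stated assumptions: a bitwise CRC-8 with generator 0x07 (x^8 + x^2 + x + 1), zero initial value, no final XOR, and a 32-byte test message, none of which come from the answer. The helpers `crc8`, `crc_period` and `flip_bit` are hypothetical names. The period is computed rather than hard-coded.

```python
POLY = 0x07  # assumed generator x^8 + x^2 + x + 1 (top bit implicit)


def step(state: int, poly: int = POLY) -> int:
    """Multiply an 8-bit remainder by x modulo the generator."""
    if state & 0x80:
        return ((state << 1) ^ poly) & 0xFF
    return (state << 1) & 0xFF


def crc8(data: bytes, poly: int = POLY) -> int:
    """Bitwise CRC-8, MSB first, zero initial value, no final XOR."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = step(crc, poly)
    return crc


def crc_period(poly: int = POLY) -> int:
    """Smallest n > 0 such that x^n is congruent to 1 modulo the generator."""
    state = 1
    for n in range(1, 256):
        state = step(state, poly)
        if state == 1:
            return n
    raise ValueError("generator has no period (constant term must be 1)")


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with one bit flipped (bit 0 = first bit sent)."""
    out = bytearray(data)
    out[bit_index // 8] ^= 0x80 >> (bit_index % 8)
    return bytes(out)


message = bytes(range(32))  # 256 bits, longer than the CRC period
period = crc_period()

# Two flipped bits exactly one period apart: the CRC does not change.
corrupted = flip_bit(flip_bit(message, 3), 3 + period)
print(period, crc8(corrupted) == crc8(message))

# Two flipped bits one position closer together: the CRC does change.
nearby = flip_bit(flip_bit(message, 3), 3 + period - 1)
print(crc8(nearby) != crc8(message))
```

Because the CRC is linear, the starting bit position and the message contents do not matter. Only the distance between the two flipped bits decides whether the error is caught.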

Solution 4

I think the size of the CRC has more to do with how unique a CRC you need than with the size of the input data. This depends on the particular usage and on the number of items on which you're calculating a CRC.
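When the CRC serves as a digest across many items, the number of items matters because of the birthday effect. Here is a rough sketch using the standard birthday approximation. It assumes check values are spread uniformly, and the function name `collision_probability` is made up for illustration.

```python
import math


def collision_probability(num_items: int, crc_bits: int) -> float:
    """Approximate chance that at least two of num_items share a CRC value.

    Uses the birthday approximation 1 - exp(-n(n-1) / (2N)) with N = 2**crc_bits.
    """
    space = 2 ** crc_bits
    return -math.expm1(-num_items * (num_items - 1) / (2 * space))


for bits, items in ((16, 300), (32, 77_163), (32, 1_000_000)):
    p = collision_probability(items, bits)
    print(f"{items:,} items with a {bits}-bit CRC: collision chance about {p:.3f}")
```

Under that approximation, a few hundred items are enough to make a collision likely with a 16-bit check value, and tens of thousands are enough with a 32-bit one.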

Solution 5

The CRC should be chosen specifically for the length of the messages; it is not just a question of the size of the CRC: http://www.ece.cmu.edu/~koopman/roses/dsn04/koopman04_crc_poly_embedded.pdf

Robert Deml

Updated on April 17, 2022

Comments

-

Robert Deml about 2 years

I've seen 8-bit, 16-bit, and 32-bit CRCs.

At what point do I need to jump to a wider CRC?

My gut reaction is that it is based on the data length:

- 1-100 bytes: 8-bit CRC

- 101 - 1000 bytes: 16-bit CRC

- 1001 - ??? bytes: 32-bit CRC

EDIT: Looking at the Wikipedia page about CRC and Lott's answer, here's what we have:

- <64 bytes: 8-bit CRC

- <16K bytes: 16-bit CRC

- <512M bytes: 32-bit CRC

-

Steven Sudit over 14 years

This is accurate and helpful if the CRC is being used to detect changes to a file. However, if it's being used as a digest to detect duplicates among files, then it's more complicated. Specifically, the birthday paradox requires us to factor in how many distinct values we expect to have.

-

user1066101 over 14 years

@Steven Sudit: Correct. Sadly the question is too vague to determine anything about the use of the CRC.

-

ysap about 8 years

I think that any message longer than the CRC width (r-1, and not 2^r-1) will have multiple messages mapped to the same checksum. IOW, any message more than a byte long will have overlapping CRC8 mappings. I think (one of) the challenge(s) is to design the mapping such that the distribution of message strings over the hashes is uniform.

-

Arash almost 4 years

By doing a CRC-32 forwards and backwards, do you mean doing the CRC two times on a file?

-

Arash over 3 years

If we have bigger CRCs, we can use bigger packets with similar HDs. This is the reason, right?

-

starblue over 3 years

It's not that simple; read the answer by Mary Ann Mojica.

-

Ted Shaneyfelt over 2 years

Yes, @Arash, it seems he means a file. An advantage of CRC32 or MD5 is that they can be calculated as the data passes. Reversing the data means you have to store it all in a buffer until you go back through the bits in reverse order. MD5 is more calculation intensive. It is designed more for signing a message than for checking for errors, because with a CRC it is easier to contrive a set of data that will match a particular check value.

-

Ted Shaneyfelt over 2 years

This paper has the best correct answer in it.

-

Mark Adler about 2 years

It is possible to calculate such a "reverse CRC" in the forward direction. You don't need to buffer.
