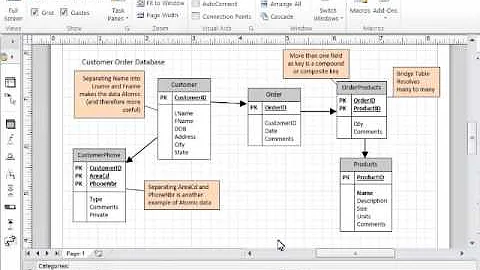

Database design: one huge table or separate tables?

Solution 1

Start out with one large table, and then apply 2008's table partitioning capabilities where appropriate, if performance becomes an issue.

Solution 2

Datawarehouses are supposed to be big (the clue is in the name). Twenty million rows is about medium by warehousing standards, although six hundred million can be considered large.

The thing to bear in mind is that such large tables have a different physics, like black holes. So tuning them takes a different set of techniques. The other thing is, users of a datawarehouse must understand that they are dealing with huge amounts of data, and so they must not expect sub-second response (or indeed sub-minute) for every query.

Partitioning can be useful, especially if you have clear demarcations such as, as in your case, CUSTOMER. You have to be aware that partitioning can degrade the performance of queries which cut across the grain of the partitioning key. So it is not a silver bullet.

Solution 3

Splitting tables for performance reasons is called sharding. Also, a database schema can be more or less normalized. A normalized schema has separate tables with relations between them, and data is not duplicated.

Solution 4

I am assuming you have your database properly normalized. It shouldn't be a problem to deal with the data volume you refer to on a single table in SQL Server; what I think you need to do is review your indexes.

Solution 5

Since you've tagged your question as 'datawarehouse' as well I assume you know some things about the subject. Depending on your goals you could go for a star-schema (a multidemensional model with a fact and dimensiontables). Store all fastchanging data in 1 table (per subject) and the slowchaning data in another dimension/'snowflake' tables.

An other option is the DataVault method by Dan Lindstedt. Which is a bit more complex but provides you with full flexibility.

Related videos on Youtube

littlegreen

Updated on July 09, 2022Comments

-

littlegreen almost 2 years

Currently I am designing a database for use in our company. We are using SQL Server 2008. The database will hold data gathered from several customers. The goal of the database is to acquire aggregate benchmark numbers over several customers.

Recently, I have become worried with the fact that one table in particular will be getting very big. Each customer has approximately 20.000.000 rows of data, and there will soon be 30 customers in the database (if not more). A lot of queries will be done on this table. I am already noticing performance issues and users being temporarily locked out.

My question, will we be able to handle this table in the future, or is it better to split this table up into smaller tables for each customer?

Update: It has now been about half a year since we first created the tables. Following the advices below, I created a handful of huge tables. Since then, I have been experimenting with indexes and decided on a clustered index on the first two columns (Hospital code and Department code) on which we would have partitioned the table had we had Enterprise Edition. This setup worked fine until recently, as Galwegian predicted, performance issues are springing up. Rebuilding an index takes ages, users lock each other out, queries frequently take longer than they should, and for most queries it pays off to first copy the relevant part of the data into a temp table, create indices on the temp table and run the query. This is not how it should be. Therefore, we are considering to buy Enterprise Edition for use of partitioned tables. If the purchase cannot go through I plan to use a workaround to accomplish partitioning in Standard Edition.

-

Neil Knight about 14 yearsFor your locks, are you specifying the NOLOCK query hint on your SELECT statements?

-

littlegreen about 14 yearsNot yet, but now I will. Thanks.

-

littlegreen about 14 yearsOn second thought, I probably won't, considering some info that I found on the subject, and the discussion below.

-

Channa about 4 yearstable partitioning will help. Also, rebuilding indexes won't take much time because now indexes will be specific to each partition now

Channa about 4 yearstable partitioning will help. Also, rebuilding indexes won't take much time because now indexes will be specific to each partition now

-

-

littlegreen about 14 yearsI have my data normalized, however the table I am referring to is completely denormalized, since it will be queried a lot and will not often change.

-

Otávio Décio about 14 yearsIf you are not updating the table then I wonder why you are having users being locked out.

-

Martin Smith about 14 yearsDirty Reads Yes - It won't affect Phantoms though as these occur under the default isolation level as well.

Martin Smith about 14 yearsDirty Reads Yes - It won't affect Phantoms though as these occur under the default isolation level as well. -

littlegreen about 14 yearsProbably because we are still in a design phase where we are bulk loading data into the database quite often. But I get your point, the locking problem will disappear in a production situation. Thanks!

-

littlegreen about 14 yearshehe.. i wish i knew even more about datawarehousing. you aren't by any chance looking for a job, are you :)

-

littlegreen about 14 yearsIf i have to give points to someone... this answer is concise, and the table partitioning hint led me to a lot of specific SQL server 2008 info that i can use. So thanks Galwegian, and everyone at that!

-

littlegreen about 14 yearsI am not using a framework, I am using indexes, and we do have a kickass server. However, it is true that I am a newbie at the subject, and we are looking for a professional DBA to add to the team. I am also not yet using Profiler, so thanks for that tip.

-

Jé Queue over 13 yearsIs my nomenclature off? I call splitting tables partitioning. I call sharding the physical or seperation of data sets for particular purposes, no?

Jé Queue over 13 yearsIs my nomenclature off? I call splitting tables partitioning. I call sharding the physical or seperation of data sets for particular purposes, no? -

StockB over 10 yearsWhat do you mean by black holes?

StockB over 10 yearsWhat do you mean by black holes? -

Joe C. about 10 years@StockB: What he means is that large databases are an entirely different sort of thing than normal databases, much like black holes (in astrophysics) are an entirely different sort of thing than normal objects. They're so different that the "usual" rules we're used to when dealing with them simply don't apply. They have their own set of rules and assumptions you have to work with.