Detect and exclude outliers in a pandas DataFrame

Solution 1

If you have multiple columns in your dataframe and would like to remove all rows that have outliers in at least one column, the following expression would do that in one shot.

df = pd.DataFrame(np.random.randn(100, 3))

import numpy as np

from scipy import stats

df[(np.abs(stats.zscore(df)) < 3).all(axis=1)]

description:

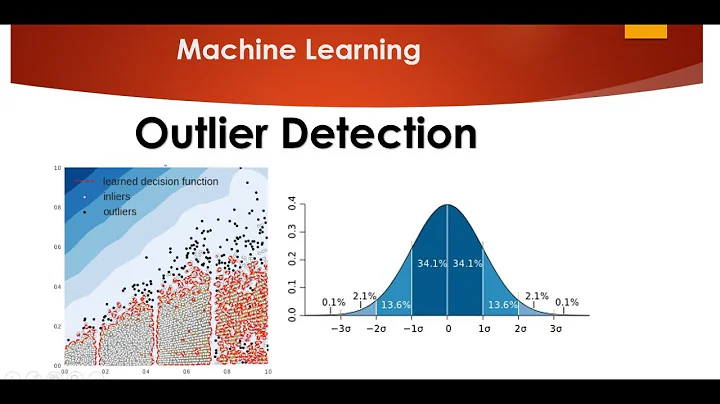

- For each column, it first computes the Z-score of each value in the column, relative to the column mean and standard deviation.

- It then takes the absolute Z-score because the direction does not matter, only if it is below the threshold.

- all(axis=1) ensures that for each row, all column satisfy the constraint.

- Finally, the result of this condition is used to index the dataframe.

Filter other columns based on a single column

- Specify a column for the

zscore,df[0]for example, and remove.all(axis=1).

df[(np.abs(stats.zscore(df[0])) < 3)]

Solution 2

For each of your dataframe column, you could get quantile with:

q = df["col"].quantile(0.99)

and then filter with:

df[df["col"] < q]

If one need to remove lower and upper outliers, combine condition with an AND statement:

q_low = df["col"].quantile(0.01)

q_hi = df["col"].quantile(0.99)

df_filtered = df[(df["col"] < q_hi) & (df["col"] > q_low)]

Solution 3

Use boolean indexing as you would do in numpy.array

df = pd.DataFrame({'Data':np.random.normal(size=200)})

# example dataset of normally distributed data.

df[np.abs(df.Data-df.Data.mean()) <= (3*df.Data.std())]

# keep only the ones that are within +3 to -3 standard deviations in the column 'Data'.

df[~(np.abs(df.Data-df.Data.mean()) > (3*df.Data.std()))]

# or if you prefer the other way around

For a series it is similar:

S = pd.Series(np.random.normal(size=200))

S[~((S-S.mean()).abs() > 3*S.std())]

Solution 4

This answer is similar to that provided by @tanemaki, but uses a lambda expression instead of scipy stats.

df = pd.DataFrame(np.random.randn(100, 3), columns=list('ABC'))

standard_deviations = 3

df[df.apply(lambda x: np.abs(x - x.mean()) / x.std() < standard_deviations)

.all(axis=1)]

To filter the DataFrame where only ONE column (e.g. 'B') is within three standard deviations:

df[((df['B'] - df['B'].mean()) / df['B'].std()).abs() < standard_deviations]

See here for how to apply this z-score on a rolling basis: Rolling Z-score applied to pandas dataframe

Solution 5

#------------------------------------------------------------------------------

# accept a dataframe, remove outliers, return cleaned data in a new dataframe

# see http://www.itl.nist.gov/div898/handbook/prc/section1/prc16.htm

#------------------------------------------------------------------------------

def remove_outlier(df_in, col_name):

q1 = df_in[col_name].quantile(0.25)

q3 = df_in[col_name].quantile(0.75)

iqr = q3-q1 #Interquartile range

fence_low = q1-1.5*iqr

fence_high = q3+1.5*iqr

df_out = df_in.loc[(df_in[col_name] > fence_low) & (df_in[col_name] < fence_high)]

return df_out

Related videos on Youtube

Comments

-

user1121201 almost 2 years

I have a pandas data frame with few columns.

Now I know that certain rows are outliers based on a certain column value.

For instance

column 'Vol' has all values around

12xxand one value is4000(outlier).Now I would like to exclude those rows that have

Volcolumn like this.So, essentially I need to put a filter on the data frame such that we select all rows where the values of a certain column are within, say, 3 standard deviations from mean.

What is an elegant way to achieve this?

-

chandni mirchandani about 2 yearsdid you got the solution ?

chandni mirchandani about 2 yearsdid you got the solution ?

-

-

Jeff about 10 yearstheir is a

DataFrame.abs()FYI, alsoDataFrame.clip() -

CT Zhu about 10 yearsIn the case of

clip(), Jeff, the outlines are not removed:df.SOME_DATA.clip(-3std,+3std)assign the outliners to either +3std or -3std -

user1121201 about 10 yearsWhat if i need hte same on a pd.Series?

-

CT Zhu about 10 yearsThat is almost the same, @AMM

-

samthebrand over 8 yearsCan you explain what this code is doing? And perhaps provide an idea how I might remove all rows that have an outlier in a single specified column? Would be helpful. Thanks.

samthebrand over 8 yearsCan you explain what this code is doing? And perhaps provide an idea how I might remove all rows that have an outlier in a single specified column? Would be helpful. Thanks. -

rafaelvalle almost 8 yearsFor each column, first it computes the Z-score of each value in the column, relative to the column mean and standard deviation. Then is takes the absolute of Z-score because the direction does not matter, only if it is below the threshold. .all(axis=1) ensures that for each row, all column satisfy the constraint. Finally, result of this condition is used to index the dataframe.

rafaelvalle almost 8 yearsFor each column, first it computes the Z-score of each value in the column, relative to the column mean and standard deviation. Then is takes the absolute of Z-score because the direction does not matter, only if it is below the threshold. .all(axis=1) ensures that for each row, all column satisfy the constraint. Finally, result of this condition is used to index the dataframe. -

DreamerP about 6 yearsHow can we do the same thing if our pandas data frame has 100 columns?

-

user6903745 about 6 yearsThis article gives a very good overview of outlier removal techniques machinelearningmastery.com/…

-

Imran Ahmad Ghazali about 6 yearsI am getting error "ValueError: Cannot index with multidimensional key" in line " df_out = df_in.loc[(df_in[col_name] > fence_low) & (df_in[col_name] < fence_high)] " Will you help

-

JE_Muc almost 6 yearsAwesome, thanks for that answer @CTZhu. @DreamerP you can just apply it to the whole DataFrame with:

df_new = df[np.abs(df - df.mean()) <= (3 * df.std())]. But in contrast to applying it to a Series or single column, this will replace outliers withnp.nanand keep the shape of the DataFrame, so interpolation might be needed to fill the missing values. -

asimo over 5 yearsHow would you handle the situation when there are Nulls/Nans in the columns. How can we have them ignored ?

-

wordsforthewise over 5 years

wordsforthewise over 5 yearstrimbothwas easiest for me. -

RajeshM over 5 yearsCan't make assumptions about why the OP wants to do something.

-

BCArg about 5 yearsHere you are selecting only data within the interquartile range (IQR), but keep in mind that there can be values outside this range that are not outliers.

-

ssp about 5 yearshow do we deal with str columns for this solution? If some of the columns are non-numeric and we want to remove outliers based on all numeric columns.

-

Priyansh about 5 years@rafaelvalle What is the significance of 3 in the code above, can you explain that?

-

rafaelvalle about 5 yearsassuming distribution X with mean mu and standard deviation sigma, the z score measures how many sigmas a value is from mu. algebraically: z-score = (x - mu) / sigma. the 3 is the threshold in number of standard deviations away from the mean.

rafaelvalle about 5 yearsassuming distribution X with mean mu and standard deviation sigma, the z score measures how many sigmas a value is from mu. algebraically: z-score = (x - mu) / sigma. the 3 is the threshold in number of standard deviations away from the mean. -

KeyMaker00 almost 5 yearsSuccinct and elegant for all dataset's attributes. I like it. I have taken the liberty to extend your answer (see bellow) to handle a data-frame than might contain also non-numerical values. Hope it can help someone.

-

PascalVKooten almost 5 yearsChoosing e.g. 0.1 and 0.9 would be pretty safe I think. Using between and the quantiles like this is a pretty syntax.

PascalVKooten almost 5 yearsChoosing e.g. 0.1 and 0.9 would be pretty safe I think. Using between and the quantiles like this is a pretty syntax. -

sak almost 5 yearsGot error: "TypeError: unsupported operand type(s) for /: 'str' and 'int'"

sak almost 5 yearsGot error: "TypeError: unsupported operand type(s) for /: 'str' and 'int'" -

Erfan over 4 yearsThis should be

Erfan over 4 yearsThis should bele(3)since its removing outliers. This way you getTruefor the outliers. Besides that +1 and this answer should be higher up -

RK1 over 4 yearsGreat solution! As a heads up

reduce=Falsehas been deprecated sincepandasversion 0.23.0 -

Sam Vanhoutte over 4 years@sak : that is because you are running this on all columns and rows in your dataset. so, it expects these values to be numeric. typically you can execute the above by column name, or first apply (one-hot?) encoding to make all your values numeric, before executing this.

Sam Vanhoutte over 4 years@sak : that is because you are running this on all columns and rows in your dataset. so, it expects these values to be numeric. typically you can execute the above by column name, or first apply (one-hot?) encoding to make all your values numeric, before executing this. -

Sam Vanhoutte over 4 years@tanemaki - I also use this code for the outliers, but I am now looking to get the list of columns that contain values that fall outside of the 3-sigma range. so I can use this to scan a dataset and get a good indication of which columns contain outliers.

Sam Vanhoutte over 4 years@tanemaki - I also use this code for the outliers, but I am now looking to get the list of columns that contain values that fall outside of the 3-sigma range. so I can use this to scan a dataset and get a good indication of which columns contain outliers. -

indolentdeveloper over 4 yearsthis might remove outliers only from upper bound.. not lower?

-

user6903745 over 4 years@indolentdeveloper you are right, just invert the inequality to remove lower outliers, or combine them with an OR operator.

-

indolentdeveloper over 4 yearsThe idea of comment was to up update the answers ;). Since someone can miss this point.

-

Ekaba Bisong over 4 yearsSubstitute

Ekaba Bisong over 4 yearsSubstituteresult_type='reduce'forreduce=False. -

A.B over 4 years@user6903745 AND statement or "OR"?

A.B over 4 years@user6903745 AND statement or "OR"? -

JOHN over 4 years@rafaelvalle. Should we use all or any?

-

user6903745 over 4 years@A.B yes that's an AND statement, mistake in my previous comment

-

Admin about 4 years@user6903745 df_filtered = df[(df["col"] < q_hi) & (df["col"] > q_low)] I guess this statement is enough to remove both upper and lower outliers. I don't know why this isn't enough

Admin about 4 years@user6903745 df_filtered = df[(df["col"] < q_hi) & (df["col"] > q_low)] I guess this statement is enough to remove both upper and lower outliers. I don't know why this isn't enough -

user6903745 about 4 years@Ashwani Yes, the answer should have been already corrected accordinly.

-

bendl about 4 yearsThis fails in the event that an entire column has the same value - in these cases zscore returns NaN and therefore the < 3 check returns False for every row, so it drops every record.

-

bendl about 4 yearsThis fails in the event that an entire column has the same value - in these cases zscore returns NaN and therefore the < 3 check returns False for every row dropping every record.

-

enricw almost 4 yearsI get "AttributeError: 'DataFrame' object has no attribute 'Data' ". Anyone know how to tackle this?

-

seralouk over 3 yearsIt's better to explicitly state the axis:

seralouk over 3 yearsIt's better to explicitly state the axis:df[(np.abs(stats.zscore(df, axis=0)) < 3).all(axis=1)] -

user140259 about 3 years@tanemaki I made boxplot graphs before and after using that command (in jupyter notebook, it shows you q1, q3, upper fence, lower_fence). Checking my results after using that command, I have fewer records, but some of them would still be outliers because their values are higher than upper_fence or lower than lower_fence from my original boxplot. Do you know why that happens? Thank you.

-

taga almost 3 yearsCan you tell me what number 3 represents in df[(np.abs(stats.zscore(df)) < 3).all(axis=1)] ?

-

Keivan over 2 yearsThe number 3 represent the 3 standard deviation. You can find more information about it here: sixsigmastudyguide.com/z-scores-z-table-z-transformations

Keivan over 2 yearsThe number 3 represent the 3 standard deviation. You can find more information about it here: sixsigmastudyguide.com/z-scores-z-table-z-transformations -

Lorenzo Bassetti over 2 years@sak : if some numbers are wrongly read as strings, you can try this: DF["column"] = pd.to_numeric(DF["column"]) . It will transform strings to numbers, if they contains numbers of course.

Lorenzo Bassetti over 2 years@sak : if some numbers are wrongly read as strings, you can try this: DF["column"] = pd.to_numeric(DF["column"]) . It will transform strings to numbers, if they contains numbers of course. -

sak over 2 years@LorenzoBassetti Thanks Lorenzo, 2 yrs.

sak over 2 years@LorenzoBassetti Thanks Lorenzo, 2 yrs. -

flashliquid over 2 years@KeyMaker00 I'd really like to use this but I get the following error: ValueError: No axis named 1 for object type Series

-

kommradHomer over 2 yearsfor those who have 10k of data and just a dozen outliers , quantile doesn't help. I'd suggest z-score

-

user6903745 over 2 years@kommradHomer could you elaborate please?

-

kommradHomer over 2 years@user6903745 when you have a few thousands of 0s and 1s in a series of 10.000 values and like only 20-30 values above 1 , you need quantiles like 0.9999 to see something different

-

user6903745 over 2 years@kommradHomer I agree, it might depend on the shape of the data distribution

-

till Kadabra over 2 yearsTo avoid dropping rows with NaNs in non-numerical columns use df.dropna(how='any', subset=cols, inplace=True)

-

Aaditya Ura about 2 yearsHi, could you take a look at this question stackoverflow.com/questions/70954791/…

Aaditya Ura about 2 yearsHi, could you take a look at this question stackoverflow.com/questions/70954791/… -

nathan liang almost 2 years@enricw That means your dataframe has no column named

nathan liang almost 2 years@enricw That means your dataframe has no column named'Data', you'll need to select the column with the right name for you. -

user1259201 almost 2 yearsI believe

np.logical_orshould benp.logical_andto work properly (option 2)