Direct memory access (DMA) - how does it work?

Solution 1

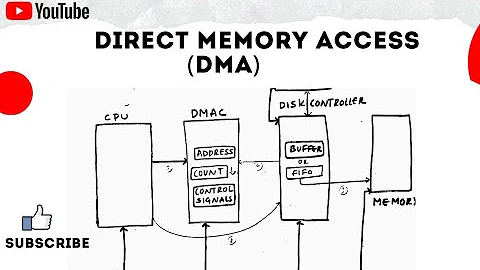

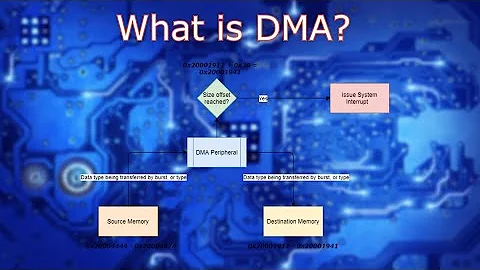

First of all, DMA (per se) is almost entirely obsolete. As originally defined, DMA controllers depended on the fact that the bus had separate lines to assert for memory read/write, and I/O read/write. The DMA controller took advantage of that by asserting both a memory read and I/O write (or vice versa) at the same time. The DMA controller then generated successive addresses on the bus, and data was read from memory and written to an output port (or vice versa) each bus cycle.
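The classic scheme described above can be sketched as a toy simulation. This is only an illustration under stated assumptions: `OutputPort`, `DmaController`, `program` and `bus_cycle` are hypothetical names, and real hardware does this with bus signals rather than method calls.

```python
# Toy model of a classic (8237-style) DMA transfer, memory -> I/O port.
# All class and method names are hypothetical, chosen for illustration.

class OutputPort:
    """Stands in for an I/O device that latches one byte per bus cycle."""
    def __init__(self):
        self.received = []

    def io_write(self, value):
        self.received.append(value)


class DmaController:
    def __init__(self, memory, port):
        self.memory = memory
        self.port = port
        self.address = 0   # current address register
        self.count = 0     # bytes remaining

    def program(self, start_address, length):
        """The CPU sets up the transfer once, before it starts."""
        self.address = start_address
        self.count = length

    def bus_cycle(self):
        """One cycle: memory read and I/O write are asserted together,
        so the byte goes from memory to the port without passing
        through the CPU."""
        if self.count == 0:
            return False            # transfer complete
        self.port.io_write(self.memory[self.address])
        self.address += 1           # generate the next address
        self.count -= 1
        return True


memory = bytearray(b"hello, floppy drive!")
port = OutputPort()
dma = DmaController(memory, port)
dma.program(start_address=7, length=6)

cycles = 0
while dma.bus_cycle():
    cycles += 1

transferred = bytes(port.received)
print(cycles, transferred)
```

The CPU's only involvement in this model is the single `program` call; every byte after that moves in one simulated bus cycle.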

The PCI bus, however, does not have separate lines for memory read/write and I/O read/write. Instead, it encodes one (and only one) command for any given transaction. Rather than using DMA, PCI normally does bus-mastering transfers: instead of a DMA controller that transfers data between the I/O device and memory, the I/O device itself transfers data directly to or from memory.
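For contrast, here is a minimal sketch of the bus-mastering arrangement, in which the device writes into memory itself. The `BusMasterNic` class and its fields are hypothetical, and the sketch ignores bus arbitration entirely.

```python
# Toy model of a bus-mastering device: there is no separate DMA controller.
# BusMasterNic and its attribute names are hypothetical.

class BusMasterNic:
    """A pretend network card that writes received data into RAM itself."""
    def __init__(self, memory):
        self.memory = memory
        self.rx_buffer_address = None
        self.rx_buffer_size = 0
        self.interrupt_pending = False

    def set_rx_buffer(self, address, size):
        """The driver tells the device where in memory it may write."""
        self.rx_buffer_address = address
        self.rx_buffer_size = size

    def receive(self, packet):
        """The device acts as bus master and issues the memory writes."""
        if len(packet) > self.rx_buffer_size:
            raise ValueError("packet does not fit in the receive buffer")
        start = self.rx_buffer_address
        self.memory[start:start + len(packet)] = packet
        self.interrupt_pending = True   # tell the CPU the data is ready


ram = bytearray(32)
nic = BusMasterNic(ram)
nic.set_rx_buffer(address=8, size=16)
nic.receive(b"ping")

payload = bytes(ram[8:12])
print(payload, nic.interrupt_pending)
```

The difference from the controller-based model is where the address generation lives: here it is inside the device, not in a shared third party.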

As for what else the CPU can do at the time, it all depends. Back when DMA was common, the answer was usually "not much" -- for example, under early versions of Windows, reading or writing a floppy disk (which did use the DMA controller) pretty much locked up the system for the duration.

Nowadays, however, the memory typically has considerably greater bandwidth than the I/O bus, so even while a peripheral is reading or writing memory, there's usually a fair amount of bandwidth left over for the CPU to use. In addition, a modern CPU typically has a fairly large cache, so it can often execute some instructions without using main memory at all.
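To make the bandwidth argument concrete, here is a back-of-the-envelope sketch. The function name and the numbers are assumptions picked only for illustration; they are not measurements of any real system, and the model ignores arbitration overhead and caches.

```python
def bandwidth_left_for_cpu(memory_bw, device_bw):
    """Return (leftover bandwidth, fraction of memory bandwidth left over).

    Both arguments use the same unit (for example MB/s). The model assumes
    the device streams at its full rate the whole time.
    """
    if device_bw > memory_bw:
        raise ValueError("device cannot consume more than memory provides")
    leftover = memory_bw - device_bw
    return leftover, leftover / memory_bw


# Illustrative round numbers only, not taken from any real hardware.
leftover, fraction = bandwidth_left_for_cpu(memory_bw=20_000, device_bw=1_000)
print(leftover, fraction)
```

With these made-up figures the device would take about 5% of the memory bandwidth, leaving the rest for the CPU.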

Solution 2

The key point to note is that a DMA transfer uses only part of the bus bandwidth, so the rest remains free for other jobs/processes to run. This is the key advantage of DMA over programmed I/O. Hope this answers your question :-)

Solution 3

> But, DMA to memory data/control channel is busy during this transfer.

Being busy doesn't mean you're saturated and unable to do other concurrent transfers. It's true the memory may be a bit less responsive than normal, but CPUs can still do useful work, and there are other things they can do unimpeded: crunch data that's already in their cache, receive hardware interrupts, etc. And it's not just about the quantity of data, but the rate at which it's generated. Some devices create data in hard real-time and need it to be consumed promptly, otherwise it's overwritten and lost. To handle this without DMA, the software may have to nail itself to a CPU core and then spin, waiting and reading, avoiding being swapped onto some other task for an entire scheduler time slice, even though most of the time further data isn't even ready.
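The cost of spinning can be illustrated with a toy comparison. Everything here is an assumption made for the sketch: the function names, the tick counts, and the simplification that the CPU is completely free while the device fills memory (in reality it would see some memory contention).

```python
# Toy comparison: busy-polling a device register vs. letting the device
# deposit samples in memory. Tick counts and the period are made-up values.

def poll_device(total_ticks, period):
    """CPU checks the device every tick so that no sample is overwritten."""
    samples, wasted_polls = [], 0
    for tick in range(1, total_ticks + 1):
        data_ready = (tick % period == 0)   # device produces a sample
        if data_ready:
            samples.append(tick)            # CPU reads it in time
        else:
            wasted_polls += 1               # CPU checked, nothing there
    return samples, wasted_polls


def dma_device(total_ticks, period):
    """Device writes samples to a buffer; the CPU is free on every tick."""
    buffer, free_cpu_ticks = [], 0
    for tick in range(1, total_ticks + 1):
        if tick % period == 0:
            buffer.append(tick)             # written by the device, not the CPU
        free_cpu_ticks += 1                 # CPU can run other work
    return buffer, free_cpu_ticks


polled, wasted = poll_device(total_ticks=1000, period=100)
via_dma, free_ticks = dma_device(total_ticks=1000, period=100)
print(len(polled), wasted, len(via_dma), free_ticks)
```

Both approaches capture the same samples in this model; the difference is that the polling loop spends almost all of its checks finding nothing, while the DMA-style version leaves those ticks available for other work.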

Comments

Boolean almost 2 years

I read that if DMA is available, then processor can route long read or write requests of disk blocks to the DMA and concentrate on other work. But, DMA to memory data/control channel is busy during this transfer. What else can processor do during this time?

Jerry Coffin over 9 years

@artlessnoise: DMA and bus mastering are similar, but not really the same thing. Bus mastering isn't obsolete, but DMA pretty much is. For details of DMA, see (for example) the Intel 8237.

artless noise over 9 years

Ok, I think we have a disconnect on the meaning of DMA. See: Wiki DMA; this is ambiguous and I see your definition and mine. In the Linux dma directory, these are fitting my definition. A 'memory to memory', 'peripheral to memory' and 'memory to peripheral' device. All involve bus mastering and at least what I think DMA is. For all CPUs without PIO (direct mapped peripherals; most excluding the x86), DMA is an advantage.

embert over 9 years

@DashmeshSingh Please stop adding those "references". Thank you.