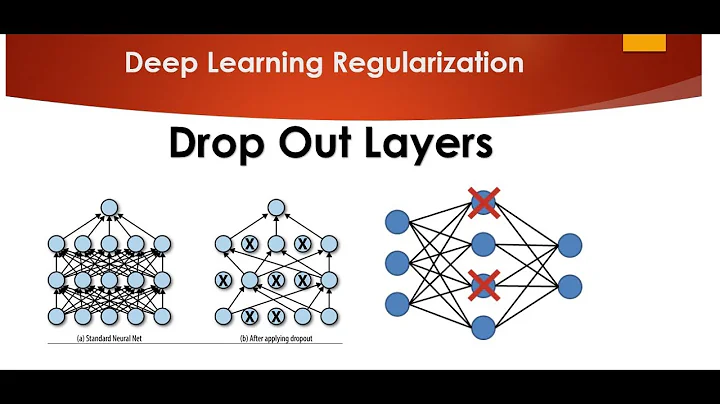

Does dropout layer go before or after dense layer in TensorFlow?

It is not an either/or situation. Informally speaking, common wisdom says to apply dropout after dense layers and not so much after convolutional or pooling ones, so at first glance the answer would depend on what exactly `prev_layer` is in your second code snippet.

Nevertheless, this "design principle" is routinely violated nowadays (see some interesting related discussions on Reddit and Cross Validated); even the MNIST CNN example included in Keras applies dropout both after the max-pooling layer and after the dense one:

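```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Dropout, Flatten, Dense

# MNIST: 28x28 grayscale images, 10 digit classes
input_shape = (28, 28, 1)
num_classes = 10

model = Sequential()
model.add(Conv2D(32, kernel_size=(3, 3),
                 activation='relu',
                 input_shape=input_shape))
model.add(Conv2D(64, (3, 3), activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(0.25))  # <-- dropout here
model.add(Flatten())
model.add(Dense(128, activation='relu'))
model.add(Dropout(0.5))  # <-- and here
model.add(Dense(num_classes, activation='softmax'))
```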
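Wherever it is placed, a `Dropout` layer behaves differently in training and in inference. A minimal sketch, assuming TensorFlow 2.x with `tf.keras` (the all-ones input and `seed=0` are arbitrary choices for illustration):

```python
import numpy as np
import tensorflow as tf

# Same rate as the Dropout(0.5) after the Dense(128) layer above.
layer = tf.keras.layers.Dropout(0.5, seed=0)
x = tf.ones((1, 10))

# training=True: each unit is zeroed with probability 0.5, and the surviving
# units are scaled by 1 / (1 - 0.5) = 2 so the expected activation is unchanged.
train_out = layer(x, training=True).numpy()

# training=False (inference): dropout is the identity.
infer_out = layer(x, training=False).numpy()

print(train_out)  # a random mix of 0.0 and 2.0
print(infer_out)  # all ones
```

Keras sets `training` automatically (`fit` runs with dropout active, `predict` and `evaluate` run without it), which is what `training=mode == tf.estimator.ModeKeys.TRAIN` does by hand in the `tf.layers` snippets.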

So, both your code snippets are valid, and we can easily imagine a third valid option as well:

```python
dropout = tf.layers.dropout(prev_layer, [...])
dense = tf.layers.dense(dropout, units=1024, activation=tf.nn.relu)
dropout2 = tf.layers.dropout(dense, [...])
logits = tf.layers.dense(dropout2, units=params['output_classes'])
```
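In TensorFlow 2.x, `tf.layers` survives only as `tf.compat.v1.layers`, so the same "dropout on both sides" layout would more likely be written with `tf.keras`. A rough sketch, where the 784 input features, 10 classes and both dropout rates of 0.5 are hypothetical values chosen for illustration:

```python
import tensorflow as tf

# Hypothetical sizes: 784 input features (a flattened 28x28 image), 10 classes.
NUM_FEATURES = 784
NUM_CLASSES = 10

inputs = tf.keras.Input(shape=(NUM_FEATURES,))
x = tf.keras.layers.Dropout(0.5)(inputs)              # dropout on the dense layer's inputs
x = tf.keras.layers.Dense(1024, activation="relu")(x)
x = tf.keras.layers.Dropout(0.5)(x)                   # dropout on the dense layer's outputs
logits = tf.keras.layers.Dense(NUM_CLASSES)(x)        # no activation: raw logits

model = tf.keras.Model(inputs, logits)
model.summary()
```

Because Keras passes the `training` flag itself, there is no need to thread an estimator `mode` through each dropout call.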

As general advice: tutorials such as the one you link to are only trying to familiarize you with the tools and the (very) general principles, so "overinterpreting" the solutions shown is not recommended.


rodrigo-silveira


Updated on May 22, 2022


According to *A Guide to TF Layers*, the dropout layer goes after the last dense layer:

```python
dense = tf.layers.dense(input, units=1024, activation=tf.nn.relu)
dropout = tf.layers.dropout(dense, rate=params['dropout_rate'],
                            training=mode == tf.estimator.ModeKeys.TRAIN)
logits = tf.layers.dense(dropout, units=params['output_classes'])
```

Doesn't it make more sense to have it before that dense layer, so it learns the mapping from input to output with the dropout effect?

```python
dropout = tf.layers.dropout(prev_layer, rate=params['dropout_rate'],
                            training=mode == tf.estimator.ModeKeys.TRAIN)
dense = tf.layers.dense(dropout, units=1024, activation=tf.nn.relu)
logits = tf.layers.dense(dense, units=params['output_classes'])
```
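Both layouts in the question train with dropout; they differ only in *which* activations get randomly zeroed (the inputs of the 1024-unit layer versus its outputs). The hypothetical sketch below, assuming TensorFlow 2.x `tf.keras` and made-up sizes (256 features for `prev_layer`, 10 classes), builds each variant and checks that they have the same number of parameters, since `Dropout` itself has no weights:

```python
import tensorflow as tf

# Hypothetical sizes: prev_layer has 256 features, 10 output classes.
PREV_UNITS = 256
NUM_CLASSES = 10


def build(dropout_before_dense: bool) -> tf.keras.Model:
    inputs = tf.keras.Input(shape=(PREV_UNITS,))
    x = inputs
    if dropout_before_dense:
        # Question's proposal: mask the inputs of the 1024-unit layer.
        x = tf.keras.layers.Dropout(0.5)(x)
        x = tf.keras.layers.Dense(1024, activation="relu")(x)
    else:
        # Tutorial's layout: mask the 1024 hidden activations.
        x = tf.keras.layers.Dense(1024, activation="relu")(x)
        x = tf.keras.layers.Dropout(0.5)(x)
    logits = tf.keras.layers.Dense(NUM_CLASSES)(x)
    return tf.keras.Model(inputs, logits)


before = build(dropout_before_dense=True)
after = build(dropout_before_dense=False)

# Dropout adds no weights, so both layouts have the same parameter count:
# (256*1024 + 1024) + (1024*10 + 10) = 263,168 + 10,250 = 273,418.
print(before.count_params(), after.count_params())
```

So the choice is not about model capacity but about where the noise is injected, which is why either placement (or both) can be a reasonable design.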
