Easily get a particular column from output without sed or awk

Solution 1

I'm not sure why

ls -hal / | awk '{print $5, $9}'

seems to you to be much more disruptive to your thought processes than

ls -hal / | cut -d'\s' -f5,9

would have been, had it worked. Would you really have to write that down? It only takes a few awk lines before adding the {} becomes automatic. (For me the hardest issue is remembering which field number corresponds to which piece of data, but perhaps you don't have that problem.)

You don't have to use all of awk's features; for simply outputing specific columns, you need to know very little awk.

The irritating issue would have been if you'd wanted to output the symlink as well as the filename, or if your filenames might have spaces in them. (Or, worse, newlines). With the hypothetical regex-aware cut, this is not a problem (except for the newlines); you would just replace -f5,9 with -f5,9-. However, there is no awk syntax for "fields 9 through to the end", and you're left with having to remember how to write a for loop.

Here's a little shell script which turns cut-style -f options into an awk program, and then runs the awk program. It needs much better error-checking, but it seems to work. (Added bonus: handles the -d option by passing it to the awk program.)

#!/bin/bash

prog=\{

while getopts f:d: opt; do

case $opt in

f) IFS=, read -ra fields <<<"$OPTARG"

for field in "${fields[@]}"; do

case $field in

*-*) low=${field%-*}; high=${field#*-}

if [[ -z $low ]]; then low=1; fi

if [[ -z $high ]]; then high=NF; fi

;;

"") ;;

*) low=$field; high=$field ;;

esac

if [[ $low == $high ]]; then

prog+='printf "%s ", $'$low';'

else

prog+='for (i='$low';i<='$high';++i) printf "%s ", $i;'

fi

done

prog+='printf "\n"}'

;;

d) sep="-F$OPTARG";;

*) exit 1;;

esac

done

if [[ -n $sep ]]; then

awk "$sep" "$prog"

else

awk "$prog"

fi

Quick test:

$ ls -hal / | ./cut.sh -f5,9-

7.0K bin

5.0K boot

4.2K dev

9.0K etc

1.0K home

8.0K host

33 initrd.img -> /boot/initrd.img-3.2.0-51-generic

33 initrd.img.old -> /boot/initrd.img-3.2.0-49-generic

...

Solution 2

I believe that there are no simpler solution than sed or awk. But you can write your own function.

Here is list function (copy paste to your terminal):

function list() { ls -hal $1 | awk '{printf "%-10s%-30s\n", $5, $9}'; }

then use list function:

list /

list /etc

Solution 3

You can't just talk about "columns" without also explaining what a column is!

Very common in unix text processing is having whitespace as the column (field) separator and (naturally) newline as the row or record separator.

Then awk is an excellent tool, that is very readable as well:

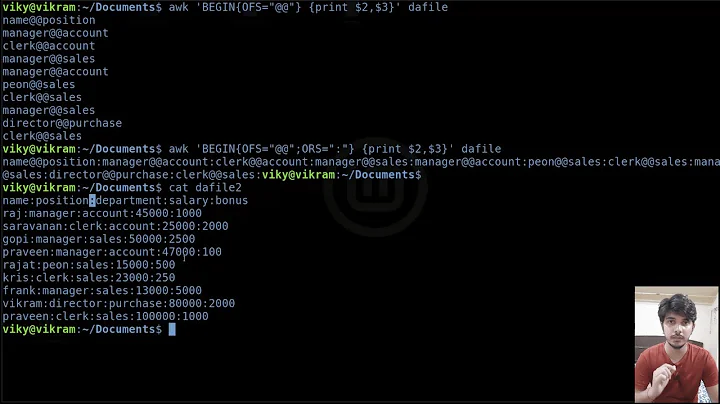

# for words (columns) 5 and 9:

ls -lah | awk '{print $5 " " $9}'

# or this, for the fifth and the last word:

ls -lah | awk '{print $5 " " $NF}'

If the columns are instead ordered character-wise, perhaps cut -c is better.

ls -lah | cut -c 31-33,46-

You can tell awk to use other field separators with the -F option.

If you don't use -c (or -b) with cut, use -f to specify which columns to output.

The trick is knowledge about the input

Generally speaking, it's not always a good idea to parse output of ls, df, ps and similar tools with text-processing tools, at least not if you wish to be portable/compatible. In those cases, try to force the output in a POSIX-defined format. Sometimes this can be achieved by passing a certain option (-P perhaps) to the command generating the output. Sometimes by setting an environment variable such as POSIXLY_CORRECT or calling a specific binary, such as /usr/xpg4/bin/ls.

Solution 4

This is an old question, but at the risk of rocking the boat, I have to say I agree with @iconoclast: there really ought to be a good, simple way of extracting selected columns in Unix.

Now, yes, awk can easily do this, it's true. But I think it's also true that it's "overkill" for a simple, common task. And even if the overkill factor isn't a concern, the extra typing certainly is: given how often I have columns to extract, I'd really rather not have to type print, and those braces, and those dollar signs, and those quotes, every time. And if the existence of awk and sed really imply that we don't need a simple column extractor, then by the same token I guess we don't need grep, either!

The cut utility ought to be the answer, but it's sadly broken. Its default is not "whitespace separated columns", despite the (IMO) overwhelming predominance of that need. And, in fact, it can't do arbitrary whitespace separation at all! (Thus iconoclast's question.)

Something like 35 years ago, before I'd even heard of cut, I wrote my own version. It works well; I'm still using it every day; I commend it to anyone who would like a better cut and who isn't hung up on using only "standard" tools. It's got one significant drawback in that its name, "column", has since been taken by a BSD utility.

Anyway, with this version of column in hand, my answer to iconoclast's question is

ls -hal / | column 5 9

or if you wish

ls -hal / | column 5,9

Man page and source tarball at http://www.eskimo.com/~scs/src/#column . Use it if you're interested; ignore it as the off-topic answer I suppose this is if you're not.

Solution 5

If you just want to display these two attributes (size and name), you can also use the stat tool (which is designed for just that - querying file attributes):

stat -c "%s %n" .* *

will display the size and name of all files (including "hidden" files) in the current directory.

Notice: You chose ls as one application example for the use cases where you want to extract specific columns from the output of a program. Unfortunately, ls is one of the examples where you should really avoid using text-processing tools to parse the output.

Related videos on Youtube

iconoclast

Contractor at Infinite Red, a mobile app & web site design & development company with employees worldwide, experts in React Native, Rails, Phoenix, and all things JavaScript!

Updated on September 18, 2022Comments

-

iconoclast over 1 year

Is there a quicker way of getting a couple of column of values than futzing with

sedandawk?For instance, if I have the output of

ls -hal /and I want to just get the file and directory names and sizes, how can I easily and quickly doing that, without having to spend several minutes tweaking my command.total 16078 drwxr-xr-x 33 root wheel 1.2K Aug 13 16:57 . drwxr-xr-x 33 root wheel 1.2K Aug 13 16:57 .. -rw-rw-r-- 1 root admin 15K Aug 14 00:41 .DS_Store d--x--x--x 8 root wheel 272B Jun 20 16:40 .DocumentRevisions-V100 drwxr-xr-x+ 3 root wheel 102B Mar 27 12:26 .MobileBackups drwx------ 5 root wheel 170B Jun 20 15:56 .Spotlight-V100 d-wx-wx-wt 2 root wheel 68B Mar 27 12:26 .Trashes drwxrwxrwx 4 root wheel 136B Mar 30 20:00 .bzvol srwxrwxrwx 1 root wheel 0B Aug 13 16:57 .dbfseventsd ---------- 1 root admin 0B Aug 16 2012 .file drwx------ 1275 root wheel 42K Aug 14 00:05 .fseventsd drwxr-xr-x@ 2 root wheel 68B Jun 20 2012 .vol drwxrwxr-x+ 289 root admin 9.6K Aug 13 10:29 Applications drwxrwxr-x 7 root admin 238B Mar 5 20:47 Developer drwxr-xr-x+ 69 root wheel 2.3K Aug 12 21:36 Library drwxr-xr-x@ 2 root wheel 68B Aug 16 2012 Network drwxr-xr-x+ 4 root wheel 136B Mar 27 12:17 System drwxr-xr-x 6 root admin 204B Mar 27 12:22 Users drwxrwxrwt@ 6 root admin 204B Aug 13 23:57 Volumes drwxr-xr-x@ 39 root wheel 1.3K Jun 20 15:54 bin drwxrwxr-t@ 2 root admin 68B Aug 16 2012 cores dr-xr-xr-x 3 root wheel 4.8K Jul 6 13:08 dev lrwxr-xr-x@ 1 root wheel 11B Mar 27 12:09 etc -> private/etc dr-xr-xr-x 2 root wheel 1B Aug 12 21:41 home -rw-r--r--@ 1 root wheel 7.8M May 1 20:57 mach_kernel dr-xr-xr-x 2 root wheel 1B Aug 12 21:41 net drwxr-xr-x@ 6 root wheel 204B Mar 27 12:22 private drwxr-xr-x@ 68 root wheel 2.3K Jun 20 15:54 sbin lrwxr-xr-x@ 1 root wheel 11B Mar 27 12:09 tmp -> private/tmp drwxr-xr-x@ 13 root wheel 442B Mar 29 23:32 usr lrwxr-xr-x@ 1 root wheel 11B Mar 27 12:09 var -> private/varI realize there are a bazillion options for

lsand I could probably do it for this particular example that way, but this is a general problem and I'd like a general solution to getting specific columns easily and quickly.cutdoesn't cut it because it doesn't take a regular expression, and I virtually never have the situation where there's a single space delimiting columns. This would be perfect if it would work:ls -hal / | cut -d'\s' -f5,9awkandsedare more general than I want, basically entire languages unto themselves. I have nothing against them, it's just that unless I've recently being doing a lot with them, it requires a pretty sizable mental shift to start thinking in their terms and write something that works. I'm usually in the middle of thinking about some other problem I'm trying to solve and suddenly having to solve ased/awkproblem throws off my focus.Is there a flexible shortcut to achieving what I want?

-

Admin about 10 yearsfutz |fəts| verb [ no obj. ] informal waste time; idle or busy oneself aimlessly; Getting to know

Admin about 10 yearsfutz |fəts| verb [ no obj. ] informal waste time; idle or busy oneself aimlessly; Getting to knowsedandawkis in no way futzing my friend. If it is anything it is opposite as it saves many many hours. -

Admin about 10 yearsThat's an overly-narrow definition of "futz". Would you prefer I used "fiddle"? I'm in no way disputing the value of

Admin about 10 yearsThat's an overly-narrow definition of "futz". Would you prefer I used "fiddle"? I'm in no way disputing the value ofsedorawk, just pointing out that I don't want to shift focus from one thing to another. -

Admin almost 4 yearsOpposed to most others in this thread, I think this is a good idea. AWK and SED are hard to get into, if you are new to this type of language. Especially, if you don't use it much. I use both from time to time, not too often, but definitely regularly and it is definitely not really easy to handle it, especially after a long break from them. I guess it would be helpful if there were a awk/sed wrapper with a much simpler API. That would be 1. a possible 2. not bad solution, I think.

Admin almost 4 yearsOpposed to most others in this thread, I think this is a good idea. AWK and SED are hard to get into, if you are new to this type of language. Especially, if you don't use it much. I use both from time to time, not too often, but definitely regularly and it is definitely not really easy to handle it, especially after a long break from them. I guess it would be helpful if there were a awk/sed wrapper with a much simpler API. That would be 1. a possible 2. not bad solution, I think. -

Admin over 2 yearsSee also

Admin over 2 yearsSee alsodu -ahd1for this particular task. Disk usage, -a to include files, -h for human-readable, -d1 for max depth 1.

-

-

iconoclast about 10 yearsActually, the problem here is that the function doesn't take arguments, so it's very narrowly focussed on a specific case, and is not flexible. If you could work out the quoting so that you can pass arguments into it, that would be useful. And of course remove everything before the pipe, so you can use it with any arbitrary input, and specify which columns you want.

-

Zac Thompson over 6 yearsin other words, please re-create awk but give it a different name and fewer features

Zac Thompson over 6 yearsin other words, please re-create awk but give it a different name and fewer features -

iconoclast over 4 years@ZacThompson: yeah, like 0.1% of the features, and a much simpler API. That would be quite useful.

-

iconoclast over 4 yearsAwk is quite literally another language with different syntax. Would you understand if we were talking about AppleScript instead? Or take human languages: no matter how well you know another human language, switching back and forth requires extra effort, unless you have a lot of practice making that switch frequently.

-

iconoclast over 2 yearsThanks this is interesting but not relevant to the question of getting columns of data. I only gave that

lsexample for the sake of having something concrete to talk about. I'm concerned with easily getting columns from shell output, not with that particular use case. -

AdminBee over 2 years@iconoclast I see, but unfortunately your example (the output of

ls) is one use case where you really should avoid using text-processing tools for parsing. -

iconoclast over 2 yearsPretend this doesn't use

ls.lsis 1000% irrelevant to the question. -

Stephen Kitt over 2 yearsYour first command doesn’t use

find. Your second option misses the point of the question, which is about generic extraction of column information, not the specific file information fromls. -

αғsнιη over 2 yearsalso your awk command says the same thing as mentioned in other existing answers

αғsнιη over 2 yearsalso your awk command says the same thing as mentioned in other existing answers