Efficient distance calculation between N points and a reference in numpy/scipy

Solution 1

I would take a look at scipy.spatial.distance.cdist:

http://docs.scipy.org/doc/scipy/reference/generated/scipy.spatial.distance.cdist.html

import numpy as np

import scipy

a = np.random.normal(size=(10,3))

b = np.random.normal(size=(1,3))

dist = scipy.spatial.distance.cdist(a,b) # pick the appropriate distance metric

dist for the default distant metric is equivalent to:

np.sqrt(np.sum((a-b)**2,axis=1))

although cdist is much more efficient for large arrays (on my machine for your size problem, cdist is faster by a factor of ~35x).

Solution 2

I would use the sklearn implementation of the euclidean distance. The advantage is the usage of the more efficient expression by using Matrix multiplication:

dist(x, y) = sqrt(np.dot(x, x) - 2 * np.dot(x, y) + np.dot(y, y)

A simple script would look like this:

import numpy as np

x = np.random.rand(1000, 3)

y = np.random.rand(1000, 3)

dist = np.sqrt(np.dot(x, x)) - (np.dot(x, y) + np.dot(x, y)) + np.dot(y, y)

The advantage of this approach has been nicely described in the sklearn documentation: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.pairwise.euclidean_distances.html#sklearn.metrics.pairwise.euclidean_distances

I am using this approach to crunch large datamatrices (10000, 10000) with some minor modifications like using the np.einsum function.

Solution 3

This might not answer your question directly, but if you are after all permutations of particle pairs, I've found the following solution to be faster than the pdist function in some cases.

import numpy as np

L = 100 # simulation box dimension

N = 100 # Number of particles

dim = 2 # Dimensions

# Generate random positions of particles

r = (np.random.random(size=(N,dim))-0.5)*L

# uti is a list of two (1-D) numpy arrays

# containing the indices of the upper triangular matrix

uti = np.triu_indices(100,k=1) # k=1 eliminates diagonal indices

# uti[0] is i, and uti[1] is j from the previous example

dr = r[uti[0]] - r[uti[1]] # computes differences between particle positions

D = np.sqrt(np.sum(dr*dr, axis=1)) # computes distances; D is a 4950 x 1 np array

See this for a more in-depth look on this matter, on my blog post.

Solution 4

You can also use the development of the norm (similar to remarkable identities). This is probably the most efficent way to compute the distance of a matrix of points.

Here is a code snippet that I originally used for a k-Nearest-Neighbors implementation, in Octave, but you can easily adapt it to numpy since it only uses matrix multiplications (the equivalent is numpy.dot()):

% Computing the euclidian distance between each known point (Xapp) and unknown points (Xtest)

% Note: we use the development of the norm just like a remarkable identity:

% ||x1 - x2||^2 = ||x1||^2 + ||x2||^2 - 2*<x1,x2>

[napp, d] = size(Xapp);

[ntest, d] = size(Xtest);

A = sum(Xapp.^2, 2);

A = repmat(A, 1, ntest);

B = sum(Xtest.^2, 2);

B = repmat(B', napp, 1);

C = Xapp*Xtest';

dist = A+B-2.*C;

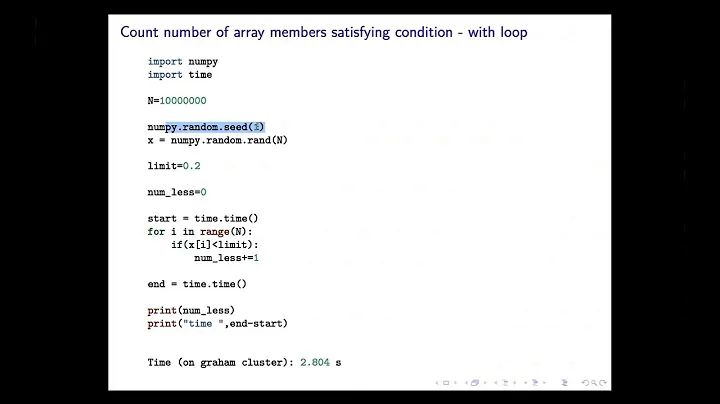

Related videos on Youtube

D. Huang

Updated on July 09, 2022Comments

-

D. Huang almost 2 years

I just started using scipy/numpy. I have an 100000*3 array, each row is a coordinate, and a 1*3 center point. I want to calculate the distance for each row in the array to the center and store them in another array. What is the most efficient way to do it?

-

Fred Foo almost 13 yearspossible duplicate of calculate euclidean distance with numpy

-

JoshAdel almost 13 years@larsmans: I don't think it's a duplicate since the answers only pertain to the distance between two points rather than the distance between N points and a reference point. And certainly the responses don't point the OP to the efficient scipy solution that I show below.

-

-

JoshAdel almost 13 yearsOn my machine this is still 18x slower than

cdistfor the OP's problem size. -

eat almost 13 years@JoshAdel: That's big difference. FWIW, with

numpy1.6 in my modest machine: forn= 1e5, timing s arecdist3.5 ms anddot9.5 ms. Sodotis only some 3 times slower. However with much smallern(<2e3) 'dot' will be faster. Thanks -

drewid over 7 yearsdoesn't address the question of calculating against a single reference point

-

BGabor about 7 years

numpy.sqrt((X**2).sum(axis=1)[:, None] - 2 * X.dot(Y.transpose()) + ((Y**2).sum(axis=1)[None, :]) -

Lithilion over 5 yearsPlease explain your solution

-

MikeB over 3 yearsIn this answer, where is the single reference point?

MikeB over 3 yearsIn this answer, where is the single reference point? -

Aaron Bramson about 3 years

Aaron Bramson about 3 yearsbis the single refence point in three dimensions,ais 10 other points in three dimensions. -

Ariel over 2 yearsin case b has more points (pairs)

np.sqrt(np.sum((hs[:, None] - an)**2, axis=2))