Failed create pod sandbox: rpc error: code = Unknown desc = NetworkPlugin cni failed to set up pod network

Solution 1

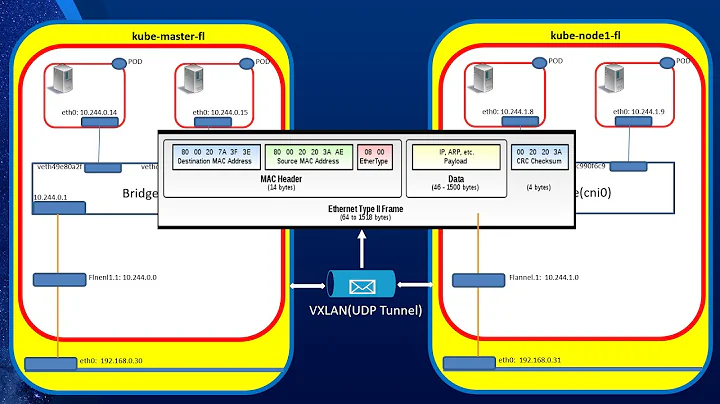

Ensure that both /etc/cni/net.d and /opt/cni/bin exist on all Nodes and are correctly populated with the CNI configuration files and binaries. For flannel specifically, one might make use of the flannel cni repo.
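As a rough illustration of that check, here is a minimal Python sketch that could be run on each Node. The function name check_cni_layout is made up for this example; only the two default paths come from the answer above. It only verifies that the directories exist and are not empty, which is a weaker test than "correctly populated".

```python
from pathlib import Path


def check_cni_layout(conf_dir="/etc/cni/net.d", bin_dir="/opt/cni/bin"):
    """Return a list of problems; an empty list means both directories exist and are non-empty."""
    problems = []
    for label, directory in (("config", Path(conf_dir)), ("binary", Path(bin_dir))):
        if not directory.is_dir():
            problems.append(f"{label} directory {directory} does not exist")
        elif not any(directory.iterdir()):
            problems.append(f"{label} directory {directory} is empty")
    return problems
```

Calling check_cni_layout() with no arguments inspects the default paths; printing the returned list on every Node shows quickly which Node is missing files.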

Solution 2

I faced a similar issue when using calico as the CNI.

The container remained in the ContainerCreating state. I checked /etc/cni/net.d and /opt/cni/bin on the master and both are present, but I am not sure whether they are required on the worker node as well.

root@KubernetesMaster:/opt/cni/bin# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-5c7588df-5zds6 0/1 ContainerCreating 0 21m

root@KubernetesMaster:/opt/cni/bin# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubernetesmaster Ready master 26m v1.13.4

kubernetesslave1 Ready <none> 22m v1.13.4

root@KubernetesMaster:/opt/cni/bin#

kubectl describe pods

Name: nginx-5c7588df-5zds6

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: kubernetesslave1/10.0.3.80

Start Time: Sun, 17 Mar 2019 05:13:30 +0000

Labels: app=nginx

pod-template-hash=5c7588df

Annotations: <none>

Status: Pending

IP:

Controlled By: ReplicaSet/nginx-5c7588df

Containers:

nginx:

Container ID:

Image: nginx

Image ID:

Port: <none>

Host Port: <none>

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-qtfbs (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

default-token-qtfbs:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-qtfbs

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 18m default-scheduler Successfully assigned default/nginx-5c7588df-5zds6 to kubernetesslave1

Warning FailedCreatePodSandBox 18m kubelet, kubernetesslave1 Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "123d527490944d80f44b1976b82dbae5dc56934aabf59cf89f151736d7ea8adc" network for pod "nginx-5c7588df-5zds6": NetworkPlugin cni failed to set up pod "nginx-5c7588df-5zds6_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

Warning FailedCreatePodSandBox 18m kubelet, kubernetesslave1 Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "8cc5e62ebaab7075782c2248e00d795191c45906cc9579464a00c09a2bc88b71" network for pod "nginx-5c7588df-5zds6": NetworkPlugin cni failed to set up pod "nginx-5c7588df-5zds6_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

Warning FailedCreatePodSandBox 18m kubelet, kubernetesslave1 Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "30ffdeace558b0935d1ed3c2e59480e2dd98e983b747dacae707d1baa222353f" network for pod "nginx-5c7588df-5zds6": NetworkPlugin cni failed to set up pod "nginx-5c7588df-5zds6_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

Warning FailedCreatePodSandBox 18m kubelet, kubernetesslave1 Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "630e85451b6ce2452839c4cfd1ecb9acce4120515702edf29421c123cf231213" network for pod "nginx-5c7588df-5zds6": NetworkPlugin cni failed to set up pod "nginx-5c7588df-5zds6_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

Warning FailedCreatePodSandBox 18m kubelet, kubernetesslave1 Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "820b919b7edcfc3081711bb78b79d33e5be3f7dafcbad29fe46b6d7aa22227aa" network for pod "nginx-5c7588df-5zds6": NetworkPlugin cni failed to set up pod "nginx-5c7588df-5zds6_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

Warning FailedCreatePodSandBox 18m kubelet, kubernetesslave1 Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "abbfb5d2756f12802072039dec20ba52f546ae755aaa642a9a75c86577be589f" network for pod "nginx-5c7588df-5zds6": NetworkPlugin cni failed to set up pod "nginx-5c7588df-5zds6_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

Warning FailedCreatePodSandBox 18m kubelet, kubernetesslave1 Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "dfeb46ffda4d0f8a434f3f3af04328fcc4b6c7cafaa62626e41b705b06d98cc4" network for pod "nginx-5c7588df-5zds6": NetworkPlugin cni failed to set up pod "nginx-5c7588df-5zds6_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

Warning FailedCreatePodSandBox 18m kubelet, kubernetesslave1 Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "9ae3f47bb0282a56e607779d3267127ee8b0ae1d7f416f5a184682119203b1c8" network for pod "nginx-5c7588df-5zds6": NetworkPlugin cni failed to set up pod "nginx-5c7588df-5zds6_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

Warning FailedCreatePodSandBox 18m kubelet, kubernetesslave1 Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "546d07f1864728b2e2675c066775f94d658e221ada5fb4ed6bf6689ec7b8de23" network for pod "nginx-5c7588df-5zds6": NetworkPlugin cni failed to set up pod "nginx-5c7588df-5zds6_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

Normal SandboxChanged 18m (x12 over 18m) kubelet, kubernetesslave1 Pod sandbox changed, it will be killed and re-created.

Warning FailedCreatePodSandBox 3m39s (x829 over 18m) kubelet, kubernetesslave1 (combined from similar events): Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "f586be437843537a3082f37ad139c88d0eacfbe99ddf00621efd4dc049a268cc" network for pod "nginx-5c7588df-5zds6": NetworkPlugin cni failed to set up pod "nginx-5c7588df-5zds6_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

root@KubernetesMaster:/etc/cni/net.d#

On the worker node, the NGINX container tries to come up but keeps exiting. I am not sure what's going on here. I am new to Kubernetes and not able to fix this issue:

root@kubernetesslave1:/home/ubuntu# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5ad5500e8270 fadcc5d2b066 "/usr/local/bin/kube…" 3 minutes ago Up 3 minutes k8s_kube-proxy_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

b1c9929ebe9e k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_calico-node-749qx_kube-system_4e2d8c9c-4873-11e9-a33a-06516e7d78c4_1

ceb78340b563 k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

root@kubernetesslave1:/home/ubuntu# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5ad5500e8270 fadcc5d2b066 "/usr/local/bin/kube…" 3 minutes ago Up 3 minutes k8s_kube-proxy_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

b1c9929ebe9e k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_calico-node-749qx_kube-system_4e2d8c9c-4873-11e9-a33a-06516e7d78c4_1

ceb78340b563 k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

root@kubernetesslave1:/home/ubuntu# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5ad5500e8270 fadcc5d2b066 "/usr/local/bin/kube…" 3 minutes ago Up 3 minutes k8s_kube-proxy_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

b1c9929ebe9e k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_calico-node-749qx_kube-system_4e2d8c9c-4873-11e9-a33a-06516e7d78c4_1

ceb78340b563 k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

root@kubernetesslave1:/home/ubuntu# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

94b2994401d0 k8s.gcr.io/pause:3.1 "/pause" 1 second ago Up Less than a second k8s_POD_nginx-5c7588df-5zds6_default_677a722b-4873-11e9-a33a-06516e7d78c4_534

5ad5500e8270 fadcc5d2b066 "/usr/local/bin/kube…" 4 minutes ago Up 4 minutes k8s_kube-proxy_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

b1c9929ebe9e k8s.gcr.io/pause:3.1 "/pause" 4 minutes ago Up 4 minutes k8s_POD_calico-node-749qx_kube-system_4e2d8c9c-4873-11e9-a33a-06516e7d78c4_1

ceb78340b563 k8s.gcr.io/pause:3.1 "/pause" 4 minutes ago Up 4 minutes k8s_POD_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

root@kubernetesslave1:/home/ubuntu# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5ad5500e8270 fadcc5d2b066 "/usr/local/bin/kube…" 4 minutes ago Up 4 minutes k8s_kube-proxy_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

b1c9929ebe9e k8s.gcr.io/pause:3.1 "/pause" 4 minutes ago Up 4 minutes k8s_POD_calico-node-749qx_kube-system_4e2d8c9c-4873-11e9-a33a-06516e7d78c4_1

ceb78340b563 k8s.gcr.io/pause:3.1 "/pause" 4 minutes ago Up 4 minutes k8s_POD_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

root@kubernetesslave1:/home/ubuntu# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f72500cae2b7 k8s.gcr.io/pause:3.1 "/pause" 1 second ago Up Less than a second k8s_POD_nginx-5c7588df-5zds6_default_677a722b-4873-11e9-a33a-06516e7d78c4_585

5ad5500e8270 fadcc5d2b066 "/usr/local/bin/kube…" 4 minutes ago Up 4 minutes k8s_kube-proxy_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

b1c9929ebe9e k8s.gcr.io/pause:3.1 "/pause" 4 minutes ago Up 4 minutes k8s_POD_calico-node-749qx_kube-system_4e2d8c9c-4873-11e9-a33a-06516e7d78c4_1

ceb78340b563 k8s.gcr.io/pause:3.1 "/pause" 4 minutes ago Up 4 minutes k8s_POD_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

root@kubernetesslave1:/home/ubuntu# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5ad5500e8270 fadcc5d2b066 "/usr/local/bin/kube…" 5 minutes ago Up 5 minutes k8s_kube-proxy_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

b1c9929ebe9e k8s.gcr.io/pause:3.1 "/pause" 5 minutes ago Up 5 minutes k8s_POD_calico-node-749qx_kube-system_4e2d8c9c-4873-11e9-a33a-06516e7d78c4_1

ceb78340b563 k8s.gcr.io/pause:3.1 "/pause" 5 minutes ago Up 5 minutes k8s_POD_kube-proxy-f24gd_kube-system_4e2d313a-4873-11e9-a33a-06516e7d78c4_1

I checked /etc/cni/net.d and /opt/cni/bin on the worker node as well, and they are there:

root@kubernetesslave1:/home/ubuntu# cd /etc/cni

root@kubernetesslave1:/etc/cni# ls -ltr

total 4

drwxr-xr-x 2 root root 4096 Mar 17 05:19 net.d

root@kubernetesslave1:/etc/cni# cd /opt/cni

root@kubernetesslave1:/opt/cni# ls -ltr

total 4

drwxr-xr-x 2 root root 4096 Mar 17 05:19 bin

root@kubernetesslave1:/opt/cni# cd bin

root@kubernetesslave1:/opt/cni/bin# ls -ltr

total 107440

-rwxr-xr-x 1 root root 3890407 Aug 17 2017 bridge

-rwxr-xr-x 1 root root 3475802 Aug 17 2017 ipvlan

-rwxr-xr-x 1 root root 3520724 Aug 17 2017 macvlan

-rwxr-xr-x 1 root root 3877986 Aug 17 2017 ptp

-rwxr-xr-x 1 root root 3475750 Aug 17 2017 vlan

-rwxr-xr-x 1 root root 9921982 Aug 17 2017 dhcp

-rwxr-xr-x 1 root root 2605279 Aug 17 2017 sample

-rwxr-xr-x 1 root root 32351072 Mar 17 05:19 calico

-rwxr-xr-x 1 root root 31490656 Mar 17 05:19 calico-ipam

-rwxr-xr-x 1 root root 2856252 Mar 17 05:19 flannel

-rwxr-xr-x 1 root root 3084347 Mar 17 05:19 loopback

-rwxr-xr-x 1 root root 3036768 Mar 17 05:19 host-local

-rwxr-xr-x 1 root root 3550877 Mar 17 05:19 portmap

-rwxr-xr-x 1 root root 2850029 Mar 17 05:19 tuning

root@kubernetesslave1:/opt/cni/bin#
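The events above complain about /var/lib/calico/nodename rather than about the CNI directories, so it may be worth checking that file directly on the worker. The following is only a sketch: the function name calico_nodename_status and its three return values are invented for illustration, and the path is the one quoted in the error message.

```python
from pathlib import Path


def calico_nodename_status(path="/var/lib/calico/nodename"):
    """Report whether the file named in the kubelet error is missing, empty, or present."""
    nodename_file = Path(path)
    if not nodename_file.is_file():
        return "missing"
    if not nodename_file.read_text().strip():
        return "empty"
    return "present"
```

A result of "missing" on the worker is consistent with the error text, which asks to check that the calico/node container is running and has mounted /var/lib/calico/.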

Solution 3

I had this issue with my GKE cluster on GCP with one of my preemptible node pools. Thanks to @mdaniel's tip of checking the integrity of /etc/cni/net.d, I could reproduce the issue by SSHing into a node of a testing cluster with the command gcloud compute ssh <name of some node> --zone <zone-of-cluster> --internal-ip. Then I simply edited the file /etc/cni/net.d/10-gke-ptp.conflist and changed the value in "routes": [ {"dst": "0.0.0.0/0"} ] from 0.0.0.0/0 to 1.0.0.0/0.

After that, I deleted the pods that were running on that node, and they all got stuck in the ContainerCreating status forever, generating kubelet events with the error Failed create pod sandbox: rpc error: code...
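To detect this kind of corruption without waiting for pods to get stuck, something like the following sketch could be run against the contents of a conflist file. It is an illustration under assumptions: the helper names are made up, and the code does not assume a particular file layout beyond the "routes": [ {"dst": ...} ] fragment quoted above, so it searches the whole JSON document for "routes" keys.

```python
import json


def find_route_destinations(node):
    """Recursively collect every "dst" value found under any "routes" key."""
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "routes" and isinstance(value, list):
                found.extend(r["dst"] for r in value if isinstance(r, dict) and "dst" in r)
            else:
                found.extend(find_route_destinations(value))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_route_destinations(item))
    return found


def check_conflist(text, expected_dst="0.0.0.0/0"):
    """Return a list of problems found in the conflist text (empty list if none)."""
    try:
        parsed = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    destinations = find_route_destinations(parsed)
    if expected_dst not in destinations:
        return [f"no route to {expected_dst}; found {destinations}"]
    return []
```

Passing the text of /etc/cni/net.d/10-gke-ptp.conflist to check_conflist would flag the 1.0.0.0/0 edit described above, as well as a file that was truncated into invalid JSON.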

Note that in order to test, I set up my node pool to have a maximum of 1 node. Otherwise it would scale up a new node and the pods would be recreated there. In my production incident the node pool had reached its maximum node count, so limiting my test to 1 node reproduced a similar situation.

Since deleting the node from GKE solved the issue in production, I created a Python script that lists all events on the cluster and filters the ones that contain the keyword "Failed create pod sandbox: rpc error: code". Then I go over those events and get their pods, and from the pods I get the nodes. Finally I loop over the nodes, deleting them from both the Kubernetes API and the Compute API with their respective Python clients. For the Python script I used the libs kubernetes and google-cloud-compute.

This is a simpler version of the script. Test it before using it:

import time

from kubernetes import client, config
from google.cloud.compute_v1.services.instances import InstancesClient

# Placeholders: replace with your own project ID and cluster zone.
PROJECT_ID = "<your-gcp-project-id>"
CLUSTER_ZONE = "<zone-of-cluster>"

# Lowercased substrings to look for in event messages. The kubelet events
# quoted on this page read "Failed create pod sandbox" (without "to"),
# so both spellings are matched.
ERROR_KEYWORDS = [
    'Failed create pod sandbox'.lower(),
    'Failed to create pod sandbox'.lower(),
]

config.load_kube_config()
v1 = client.CoreV1Api()
gcp_client = InstancesClient()

events_result = v1.list_event_for_all_namespaces()

# filter only the events containing ERROR_KEYWORDS
filtered_events = []
for event in events_result.items:
    message = (event.message or '').lower()  # message can be None
    if any(error_keyword in message for error_keyword in ERROR_KEYWORDS):
        filtered_events.append(event)

# gets the list of pods from those events
pods_list = {}
for event in filtered_events:
    try:
        pod = v1.read_namespaced_pod(
            event.involved_object.name,
            namespace=event.involved_object.namespace
        )
    except Exception as e:
        # the pod may already be gone; report and skip it
        print(f'Could not read pod {event.involved_object.name}: {e}')
        continue
    pods_list[event.involved_object.name] = {
        "name": event.involved_object.name,
        "namespace": event.involved_object.namespace,
        "node": pod.spec.node_name
    }

# Get the nodes from those pods (unscheduled pods have no node)
broken_nodes = sorted({
    pod_dict["node"] for pod_dict in pods_list.values() if pod_dict.get("node")
})

# Deletes the nodes from both Kubernetes API and Compute Engine API
if broken_nodes:
    broken_nodes_str = ", ".join(broken_nodes)
    print(f'BROKEN NODES: "{broken_nodes_str}"')
    for node in broken_nodes:
        try:
            v1.delete_node(node)
        except Exception as e:
            print(f'Could not delete node {node} from Kubernetes: {e}')
        time.sleep(30)
        try:
            gcp_client.delete(project=PROJECT_ID, zone=CLUSTER_ZONE, instance=node)
        except Exception as e:
            print(f'Could not delete instance {node} from Compute Engine: {e}')

Solution 4

This problem appeared for me when I added a PVC on AWS EKS.

Updating the aws-node CNI plugin to the latest version resolved it:

https://docs.aws.amazon.com/eks/latest/userguide/cni-upgrades.html

Solution 5

AWS EKS doesn't yet support t3a, m5ad, or r5ad instances.


suboss87

Updated on July 09, 2022

Comments

-

suboss87 almost 2 years

Issue: Redis Pod creation on a k8s (v1.10) cluster is stuck at "ContainerCreating".

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 30m default-scheduler Successfully assigned redis to k8snode02

Normal SuccessfulMountVolume 30m kubelet, k8snode02 MountVolume.SetUp succeeded for volume "default-token-f8tcg"

Warning FailedCreatePodSandBox 5m (x1202 over 30m) kubelet, k8snode02 Failed create pod sandbox: rpc error: code = Unknown desc = NetworkPlugin cni failed to set up pod "redis_default" network: failed to find plugin "loopback" in path [/opt/loopback/bin /opt/cni/bin]

Normal SandboxChanged 47s (x1459 over 30m) kubelet, k8snode02 Pod sandbox changed, it will be killed and re-created.

-
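The "failed to find plugin" message names both the missing binary and the directories that were searched, so it can be turned into a concrete check on the affected Node. The sketch below is illustrative only: the regular expression is written against the message format shown in this question, and the function names are made up for the example.

```python
import re
from pathlib import Path

# Matches e.g.: failed to find plugin "loopback" in path [/opt/loopback/bin /opt/cni/bin]
PLUGIN_ERROR = re.compile(r'failed to find plugin "([^"]+)" in path \[([^\]]*)\]')


def parse_missing_plugin(message):
    """Return (plugin_name, searched_dirs) from a kubelet event message, or None if it does not match."""
    match = PLUGIN_ERROR.search(message)
    if match is None:
        return None
    return match.group(1), match.group(2).split()


def locate_plugin(plugin, search_dirs):
    """Map each searched directory to True if it contains a file named after the plugin."""
    return {directory: (Path(directory) / plugin).is_file() for directory in search_dirs}
```

Running locate_plugin on the Node named in the event (k8snode02 here) shows whether the binary is absent from every searched directory, which is what the error implies.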

mdaniel almost 6 years

-

suboss87 almost 6 years

@MatthewLDaniel Yes, I do have them on the master node.

[root@k8smaster01 k8s]# cd /etc/cni/net.d/

[root@k8smaster01 net.d]# ls

10-flannel.conf

[root@k8smaster01 net.d]# cd /opt/cni/bin

[root@k8smaster01 bin]# ls

bridge dhcp flannel host-local ipvlan loopback macvlan portmap ptp sample tuning vlan

-

mdaniel almost 6 years

Sure, but do you have it on all the Nodes, specifically k8snode02, which doesn't sound like a master Node? An SDN-based cluster requires that all participants are able to use the SDN.

-

suboss87 almost 6 years

I don't find the /opt/cni/bin directory on k8snode02. So how can I get the SDN onto k8snode02? Do I need to copy the CNI bin files, or how else can I fix this?

-

suboss87 almost 6 years

I also see k8snode02 in the "NotReady" state.

NAME STATUS ROLES AGE VERSION

k8smaster01 Ready master 20d v1.10.4

k8snode01 Ready <none> 20d v1.10.4

k8snode02 NotReady <none> 20d v1.10.4

-

mdaniel almost 6 years

Well, how did you get them on your master Node? And did you follow the link I provided, since that repo is nothing but how to distribute those files across your cluster?

-

suboss87 almost 6 years

I see that the CNI files were accidentally removed by other users, and that created this issue. I was finally able to fix it. Thanks a lot for your guidance.

-

-

Bhargav Patel about 5 years

I am facing the same issue. Both paths exist. I am using the calico network.

-

Bhargav Patel about 5 years

The error is: Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "b577ddbdd5fbd6cbe79e5b1bf20648e981590ecd0df545a0158ce909d9179096" network for pod "frontend-784f75ddb7-nbz7t": NetworkPlugin cni failed to set up pod "frontend-784f75ddb7-nbz7t_default" network: stat /var/lib/calico/nodename: no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/

-

mdaniel about 5 years

Don't overlook the "are correctly populated" part. For that /var/lib/calico/nodename error specifically, be careful if you are using a DaemonSet to configure calico: k8s may try to schedule that frontend Pod on the Node before the DaemonSet has finished configuring it. In that specific circumstance, just deleting all the Pods from the Node after calico is successfully configured will cure that sandbox problem.

-
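A possible way to script that cleanup with the kubernetes Python client is sketched below. It is an assumption-laden illustration rather than the commenter's method: the function names are invented, and instead of deleting every Pod on the Node it narrows the selection to Pods still in the Pending phase (the phase a ContainerCreating Pod reports), which is a deliberate, more conservative deviation from the advice above.

```python
def pods_to_recreate(pods, node_name):
    """Pick (namespace, name) pairs for pods on node_name that are still Pending."""
    return [
        (pod["namespace"], pod["name"])
        for pod in pods
        if pod["node"] == node_name and pod["phase"] == "Pending"
    ]


def delete_stuck_pods(node_name):
    """Delete the Pending pods scheduled on node_name so their controllers recreate them."""
    # Imported here so pods_to_recreate stays usable without the client installed.
    from kubernetes import client, config

    config.load_kube_config()
    v1 = client.CoreV1Api()
    listing = v1.list_pod_for_all_namespaces(field_selector=f"spec.nodeName={node_name}")
    pods = [
        {
            "namespace": p.metadata.namespace,
            "name": p.metadata.name,
            "node": p.spec.node_name,
            "phase": p.status.phase,
        }
        for p in listing.items
    ]
    for namespace, name in pods_to_recreate(pods, node_name):
        v1.delete_namespaced_pod(name, namespace)
```

Pods managed by a Deployment or ReplicaSet are recreated automatically after deletion; bare Pods are not, so it is worth reviewing the output of pods_to_recreate before calling delete_stuck_pods.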

Bhargav Patel about 5 years

Thanks for the quick reply ... I had a firewall issue. Now I am getting this warning:

Warning BackOff 2m11s (x153 over 36m) kubelet, truecomtelesoft Back-off restarting failed container

Should I ignore this?

-

Prashanth Sams over 4 years

True, and the instances should also have sufficient capacity; otherwise it doesn't work.

-

Kangqiao Zhao over 3 years

@mdaniel, by "just deleting all the Pods from the Node after calico is successfully configured will cure that sandbox problem", do you mean "all" pods from all the workers, or only from the worker that the frontend pod is on?

-

phyatt almost 3 years

This saved my bacon. Thank you for posting this.

-

Morgan Christiansson almost 3 years

Pretty sure they're supported now. My answer is 2 years old.
