Fastest inline-assembly spinlock

Solution 1

Just look here: x86 spinlock using cmpxchg

And thanks to Cory Nelson

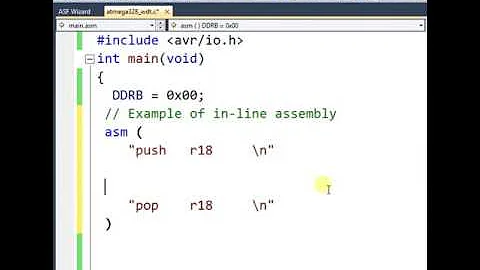

__asm{

spin_lock:

xorl %ecx, %ecx

incl %ecx

spin_lock_retry:

xorl %eax, %eax

lock; cmpxchgl %ecx, (lock_addr)

jnz spin_lock_retry

ret

spin_unlock:

movl $0 (lock_addr)

ret

}

And another source says: http://www.geoffchappell.com/studies/windows/km/cpu/cx8.htm

lock cmpxchg8b qword ptr [esi]

is replaceable with the following sequence

try:

lock bts dword ptr [edi],0

jnb acquired

wait:

test dword ptr [edi],1

je try

pause ; if available

jmp wait

acquired:

cmp eax,[esi]

jne fail

cmp edx,[esi+4]

je exchange

fail:

mov eax,[esi]

mov edx,[esi+4]

jmp done

exchange:

mov [esi],ebx

mov [esi+4],ecx

done:

mov byte ptr [edi],0

And here is a discussion about lock-free vs lock implementations: http://newsgroups.derkeiler.com/Archive/Comp/comp.programming.threads/2011-10/msg00009.html

Solution 2

Although there is already an accepted answer, there are a few things that where missed that could be used to improve all the answers, taken from this Intel article, all above fast lock implementation:

- Spin on a volatile read, not an atomic instruction, this avoids unneeded bus locking, especially on highly contended locks.

- Use back-off for highly contested locks

- Inline the lock, preferably with intrinsics for compilers where inline asm is detrimental (basically MSVC).

Solution 3

I'm usually not one to gripe about someone striving to achieve fast code: it's usually a very good exercise which results in better understanding of programming and faster code.

I won't gripe here either but I can state unequivocally that the question of a fast spinlock 3 instructions long or a few more is - at least on the x86 achitecture - a futile chase.

Here's why:

Invoking a spinlock with a typical code sequence

lock_variable DW 0 ; 0 <=> free

mov ebx,offset lock_variable

mov eax,1

xchg eax,[ebx]

; if eax contains 0 (no one owned it) you own the lock,

; if eax contains 1 (someone already does) you don't

Freeing a spinlock is trivial

mov ebx,offset lock_variable

mov dword ptr [ebx],0

The xchg instruction raises the lock pin on the processor which in effect means I want the bus during the next few clock cycles. This signal weaves its way through the caches and down to the slowest bus-mastering device which is usually the PCI bus. When every busmastering device has completed the locka (lock acknowledge) signal is sent back. Then the actual exchange takes place. The problem is that the lock/locka sequence takes a VERY long time. The PCI bus may run at 33MHz with several cycles of latency. On a 3.3 GHz CPU that means each PCI bus cycle takes one hundred CPU cycles.

As a rule of thumb I assume that a lock will take between 300 and 3000 CPU cycles to complete and in the end I don't know if I'll even own the lock. So the few cycles you can save by a "fast" spinlock will be a mirage because no lock is like the next, it will depend on your bus situation during that short time.

________________EDIT________________

I just read that the spinlock is a "heavily used object." Well, you obviously don't understand that a spinlock consumes an enormous amount of CPU cycles evey time it is invoked. Or, to put it another way, every time you invoke it you lose a significant amount of your processing capability.

The trick when using spinlocks (or their bigger sibling, the critical section) is to use them as sparingly as possible while still achieving the intended program function. Using them all over the place is easy and you'll end up with lackluster performance as a result.

It's not all about writing fast code, it's also about organizing your data. When you write "copying small structures between threads" you should realize that the lock may take hundreds of times longer to complete than the actual copying.

________________EDIT________________

When you compute an average lock time it will probably say very little as it is measured on your machine which may not be the intended target (which may have entirely different bus usage characteristics). For your machine the average will be made up of individual very fast times (when the bus-mastering activities didn't interfere) all the way up to very slow times (when bus-mastering interference was significant).

You could introduce code which determines the fastest and slowest cases and calculate the quotient to see just how greatly the spinlock times can vary.

________________EDIT________________

May 2016 update.

Peter Cordes promoted the idea that "tuning a lock in the un-contended case can make sense" and that lock times of many hundreds of clock cycles do not occur on modern CPUs except in situations where the lock variable is misaligned. I began wondering if my previous test program - written in 32-bit Watcom C - might be hampered by WOW64 as it was running on a 64-bit OS: Windows 7.

So I wrote a 64-bit program and compiled it with TDM's gcc 5.3. The program utilizes the implicitly bus-locking instruction variant "XCHG r,m" for locking and a simple assignment "MOV m,r" for unlocking. In some lock variants the lock variable was pre-tested to determine if it was feasible to even attempt a lock (using a simple compare "CMP r,m", probably not venturing outside L3). Here it is:

// compiler flags used:

// -O1 -m64 -mthreads -mtune=k8 -march=k8 -fwhole-program -freorder-blocks -fschedule-insns -falign-functions=32 -g3 -Wall -c -fmessage-length=0

#define CLASSIC_BUS_LOCK

#define WHILE_PRETEST

//#define SINGLE_THREAD

typedef unsigned char u1;

typedef unsigned short u2;

typedef unsigned long u4;

typedef unsigned int ud;

typedef unsigned long long u8;

typedef signed char i1;

typedef short i2;

typedef long i4;

typedef int id;

typedef long long i8;

typedef float f4;

typedef double f8;

#define usizeof(a) ((ud)sizeof(a))

#define LOOPS 25000000

#include <stdio.h>

#include <windows.h>

#ifndef bool

typedef signed char bool;

#endif

u8 CPU_rdtsc (void)

{

ud tickl, tickh;

__asm__ __volatile__("rdtsc":"=a"(tickl),"=d"(tickh));

return ((u8)tickh << 32)|tickl;

}

volatile u8 bus_lock (volatile u8 * block, u8 value)

{

__asm__ __volatile__( "xchgq %1,%0" : "=r" (value) : "m" (*block), "0" (value) : "memory");

return value;

}

void bus_unlock (volatile u8 * block, u8 value)

{

__asm__ __volatile__( "movq %0,%1" : "=r" (value) : "m" (*block), "0" (value) : "memory");

}

void rfence (void)

{

__asm__ __volatile__( "lfence" : : : "memory");

}

void rwfence (void)

{

__asm__ __volatile__( "mfence" : : : "memory");

}

void wfence (void)

{

__asm__ __volatile__( "sfence" : : : "memory");

}

volatile bool LOCK_spinlockPreTestIfFree (const volatile u8 *lockVariablePointer)

{

return (bool)(*lockVariablePointer == 0ull);

}

volatile bool LOCK_spinlockFailed (volatile u8 *lockVariablePointer)

{

return (bool)(bus_lock (lockVariablePointer, 1ull) != 0ull);

}

void LOCK_spinlockLeave (volatile u8 *lockVariablePointer)

{

*lockVariablePointer = 0ull;

}

static volatile u8 lockVariable = 0ull,

lockCounter = 0ull;

static volatile i8 threadHold = 1;

static u8 tstr[4][32]; /* 32*8=256 bytes for each thread's parameters should result in them residing in different cache lines */

struct LOCKING_THREAD_STRUCTURE

{

u8 numberOfFailures, numberOfPreTests;

f8 clocksPerLock, failuresPerLock, preTestsPerLock;

u8 threadId;

HANDLE threadHandle;

ud idx;

} *lts[4] = {(void *)tstr[0], (void *)tstr[1], (void *)tstr[2], (void *)tstr[3]};

DWORD WINAPI locking_thread (struct LOCKING_THREAD_STRUCTURE *ltsp)

{

ud n = LOOPS;

u8 clockCycles;

SetThreadAffinityMask (ltsp->threadHandle, 1ull<<ltsp->idx);

while (threadHold) {}

clockCycles = CPU_rdtsc ();

while (n)

{

Sleep (0);

#ifdef CLASSIC_BUS_LOCK

while (LOCK_spinlockFailed (&lockVariable)) {++ltsp->numberOfFailures;}

#else

#ifdef WHILE_PRETEST

while (1)

{

do

{

++ltsp->numberOfPreTests;

} while (!LOCK_spinlockPreTestIfFree (&lockVariable));

if (!LOCK_spinlockFailed (&lockVariable)) break;

++ltsp->numberOfFailures;

}

#else

while (1)

{

++ltsp->numberOfPreTests;

if (LOCK_spinlockPreTestIfFree (&lockVariable))

{

if (!LOCK_spinlockFailed (&lockVariable)) break;

++ltsp->numberOfFailures;

}

}

#endif

#endif

++lockCounter;

LOCK_spinlockLeave (&lockVariable);

#ifdef CLASSIC_BUS_LOCK

while (LOCK_spinlockFailed (&lockVariable)) {++ltsp->numberOfFailures;}

#else

#ifdef WHILE_PRETEST

while (1)

{

do

{

++ltsp->numberOfPreTests;

} while (!LOCK_spinlockPreTestIfFree (&lockVariable));

if (!LOCK_spinlockFailed (&lockVariable)) break;

++ltsp->numberOfFailures;

}

#else

while (1)

{

++ltsp->numberOfPreTests;

if (LOCK_spinlockPreTestIfFree (&lockVariable))

{

if (!LOCK_spinlockFailed (&lockVariable)) break;

++ltsp->numberOfFailures;

}

}

#endif

#endif

--lockCounter;

LOCK_spinlockLeave (&lockVariable);

n-=2;

}

clockCycles = CPU_rdtsc ()-clockCycles;

ltsp->clocksPerLock = (f8)clockCycles/ (f8)LOOPS;

ltsp->failuresPerLock = (f8)ltsp->numberOfFailures/(f8)LOOPS;

ltsp->preTestsPerLock = (f8)ltsp->numberOfPreTests/(f8)LOOPS;

//rwfence ();

ltsp->idx = 4u;

ExitThread (0);

return 0;

}

int main (int argc, char *argv[])

{

u8 processAffinityMask, systemAffinityMask;

memset (tstr, 0u, usizeof(tstr));

lts[0]->idx = 3;

lts[1]->idx = 2;

lts[2]->idx = 1;

lts[3]->idx = 0;

GetProcessAffinityMask (GetCurrentProcess(), &processAffinityMask, &systemAffinityMask);

SetPriorityClass (GetCurrentProcess(), HIGH_PRIORITY_CLASS);

SetThreadAffinityMask (GetCurrentThread (), 1ull);

lts[0]->threadHandle = CreateThread (NULL, 65536u, (void *)locking_thread, (void *)lts[0], 0, (void *)<s[0]->threadId);

#ifndef SINGLE_THREAD

lts[1]->threadHandle = CreateThread (NULL, 65536u, (void *)locking_thread, (void *)lts[1], 0, (void *)<s[1]->threadId);

lts[2]->threadHandle = CreateThread (NULL, 65536u, (void *)locking_thread, (void *)lts[2], 0, (void *)<s[2]->threadId);

lts[3]->threadHandle = CreateThread (NULL, 65536u, (void *)locking_thread, (void *)lts[3], 0, (void *)<s[3]->threadId);

#endif

SetThreadAffinityMask (GetCurrentThread (), processAffinityMask);

threadHold = 0;

#ifdef SINGLE_THREAD

while (lts[0]->idx<4u) {Sleep (1);}

#else

while (lts[0]->idx+lts[1]->idx+lts[2]->idx+lts[3]->idx<16u) {Sleep (1);}

#endif

printf ("T0:%1.1f,%1.1f,%1.1f\n", lts[0]->clocksPerLock, lts[0]->failuresPerLock, lts[0]->preTestsPerLock);

printf ("T1:%1.1f,%1.1f,%1.1f\n", lts[1]->clocksPerLock, lts[1]->failuresPerLock, lts[1]->preTestsPerLock);

printf ("T2:%1.1f,%1.1f,%1.1f\n", lts[2]->clocksPerLock, lts[2]->failuresPerLock, lts[2]->preTestsPerLock);

printf ("T3:%1.1f,%1.1f,%1.1f\n", lts[3]->clocksPerLock, lts[3]->failuresPerLock, lts[3]->preTestsPerLock);

printf ("T*:%1.1f,%1.1f,%1.1f\n", (lts[0]->clocksPerLock+ lts[1]->clocksPerLock+ lts[2]->clocksPerLock+ lts[3]->clocksPerLock)/ 4.,

(lts[0]->failuresPerLock+lts[1]->failuresPerLock+lts[2]->failuresPerLock+lts[3]->failuresPerLock)/4.,

(lts[0]->preTestsPerLock+lts[1]->preTestsPerLock+lts[2]->preTestsPerLock+lts[3]->preTestsPerLock)/4.);

printf ("LC:%u\n", (ud)lockCounter);

return 0;

}

The program was run on a DELL i5-4310U based computer with DDR3-800, 2 cores/2 HTs capable of 2.7GHz and a common L3 cache.

To begin with it appears the impact of WOW64 was negligible.

A single thread performing uncontended lock/unlocks was able to do so once every 110 cycles. Tuning the uncontended lock is useless: any code added to enhance the single XCHG instruction will only make it slower.

With four HTs bombarding the lock variable with lock attempts the situation changes radically. The time required to achieve a successful lock jumps to 994 cycles of which a significant part can be attributed to the 2.2 failed lock attempts. To put it another way, in a high-contention situation on average 3.2 locks must be attempted to achieve a successful lock. Obviously the 110 cycles have not become 110*3.2 but closer to 110*9. So other mechanisms are at play here just as with the tests on the older machine. Also, the average 994 cycles encompasses a range between 716 and 1157

The lock variants implementing pre-testing required about 95% of the cycles reuired by the simplest variant (XCHG). On average they would perform 17 CMPs to find it feasible to attempt 1.75 locks of which 1 was successful. I recommend using pre-testing not only because it is faster: it imposes less strain on the bus-locking mechanism (3.2-1.75=1.45 fewer lock attempts) even though it increases the complexity slightly.

Solution 4

Wikipedia has a good article on spinlocks, here is the x86 implementation

http://en.wikipedia.org/wiki/Spinlock#Example_implementation

Notice their implementation doesn't use the "lock" prefix, because it is redundant on x86 for the "xchg" instruction - it implicitly has lock semantics, as discussed in this Stackoverflow discussion:

On a multicore x86, is a LOCK necessary as a prefix to XCHG?

The REP:NOP is an alias for the PAUSE instruction, you can learn more about that here

How does x86 pause instruction work in spinlock *and* can it be used in other scenarios?

On the issue of memory barriers, here's everything you might want to know

Memory Barriers: a Hardware View for Software Hackers by Paul E. McKenney

http://irl.cs.ucla.edu/~yingdi/paperreading/whymb.2010.06.07c.pdf

Related videos on Youtube

sigvardsen

Updated on July 11, 2022Comments

-

sigvardsen almost 2 years

I'm writing a multithreaded application in c++, where performance is critical. I need to use a lot of locking while copying small structures between threads, for this I have chosen to use spinlocks.

I have done some research and speed testing on this and I found that most implementations are roughly equally fast:

- Microsofts CRITICAL_SECTION, with SpinCount set to 1000, scores about 140 time units

- Implementing this algorithm with Microsofts InterlockedCompareExchange scores about 95 time units

- Ive also tried to use some inline assembly with

__asm {}using something like this code and it scores about 70 time units, but I am not sure that a proper memory barrier has been created.

Edit: The times given here are the time it takes for 2 threads to lock and unlock the spinlock 1,000,000 times.

I know this isn't a lot of difference but as a spinlock is a heavily used object, one would think that programmers would have agreed on the fastest possible way to make a spinlock. Googling it leads to many different approaches however. I would think this aforementioned method would be the fastest if implemented using inline assembly and using the instruction

CMPXCHG8Binstead of comparing 32bit registers. Furthermore memory barriers must be taken into account, this could be done by LOCK CMPXHG8B (I think?), which guarantees "exclusive rights" to the shared memory between cores. At last [some suggests] that for busy waits should be accompanied by NOP:REP that would enable Hyper-threading processors to switch to another thread, but I am not sure whether this is true or not?From my performance-test of different spinlocks, it is seen that there is not much difference, but for purely academic purpose I would like to know which one is fastest. However as I have extremely limited experience in the assembly-language and with memory barriers, I would be happy if someone could write the assembly code for the last example I provided with LOCK CMPXCHG8B and proper memory barriers in the following template:

__asm { spin_lock: ;locking code. spin_unlock: ;unlocking code. }-

huseyin tugrul buyukisik over 11 years+1 for giving good sources and info before asking. i think you gave more than you need. thx

-

Wug over 11 yearsHow much exactly is a lot? It would need to be an awful damn lot to worry about how fast you can spin. You're sure there is no better way you can restrict access here? Remember also that the speed you're spinning at doesn't affect when you actually acquire the lock. It doesn't matter how fast you're spinning, the other guy has to unlock it first. Consider looping over a yield() to pass execution to another running thread or process if it turns out that you're going to be spinning for a long time.

-

harold over 11 years

rep nopakapausealso makes P4 not do retarded things when you leave the spin loop. Intel's manual explicitly recommends its use in spin-wait loops. Are you allowed to useXACQUIREandXRELEASE(not available until Haswell)? -

sigvardsen over 11 years@Wug The time given in the performance tests, are the time it takes 2 threads to simultanously lock, copy 4 ints(to add realism) and unlock spinlock maybe 10000000 times (I don't the source code on this computer). The time units given does not give any information about how many loops have been run.

-

PlasmaHH over 11 yearsWhen you want performance, use lock free/contention free data structures on your fast path, and only locks on your slow path

-

sigvardsen over 11 years@PlasmaHH I know that you can copy data but only up to 32bit on 32bit machines and 64bit on 64bit machines with microsoft interlocked operations. But I am not aware that you can do atomic reads and writes on structures larger than the above given examples? Could you point me to some reading/literature or examples? Can it be done in assembly?

-

GManNickG over 11 years@sigvardsen: If you explained more of what you're doing, we may be able to give alternative solutions.

GManNickG over 11 years@sigvardsen: If you explained more of what you're doing, we may be able to give alternative solutions. -

sigvardsen over 11 years@GManNickG I need to copy a structure that consists of 8 ints = 256 bits from one thread to another - atomicly ofcourse.

-

harold over 11 years@sigvardsen what if you atomically copy a pointer to that data?

-

GManNickG over 11 years@sigvardsen: You're missing the point. Why are you doing that? What problem are you solving?

GManNickG over 11 years@sigvardsen: You're missing the point. Why are you doing that? What problem are you solving? -

Sedat Kapanoglu over 11 yearsI must say that it's hard to believe that an algorithm's bottleneck is performance of spinlocks. Maybe you are overusing them? Maybe you don't need to lock that much? Maybe improving the algorithm design will make this optimization completely unnecessary? Maybe you can sacrifice more memory for the sake of performance? Of course it's perfectly possible that you could have finally ended up with shitty spinlocks but as a rule of thumb: "it's always your fault". codinghorror.com/blog/2008/03/…

-

sigvardsen over 11 years@ssg As I mention in my question i realize that all these implementations of spinlock are almost equally fast. But I rose this question merely of acedemic interest. It is good practice to implement the best algorithm although it is unneccesary.

-

Gunther Piez over 11 yearsMemory barriers have nothing to do with "exclusive rights". It's about memory ordering, make sure to read the Intel docs about the memory ordering on x86, which makes barriers uneccessary for most cases.

-

Sedat Kapanoglu over 11 years@sigvardsen Ok I missed the "purely academic purpose" part, my mistake :)

-

PlasmaHH over 11 years@sigvardsen: on x86_64 you have 16byte atomic swaps, these are usually enough for almost all lock free data structures. Lots of those structures even work (partly) without lock prefixes, just using the fact that for properly aligned things read/writes are atomic, sometimes depending on memory barriers too. There is really lots of material to read on the nets.

-

dave over 11 yearsOf course one thread has to lock/unlock the spin lock. Or else it won't protect anything. And there's no reason why a thread will acquire the lock, be descheduled and then unlock for each time slice.

-

sigvardsen over 11 yearsWhere would you place the PAUSE (NOP:REP) instruction? inside the loop or before?

-

huseyin tugrul buyukisik over 11 yearsin the place of "pause" at the "wait" part. But for older cpu s as far as i know

-

VF1 over 10 yearsIn the code you linked to the Wikipedia implementation, why does the instruction to set the lock value in memory to

0have to be atomic (usingxchginstruction)? It would seem that even if another thread accesses the memory location before it's set to0, all it would see is that the lock is still locked - wouldn't a directmovto the memory location work too? -

amdn over 10 yearsYes, a mov works on new x86 processors but not on some older ones, that is explained in the next section titled "Significant Optimizations"

amdn over 10 yearsYes, a mov works on new x86 processors but not on some older ones, that is explained in the next section titled "Significant Optimizations"On later implementations of the x86 architecture, spin_unlock can safely use an unlocked MOV instead of the slower locked XCHG. This is due to subtle memory ordering rules which support this, even though MOV is not a full memory barrier. However, some processors (some Cyrix processors, some revisions of the Intel Pentium Pro (due to bugs), and earlier Pentium and i486 SMP systems) will do the wrong thing and data protected by the lock could be corrupted. -

VF1 over 10 yearsThanks for pointing that out. If you don't mind me asking, what is the problem avoided by these "subtle memory ordering rules?"

-

amdn over 10 yearsX86 memory ordering requires that stores by one thread be seen by other threads in the same order, which means there is no way another thread can acquire the lock and not see stores done in the critical section before the lock was released. See section (4) of this paper spinroot.com/spin/Doc/course/x86_tso.pdf

amdn over 10 yearsX86 memory ordering requires that stores by one thread be seen by other threads in the same order, which means there is no way another thread can acquire the lock and not see stores done in the critical section before the lock was released. See section (4) of this paper spinroot.com/spin/Doc/course/x86_tso.pdf -

amdn over 10 yearsBy the way, I found that the fact a MOV is sufficient (no serialization or memory barrier required) was "officially" clarified in a reply to Linus Torvalds by an Intel architect back in 1999 lkml.org/lkml/1999/11/24/90 I guess it was later discovered that didn't work for some older x86 processors.

amdn over 10 yearsBy the way, I found that the fact a MOV is sufficient (no serialization or memory barrier required) was "officially" clarified in a reply to Linus Torvalds by an Intel architect back in 1999 lkml.org/lkml/1999/11/24/90 I guess it was later discovered that didn't work for some older x86 processors. -

Peter Cordes about 8 years

Peter Cordes about 8 yearsxchgwith a memory operand, and otherlocked instructions, aren't anywhere near that slow on modern x86 CPUs. They're coherent with cache, but not with DMA, so they don't have to wait for a DRAM access. (And definitely not a PCI bus cycle, unless maybe you use it on a a memory-mapped-IO address!). e.g. on Sandybridge (which was current in 2012),xchgwith memory has a latency of 25 cycles (on a cache hit), according to Agner Fog's tables. If the current core has the cache line in the Exclusive state, then the atomic RMW is easy, and it's the memory-barrier part that takes the time. -

Peter Cordes about 8 yearsWorst-case for

Peter Cordes about 8 yearsWorst-case forxchgis if another core has the cache line in Modified or Exclusive state withlocked instructions in flight, or maybe if no core has that line cached, so it has to read from main memory. Note that it doesn't have to write back to main memory; you'll end up with the cache line in the M state in the current core's L1. -

Olof Forshell about 8 years@Peter Cordes: So why does AF write - on the bottom of page 1 of instruction_tables.pdf - "A LOCK prefix typically costs more than a hundred clock cycles, even on a single processor system. This also applies to the XCHG instruction with a memory operand."? I guess Agner Fog could be considered being both right and wrong ... which is it?

-

Peter Cordes about 8 yearsBest-case is about 25c for an un-contended

Peter Cordes about 8 yearsBest-case is about 25c for an un-contendedxchgwith a cache-line that's already hot in L1, but typical case of 100c sounds reasonable when you consider that it orders other stores with loads, and you probably won't use it when there's no contention. I just timed a tiny loop myself, and even on a CPU as old as Merom/Conroe Core2, I got one per ~25c throughput. (Multiplexchgto different addresses still can't parallelize, because of the memory-barrier effect). Anyway, it definitely doesn't have to do PCIe bus cycles when used on normal memory regions. -

Peter Cordes about 8 yearsBig picture: tuning a lock to be fast in the un-contended case can make sense, because that can be quite low overhead. This happens in the Linux kernel quite frequently, IIRC: the common case is no contention, but locking is still needed.

Peter Cordes about 8 yearsBig picture: tuning a lock to be fast in the un-contended case can make sense, because that can be quite low overhead. This happens in the Linux kernel quite frequently, IIRC: the common case is no contention, but locking is still needed. -

Olof Forshell about 8 yearsYou are sure about this? You have personal experience? I do stackoverflow.com/questions/4912467/…

-

Peter Cordes about 8 yearsInteresting numbers, thanks for the link. No, I don't have personal experience with tuning/measuring locking overhead. Your answer there proves that

Peter Cordes about 8 yearsInteresting numbers, thanks for the link. No, I don't have personal experience with tuning/measuring locking overhead. Your answer there proves thatxchgisn't as slow as it would be if it had to interact with the PCI bus, though. You're also testing a loop that's completely bottlenecked on lock throughput, so there's no other work in the pipeline for the CPU to be doing while thexchgis happening. I think, but haven't tested, thatxchgpipelines pretty well, so in real code that has lots of work to do besides locking, the uncontended-case overhead isn't terrible. -

Olof Forshell about 8 yearsThe most efficient locks are the ones you manage to avoid - if you're skilled enough. Newbies and people who don't understand them will think they are without significant cost and use them much more frequently than others in the same way that most people writing multi-threaded will end up with a slower application. In the end, your code might have to send the LOCK signal out on the bus and wait for the slowest bus-mastering device to return the LOCKA to say that the lock is in place. Or do you claim such situations cannot occur? What if your lock variable has been evicted from the cache?

-

Peter Cordes about 8 yearsOn Intel Core2 (E6600), a

Peter Cordes about 8 yearsOn Intel Core2 (E6600), aclflush [rsp-128]/xchg [rsp-128], eaxloop is the same speed as aclfush [rsp-128]/add [rsp-128], eaxloop. (~410c per iteration at 2.4GHz, with DDR533 DRAM.clflushalone runs ~86c per iteration.) So the implicitlockin thexchgdoesn't make the round trip to DRAM any worse. It's obviously still extremely expensive in this scenario. I'm not trying to claim that locking in general is cheap, and your point that good design to avoid locking is a great one. -

Peter Cordes about 8 yearsI don't think locked insns have to wait for PCI devices. x86 has cache-coherent DMA. This may be a recent thing, enabled by putting the memory controller on the CPU, making it easier for the cache to snoop DMA. I'd remove my downvote, and maybe upvote, if you took out (or provide evidence for) that part about external buses.

Peter Cordes about 8 yearsI don't think locked insns have to wait for PCI devices. x86 has cache-coherent DMA. This may be a recent thing, enabled by putting the memory controller on the CPU, making it easier for the cache to snoop DMA. I'd remove my downvote, and maybe upvote, if you took out (or provide evidence for) that part about external buses. -

Olof Forshell about 8 yearsMy answer from 2011 was excuted on a dual-core processor released in September, 2009. That processor had two L2 caches - one for each core and no (common) L3. The example with two locking threads would have required lots of round trips to RAM to perform a loop (read or read+write to lock, read+write to update num, write to unlock). A successful loop completion would have caused the other thread's cached information to become invalidated, forcing it to start from scratch. I will atempt to run the code on a system with an L3.

-

Peter Cordes about 8 yearsMy single-threaded clflush tests were on an even older CPU (first-gen Core2: large per-core L2 caches), since my Sandybridge motherboard failed and I haven't got a replacement yet. My prediction is lower access times because it's only a round trip to L3 instead of DRAM when the other thread has invalidated the cache line in the first core's L1/L2.

Peter Cordes about 8 yearsMy single-threaded clflush tests were on an even older CPU (first-gen Core2: large per-core L2 caches), since my Sandybridge motherboard failed and I haven't got a replacement yet. My prediction is lower access times because it's only a round trip to L3 instead of DRAM when the other thread has invalidated the cache line in the first core's L1/L2. -

Olof Forshell about 8 yearsThis document support.amd.com/TechDocs/47414_15h_sw_opt_guide.pdf shows the unlikely case where an xchg instruction will result in a bus lock on a modern architecture. Published in 2014 it appears to still be in effect.

-

Peter Cordes about 8 yearsAre you talking about section 11.1.4? They say "In some hardware implementations", which I think means they're talking about CPUs other than AMD Family 15h (Bulldozer-family). It's possible that even Bulldozer falls back to a bus lock for

Peter Cordes about 8 yearsAre you talking about section 11.1.4? They say "In some hardware implementations", which I think means they're talking about CPUs other than AMD Family 15h (Bulldozer-family). It's possible that even Bulldozer falls back to a bus lock forlocked insn that span two cache lines. Thanks for digging that up; interesting note. It almost certainly won't happen on an unaligned op that doesn't cross any cache-line boundaries, though. Bulldozer has efficient unaligned access support for AVX and integer ops, so they probably manage to avoid any horrible fallbacks for unaligned within a cache line. -

Olof Forshell about 8 yearsIt's not the op that needs to be aligned, it's the lock variable itself. In any case i hesitate to categorize the xchg (w lock) as an op like any other: it has specific, system-affecting properties that an ordinary integer op does not.

-

Olof Forshell about 8 yearsAbout your test (4 messages up) did you try it with two threads tied to separate cores? My test was two threads on two cores (affinity), 32-bit code running on 64-bit W7.

-

Peter Cordes about 8 yearsNo, I didn't try with two threads. I was intentionally trying a different approach to measuring main memory latency, but I guess it would be more useful to have contended-lock numbers from the same hardware. re: alignment of the lock: yes, that's what I meant. i.e. When people say "aligned load", they mean a load from an aligned address. So "aligned op" means a locked operation on an aligned address, i.e. the lock variable is aligned. My guess (which could easily be wrong) about unaligned lock vars that don't cross cache lines is based on the idea that the CPU probably locks a whole line.

Peter Cordes about 8 yearsNo, I didn't try with two threads. I was intentionally trying a different approach to measuring main memory latency, but I guess it would be more useful to have contended-lock numbers from the same hardware. re: alignment of the lock: yes, that's what I meant. i.e. When people say "aligned load", they mean a load from an aligned address. So "aligned op" means a locked operation on an aligned address, i.e. the lock variable is aligned. My guess (which could easily be wrong) about unaligned lock vars that don't cross cache lines is based on the idea that the CPU probably locks a whole line. -

Olof Forshell almost 8 yearsThe individual cores are not aware of cache lines. The cache mechanism loads cache lines with memory requested by the core to mitigate the slow response time of RAM. On today's x86s a cache line is 64-bytes long aligned onto an even 64-byte RAM address. Even if you only want the 43rd byte in a cache line the mechanism loads all 64 bytes. If a location in a cache line is modified the mechanism writes that cache line to memory. In the same manner the core is unaware that exchange has an explicit lock: it executes the instruction, wait states are inserted and finally the core sees the data.

-

Peter Cordes almost 8 yearsPrivate L1 cache is part each core. AMD's optimization guide which you linked earlier (pg 202: 11.1.4 ... "Cache Line Boundary") explicitly refers to a core locking its own cache instead of taking a bus lock. They say "naturally aligned", but the title of the section says "cache line boundary". Also, what part of the core doesn't need to know that

Peter Cordes almost 8 yearsPrivate L1 cache is part each core. AMD's optimization guide which you linked earlier (pg 202: 11.1.4 ... "Cache Line Boundary") explicitly refers to a core locking its own cache instead of taking a bus lock. They say "naturally aligned", but the title of the section says "cache line boundary". Also, what part of the core doesn't need to know thatxchghas an implicit lock? It has to trigger a memory barrier (like MFENCE) as well itself being atomic. Your description sounds like what might happen on a simple in-order CPU. -

Peter Cordes almost 8 yearsNice update, interesting numbers. However, you're only measuring locking throughput, not the impact of locking in a workload that has lots of non-locking work in the pipeline. That's the case where I was suggesting it's worth avoiding slowdowns in the un-contended case. It's similar to measuring

Peter Cordes almost 8 yearsNice update, interesting numbers. However, you're only measuring locking throughput, not the impact of locking in a workload that has lots of non-locking work in the pipeline. That's the case where I was suggesting it's worth avoiding slowdowns in the un-contended case. It's similar to measuringdivpsslowness with a loop that only runsdivps, vs. a loop that does many dozens ofaddps/mulpsoperations and onedivps. As long as there is instruction-level parallelism available,divpsshould have low impact on total throughput, since it's just a single port0 uop. -

Peter Cordes almost 8 yearsObviously

Peter Cordes almost 8 yearsObviouslyxchghas much more impact on the pipeline thandivps, even when it's not part of the critical path dependency chain. But it can still potentially overlap with other instructions. Certainly with non-memory operations. -

Peter Cordes almost 8 yearsThis implementation spins on a

Peter Cordes almost 8 yearsThis implementation spins on alock cmpxchgrather than an atomic load before trying the exchange. (See Necropolis's answer).pauseavoids a memory-ordering mis-speculation when you spin on a load instead of alocked instruction. See my answer on another question for a simple/minimal asm spinlock implementation that I think avoids any obvious problems. -

Olof Forshell almost 8 yearsThe OP still wrote: "I need to use a lot of locking while copying small structures between threads, for this I have chosen to use spinlocks". Although my code shows it is possible for each of four cores to achieve 2.7 million locks/unlocks per second it is not inconceivable that the OP uses so many of them that his multi-threaded code would be better off single-threaded without locks.

-

Olof Forshell almost 8 yearsPlease cut the theoretical argumentation. I've presented real numbers on real machines and you poke at them because you think this or that reasoning is or isn't logical because you have read something somewhere. Do some experimentation of your own instead of guessing. You can start by using my program. It shows the examples I have tested so you don't have to ask me what I have or haven't tested.

-

Goaler444 about 7 yearsDoes this also work for 64-bit? Why does it solely say x86 spinlock?

Goaler444 about 7 yearsDoes this also work for 64-bit? Why does it solely say x86 spinlock? -

huseyin tugrul buyukisik about 7 years@Goaler444 x86 meaning general architecture name and should also work for x86_64 too with 64bit lock_addr using cmpxchgq instead of cmpxchgl

-

Gukki5 over 3 yearsone of the best and most thorough answers i’ve ever read thank you!

Gukki5 over 3 yearsone of the best and most thorough answers i’ve ever read thank you! -

Gukki5 over 3 yearsTLDR; sell your soul to 👹 before ever using any lock of any kind no matter how’s it’s implemented, unless you absolutely MUST.

Gukki5 over 3 yearsTLDR; sell your soul to 👹 before ever using any lock of any kind no matter how’s it’s implemented, unless you absolutely MUST. -

Peter Cordes about 3 years@OlofForshell: I finally got around to testing my claim about uncontended

Peter Cordes about 3 years@OlofForshell: I finally got around to testing my claim about uncontendedxchgscaling well, i.e. if each thread it taking / releasing a different lock (in a different cache line). godbolt.org/z/MEjboaqMf is C++ code that compiles to a loop that just repeats anxchg [rdi], ecx. The total runtime of the program on my i7-6700k is 463ms whether it runs on 1 or 4 threads (with differentatomic<int>objects), so that rules out any kind of system-wide bus lock, confirming that it's just a cache-lock (Can num++ be atomic for 'int num'?) -

Peter Cordes about 3 yearsOf course threads contending for the same lock slow each other down, and everything you say about minimizing locking in general is good, but the one thing that's not horribly slow is for a thread to release and re-acquire a lock if no other threads want it.

Peter Cordes about 3 yearsOf course threads contending for the same lock slow each other down, and everything you say about minimizing locking in general is good, but the one thing that's not horribly slow is for a thread to release and re-acquire a lock if no other threads want it. -

Peter Cordes about 3 yearsI had earlier suggested that it would be better to check read-only to see if the lock is available before even trying the first time. That's probably not good, and could lead to a share request to get read-only access before an RFO to get exclusive ownership. See discussion in Does cmpxchg write destination cache line on failure? If not, is it better than xchg for spinlock? - spinning read-only is good after you've already failed once, but probably not the first time.

Peter Cordes about 3 yearsI had earlier suggested that it would be better to check read-only to see if the lock is available before even trying the first time. That's probably not good, and could lead to a share request to get read-only access before an RFO to get exclusive ownership. See discussion in Does cmpxchg write destination cache line on failure? If not, is it better than xchg for spinlock? - spinning read-only is good after you've already failed once, but probably not the first time. -

Olof Forshell about 3 yearsI do think you need to time them. I suggest you should at the very least see really fast uncontended locks, "less fast" locks that have been slowed by system-wide bus contention and "slow" times where an interrupt might have occurred. If every run requires 463 milliseconds it could be as you say or it could be that system-wide contention is rare which could be the case with infrequent bus mastering events. The interrupt loading looks fairly constant. If you time events individually you will either see complete homogenity or you won't, in which case you need to present hypotheses as to why.

-

Olof Forshell about 3 yearsLocks can be directly contended by other threads wanting them or indirectly by a system wide bus contention which has nothing to do with the lock itself, it simply says that the bus cannot vouch for a lock at this moment in time being 100% safe due to devices that may overwrite the memory containing the lock if they aren't first allowed to finish what they are doing. This may sound ludicrous but from a system view the bus lock mechanism cannot and should not be privy to what the executing software is doing - it is simply an extremely blunt and costly but absolutely necessary mechanism.

-

Peter Cordes about 3 yearsDidn't see your comments since you didn't @user address them to me. A core can perform a "cache lock" to do an atomic RMW any time it has MESI exclusive ownership of a cache line. This exclusive ownership is the same thing that's required to commit normal stores to L1d cache, so from an outside perspective there's no visible sign (on any bus outside a CPU core) that a core did an atomic RMW on an aligned object in normal cacheable memory. Only for cache-line split and maybe also for uncacheable memory would a bus lock be necessary.

Peter Cordes about 3 yearsDidn't see your comments since you didn't @user address them to me. A core can perform a "cache lock" to do an atomic RMW any time it has MESI exclusive ownership of a cache line. This exclusive ownership is the same thing that's required to commit normal stores to L1d cache, so from an outside perspective there's no visible sign (on any bus outside a CPU core) that a core did an atomic RMW on an aligned object in normal cacheable memory. Only for cache-line split and maybe also for uncacheable memory would a bus lock be necessary. -

Peter Cordes about 3 yearsOf course, if a core doesn't already have exclusive ownership of a cache line (e.g. after some other core just unlocked it), getting exclusive ownership will take maybe 40 to 70 nanoseconds (hundreds of cycles) , depending on the interconnect between cores. I think that's what you're describing as "less fast" locks; normally no "bus lock" is involved. x86 has cache-coherent DMA; bus-mastering by a device writing to a physical address doesn't invalidate cache lines other than one the device is writing to. Bus lock is only needed for rare stuff like line-split locks.

Peter Cordes about 3 yearsOf course, if a core doesn't already have exclusive ownership of a cache line (e.g. after some other core just unlocked it), getting exclusive ownership will take maybe 40 to 70 nanoseconds (hundreds of cycles) , depending on the interconnect between cores. I think that's what you're describing as "less fast" locks; normally no "bus lock" is involved. x86 has cache-coherent DMA; bus-mastering by a device writing to a physical address doesn't invalidate cache lines other than one the device is writing to. Bus lock is only needed for rare stuff like line-split locks.

![Slipknot - Psychosocial [OFFICIAL VIDEO]](https://i.ytimg.com/vi/5abamRO41fE/hq720.jpg?sqp=-oaymwEXCNAFEJQDSFryq4qpAwkIARUAAIhCGAE=&rs=AOn4CLCsxFAEnZLRc4RuGAiiZWFE93-HIA)