Fastest way to find lines of a file from another larger file in Bash

Solution 1

A small piece of Perl code solved the problem. This is the approach taken:

- store the lines of

file1.txtin a hash - read

file2.txtline by line, parse and extract the second field - check if the extracted field is in the hash; if so, print the line

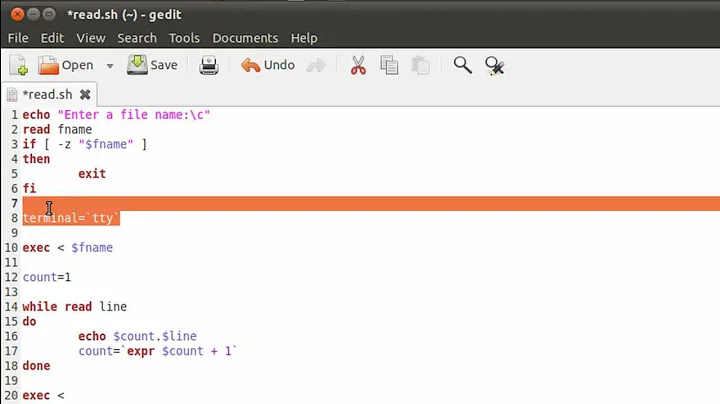

Here is the code:

#!/usr/bin/perl -w

use strict;

if (scalar(@ARGV) != 2) {

printf STDERR "Usage: fgrep.pl smallfile bigfile\n";

exit(2);

}

my ($small_file, $big_file) = ($ARGV[0], $ARGV[1]);

my ($small_fp, $big_fp, %small_hash, $field);

open($small_fp, "<", $small_file) || die "Can't open $small_file: " . $!;

open($big_fp, "<", $big_file) || die "Can't open $big_file: " . $!;

# store contents of small file in a hash

while (<$small_fp>) {

chomp;

$small_hash{$_} = undef;

}

close($small_fp);

# loop through big file and find matches

while (<$big_fp>) {

# no need for chomp

$field = (split(/\|/, $_))[1];

if (defined($field) && exists($small_hash{$field})) {

printf("%s", $_);

}

}

close($big_fp);

exit(0);

I ran the above script with 14K lines in file1.txt and 1.3M lines in file2.txt. It finished in about 13 seconds, producing 126K matches. Here is the time output for the same:

real 0m11.694s

user 0m11.507s

sys 0m0.174s

I ran @Inian's awk code:

awk 'FNR==NR{hash[$1]; next}{for (i in hash) if (match($0,i)) {print; break}}' file1.txt FS='|' file2.txt

It was way slower than the Perl solution, since it is looping 14K times for each line in file2.txt - which is really expensive. It aborted after processing 592K records of file2.txt and producing 40K matched lines. Here is how long it took:

awk: illegal primary in regular expression 24/Nov/2016||592989 at 592989

input record number 675280, file file2.txt

source line number 1

real 55m5.539s

user 54m53.080s

sys 0m5.095s

Using @Inian's other awk solution, which eliminates the looping issue:

time awk -F '|' 'FNR==NR{hash[$1]; next}$2 in hash' file1.txt FS='|' file2.txt > awk1.out

real 0m39.966s

user 0m37.916s

sys 0m0.743s

time LC_ALL=C awk -F '|' 'FNR==NR{hash[$1]; next}$2 in hash' file1.txt FS='|' file2.txt > awk.out

real 0m41.057s

user 0m38.475s

sys 0m0.904s

awk is very impressive here, given that we didn't have to write an entire program to do it.

I ran @oliv's Python code as well. It took about 15 hours to complete the job, and looked like it produced the right results. Building a huge regex isn't as efficient as using a hash lookup. Here the time output:

real 895m14.862s

user 806m59.219s

sys 1m12.147s

I tried to follow the suggestion to use parallel. However, it failed with fgrep: memory exhausted error, even with very small block sizes.

What surprised me was that fgrep was totally unsuitable for this. I aborted it after 22 hours and it produced about 100K matches. I wish fgrep had an option to force the content of -f file to be kept in a hash, just like what the Perl code did.

I didn't check join approach - I didn't want the additional overhead of sorting the files. Also, given fgrep's poor performance, I don't believe join would have done better than the Perl code.

Thanks everyone for your attention and responses.

Solution 2

A Perl solution. [See Note below.]

Use a hash for the first file. As you read the big file line-by-line, extract the field by regex (captures the first pattern between ||) or split (gets the second word) and print if it exists. They likely differ in speed a bit (time them). The defined check isn't needed in the regex while for split use // (defined-or) that short-circuits.

use warnings;

use strict;

# If 'prog smallfile bigfile' is the preferred use

die "Usage: $0 smallfile bigfile\n" if @ARGV != 2;

my ($smallfile, $bigfile) = @ARGV;

open my $fh, '<', $smallfile or die "Can't open $smallfile: $!";

my %word = map { chomp; $_ => 1 } <$fh>;

open $fh, '<', $bigfile or die "Can't open $bigfile: $!";

while (<$fh>)

{

exists $word{ (/\|([^|]+)/)[0] } && print;

# Or

#exists $word{ (split /\|/)[1] // '' } && print;

}

close $fh;

Avoiding the if branch and using short-circuit is faster, but only very little. On billions of lines these tweaks add up but again not to too much. It may (or may not) be a tad bit faster to read the small file line by line, instead of in list context as above, but this should not be noticeable.

Update Writing to STDOUT saves two operations and I repeatedly time it to be a little faster than writing to a file. Such usage is also consistent with most UNIX tools so I changed to write to STDOUT. Next, the exists test is not needed and dropping it spares an operation. However, I consistently get a touch better runtimes with it, while it also conveys the purpose better. Altogether I am leaving it in. Thanks to ikegami for comments.

Note The commented out version is about 50% faster than the other, by my benchmark below. These are both given because they are different, one finding the first match and the other the second field. I am keeping it this way as a more generic choice, since the question is ambiguous on that.

Some comparisons (benchmark) [Updated for writing to STDOUT, see "Update" above]

There is an extensive analysis in the answer by HåkonHægland, timing one run of most solutions. Here is another take, benchmarking the two solutions above, the OP's own answer, and the posted fgrep one, expected to be fast and used in the question and in many answers.

I build test data in the following way. A handful of lines of the length roughly as shown are made with random words, for both files, so to match in the second field. Then I pad this "seed" for data samples with lines that won't match, so to mimic ratios between sizes and matches quoted by OP: for 14K lines in small file there are 1.3M lines in the big file, yielding 126K matches. Then these samples are written repeatedly to build full data files as OP's, List::Util::shuffle-ed each time.

All runs compared below produce 106_120 matches for the above file sizes (diff-ed for a check), so the matching frequency is close enough.

They are benchmarked by calling complete programs using my $res = timethese(60 ...). The result of cmpthese($res) on v5.16 are

Rate regex cfor split fgrep

regex 1.05/s -- -23% -35% -44%

cfor 1.36/s 30% -- -16% -28%

split 1.62/s 54% 19% -- -14%

fgrep 1.89/s 80% 39% 17% --

The fact that the optimized C-program fgrep comes on top isn't surprising. The lag of "regex" behind "split" may be due to the overhead of starting the engine for little matches, many times. This may vary over Perl versions, given the evolving regex engine optimizations. I include the answer of @codeforester ("cfor") since it was claimed to be fastest, and its 24% lag behind the very similar "split" is likely due to scattered small inefficiencies (see a comment below this answer).†

This isn't shatteringly different, while there are sure variations across hardware and software and over data details. I ran this on different Perls and machines, and the notable difference is that in some cases fgrep was indeed an order of magnitude faster.

The OP's experience of very slow fgrep is surprising. Given their quoted run times, order of magnitude slower than the above, I'd guess that there's an old system to "blame."

Even though this is completely I/O based there are concurrency benefits from putting it on multiple cores and I'd expect a good speedup, up to a factor of a few.

† Alas, the comment got deleted (?). In short: unneeded use of a scalar (costs), of an if branch, of defined, of printf instead of print (slow!). These matter for runtime on 2 billion lines.

Solution 3

I have tried to do a comparison between some of the methods presented here.

First I created a Perl script to generate the input files file1.txt and file2.txt. In order to compare some of the solutions, I made sure that the the words from file1.txt only could appear in the second field in file2.txt. Also to be able to use the join solution presented by @GeorgeVasiliou, I sorted file1.txt and file2.txt. Currently I generated the input files based on only 75 random words ( taken from https://www.randomlists.com/random-words ). Only 5 of these 75 words was used in file1.txt the remaining 70 words was used to fill up the fields in file2.txt. It might be necessary to increase the number of words substantially to get realistic results ( according to the OP, the original file1.txt contained 14000 words). In the tests below I used a file2.txt with 1000000 ( 1 million ) lines. The script also generates the file regexp1.txt required by the grep solution of @BOC.

gen_input_files.pl:

#! /usr/bin/env perl

use feature qw(say);

use strict;

use warnings;

use Data::Printer;

use Getopt::Long;

GetOptions ("num_lines=i" => \my $nlines )

or die("Error in command line arguments\n");

# Generated random words from site: https://www.randomlists.com/random-words

my $word_filename = 'words.txt'; # 75 random words

my $num_match_words = 5;

my $num_file2_lines = $nlines || 1_000_000;

my $file2_words_per_line = 3;

my $file2_match_field_no = 2;

my $file1_filename = 'file1.txt';

my $file2_filename = 'file2.txt';

my $file1_regex_fn = 'regexp1.txt';

say "generating $num_file2_lines lines..";

my ( $words1, $words2 ) = get_words( $word_filename, $num_match_words );

write_file1( $file1_filename, $words2 );

write_file2(

$file2_filename, $words1, $words2, $num_file2_lines,

$file2_words_per_line, $file2_match_field_no

);

write_BOC_regexp_file( $file1_regex_fn, $words2 );

sub write_BOC_regexp_file {

my ( $fn, $words ) = @_;

open( my $fh, '>', $fn ) or die "Could not open file '$fn': $!";

print $fh '\\|' . (join "|", @$words) . '\\|';

close $fh;

}

sub write_file2 {

my ( $fn, $words1, $words2, $nlines, $words_per_line, $field_no ) = @_;

my $nwords1 = scalar @$words1;

my $nwords2 = scalar @$words2;

my @lines;

for (1..$nlines) {

my @words_line;

my $key;

for (1..$words_per_line) {

my $word;

if ( $_ != $field_no ) {

my $index = int (rand $nwords1);

$word = @{ $words1 }[$index];

}

else {

my $index = int (rand($nwords1 + $nwords2) );

if ( $index < $nwords2 ) {

$word = @{ $words2 }[$index];

}

else {

$word = @{ $words1 }[$index - $nwords2];

}

$key = $word;

}

push @words_line, $word;

}

push @lines, [$key, (join "|", @words_line)];

}

@lines = map { $_->[1] } sort { $a->[0] cmp $b->[0] } @lines;

open( my $fh, '>', $fn ) or die "Could not open file '$fn': $!";

print $fh (join "\n", @lines);

close $fh;

}

sub write_file1 {

my ( $fn, $words ) = @_;

open( my $fh, '>', $fn ) or die "Could not open file '$fn': $!";

print $fh (join "\n", sort @$words);

close $fh;

}

sub get_words {

my ( $fn, $N ) = @_;

open( my $fh, '<', $fn ) or die "Could not open file '$fn': $!";

my @words = map {chomp $_; $_} <$fh>;

close $fh;

my @words1 = @words[$N..$#words];

my @words2 = @words[0..($N - 1)];

return ( \@words1, \@words2 );

}

Next, I created a sub folder solutions with all the test cases:

$ tree solutions/

solutions/

├── BOC1

│ ├── out.txt

│ └── run.sh

├── BOC2

│ ├── out.txt

│ └── run.sh

├── codeforester

│ ├── out.txt

│ ├── run.pl

│ └── run.sh

[...]

Here the files out.txt is the output from the greps for each solution. The scripts run.sh runs the solution for the given test case.

Notes on the different solutions

-

BOC1: First solution presented by @BOCgrep -E -f regexp1.txt file2.txt -

BOC2: Second solution suggested by @BOC:LC_ALL=C grep -E -f regexp1.txt file2.txt codeforester: Accepted Perl solution by @codeforester ( see source )-

codeforester_orig: Original solution presented by @codeforested:fgrep -f file1.txt file2.txt dawg: Python solution using dictionary and split line proposed by @dawg ( see source )-

gregory1: solution using Gnu Parallel suggested by @gregoryparallel -k --pipepart -a file2.txt --block "$block_size" fgrep -F -f file1.txtSee note below regarding how to choose

$block_size. hakon1: Perl solution provided by @HåkonHægland (see source). This solution requires compilation of the c-extension the first time the code is run. It does not require recompilation whenfile1.txtorfile2.txtchanges. Note: The time used to compile the c-extension at the initial run is not included in the run times presented below.-

ikegami: Solution using assembled regexp and usinggrep -Pas given by @ikegami. Note: The assembled regexp was written to a separate fileregexp_ikegami.txt, so the runtime of generating the regexp is not included in the comparison below. This is the code used:regexp=$(< "regexp_ikegami.txt") grep -P "$regexp" file2.txt -

inian1: First solution by @Inian usingmatch()awk 'FNR==NR{ hash[$1]; next } { for (i in hash) if (match($0,i)) {print; break} }' file1.txt FS='|' file2.txt -

inian2: Second solution by @Inian usingindex()awk 'FNR==NR{ hash[$1]; next } { for (i in hash) if (index($0,i)) {print; break} }' file1.txt FS='|' file2.txt -

inian3: Third solution by @Inian checking only$2field:awk 'FNR==NR{ hash[$1]; next } $2 in hash' file1.txt FS='|' file2.txt -

inian4: 4th soultion by @Inian ( basically the same ascodeforester_origwithLC_ALL) :LC_ALL=C fgrep -f file1.txt file2.txt -

inian5: 5th solution by @Inian (same asinian1but withLC_ALL):LC_ALL=C awk 'FNR==NR{ hash[$1]; next } { for (i in hash) if (match($0,i)) {print; break} }' file1.txt FS='|' file2.txt inian6: Same asinian3but withLC_ALL=C. Thanks to @GeorgeVasiliou for suggestion.jjoao: Compiled flex-generated C code as proposed by @JJoao (see source ). Note: Recompilation of the exectuable must be done each timefile1.txtchanges. The time used to compile the executable is not included in the run times presented below.oliv: Python script provided by @oliv ( see source )-

Vasiliou: Usingjoinas suggested by @GeorgeVasiliou:join --nocheck-order -11 -22 -t'|' -o 2.1 2.2 2.3 file1.txt file2.txt Vasiliou2: Same asVasilioubut withLC_ALL=C.zdim: Using Perl script provided by @zdim ( see source ). Note: This uses the regexp search version ( instead of split line solution ).zdim2: Same aszdimexcept that it uses thesplitfunction instead of regexp search for the field infile2.txt.

Notes

I experimented a little bit with Gnu parallel (see

gregory1solution above) to determine the optimal block size for my CPU. I have 4 cores, and and currently it seems that the optimal choice is to devide the file (file2.txt) into 4 equal sized chunks, and run a single job on each of the 4 processors. More testing might be needed here. So for the first test case wherefile2.txtis 20M, I set$block_sizeto 5M ( seegregory1solution above), whereas for the more realistic case presented below wherefile2.txtis 268M, a$block_sizeof 67M was used.-

The solutions

BOC1,BOC2,codeforester_orig,inian1,inian4,inian5, andgregory1all used loose matching. Meaning that the words fromfile1.txtdid not have to match exactly in field #2 offile2.txt. A match anywhere on the line was accepted. Since this behavior made it more difficult to compare them with the other methods, some modified methods were also introduced. The first two methods calledBOC1BandBOC2Bused a modifiedregexp1.txtfile. The lines in the originalregexp1.txtwhere on the form\|foo1|foo2|...|fooN\|which would match the words at any field boundary. The modified file,regexp1b.txt, anchored the match to field #2 exclusively using the form^[^|]*\|foo1|foo2|...|fooN\|instead.Then the rest of the modified methods

codeforester_origB,inian1B,inian4B,inian5B, andgregory1Bused a modifiedfile1.txt. Instead of a literal word per line, the modified filefile1b.txtused one regex per line on the form:^[^|]*\|word1\| ^[^|]*\|word2\| ^[^|]*\|word3\| [...]and in addition,

fgrep -fwas replaced bygrep -E -ffor these methods.

Running the tests

Here is the script used for running all the tests. It uses the Bash time command to record the time spent for each script. Note that the time command returns three different times call real, user, and sys. First I used user + sys, but realized that this was incorrect when using Gnu parallel command, so the time reported below is now the real part returned by time. See this question for more information about the different times returned by time.

The first test is run with file1.txt containing 5 lines, and file2.txt containing 1000000 lines. Here is the first 52 lines of the run_all.pl script, the rest of the script is available here.

run_all.pl

#! /usr/bin/env perl

use feature qw(say);

use strict;

use warnings;

use Cwd;

use Getopt::Long;

use Data::Printer;

use FGB::Common;

use List::Util qw(max shuffle);

use Number::Bytes::Human qw(format_bytes);

use Sys::Info;

GetOptions (

"verbose" => \my $verbose,

"check" => \my $check,

"single-case=s" => \my $case,

"expected=i" => \my $expected_no_lines,

) or die("Error in command line arguments\n");

my $test_dir = 'solutions';

my $output_file = 'out.txt';

my $wc_expected = $expected_no_lines; # expected number of output lines

my $tests = get_test_names( $test_dir, $case );

my $file2_size = get_file2_size();

my $num_cpus = Sys::Info->new()->device( CPU => () )->count;

chdir $test_dir;

my $cmd = 'run.sh';

my @times;

for my $case (@$tests) {

my $savedir = getcwd();

chdir $case;

say "Running '$case'..";

my $arg = get_cmd_args( $case, $file2_size, $num_cpus );

my $output = `bash -c "{ time -p $cmd $arg; } 2>&1"`;

my ($user, $sys, $real ) = get_run_times( $output );

print_timings( $user, $sys, $real ) if $verbose;

check_output_is_ok( $output_file, $wc_expected, $verbose, $check );

print "\n" if $verbose;

push @times, $real;

#push @times, $user + $sys; # this is wrong when using Gnu parallel

chdir $savedir;

}

say "Done.\n";

print_summary( $tests, \@times );

Results

Here is the output from running the tests:

$ run_all.pl --verbose

Running 'inian3'..

..finished in 0.45 seconds ( user: 0.44, sys: 0.00 )

..no of output lines: 66711

Running 'inian2'..

..finished in 0.73 seconds ( user: 0.73, sys: 0.00 )

..no of output lines: 66711

Running 'Vasiliou'..

..finished in 0.09 seconds ( user: 0.08, sys: 0.00 )

..no of output lines: 66711

Running 'codeforester_orig'..

..finished in 0.05 seconds ( user: 0.05, sys: 0.00 )

..no of output lines: 66711

Running 'codeforester'..

..finished in 0.45 seconds ( user: 0.44, sys: 0.01 )

..no of output lines: 66711

[...]

Summary

[Results obtained by @Vasiliou are shown in the middle column.]

|Vasiliou

My Benchmark |Results | Details

-------------------------------|---------|----------------------

inian4 : 0.04s |0.22s | LC_ALL fgrep -f [loose]

codeforester_orig : 0.05s | | fgrep -f [loose]

Vasiliou2 : 0.06s |0.16s | [LC_ALL join [requires sorted files]]

BOC1 : 0.06s | | grep -E [loose]

BOC2 : 0.07s |15s | LC_ALL grep -E [loose]

BOC2B : 0.07s | | LC_ALL grep -E [strict]

inian4B : 0.08s | | LC_ALL grep -E -f [strict]

Vasiliou : 0.08s |0.23s | [join [requires sorted files]]

gregory1B : 0.08s | | [parallel + grep -E -f [strict]]

ikegami : 0.1s | | grep -P

gregory1 : 0.11s |0.5s | [parallel + fgrep -f [loose]]

hakon1 : 0.14s | | [perl + c]

BOC1B : 0.14s | | grep -E [strict]

jjoao : 0.21s | | [compiled flex generated c code]

inian6 : 0.26s |0.7s | [LC_ALL awk + split+dict]

codeforester_origB : 0.28s | | grep -E -f [strict]

dawg : 0.35s | | [python + split+dict]

inian3 : 0.44s |1.1s | [awk + split+dict]

zdim2 : 0.4s | | [perl + split+dict]

codeforester : 0.45s | | [perl + split+dict]

oliv : 0.5s | | [python + compiled regex + re.search()]

zdim : 0.61s | | [perl + regexp+dict]

inian2 : 0.73s |1.7s | [awk + index($0,i)]

inian5 : 18.12s | | [LC_ALL awk + match($0,i) [loose]]

inian1 : 19.46s | | [awk + match($0,i) [loose]]

inian5B : 42.27s | | [LC_ALL awk + match($0,i) [strict]]

inian1B : 85.67s | | [awk + match($0,i) [strict]]

Vasiliou Results : 2 X CPU Intel 2 Duo T6570 @ 2.10GHz - 2Gb RAM-Debian Testing 64bit- kernel 4.9.0.1 - no cpu freq scaling.

A more realistic test case

I then created a more realistic case with file1.txt having 100 words and file2.txt having 10 million lines (268Mb file size). I extracted 1000 random words from the dictionary at /usr/share/dict/american-english using shuf -n1000 /usr/share/dict/american-english > words.txt then extracted 100 of these words into file1.txt and then constructed file2.txt the same way as described above for the first test case. Note that the dictionary file was UTF-8 encoded, and I stripped away all non-ASCII characters from the words.txt.

Then I run the test without the three slowest methods from the previous case. I.e. inian1, inian2, and inian5 were left out. Here are the new results:

gregory1 : 0.86s | [parallel + fgrep -f [loose]]

Vasiliou2 : 0.94s | [LC_ALL join [requires sorted files]]

inian4B : 1.12s | LC_ALL grep -E -f [strict]

BOC2B : 1.13s | LC_ALL grep -E [strict]

BOC2 : 1.15s | LC_ALL grep -E [loose]

BOC1 : 1.18s | grep -E [loose]

ikegami : 1.33s | grep -P

Vasiliou : 1.37s | [join [requires sorted files]]

hakon1 : 1.44s | [perl + c]

inian4 : 2.18s | LC_ALL fgrep -f [loose]

codeforester_orig : 2.2s | fgrep -f [loose]

inian6 : 2.82s | [LC_ALL awk + split+dict]

jjoao : 3.09s | [compiled flex generated c code]

dawg : 3.54s | [python + split+dict]

zdim2 : 4.21s | [perl + split+dict]

codeforester : 4.67s | [perl + split+dict]

inian3 : 5.52s | [awk + split+dict]

zdim : 6.55s | [perl + regexp+dict]

gregory1B : 45.36s | [parallel + grep -E -f [strict]]

oliv : 60.35s | [python + compiled regex + re.search()]

BOC1B : 74.71s | grep -E [strict]

codeforester_origB : 75.52s | grep -E -f [strict]

Note

The grep based solutions were looking for a match on the whole line, so in this case they contained some false matches: the methods codeforester_orig, BOC1, BOC2, gregory1, inian4, and oliv extracted 1,087,609 lines out of 10,000,000 lines, whereas the other methods extracted the correct 997,993 lines from file2.txt.

Notes

I tested this on my Ubuntu 16.10 laptop (Intel Core i7-7500U CPU @ 2.70GHz)

The whole benchmark study is available here.

Solution 4

Did you try Awk that could speed up things a bit:

awk 'FNR==NR{hash[$1]; next}{for (i in hash) if (match($0,i)) {print; break}}' file1.txt FS='|' file2.txt

(or) using index() function in Awk as suggested by comments from Benjamin W., below

awk 'FNR==NR{hash[$1]; next}{for (i in hash) if (index($0,i)) {print; break}}' file1.txt FS='|' file2.txt

(or) a more direct regex match as suggested by Ed Morton in comments,

awk 'FNR==NR{hash[$1]; next}{for (i in hash) if ($0~i) {print; break}}' file1.txt FS='|' file2.txt

is all you need. I'm guessing this will be faster but not exactly sure on files with million+ entries. Here the problem is with the possibility match in anywhere along the line. Had the same been in any particular column (e.g. say $2 alone), a faster approach could be

awk 'FNR==NR{hash[$1]; next}$2 in hash' file1.txt FS='|' file2.txt

Also you could speed-things up by playing with the locale set in your system. Paraphrasing from this wonderful Stéphane Chazelas's answer on the subject, you could speed up things pretty quickly by setting passing the locale LC_ALL=C to the command locally being run.

On any GNU based system, the defaults for the locale

$ locale

LANG=en_US.UTF-8

LC_CTYPE="en_US.UTF-8"

LC_NUMERIC="en_US.UTF-8"

LC_TIME="en_US.UTF-8"

LC_COLLATE="en_US.UTF-8"

LC_MONETARY="en_US.UTF-8"

LC_MESSAGES="en_US.UTF-8"

LC_PAPER="en_US.UTF-8"

LC_NAME="en_US.UTF-8"

LC_ADDRESS="en_US.UTF-8"

LC_TELEPHONE="en_US.UTF-8"

LC_MEASUREMENT="en_US.UTF-8"

LC_IDENTIFICATION="en_US.UTF-8"

LC_ALL=

With one variable LC_ALL, you can set all LC_ type variables at once to a specified locale

$ LC_ALL=C locale

LANG=en_US.UTF-8

LC_CTYPE="C"

LC_NUMERIC="C"

LC_TIME="C"

LC_COLLATE="C"

LC_MONETARY="C"

LC_MESSAGES="C"

LC_PAPER="C"

LC_NAME="C"

LC_ADDRESS="C"

LC_TELEPHONE="C"

LC_MEASUREMENT="C"

LC_IDENTIFICATION="C"

LC_ALL=C

So what does this impact?

Simply put, when using the locale C it will default to the server's base Unix/Linux language of ASCII. Basically when you grep something, by default your locale is going to be internationalized and set to UTF-8, which can represent every character in the Unicode character set to help display any of the world's writing systems, currently over more than 110,000 unique characters, whereas with ASCII each character is encoded in a single byte sequence and its character set comprises of no longer than 128 unique characters.

So it translates to this, when using grep on a file encoded in UTF-8 character-set, it needs to match each character with any of the hundred thousand unique characters, but just 128 in ASCII, so use your fgrep as

LC_ALL=C fgrep -F -f file1.txt file2.txt

Also, the same can be adapted to the Awk, since it uses a regex match with the match($0,i) call, setting the C locale could speed up the string match.

LC_ALL=C awk 'FNR==NR{hash[$1]; next}{for (i in hash) if (match($0,i)) {print; break}}' file1.txt FS='|' file2.txt

Solution 5

Assumptions: 1. You want to run this search on just your local workstation. 2. Your have multiple cores/cpus to take advantage of a parallel search.

parallel --pipepart -a file2.txt --block 10M fgrep -F -f file1.txt

Some further tweaks depending on the context: A. Disable NLS with LANG=C (this is mentioned already in another answer) B. Set a max number of matches with the -m flag.

Note: I'm guessing that file2 is ~4GB and the 10M block size is ok, but you may need to optimize the block size to get the fastest run.

Related videos on Youtube

codeforester

Scripting and automation enthusiast. Fun fact: Code is the reason for bugs. More code = more bugs! Write reusable code!

Updated on June 20, 2022Comments

-

codeforester almost 2 years

codeforester almost 2 yearsI have two files,

file1.txtandfile2.txt.file1.txthas about 14K lines andfile2.txthas about 2 billions.file1.txthas a single fieldf1per line whilefile2.txthas 3 fields,f1throughf3, delimited by|.I want to find all lines from

file2.txtwheref1offile1.txtmatchesf2offile2.txt(or anywhere on the line if we don't want to spend extra time splitting the values offile2.txt).file1.txt (about 14K lines, not sorted):

foo1 foo2 ... bar1 bar2 ...file2.txt (about 2 billion lines, not sorted):

date1|foo1|number1 date2|foo2|number2 ... date1|bar1|number1 date2|bar2|number2 ...Output expected:

date1|foo1|number1 date2|foo2|number2 ... date1|bar1|number1 date2|bar2|number2 ...Here is what I have tried and it seems to be taking several hours to run:

fgrep -F -f file1.txt file2.txt > file.matchedI wonder if there is a better and faster way of doing this operation with the common Unix commands or with a small script.

-

Inian about 7 yearsYou could let us know the timing of the below solutions on your actual input.

Inian about 7 yearsYou could let us know the timing of the below solutions on your actual input. -

codeforester about 7 years@Inian - I will definitely do that. Waiting for more answers.

codeforester about 7 years@Inian - I will definitely do that. Waiting for more answers. -

Inian about 7 yearsUpdated my answer for matching anywhere in the line, but I am guessing it is going to be tad slower when matching in

Inian about 7 yearsUpdated my answer for matching anywhere in the line, but I am guessing it is going to be tad slower when matching in$2alone. -

Mark Reed about 7 yearsI would be not shocked, but somewhat surprised, if you found something significantly faster than

fgrepfor this task. A two-billion-line text file is not going to be fast to process no matter what.. -

Jose Ricardo Bustos M. about 7 yearsAre the data somehow ordered? are

Jose Ricardo Bustos M. about 7 yearsAre the data somehow ordered? areC-based solutions available? -

CAB about 7 yearsSee accepted solution for Fastest possible grep

-

codeforester about 7 years@JoseRicardoBustosM. - the data is not ordered. Just updated the question.

codeforester about 7 years@JoseRicardoBustosM. - the data is not ordered. Just updated the question. -

gregory about 7 yearshow many cores/cpus does the machine you're running this on have? And, are you open to running this search on multiple servers?

gregory about 7 yearshow many cores/cpus does the machine you're running this on have? And, are you open to running this search on multiple servers? -

codeforester about 7 years@gregory - this is for my work laptop - MBP with a single CPU and 4 cores. Multiple servers not preferred.

codeforester about 7 years@gregory - this is for my work laptop - MBP with a single CPU and 4 cores. Multiple servers not preferred. -

Jim D. about 7 yearsIf you want to benchmark the solutions, it will be necessary to drop the file system cache to fairly compare. See, e.g. this.

Jim D. about 7 yearsIf you want to benchmark the solutions, it will be necessary to drop the file system cache to fairly compare. See, e.g. this. -

Håkon Hægland about 7 yearsI am a little bit confused. You say that you can match either field no 2 exactly or anywhere on the line, but then the first solution (matching exactly field no 2) is clearly more restrictive in general. For example, given the word

Håkon Hægland about 7 yearsI am a little bit confused. You say that you can match either field no 2 exactly or anywhere on the line, but then the first solution (matching exactly field no 2) is clearly more restrictive in general. For example, given the wordfoo1, the first solution will not matchfoo1|date|number1, whereas the second solution (match anywhere on the line) will accept this as a match. So which method are you actually going to use for your problem? -

codeforester about 7 years@HåkonHægland - I should have stated it more clearly - yes, I do need to match the second field, not the entire line. It especially make sense for the hash based solution.

codeforester about 7 years@HåkonHægland - I should have stated it more clearly - yes, I do need to match the second field, not the entire line. It especially make sense for the hash based solution. -

Håkon Hægland about 7 years@codeforester It would be nice if you could upload

Håkon Hægland about 7 years@codeforester It would be nice if you could uploadfile1.txtto for example pastebin.com and provide a link to the file. Then we could try to test codes on at least some real data :) -

Ed Morton about 7 years@codeforester please make up your mind and edit your question to state clearly if you want to match the f1 value in just the 2nd field of f2 or anywhere in the line in f2. Don't say "I'd like X but Y would be OK too" - just state your requirement, X or Y. Also state if a partial match is considered a match ( e.g. does

fooin f1 matchfoobarin f2?) and if you're looking for a regexp or string match (e.g. doesf.*rin f1 matchfoobarin f2?).

-

-

George Vasiliou about 7 years@codeforester Obviously this solution is only vaild with your previous version of the question : pattern in file 1 to match field 2 of file 2. It would be interesting to see the timing results also, even if we compare only the seconf field.

-

linuxfan says Reinstate Monica about 7 yearsThe note about locale is really good. I don't know if it works (I suppose yes), but simply having thought about it is worth.

linuxfan says Reinstate Monica about 7 yearsThe note about locale is really good. I don't know if it works (I suppose yes), but simply having thought about it is worth. -

Benjamin W. about 7 years

Benjamin W. about 7 yearsindexmight be faster thanmatch. -

codeforester about 7 yearsWhy do we need to create the huge regex from patterns array?

codeforester about 7 yearsWhy do we need to create the huge regex from patterns array? -

codeforester about 7 years@Inian - thank you for your effort. I ran your

codeforester about 7 years@Inian - thank you for your effort. I ran yourawkcode and it terminated with an error after 55 minutes. I realized that the big file has nulls in column2 and awk didn't like it. It produced 40K matches - I expected 126K matches for the 1.3M lines in big file. Obviously, 55 minutes is too much, that too for the partial job. I feelawkis simply not suited for file sizes like this. -

Inian about 7 years@codeforester: Thanks for trying the same,

Inian about 7 years@codeforester: Thanks for trying the same,big file has nulls in column2is a new requirement, I can modify the answer for it. But on a billion line file, no shell tool can scale smoothly. Did you try thefgrepwithLC_ALL=Clocale? And can you let me know whhich one you tried? I had multiple solutions withmatch(),index() -

codeforester about 7 yearsI ran this over a 1.3M lines file2.txt. It has been running for more than 3.5 hours and so far has produced 86K results (of the 126K expected matches). Definitely not optimal.

codeforester about 7 yearsI ran this over a 1.3M lines file2.txt. It has been running for more than 3.5 hours and so far has produced 86K results (of the 126K expected matches). Definitely not optimal. -

codeforester about 7 yearsThanks @Inian. I shall try that one as well. I am going to publish the detailed results before the bounty closes.

codeforester about 7 yearsThanks @Inian. I shall try that one as well. I am going to publish the detailed results before the bounty closes. -

Inian about 7 years@codeforester: Do try anything except this

Inian about 7 years@codeforester: Do try anything except thisawk 'FNR==NR{hash[$1]; next}$2 in hash' file1.txt FS='|' file2.txt -

codeforester about 7 yearsYou are almost on the right track! There is no need to load the second (bigger) file into the hash. Please see my solution below.

codeforester about 7 yearsYou are almost on the right track! There is no need to load the second (bigger) file into the hash. Please see my solution below. -

Inian about 7 years@codeforester: Is there a reason for why this was down-vote?

Inian about 7 years@codeforester: Is there a reason for why this was down-vote? -

codeforester about 7 years@Inian - I down voted because the

codeforester about 7 years@Inian - I down voted because theawksolution is definitely unsuitable for this requirement. I knewawkis generally not good when big files are involved, and this example kind of settled it. I also down voted the python solution and left a comment for the Perl solution. -

User9102d82 about 7 yearsYes, You are right. Could have done that, actually should have done that but anyways, how much time did this one take?

-

codeforester about 7 yearsI didn't run it since I knew it is not at all optimal because of the memory consumption. The

codeforester about 7 yearsI didn't run it since I knew it is not at all optimal because of the memory consumption. Thefgrepcommand earlier had made my MPB's fan go crazy and hence didn't have the courage to try this one! -

Inian about 7 years@codeforester: To be honest, there is no definitive answer for the question. People can only do code-golf ( the best among the other answers using least resources), I did my best to solve it, as the OP your feedback of downvoting is not a healthy approach here as there is no 100% solution for this. Do appreciate people for the time they are spending to answer your problem.

Inian about 7 years@codeforester: To be honest, there is no definitive answer for the question. People can only do code-golf ( the best among the other answers using least resources), I did my best to solve it, as the OP your feedback of downvoting is not a healthy approach here as there is no 100% solution for this. Do appreciate people for the time they are spending to answer your problem. -

Inian about 7 yearsAnd claiming

Inian about 7 yearsAnd claimingAwk is not good for big filesis not correct. see lyness.io/… -

George Vasiliou about 7 yearsNice testing. I'm surprised that Join solution could achieve good results. Also notice that join solution should require sort before to work - Please re-check. Moreover i think that you missed codeforester accepted solution (perl code) , and is quite strange that zdim perl solution is much slower than grep while OP claims the opposite (and we tend to believe him :) )

-

Håkon Hægland about 7 years@GeorgeVasiliou Thanks for the comments! I was also a bit surprised over the results. Note that

Håkon Hægland about 7 years@GeorgeVasiliou Thanks for the comments! I was also a bit surprised over the results. Note thatfile1.txtandfile2.txtare in fact sorted. I uploaded everything to GitHub here. Please try run the code on your machine and report back. Just dogit clone https://github.com/hakonhagland/fast-grep-bench.gitand then runrun_case.sh 75w-5w-1Mwto run exactly the same case as I did :) -

gregory about 7 yearsYour "fgrep: memory exhausted" is surprising; I know you're using a MBP, so I assume you're using the BSD grep version. Can you try gnu grep with the -F flag? (can be installed with homebrew and run with gfgrep).

gregory about 7 yearsYour "fgrep: memory exhausted" is surprising; I know you're using a MBP, so I assume you're using the BSD grep version. Can you try gnu grep with the -F flag? (can be installed with homebrew and run with gfgrep). -

gregory about 7 yearsI believe agrep uses a hash of the patterns when using -f. You might want to give that a try; it too is installable using homebrew.

gregory about 7 yearsI believe agrep uses a hash of the patterns when using -f. You might want to give that a try; it too is installable using homebrew. -

Håkon Hægland about 7 years@GeorgeVasiliou Updated answer to include the accepted Perl solution by codeforester. Sorry I forgot it.

Håkon Hægland about 7 years@GeorgeVasiliou Updated answer to include the accepted Perl solution by codeforester. Sorry I forgot it. -

Inian about 7 years@codeforester: Appreciate your feedback, any requirement if you have.

Inian about 7 years@codeforester: Appreciate your feedback, any requirement if you have. -

Ed Morton about 7 yearsMake sure your input contains things you do not want to match. For example if you ONLY want to do exact string matches on the 2nd field of file2, then include

fooandf.*oin file1 plusa|foobar|bandfoo|a|bin file2 and so on. It's always trivial to write tools that match on the things you do want but then harder to exclude the things you don't want so the only way to really test a solution is to put effort into creating test cases that include the things you could imagine might match but should not (typically partial, regexp, or wrong-part-of-line matches). -

Ed Morton about 7 years@codeforester wrt

awk solution is definitely unsuitable for this requirement. I knew awk is generally not good when big files are involved,- what are you talking about? Awk is well suited for your question and is excellent when big files are involved since it's designed and highly optimized to ONLY to text manipulation. -

Ed Morton about 7 years@Inian

awk 'FNR==NR{hash[$1]; next}$2 in hash' file1.txt FS='|' file2.txtis THE awk solution for a full string match on field2. Where you wrotematch(i,$0)ITYMmatch($0,i)but that's needlessly calling a function (and populating RSTART and RLENGTH) vs just$0~i. The match() and index() versions wouldn't be useful anyway though as awk is splitting each line of file2 into fields and so cannot be faster thangreporgrep -Ffor a regexp or string partial match on the whole line. -

Inian about 7 years@EdMorton: As ever incorporated your valuable comments.

Inian about 7 years@EdMorton: As ever incorporated your valuable comments. -

George Vasiliou about 7 yearsI downloaded your small files (file1 5 words - file2 1M lines)and i run some of the solutions directly. Some results: 1.

Inian3 C localle awk hash - time 0.7 secs - 1.1 secs without C locale-2.Inian4 - C localle fgrep , time 0.22 secs-3.gregory1 parallel, time 0.5 secs-4.BOC2 grep -E wih C localle , time 15 sec- 5.inian2 - awk index , time 1.7 sec-6.join solution without sorting 0.23 secs. All results redirected to file3.txt - matches were 66711 for all cases. 2 X CPU Intel 2 Duo T6570 @ 2.10GHz - 2Gb RAM-Debian Testing 64bit- kernel 4.9.0.1 - no cpu freq scaling. -

codeforester about 7 years@EdMorton: Thanks for chiming in. I wonder why

codeforester about 7 years@EdMorton: Thanks for chiming in. I wonder whyawkis so slow in this case. The Perl script took just a few seconds andawkdidn't complete in several hours. -

Håkon Hægland about 7 years@GeorgeVasiliou Thanks for the results! One question:

Håkon Hægland about 7 years@GeorgeVasiliou Thanks for the results! One question:BOC2 15sshould it be 1.5s or 0.15s instead of 15s? -

Håkon Hægland about 7 years@GeorgeVasiliou Updated answer with your results, and added

Håkon Hægland about 7 years@GeorgeVasiliou Updated answer with your results, and addedinian6method (same asinian3but withLC_ALL=C). Thanks for the suggestion, it seemsinian6is siginificantly faster thaninian3. -

George Vasiliou about 7 years@HåkonHægland For BOC2 , i did the test several times and it was 15 seconds indeed. It is not a typo error. I don't know why

fgrep -Fis fast as hell andgrep -Eis that slow. Same conditions, same machine. I can not explain it. -

George Vasiliou about 7 years@HåkonHægland Since this post tends to become a future benchmark reference, i would suggest to include in your main answer the direct location of the RAW file1 and file2.txt (both small files and large files). I had to search a lot in your github to locate the correct files to wget them. By providing raw links , anybody can wget the files and make a simple test with

time(no need to get your whole comparison script). I did all tests using# time <solution>- for example# time LC_ALL=C fgrep -F -f file1.txt file2.txt >file3.txt -

George Vasiliou about 7 yearsOne last comment to it whom it may concern: has been proved in one of my small amateur projects[1] that grep can be 100% faster by disabling the cpu frequency scaling. In some machines (like one of mine) cpu freq scaling is enabled by default with

powersaveoptions. By changing cpu scaling to "performace" or by manually applying max cpu freq regular grep finished the job in 50% less time than previous "powersave" governor. @codeforester you might also want to check this in your machine. [1]: codereview.stackexchange.com/questions/146383/… -

Ed Morton about 7 years@codeforester please don't say

awk is so slow, etc. - its a specific script you ran that was slow. What was that script? There is no way that the right awk script ('FNR==NR{hash[$1]; next}$2 in hash') would take more than a few seconds for the task you described. Also did your input contain the rainy day cases I suggested so you can tell if the 2 scripts handle those correctly? I mean I have no idea since idk which scripts you ran but the perl script might be fast because it doesn't actually handle rainy day cases. -

Ed Morton about 7 yearsI wish you'd included regexp metacharacters in file1 so we could filter out solutions that use regexp instead of string comparisons. Also do you know if any of the words from file1 were partial matches for words in field2 of file2 (e.g.

foovsfoobar)? You should have some of those to again filter out non-solutions. Finally - did you do 3rd-iteration timing to remove the effects of cacheing? -

ikegami about 7 yearsHalf of the solutions you benchmarked don't even work! The following fail to handle

file1.txtfoo1file2.txtdate1|foo12|number5: BOC1, BOC2, codeforester_orig, gregory1, inian2, inian4, oliv -

ikegami about 7 yearsOne less op if you change

exists $word{ (/\|([^|]+)/)[0] }to$word{ (/\|([^|]+)/)[0] }. There's no reason not to print to STDOUT, which removes another two ops. -

ikegami about 7 years1)

defined($field)should be unnecessary. If it is necessary, consider just turning off "uninitialized" warnings instead. 2)exists($small_hash{$field})can be replaced with the (ever so slightly) faster$small_hash{$field}if you assigned1instead ofundefas the hash values. 3)printf("%s", $_);is a slow version ofprint;. -

zdim about 7 years@ikegami Thank you for comments -- they touch on things I wasn't sure about. (1) Without

existsit fetches (and returns) the value. I thought thatexists(somehow) avoids that (while it is one function call more)? (2) Is there no overhead to printing 'out' and having shell redirect? I have compared (timed) these in the past and never found direct writes to file to be faster, but I don't understand it. -

zdim about 7 years@ikegami For whatever it's worth, complete comparison: 1.61/s (with

exists, write to file), 1.62/s (withexists, write to stdout, best), 1.56/s (withoutexists, write to file), 1.57/s (withoutexists, write to stdout). It appears (in this one benchmark on this system) thatexistshelps (a tiny bit) while the direct write is slower than writing to stdout (I do not understand this). -

Håkon Hægland about 7 yearsI interpreted the OP's question as: "second field (of file2.txt) should be equal to one of the words in file1.txt". If so, we have a match. So then you are right, many of the solutions can give wrong results. I also mentioned that in my answer: For the large test case in my answer, many of the methods indeed gave false matches.

Håkon Hægland about 7 yearsI interpreted the OP's question as: "second field (of file2.txt) should be equal to one of the words in file1.txt". If so, we have a match. So then you are right, many of the solutions can give wrong results. I also mentioned that in my answer: For the large test case in my answer, many of the methods indeed gave false matches. -

codeforester about 7 years@EdMorton: I stand corrected. @Inian's solution

codeforester about 7 years@EdMorton: I stand corrected. @Inian's solutionawk 'FNR==NR{hash[$1]; next}$2 in hash' file1.txt FS='|' file2.txtdid finish in 40 seconds and that is quite impressive. -

codeforester about 7 yearsI realized the mistake that I had not tested @Inian's optimized

codeforester about 7 yearsI realized the mistake that I had not tested @Inian's optimizedawksolution. Just updated my answer to include that. -

Håkon Hægland about 7 yearsMaybe we should change

Håkon Hægland about 7 yearsMaybe we should changefile1.txtfor the methods that give false matches to for example^[^|]*\|foo1\|? Then they should give correct results (provided their command is changed togrep -E -finstead of usingfgrep) according to the assumed specifications of the OP. And the comparison between the methods would become less confusing :) -

Ole Tange about 7 yearsIf the files are bigger: gnu.org/software/parallel/…

Ole Tange about 7 yearsIf the files are bigger: gnu.org/software/parallel/… -

Inian about 7 years@codeforester: Appreciate you awarding me the bounty, hope the answer was quite helpful to you.

Inian about 7 years@codeforester: Appreciate you awarding me the bounty, hope the answer was quite helpful to you. -

ikegami about 7 years@Håkon Hægland, Curious as to why you didn't add this to your benchmark when you made the other recent addition. Is it because you don't have R::A? I'm curious how it compares to BOC's i.e. how much the groupings from R::A helps.

-

ikegami about 7 yearsNice how fixing

gregory1changed it from the slowest solution to the fastest solution that works! -

Håkon Hægland about 7 years@zdim I updated my test-suite to include your last update using the short-curcuit

Håkon Hægland about 7 years@zdim I updated my test-suite to include your last update using the short-curcuitifand printing toSTDOUTinstead of printing to the file, i.e. I used this:exists $word{ (/\|([^|]+)/)[0] } && print. However, there was practically no difference in the timings. For the small case in my answer I still got 0.61s run time, and for the large case the run time was still around 6.55s. Note, that the output from the script was still saved to file using shell redirection (zdim.pl > out.txt). -

Håkon Hægland about 7 years.. so I cannot see that using

Håkon Hægland about 7 years.. so I cannot see that usingzdim.pl(without shell redirection) and saving the output toout.txtwithin the script would be any different (be more efficient). -

zdim about 7 years@HåkonHægland Thank you. No, that may not show much of a difference. What does is uncommenting the line with

splitand commenting out the line with regex. So to usesplit-based solution instead of the regex one (both are just one line :). That should give 50% speedup. (Btw, the short-circuit has been there all along, I never had anif. That also helps only very little, possibly unnoticed. Also btw, previous to this change this script wasn't printing anything toSTDOUT, but was writing out a separate file.) -

zdim about 7 years@HåkonHægland I am keeping the regex-based one as the "main" solution (while the other one is commented out) merely because it is not clear in the question whether OP wants any match or the second field -- and finding any match strikes me as more generic (so, regex). I might as well just switch to have the faster code be the "main" solution. All it takes is moving one

#from one line to another. -

Håkon Hægland about 7 years@zdim Yes, you are right! Commenting out the

Håkon Hægland about 7 years@zdim Yes, you are right! Commenting out thesplitimproved performance. I tested it now: Small case: 0.4s, large case: 4.21s, that is also clearly better than the Perl script ofcodeforester -

zdim about 7 years@HåkonHægland Alright, that makes sense -- thank you! It has to be clearly better (but not by all that much), as explained in text. While the regex-based one does lag behind the

split, I guess because starting the regex engine is a big overhead for the super-simple straight match that is needed, compared to straightforward and simple work thatsplitdoes (break by space and pick one element to return). -

dawg about 7 yearsDid you adjust for those solutions that

dawg about 7 yearsDid you adjust for those solutions thatprintvs those that write to a file? Printing can be a substantial IO penalty. For an apples to apples comparison, they should all print or all write to a file... -

Håkon Hægland about 7 years@dawg Yes I pretty confident that I did that :) You can check how the methods are implemented here. All methods are being run either directly or indirectly through the shell script

Håkon Hægland about 7 years@dawg Yes I pretty confident that I did that :) You can check how the methods are implemented here. All methods are being run either directly or indirectly through the shell scriptrun.shin the pertinent subfolder of solutions. So for example, your python script is being run usingrun.py >out.txt, whererun.pyis the Python script. -

Håkon Hægland about 7 years... In fact the tests should not work if they printed to

Håkon Hægland about 7 years... In fact the tests should not work if they printed toSTDOUTinstead of to file, since I later (after the method has been run) read their output file to check if they extracted the right number of lines fromfile2.txt. -

JJoao about 7 years@HåkonHægland, very cool (+1). I know that this question is "old" but I could not resist :-) . Could you time my answer just for curiosity? (I know I am not a wining candidate)

-

Håkon Hægland about 7 yearsHi JJoao. Thanks for the input! I updated my answer to include this method also.

Håkon Hægland about 7 yearsHi JJoao. Thanks for the input! I updated my answer to include this method also. -

JJoao about 7 years@HåkonHægland, thank you; The comparison between this approach and the others is still difficult for me (flex for very big expressions and the compilation often take a lot of time) . Could you give-me the time of the final "run" ? (

a.out < file2.txt > out) -

Håkon Hægland about 7 yearsIf you are wondering if the compilation time is included in the results I presented: It is not. I mentioned that in the beginning of the question, where I describe the different methods. I am not familiar with

Håkon Hægland about 7 yearsIf you are wondering if the compilation time is included in the results I presented: It is not. I mentioned that in the beginning of the question, where I describe the different methods. I am not familiar withflexso I cannot tell if 38s is to be expected or not for the large case, or if something else is wrong (that causes the method to take so long time). -

JJoao about 7 years@HåkonHægland, sorry to all the trouble I am giving you. I am surprised about the 38 s -- that is why I like benchmarks! I am going to study it.

-

JJoao about 7 years@HåkonHægland, Sorry again: I was generating the wrong flex code. Updated -- this time I believe it will be 10 times faster.

-

Håkon Hægland about 7 yearsThanks, I have updated my answer to use the new

Håkon Hægland about 7 yearsThanks, I have updated my answer to use the newa.flfiles. It looks much better now :) -

George Vasiliou about 7 years@jjoao This topic has so many info gathered together that everytime i see a grep question in SE i point people here... These solutions with benchmarks should be a "grep like" wiki!

-

JJoao about 7 years@GeorgeVasiliou, I "came" to this question because I saw a link posted by you :) ...

-

JJoao about 7 years@HåkonHægland, and thank you again for your patience... ( the influence of optimizers

flex -Fandcc -Owas bigger than I expected) -

George Vasiliou about 7 years@JJoao That is Cool! :-)))

-

George Vasiliou about 7 yearsAt small files test, my solution achieved 0.23 seconds in my machine. I was able to reduce in my machine time down to 0.16 seconds just by applying

LC_ALL=Cin front of join... Do you want to give a try with your files to verify if there is a performance increase with C locale? -

Håkon Hægland about 7 years@GeorgeVasiliou Thanks! I have updated my answer. This was really impressive :)

Håkon Hægland about 7 years@GeorgeVasiliou Thanks! I have updated my answer. This was really impressive :) -

George Vasiliou about 7 years@HåkonHægland - Would you mind to benchmark this solution as well , using your small and big files?

-

Håkon Hægland about 7 yearsHi George! Thanks for the input. My first impression is that

Håkon Hægland about 7 yearsHi George! Thanks for the input. My first impression is that$2 in ashould be equivalent toa[$2]. But maybe I am wrong, I do not know all details of awk inner workings. But I believe both requires a hash lookup.. and therefore same speed -

Håkon Hægland about 7 yearsI tested it now and the speed seems to be the same as for

Håkon Hægland about 7 yearsI tested it now and the speed seems to be the same as forinian3in my comparison answer. So this could indicate that the methods are indeed equivalent. What do you think? -

George Vasiliou about 7 years@HåkonHægland Hi! Ok, good to know.So we can't claim that this method is faster. In my old machine , it seems that gave better results than inian3. I will re-check. Did you try also with C localle?

-

Ole Tange over 6 yearsNewer versions of

Ole Tange over 6 yearsNewer versions ofparallelwill compute the block size if you give it a negative number:--block-size -1= one chuck per jobslot,-2= two chunks per jobslot. -

codeforester about 6 yearsThat may be a good idea. I wanted a simple command line solution.

codeforester about 6 yearsThat may be a good idea. I wanted a simple command line solution.

![Write Your Own Bash Scripts for Automation [Tutorial]](https://i.ytimg.com/vi/PPQ8m8xQAs8/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLCwQaGsEd2aIIwg4kHP-QI1pGNjCg)