gedit can't recognize character encoding, but gvim can

Solution 1

iconv is probably what you'll want to use. iconv -l will show you the available encodings and then you can use a couple of commands to recode them all:

# all text files are in ./originals/

# new files will be written to ./newversions/

mkdir -p newversions

cd originals

for file in *.txt; do

# -f must name the files' real encoding (e.g. WINDOWS-1252); ASCII only works for pure 7-bit input

iconv -f ASCII -t UTF-8 "$file" > "../newversions/$file"

done

If you want to do this with files you don't know the encoding of (because they're all over the place), you want to bring in a few more commands: find, file, awk and sed. The last two are just there to process the output of file.

for file in $(find . -type f -exec file --mime {} \; | grep "ascii" | awk '{print $1}' | sed 's/.$//'); do

...

I've no idea if this actually works so I certainly wouldn't run it from anything but the least important directory you have (make a testing folder with some known ASCII files in). The syntax of find might preclude it from being within a for loop. I'd hope that somebody else with more bash experience could jump in there and sort it out so it does the right thing.
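A minimal sketch of how the find-based idea might be made safe for filenames containing spaces, by letting find hand the files to a small inline script instead of a for loop. It assumes a file command that supports --mime-encoding and an iconv that knows the chosen source encoding. The function name convert_tree and its three arguments are made up for illustration; they are not part of the answer above.

```shell
# Sketch: walk a tree, ask file(1) for each file's encoding, and convert
# every .txt file that is neither ASCII nor UTF-8.
# Hypothetical helper: convert_tree SRC_DIR DEST_DIR SOURCE_ENCODING
# Pass SRC_DIR without a trailing slash.

convert_tree() {
    find "$1" -type f -name '*.txt' -exec sh -c '
        src=$1 dest=$2 from=$3
        shift 3
        for path do
            enc=$(file -b --mime-encoding "$path")
            case $enc in
                us-ascii|utf-8|binary) continue ;;   # nothing to convert
            esac
            rel=${path#"$src"/}                      # path relative to SRC_DIR
            mkdir -p "$dest/$(dirname "$rel")"
            # The source encoding is chosen by the caller, not detected.
            iconv -f "$from" -t UTF-8 "$path" > "$dest/$rel"
        done
    ' sh "$1" "$2" "$3" {} +
}

# Example: convert_tree ./originals ./newversions WINDOWS-1252
```

The originals are never modified; converted copies land under the destination directory with the same relative paths, so the result can be inspected before anything is replaced.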

Solution 2

Gedit can detect the correct character set only if it is listed at "File-Open-Character encoding". You can alter this list but keep in mind that the order is important.

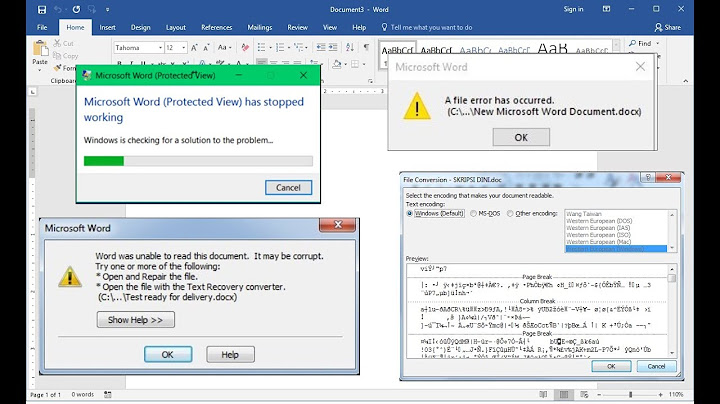

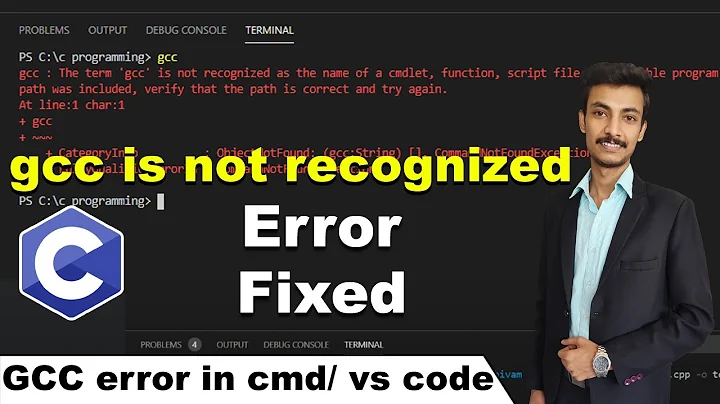

Peter.O

Updated on September 17, 2022

Comments

-

Peter.O over 1 year

I have a lot of plain text files which come from a Windows environment.

Many of them use a whacky default Windows code-page, which is neither ASCII (7 bits) nor UTF-8.

gvim has no problem opening these files, but gedit fails to do so.

gvim reports the encoding as latin1. I assume that gvim is making a "smart" assumption about the code-page.

(I believe this code-page still has international variants).

Some questions arise from this:

(1). Is there some way that gedit can be told to recognize this code-page?

** NB. [Update] For this point (1), see my answer, below.

** For points (2) and (3), see Oli's answer.

(2). Is there a way to scan the file system to identify these problem files?

(3). Is there a batch converting tool to convert these files to UTF-8?

(.. this old-world text mayhem was actually the final straw which brought me over to Ubuntu... UTF-8 system-wide by default. Brilliant!)

[UPDATE]

** NB: ** I now consider the following Update to be partially irrelevant, because the "problem" files aren't the "problem" (see my answer below).

I've left it here, because it may be of some general use to someone.

I've worked out a rough and ready way to identify the problem files...

The file command was not suitable, because it identified my example file as ASCII... but an ASCII file is 100% UTF-8 compliant...

As I mentioned in a comment below, the test for an invalid first byte of a UTF-8 codepoint is:

- if the first byte (of a UTF-8 codepoint) is between 0x80 and 0xBF (reserved for additional bytes), or greater than 0xF7 ("overlong form"), that is considered an error
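Since file reported the sample as ASCII, one alternative worth trying is to let iconv do the validation: asking it to convert from UTF-8 to UTF-8 makes it reject input that is not well-formed UTF-8. This is only a sketch. The function names is_valid_utf8 and list_invalid_utf8 are invented for illustration, and it assumes an iconv that exits non-zero on invalid input, as the GNU libc one does.

```shell
# Sketch: use iconv as a UTF-8 validator. The converted output is thrown
# away; only the exit status matters.

is_valid_utf8() {
    # Succeeds (exit 0) only if the whole file decodes as UTF-8.
    iconv -f UTF-8 -t UTF-8 "$1" > /dev/null 2>&1
}

list_invalid_utf8() {
    # Prints the path of every regular file under $1 that fails the check.
    find "$1" -type f -exec sh -c '
        for path do
            iconv -f UTF-8 -t UTF-8 "$path" > /dev/null 2>&1 ||
                printf "%s\n" "$path"
        done
    ' sh {} +
}

# Example: list_invalid_utf8 /path/to/text/files
```

Pure ASCII files pass, because ASCII is a subset of UTF-8. Binary files will usually be listed as well, so it may help to narrow the find expression, for instance with -name '*.txt'.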

I know sed (a bit, via a Win32 port), so I've managed to cobble together a RegEx pattern which finds these offending bytes.

It's an ugly line, so look away now if regular expressions scare you :)

I'd really appreciate it if someone points out how to use hex values in a range [] expression.. I've just used the or operator \|

fqfn="/my/fully/qualified/filename"

sed -n "/\x80\|\x81\|\x82\|\x83\|\x84\|\x85\|\x86\|\x87\|\x88\|\x89\|\x8A\|\x8B\|\x8C\|\x8D\|\x8E\|\x8F\|\x90\|\x91\|\x92\|\x93\|\x94\|\x95\|\x96\|\x97\|\x98\|\x99\|\x9A\|\x9B\|\x9C\|\x9D\|\x9E\|\x9F\|\xA0\|\xA1\|\xA2\|\xA3\|\xA4\|\xA5\|\xA6\|\xA7\|\xA8\|\xA9\|\xAA\|\xAB\|\xAC\|\xAD\|\xAE\|\xAF\|\xB0\|\xB1\|\xB2\|\xB3\|\xB4\|\xB5\|\xB6\|\xB7\|\xB8\|\xB9\|\xBA\|\xBB\|\xBC\|\xBD\|\xBE\|\xBF\|\xF8\|\xF9\|\xFA\|\xFB\|\xFC\|\xFD\|\xFE\|\xFF/p" "${fqfn}"

So, I'll now graft this into Oli's batch solution... Thanks Oli!
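On the question of hex values inside a [] range: a sketch of one way it might be written, assuming GNU sed, where \xHH is a GNU extension. Running sed with LC_ALL=C makes it treat the input as raw bytes, so the two ranges are byte ranges rather than character ranges in the current locale. The function name print_suspect_lines is hypothetical.

```shell
# Sketch, assuming GNU sed: the same byte set as the long alternation,
# written as two ranges inside one bracket expression.

print_suspect_lines() {
    # Prints every line of file $1 that contains a byte in
    # 0x80-0xBF or 0xF8-0xFF.
    LC_ALL=C sed -n '/[\x80-\xBF\xF8-\xFF]/p' "$1"
}

# Example: print_suspect_lines "/my/fully/qualified/filename"
```

Like the alternation, this matches those bytes anywhere on a line, not only in the first-byte position of a sequence, so lines holding valid multi-byte UTF-8 characters are printed as well.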

PS. Here is the invalid UTF-8 byte it found in my sample file ...

"H.Bork, Gøte-borg." ... the "ø" = F8 hex... which is an invalid UTF-8 character.

-

Peter.O over 13 years

Aha thanks; that sorts out the batch encoding.. I actually used a Win32 port of iconv a couple of years ago, to convert between UTF-8 and UTF-16... I thought it was only for conversion between different Unicode encodings, but as you've pointed out, it does much more.... Do you know of any way to actually find these files? They are scattered over many many directories...

-

Oli over 13 years

You'd want to expand the script to use find (to find all .txt files) and its -exec flag to run something against that file. Then you'd want the file command to detect the charset. Hang on a minute. I'll edit my answer to show you what I'm thinking.

-

Peter.O over 13 years

I tried file, but it returns ASCII... I think this is what I need to detect ... If the first byte (of a UTF-8 codepoint) is between 0x80 and 0xBF (reserved for additional bytes), or greater than 0xF7 ("overlong form"), that is considered an error

-

carnendil about 11 years

In case you don't have a clue, is there any way to "detect" from the terminal which codepage you need?

-

Peter.O about 11 years

Trying to establish which codepage was intended by the original author is not possible in many situations. Sub-sets of several codepages often coincide and produce a "valid" state (for that codepage and that text). The matching codepage can be technically valid, but text may show as the wrong symbol. In these cases, it really requires that the text be proof-read by a person!

-

Peter.O about 11 years

Regarding ways to detect the codepage via the terminal: iconv and recode can be used, but it is clumsy, as there are often multiple "valid" possibilities. You need to just keep testing codepages (valid and invalid) until you find a technically valid codepage... I just use emacs instead... which, by the way, is scriptable, i.e. you can call Emacs's elisp scripting language from a bash script (just as you may call awk).
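The "keep testing codepages" approach could be scripted roughly as follows. This is a sketch under assumptions: the function name try_codepages and the candidate list in the example are illustrative, and the encoding names must be ones that the installed iconv accepts (iconv -l lists them). It only shows which candidates decode without error; a person still has to judge which result reads correctly.

```shell
# Sketch: decode one file under several candidate code pages and print the
# first line of each result for a human to compare.
# Hypothetical helper: try_codepages FILE ENCODING...

try_codepages() {
    file=$1
    shift
    for enc in "$@"; do
        if out=$(iconv -f "$enc" -t UTF-8 "$file" 2>/dev/null); then
            # Decoded cleanly: show the first line as a sample.
            printf '%s: %s\n' "$enc" "$(printf '%s\n' "$out" | head -n 1)"
        else
            printf '%s: (not valid in this code page)\n' "$enc"
        fi
    done
}

# Example: try_codepages notes.txt WINDOWS-1252 ISO-8859-1 CP437 UTF-8
```

Several candidates will often decode without error, which is exactly the ambiguity described above; the printed samples are there so the wrong symbols can be spotted by eye.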