Get the bounding box coordinates in the TensorFlow object detection API tutorial

Solution 1

> I tried printing output_dict['detection_boxes'], but I am not sure what the numbers mean.

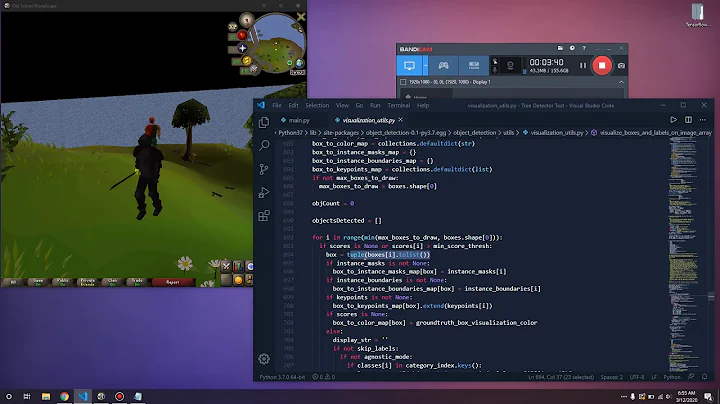

You can check out the code for yourself: `visualize_boxes_and_labels_on_image_array` is defined in `object_detection/utils/visualization_utils.py` of the Object Detection API.

Note that you are passing `use_normalized_coordinates=True`. If you trace the function calls, you will see that your numbers `[0.56213236, 0.2780568, 0.91445708, 0.69120586]`, etc., are the values `[ymin, xmin, ymax, xmax]`, expressed as fractions of the image height and width. The pixel coordinates of each box

```python
(left, right, top, bottom) = (xmin * im_width, xmax * im_width,
                              ymin * im_height, ymax * im_height)
```

are computed by the function:

```python
from PIL import ImageDraw


def draw_bounding_box_on_image(image,
                               ymin,
                               xmin,
                               ymax,
                               xmax,
                               color='red',
                               thickness=4,
                               display_str_list=(),
                               use_normalized_coordinates=True):
  """Adds a bounding box to an image.

  Bounding box coordinates can be specified in either absolute (pixel) or
  normalized coordinates by setting the use_normalized_coordinates argument.

  Each string in display_str_list is displayed on a separate line above the
  bounding box in black text on a rectangle filled with the input 'color'.
  If the top of the bounding box extends to the edge of the image, the strings
  are displayed below the bounding box.

  Args:
    image: a PIL.Image object.
    ymin: ymin of bounding box.
    xmin: xmin of bounding box.
    ymax: ymax of bounding box.
    xmax: xmax of bounding box.
    color: color to draw bounding box. Default is red.
    thickness: line thickness. Default value is 4.
    display_str_list: list of strings to display in box
                      (each to be shown on its own line).
    use_normalized_coordinates: If True (default), treat coordinates
      ymin, xmin, ymax, xmax as relative to the image. Otherwise treat
      coordinates as absolute.
  """
  draw = ImageDraw.Draw(image)
  im_width, im_height = image.size
  if use_normalized_coordinates:
    # Scale the normalized [0, 1] values to pixel positions.
    (left, right, top, bottom) = (xmin * im_width, xmax * im_width,
                                  ymin * im_height, ymax * im_height)
  # ... (rest of the function, which draws the box and labels, omitted)
```
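If you only need the pixel coordinates and not the drawing, the conversion above can be pulled out into a small standalone function. This is a minimal sketch that assumes `box` is one row of `detection_boxes` in the normalized `[ymin, xmin, ymax, xmax]` order; the name `to_pixel_box` and the 640x480 image size are hypothetical, not part of the Object Detection API.

```python
def to_pixel_box(box, im_width, im_height):
    """Convert a normalized [ymin, xmin, ymax, xmax] box to pixel
    (left, right, top, bottom), mirroring draw_bounding_box_on_image.

    `to_pixel_box` is a hypothetical helper, not an Object Detection API call.
    """
    ymin, xmin, ymax, xmax = box
    return (xmin * im_width, xmax * im_width,
            ymin * im_height, ymax * im_height)


# First box from the question, on an assumed 640x480 image.
left, right, top, bottom = to_pixel_box(
    [0.56213236, 0.2780568, 0.91445708, 0.69120586], 640, 480)
print(round(left), round(right), round(top), round(bottom))  # prints: 178 442 270 439
```

Keep in mind that the y values come first in each row, so reading a row as `[xmin, ymin, xmax, ymax]` gives a transposed box.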

Solution 2

I had exactly the same problem: I got an array of roughly a hundred boxes (output_dict['detection_boxes']) even though only one box was displayed on the image. By digging into the code that draws the rectangles, I was able to extract the relevant logic and use it in my inference.py:

```python
from object_detection.utils import visualization_utils as vis_util

# Detection has already run, and output_dict holds the result of inference.
# This is what draws the rectangles on image_np:
vis_util.visualize_boxes_and_labels_on_image_array(
    image_np,
    output_dict['detection_boxes'],
    output_dict['detection_classes'],
    output_dict['detection_scores'],
    category_index,
    instance_masks=output_dict.get('detection_masks'),
    use_normalized_coordinates=True,
    line_thickness=8)

# This is how I get the coordinates.
boxes = output_dict['detection_boxes']
# Consider every box in the array.
max_boxes_to_draw = boxes.shape[0]
# The scores are used to apply a threshold.
scores = output_dict['detection_scores']
# 0.5 is the default threshold; adjust it to your needs.
min_score_thresh = .5
# Iterate over all detected objects.
for i in range(min(max_boxes_to_draw, boxes.shape[0])):
    # Keep only the boxes whose score exceeds the threshold.
    if scores is None or scores[i] > min_score_thresh:
        # boxes[i] is the box that gets drawn: [ymin, xmin, ymax, xmax], normalized.
        class_name = category_index[output_dict['detection_classes'][i]]['name']
        print("This box is going to be used:", boxes[i], class_name)
```
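The same score filtering can also be done without an explicit loop by using a NumPy boolean mask. This is a sketch that assumes `boxes`, `scores`, and `classes` are NumPy arrays shaped like the tutorial's `output_dict` entries (`[N, 4]`, `[N]`, and `[N]`); the function name `filter_detections` and the toy arrays are made up for illustration.

```python
import numpy as np


def filter_detections(boxes, scores, classes, min_score_thresh=0.5):
    """Return only the detections whose score exceeds min_score_thresh.

    `filter_detections` is a hypothetical helper; boxes keep the
    normalized [ymin, xmin, ymax, xmax] order of detection_boxes.
    """
    keep = scores > min_score_thresh  # boolean mask of shape [N]
    return boxes[keep], scores[keep], classes[keep]


# Toy data standing in for output_dict (not real model output).
boxes = np.array([[0.1, 0.2, 0.5, 0.6],
                  [0.3, 0.3, 0.4, 0.4],
                  [0.0, 0.0, 1.0, 1.0]], dtype=np.float32)
scores = np.array([0.9, 0.2, 0.6], dtype=np.float32)
classes = np.array([1, 3, 1])

kept_boxes, kept_scores, kept_classes = filter_detections(boxes, scores, classes)
print(kept_boxes.shape)  # (2, 4)
print(kept_classes)      # [1 1]
```

Indexing with the same mask keeps the three arrays aligned, so `kept_boxes[j]`, `kept_scores[j]`, and `kept_classes[j]` still describe the same detection.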

Solution 3

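The answer above did not work for me, so I had to make some changes. If it doesn't help you either, try this. (Here `detections` holds batched tensors, so each value is converted with `.numpy()` and indexed with `[0]`.)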

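```python
# This is how I get the coordinates.
# .numpy() converts the eager tensors to NumPy arrays (omit it if your
# values are already NumPy arrays); [0] selects the first image in the batch.
boxes = detections['detection_boxes'].numpy()[0]
classes = detections['detection_classes'].numpy()[0]
# Consider every box in the array.
max_boxes_to_draw = boxes.shape[0]
# The scores are used to apply a threshold.
scores = detections['detection_scores'].numpy()[0]
# This is set as a default, but feel free to adjust it to your needs.
min_score_thresh = .5

# Iterate over all detected objects.
coordinates = []
for i in range(min(max_boxes_to_draw, boxes.shape[0])):
    if scores[i] > min_score_thresh:
        # Assumption: class ids are 0-based here while category_index keys
        # start at 1; drop the +1 if your model already returns 1-based ids.
        class_id = int(classes[i] + 1)
        coordinates.append({
            "box": boxes[i],
            "class_name": category_index[class_id]["name"],
            "score": scores[i],
        })

print(coordinates)
```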

Each item (a dictionary) in the coordinates list is one box to be drawn on the image, with its normalized box coordinates, class_name, and score.
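To actually use the normalized boxes, for example to crop each detected object, you have to scale them by the image size and slice the image array. This is a minimal sketch that assumes `image_np` is a `[height, width, channels]` NumPy array and that each entry of `coordinates` has the `"box"` key built above; `crop_detections`, the dummy image, and the sample detection are hypothetical.

```python
import numpy as np


def crop_detections(image_np, coordinates):
    """Crop each detected box out of image_np.

    `crop_detections` is a hypothetical helper. Each item's "box" is
    normalized [ymin, xmin, ymax, xmax], as in detection_boxes.
    """
    im_height, im_width = image_np.shape[:2]
    crops = []
    for item in coordinates:
        ymin, xmin, ymax, xmax = item["box"]
        # Scale to pixels and round to integer indices for slicing.
        top, bottom = int(round(ymin * im_height)), int(round(ymax * im_height))
        left, right = int(round(xmin * im_width)), int(round(xmax * im_width))
        # Rows are the y axis and columns are the x axis.
        crops.append(image_np[top:bottom, left:right])
    return crops


# Dummy 100x200 RGB image and one made-up detection.
image_np = np.zeros((100, 200, 3), dtype=np.uint8)
coordinates = [{"box": np.array([0.1, 0.25, 0.6, 0.75]),
                "class_name": "example", "score": 0.9}]
crops = crop_detections(image_np, coordinates)
print(crops[0].shape)  # (50, 100, 3)
```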


Mandy

Updated on November 30, 2021


Mandy over 2 years

I am new to both Python and TensorFlow. I am trying to run the object detection tutorial file from the TensorFlow Object Detection API, but I cannot find where I can get the coordinates of the bounding boxes when objects are detected.

Relevant code:

```python
# The following processing is only for single image
detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
```

The place where I assume the bounding boxes are drawn looks like this:

```python
# Visualization of the results of detection.
vis_util.visualize_boxes_and_labels_on_image_array(
    image_np,
    output_dict['detection_boxes'],
    output_dict['detection_classes'],
    output_dict['detection_scores'],
    category_index,
    instance_masks=output_dict.get('detection_masks'),
    use_normalized_coordinates=True,
    line_thickness=8)
plt.figure(figsize=IMAGE_SIZE)
plt.imshow(image_np)
```

I tried printing output_dict['detection_boxes'], but I am not sure what the numbers mean. There are a lot:

```
array([[ 0.56213236,  0.2780568 ,  0.91445708,  0.69120586],
       [ 0.56261235,  0.86368728,  0.59286624,  0.8893863 ],
       [ 0.57073039,  0.87096912,  0.61292225,  0.90354401],
       [ 0.51422435,  0.78449738,  0.53994244,  0.79437423],
       ...
       [ 0.32784131,  0.5461576 ,  0.36972913,  0.56903434],
       [ 0.03005961,  0.02714229,  0.47211722,  0.44683522],
       [ 0.43143299,  0.09211366,  0.58121657,  0.3509962 ]], dtype=float32)
```

I found answers to similar questions, but I don't have a variable called boxes as they do. How can I get the coordinates?


Mandy about 6 years: Okay. It seems that output_dict['detection_boxes'] contains all the overlapping boxes, and that's why there are so many arrays. Thank you!


CMCDragonkai about 6 years: What determines how many overlapping boxes there are? And why are there so many overlapping boxes; why are they passed to the visualisation layer to merge?



Web Nexus almost 5 years: I know this is an old question, but I thought this may help somebody. You can limit the number of overlapping boxes by increasing min_score_thresh in the arguments of visualize_boxes_and_labels_on_image_array. By default it is set to 0.5; for my project, for example, I had to increase it to 0.8.

tpk over 2 years: The normalised bboxes are in the format ymin, xmin, ymax, xmax: github.com/tensorflow/models/blob/…

Kirikkayis about 2 years: I get the following error:

```
---> 32 boxes = detections['detection_boxes'].numpy()[0]
AttributeError: 'numpy.ndarray' object has no attribute 'numpy'
```


Shreyas Vedpathak about 2 years: @Kirikkayis that means your variable is already a NumPy array.
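If the same code has to work whether `detections` holds eager tensors or plain NumPy arrays, one option is a small conversion helper that calls `.numpy()` only when it exists. This is a sketch; `as_numpy` is a hypothetical name, and the duck-typed check is an assumption about your inputs, not part of the Object Detection API.

```python
import numpy as np


def as_numpy(value):
    """Return value as a NumPy array.

    Eager TensorFlow tensors expose .numpy(); NumPy arrays do not, which is
    what triggers "AttributeError: 'numpy.ndarray' object has no attribute
    'numpy'". `as_numpy` is a hypothetical helper.
    """
    if hasattr(value, "numpy"):
        return value.numpy()
    return np.asarray(value)


# Works on a plain NumPy array (no TensorFlow needed for this check).
detections = {"detection_boxes": np.array([[[0.1, 0.2, 0.3, 0.4]]])}
boxes = as_numpy(detections["detection_boxes"])[0]
print(boxes.shape)  # (1, 4)
```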