Getting gradient of model output w.r.t weights using Keras

To get the gradients of model output with respect to weights using Keras you have to use the Keras backend module. I created this simple example to illustrate exactly what to do:

```python
from keras.models import Sequential
from keras.layers import Dense
from keras import backend as k

model = Sequential()
# kernel_initializer='random_uniform' is the Keras 2 spelling of Keras 1's init='uniform'
model.add(Dense(12, input_dim=8, kernel_initializer='random_uniform', activation='relu'))
model.add(Dense(8, kernel_initializer='random_uniform', activation='relu'))
model.add(Dense(1, kernel_initializer='random_uniform', activation='sigmoid'))
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
```

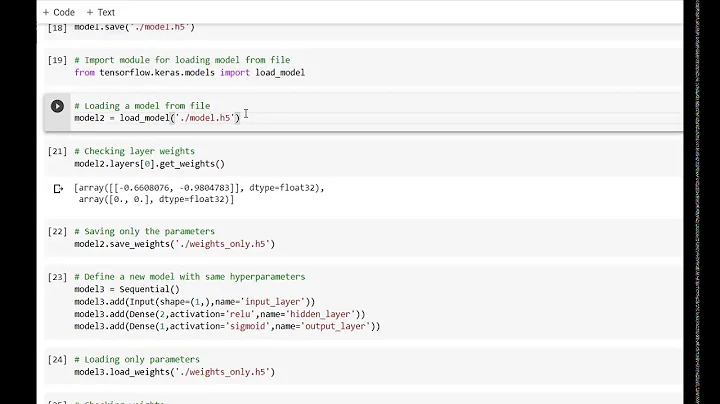

To calculate the gradients, we first need the output tensor. For the output of the model (what my initial question asked), we simply use model.output. We can also get the outputs of other layers with model.layers[index].output.

```python
outputTensor = model.output  # or model.layers[index].output for an intermediate layer
```
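In TensorFlow 2.x, where eager execution is the default, k.gradients cannot be used, and model.layers[index].output is only a symbolic tensor. A common workaround, sketched here under the assumption of `tf.keras` and the functional API, is to wrap the intermediate tensor in a second model that shares the same layers and to differentiate it with `tf.GradientTape`. The names `h1`, `h2` and `feature_model` are illustrative, not part of the original answer.

```python
import numpy as np
import tensorflow as tf

# The 8-12-8-1 network rebuilt with the functional API (an assumption), so that
# the intermediate tensor h2 can be reused as the output of a second model.
inputs = tf.keras.Input(shape=(8,))
h1 = tf.keras.layers.Dense(12, activation="relu", kernel_initializer="random_uniform")(inputs)
h2 = tf.keras.layers.Dense(8, activation="relu", kernel_initializer="random_uniform")(h1)
out = tf.keras.layers.Dense(1, activation="sigmoid", kernel_initializer="random_uniform")(h2)
model = tf.keras.Model(inputs, out)

# Shares its layers (and therefore its weights) with `model` but stops at h2.
feature_model = tf.keras.Model(inputs, h2)

x = np.random.random((1, 8)).astype("float32")
with tf.GradientTape() as tape:
    hidden = feature_model(x)  # shape (1, 8)

# Non-scalar target: the tape differentiates the sum of its elements.
hidden_grads = tape.gradient(hidden, feature_model.trainable_weights)
print([tuple(g.shape) for g in hidden_grads])
```

Only the first two Dense layers contribute to h2, so only their kernels and biases appear in the result.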

Then we need to choose the variables with respect to which the gradient is taken.

```python
listOfVariableTensors = model.trainable_weights
# or: variableTensors = model.trainable_weights[0]  (only the first Dense layer's kernel)
```

We can now calculate the gradients. It is as easy as the following:

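```python
# With the TensorFlow backend, a non-scalar outputTensor is summed before differentiating
gradients = k.gradients(outputTensor, listOfVariableTensors)
```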
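To make concrete what k.gradients returns, here is a NumPy-only sketch that backpropagates by hand through the same 8-12-8-1 relu/relu/sigmoid architecture. It is an illustration, not Keras's implementation: the helper names `init_params`, `forward` and `output_gradients` and the random initialization are assumptions. It returns one array per weight tensor, in the kernel/bias order of model.trainable_weights, and, like the TensorFlow backend, it differentiates the sum of the outputs over the batch.

```python
import numpy as np

def init_params(rng, sizes=(8, 12, 8, 1)):
    """Kernel/bias pairs for each Dense layer (random uniform init, an assumption)."""
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        params.append(rng.uniform(-0.5, 0.5, size=(n_in, n_out)))  # kernel
        params.append(rng.uniform(-0.1, 0.1, size=n_out))          # bias
    return params

def forward(params, x):
    """relu -> relu -> sigmoid, mirroring the three Dense layers."""
    W1, b1, W2, b2, W3, b3 = params
    z1 = x @ W1 + b1
    a1 = np.maximum(z1, 0.0)
    z2 = a1 @ W2 + b2
    a2 = np.maximum(z2, 0.0)
    y = 1.0 / (1.0 + np.exp(-(a2 @ W3 + b3)))
    return y, (z1, a1, z2, a2)

def output_gradients(params, x):
    """d(sum of outputs)/d(param) for every param, in the same order as params."""
    W1, b1, W2, b2, W3, b3 = params
    y, (z1, a1, z2, a2) = forward(params, x)
    d3 = y * (1.0 - y)           # derivative of the sigmoid, shape (batch, 1)
    d2 = (d3 @ W3.T) * (z2 > 0)  # back through the second relu
    d1 = (d2 @ W2.T) * (z1 > 0)  # back through the first relu
    return [x.T @ d1, d1.sum(axis=0),
            a1.T @ d2, d2.sum(axis=0),
            a2.T @ d3, d3.sum(axis=0)]

rng = np.random.default_rng(0)
params = init_params(rng)
training_example = rng.random((1, 8))
grads = output_gradients(params, training_example)
print([g.shape for g in grads])  # → [(8, 12), (12,), (12, 8), (8,), (8, 1), (1,)]
```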

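To actually evaluate the gradients for a given input, we need a bit of TensorFlow (this is TensorFlow 1.x graph-mode code):

```python
import numpy as np

trainingExample = np.random.random((1, 8))

# Reuse the session Keras already manages, so the gradients are computed with the
# model's current (initialized or trained) weights instead of re-initialized ones.
sess = k.get_session()

evaluated_gradients = sess.run(gradients, feed_dict={model.input: trainingExample})
```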

And that's it!
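Sessions, sess.run and k.gradients belong to the TensorFlow 1.x graph API and do not work under TensorFlow 2.x eager execution. There, the usual replacement is `tf.GradientTape`. A minimal sketch, assuming TF 2.x with `tf.keras` and rebuilding the same network:

```python
import numpy as np
import tensorflow as tf

# Same 8-12-8-1 network; kernel_initializer replaces the Keras 1 `init` argument.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(12, activation="relu", kernel_initializer="random_uniform"),
    tf.keras.layers.Dense(8, activation="relu", kernel_initializer="random_uniform"),
    tf.keras.layers.Dense(1, activation="sigmoid", kernel_initializer="random_uniform"),
])

training_example = np.random.random((1, 8)).astype("float32")

# Record the forward pass, then differentiate the output w.r.t. every weight.
with tf.GradientTape() as tape:
    output = model(training_example)  # shape (1, 1)

# One gradient tensor per trainable weight (kernel and bias of each Dense layer).
evaluated_gradients = tape.gradient(output, model.trainable_weights)
for w, g in zip(model.trainable_weights, evaluated_gradients):
    print(w.name, tuple(g.shape))
```

No placeholder or feed dict is needed: the input is passed straight to the model, and trainable variables read during the forward pass are watched automatically.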


Matt S

Updated on July 09, 2022

Comments

-

Matt S almost 2 years

I am interested in building reinforcement learning models with the simplicity of the Keras API. Unfortunately, I am unable to extract the gradient of the output (not error) with respect to the weights. I found the following code that performs a similar function (Saliency maps of neural networks (using Keras))

```python
get_output = theano.function([model.layers[0].input], model.layers[-1].output, allow_input_downcast=True)
fx = theano.function([model.layers[0].input], T.jacobian(model.layers[-1].output.flatten(), model.layers[0].input), allow_input_downcast=True)
grad = fx([trainingData])
```

Any ideas on how to calculate the gradient of the model output with respect to the weights for each layer would be appreciated.
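The Theano snippet above computes a saliency map, i.e. the Jacobian of the output with respect to the input rather than the weights. For comparison, a TensorFlow 2.x sketch of the same idea with `tf.GradientTape.batch_jacobian` (assumptions: `tf.keras`, the 8-12-8-1 network from the answer, and a random batch of four inputs):

```python
import numpy as np
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(12, activation="relu"),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

x = tf.constant(np.random.random((4, 8)).astype("float32"))
with tf.GradientTape() as tape:
    tape.watch(x)  # x is a plain tensor, not a variable, so watch it explicitly
    y = model(x)   # shape (4, 1)

# Per-example Jacobian dy_i/dx_i: shape (batch, output_dim, input_dim).
saliency = tape.batch_jacobian(y, x)
print(tuple(saliency.shape))
```

batch_jacobian assumes examples do not interact, which holds for this feed-forward model and skips the all-zero cross-example blocks a full Jacobian would contain.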

-

ssierral over 7 years

Have you made any progress? I am getting the following error using a similar saliency function: github.com/fchollet/keras/issues/1777#issuecomment-250040309

-

Matt S over 7 years

I have not had any success with Keras. However, I have been able to do this using TensorFlow.

-

Matt S over 7 years

In github.com/yanpanlau/DDPG-Keras-Torcs, CriticNetwork.py uses the TensorFlow backend to calculate gradients while using Keras to build the network architecture.

-

bones.felipe about 7 years

I've run this code (with Theano as the backend) and the following error is raised: "TypeError: cost must be a scalar.". I wonder, can this be achieved with a backend-agnostic approach?

-

Aleksandar Jovanovic over 6 years

Matt S, how do the gradients get calculated without specifying the labels in sess.run?


Matt S over 6 years

I am taking the gradient w.r.t. the input. If you want the gradient of the loss, you need to define the loss function, replace outputTensor in k.gradients with loss_fn, and then pass the labels in the feed dict.
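For the gradient of the loss rather than of the raw output, the labels enter the computation as the y_true argument of the loss function. In TensorFlow 2.x this needs no feed dict. A minimal sketch, assuming `tf.keras`, the binary cross-entropy loss from the answer, and a hypothetical batch of labels `labels`:

```python
import numpy as np
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(12, activation="relu"),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

x = np.random.random((4, 8)).astype("float32")
labels = np.array([[0], [1], [1], [0]], dtype="float32")  # hypothetical labels
loss_fn = tf.keras.losses.BinaryCrossentropy()  # same loss as model.compile above

with tf.GradientTape() as tape:
    predictions = model(x, training=True)
    loss = loss_fn(labels, predictions)  # scalar: mean over the batch

# Gradient of the loss w.r.t. every trainable weight.
loss_grads = tape.gradient(loss, model.trainable_weights)
```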

-

sahdeV about 6 years

I believe you meant 'gradient w.r.t. output.'

-

beepretty almost 6 years

@MattS Hi, Matt. Just saw your answer. Could you please explain how to pass the labels to the feed dict? Thank you so much!


Alex over 5 years

The problem with this solution is that it doesn't solve the problem of how to get those gradients out of Keras at training time. Sure, for some random toy input I can just do what you wrote above, but if I want the gradients that were computed in an actual training step performed by Keras' fit() function, how do I get those? They are not part of the fetch list that is passed to sess.run() somewhere deep down in the Keras code, so I can't get them unless I spend a month understanding and rewriting the Keras training engine :/

-

Daniel Möller about 5 years

@Alex, they're inside the optimizer. Some inspiration: stackoverflow.com/questions/51140950/…


Daniel Möller about 5 years

Reading the code for the SGD optimizer also brings some ideas.
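One way to get at the gradients of a real training step, rather than of a toy input, is to write the training step yourself instead of calling fit(). A minimal TensorFlow 2.x sketch, assuming `tf.keras`, the binary cross-entropy/Adam setup from the answer, random data with hypothetical labels, and a hypothetical `grad_norms` list standing in for whatever logging you use:

```python
import numpy as np
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(12, activation="relu"),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
optimizer = tf.keras.optimizers.Adam()
loss_fn = tf.keras.losses.BinaryCrossentropy()

x = np.random.random((32, 8)).astype("float32")
y = (x.sum(axis=1, keepdims=True) > 4).astype("float32")  # hypothetical labels

grad_norms = []  # hypothetical log: global gradient norm per training step
for step in range(5):
    with tf.GradientTape() as tape:
        loss = loss_fn(y, model(x, training=True))
    grads = tape.gradient(loss, model.trainable_weights)
    # The exact gradients the optimizer is about to apply: inspect or log them here.
    grad_norms.append(float(tf.linalg.global_norm(grads)))
    optimizer.apply_gradients(zip(grads, model.trainable_weights))
```

To send these values to TensorBoard instead of a Python list, the same grads could be written with tf.summary.histogram (which takes a step argument) inside a tf.summary.create_file_writer(...).as_default() context; that pairing is a suggestion, not something this thread confirms.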

kz28 about 4 years

gradients = k.gradients(outputTensor, listOfVariableTensors) works only when outputTensor is a scalar tensor: k.gradients() expects its first argument to be the loss (usually a scalar tensor). (I know the original question asks for the gradient w.r.t. the output.)
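With the TensorFlow backend, a non-scalar target does not raise an error: tf.gradients and tf.GradientTape.gradient differentiate the sum of the target's elements, while the Theano backend insists on a scalar cost (the error reported above). If per-example gradients are what you want, one option is a separate scalar target per example. A TensorFlow 2.x sketch, assuming `tf.keras` and a random batch of three inputs:

```python
import numpy as np
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(12, activation="relu"),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
x = np.random.random((3, 8)).astype("float32")

# Non-scalar target: the tape returns the gradient of the sum over the batch.
with tf.GradientTape() as tape:
    y = model(x)  # shape (3, 1), not a scalar
summed = tape.gradient(y, model.trainable_weights)

# Per-example gradients: one explicit scalar target per forward pass.
per_example = []
for i in range(x.shape[0]):
    with tf.GradientTape() as tape:
        y_i = tf.reduce_sum(model(x[i:i + 1]))
    per_example.append(tape.gradient(y_i, model.trainable_weights))
```

Summing the per-example gradients recovers the batch gradient, which is exactly the summation the backend performs implicitly.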

sajed zarrinpour about 4 years

Very nice answer! Thank you, this helped me a lot. I want to emphasize that the gradients vector consists of the gradients of all layers up to the requested layer; the gradient of the output of the model can then be obtained via gradients[-1]. Moreover, you can take the gradient of this gradients vector. In that case, the last item in the result would be None.

Francesco Boi almost 4 years

@Alex I am facing this problem too, particularly when logging gradient information to TensorBoard with Keras. Did you find a solution? I have seen that the write_grads parameter of keras.callbacks.TensorBoard is deprecated, but I could not find a valid alternative.