GLSL: How to get pixel x,y,z world position?

Solution 1

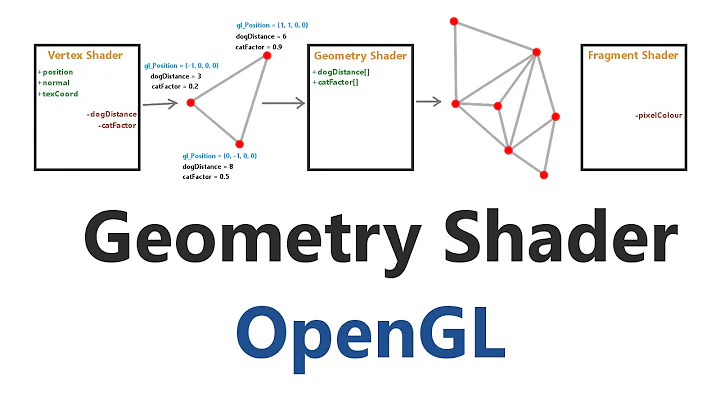

In the vertex shader you have gl_Vertex (or your own vertex attribute if you don't use the fixed-function pipeline), which is the position of a vertex in model coordinates. Multiply gl_Vertex by the model matrix (model_matrix * gl_Vertex) and you get the vertex position in world coordinates. Assign this to a varying variable and read it in the fragment shader: the interpolated value is the position of the fragment in world coordinates.
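The multiplication described above can be sketched on the CPU in C. This is a minimal illustration, assuming OpenGL's column-major storage (the layout glGetFloatv returns), where element (row r, column c) sits at index c * 4 + r; the helper name mat4_mul_vec4 is hypothetical and not part of any GL API.

```c
/* Hypothetical helper: out = m * v for a column-major 4x4 matrix m,
 * the same operation as model_matrix * gl_Vertex in GLSL.
 * Element (row r, column c) is stored at m[c * 4 + r].
 * out must not alias v. */
void mat4_mul_vec4(const float m[16], const float v[4], float out[4])
{
    for (int r = 0; r < 4; ++r) {
        out[r] = m[0 * 4 + r] * v[0]
               + m[1 * 4 + r] * v[1]
               + m[2 * 4 + r] * v[2]
               + m[3 * 4 + r] * v[3];
    }
}
```

For example, a model matrix that translates by (5, 0, -2) stores 5, 0 and -2 at indices 12, 13 and 14; applied to the vertex (1, 2, 3, 1) it yields the world position (6, 2, 1, 1).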

The problem is that you don't necessarily have a model matrix: OpenGL's default modelview matrix is a combination of both the model and view matrices. I usually solve this by keeping two separate matrices instead of a single modelview matrix:

- model matrix (maps model coordinates to world coordinates), and

- view matrix (maps world coordinates to camera coordinates).
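A quick CPU-side check of how the two matrices relate, as a sketch in C: the combined modelview matrix equals view * model, so applying it to a vertex yields camera (eye) coordinates, while model alone yields world coordinates. The helper names are hypothetical and the storage is assumed column-major, as in OpenGL.

```c
/* Hypothetical column-major 4x4 helpers; element (row r, column c) is
 * stored at m[c * 4 + r], as in OpenGL. Outputs must not alias inputs. */

/* out = a * b */
void mat4_mul(const float a[16], const float b[16], float out[16])
{
    for (int c = 0; c < 4; ++c) {
        for (int r = 0; r < 4; ++r) {
            float sum = 0.0f;
            for (int k = 0; k < 4; ++k)
                sum += a[k * 4 + r] * b[c * 4 + k];
            out[c * 4 + r] = sum;
        }
    }
}

/* out = m * v */
void mat4_mul_vec4(const float m[16], const float v[4], float out[4])
{
    for (int r = 0; r < 4; ++r)
        out[r] = m[r] * v[0] + m[4 + r] * v[1] + m[8 + r] * v[2] + m[12 + r] * v[3];
}

/* Translation matrix: the effect of glTranslatef(x, y, z) on an identity matrix. */
void mat4_translation(float x, float y, float z, float out[16])
{
    for (int i = 0; i < 16; ++i)
        out[i] = (i % 5 == 0) ? 1.0f : 0.0f; /* identity: indices 0, 5, 10, 15 */
    out[12] = x;
    out[13] = y;
    out[14] = z;
}
```

With model = translation(5, 0, -2) and view = translation(0, 0, -10), the vertex (1, 2, 3, 1) lands at (6, 2, 1, 1) in world space but at (6, 2, -9, 1) in eye space; only the latter changes when the camera moves.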

So pass the two matrices to your vertex shader separately, by declaring these uniforms at the beginning of your vertex shader:

```glsl
uniform mat4 view_matrix;
uniform mat4 model_matrix;
```

Then, instead of ftransform(), write:

```glsl
gl_Position = gl_ProjectionMatrix * view_matrix * model_matrix * gl_Vertex;
```

In the main program you must write values to both of these new matrices. First, to get the view matrix, do the camera transformations as you normally would, with glLoadIdentity(), glTranslate(), glRotate(), gluLookAt() or whatever you prefer, but then call glGetFloatv(GL_MODELVIEW_MATRIX, array); (where array is a GLfloat[16]) to copy the matrix data into an array. Second, in a similar way, to get the model matrix, call glLoadIdentity() again, do the object transformations with glTranslate(), glRotate(), glScale() etc., and finally call glGetFloatv(GL_MODELVIEW_MATRIX, array); to get the matrix data out of OpenGL so you can send it to your vertex shader.

Note especially that you need to call glLoadIdentity() before you begin transforming the object. Normally you would first transform the camera and then the object, which results in one matrix that performs both the view and the model functions. Because you're using separate matrices, you need to reset the matrix after the camera transformations with glLoadIdentity().
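Why the second glLoadIdentity() matters can be seen with a small CPU emulation of the modelview matrix. This is a sketch under stated assumptions, not OpenGL itself: translate() mimics how glTranslatef post-multiplies the current matrix, get_matrix() stands in for glGetFloatv(GL_MODELVIEW_MATRIX, ...), and all names are hypothetical. In a real program each captured array would then be uploaded, with the shader program bound via glUseProgram, using glUniformMatrix4fv(location, 1, GL_FALSE, array) and a location obtained from glGetUniformLocation(program, "model_matrix") (or "view_matrix").

```c
#include <string.h>

/* Hypothetical CPU stand-in for the top of OpenGL's modelview stack,
 * column-major like OpenGL. This does not call OpenGL. */
typedef struct { float m[16]; } Mat4;

/* Stand-in for glLoadIdentity(). */
void load_identity(Mat4 *cur)
{
    for (int i = 0; i < 16; ++i)
        cur->m[i] = (i % 5 == 0) ? 1.0f : 0.0f;
}

/* Stand-in for glTranslatef(x, y, z): current = current * T(x, y, z).
 * Only the fourth column changes: col3 += x*col0 + y*col1 + z*col2. */
void translate(Mat4 *cur, float x, float y, float z)
{
    for (int r = 0; r < 4; ++r)
        cur->m[12 + r] += x * cur->m[r] + y * cur->m[4 + r] + z * cur->m[8 + r];
}

/* Stand-in for glGetFloatv(GL_MODELVIEW_MATRIX, array). */
void get_matrix(const Mat4 *cur, float array[16])
{
    memcpy(array, cur->m, sizeof cur->m);
}
```

Without the reset, the captured "model" matrix for an object at z = -2 reports z = -12, because the camera's -10 is still baked in; after glLoadIdentity() it reports just the object's own translation.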

Note that gl_FragCoord contains window (pixel) coordinates, not world coordinates.
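To see why gl_FragCoord cannot stand in for world coordinates, here is a sketch in C of the viewport transform that produces it from normalized device coordinates, assuming glViewport(vx, vy, width, height) and the default glDepthRange(0, 1). The function and type names are hypothetical. The output depends only on where the point lands on screen, so it changes whenever the camera moves.

```c
/* Hypothetical sketch of the viewport transform that maps normalized
 * device coordinates (NDC, each in [-1, 1]) to window coordinates, i.e.
 * what ends up in gl_FragCoord.xyz. Assumes glViewport(vx, vy, width,
 * height) and the default glDepthRange(0, 1). */
typedef struct { float x, y, z; } Vec3;

Vec3 ndc_to_window(Vec3 ndc, float vx, float vy, float width, float height)
{
    Vec3 win;
    win.x = (ndc.x + 1.0f) * 0.5f * width + vx;   /* pixels from the left */
    win.y = (ndc.y + 1.0f) * 0.5f * height + vy;  /* pixels from the bottom */
    win.z = (ndc.z + 1.0f) * 0.5f;                /* depth in [0, 1] */
    return win;
}
```

For an 800 x 600 viewport at the origin, the center of NDC space (0, 0, 0) maps to (400, 300, 0.5).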

Solution 2

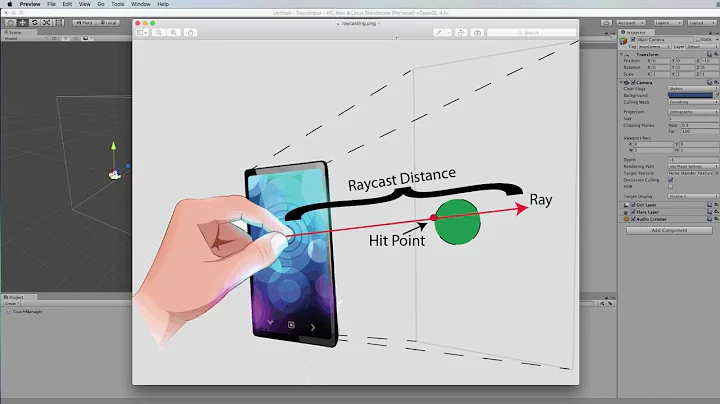

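Or, if a camera-relative depth is all you need, you could divide the z coordinate by the w coordinate, which roughly undoes the perspective divide. Note that this does not give you the original world coordinates: the result is a depth measured along the camera's viewing direction (for a standard perspective projection, 1.0 / gl_FragCoord.w is exactly the eye-space distance along that axis).

i.e.:

```glsl
float depth = gl_FragCoord.z / gl_FragCoord.w;
```

Of course, this only works for non-clipped coordinates.

But who cares about clipped ones anyway?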
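The expression above can be checked numerically. The sketch below, in C, assumes a gluPerspective-style projection with near plane n and far plane f and the default glDepthRange(0, 1); it reproduces what gl_FragCoord.z and gl_FragCoord.w would hold for a point at eye-space depth z_eye (negative in front of the camera). The function and struct names are hypothetical.

```c
/* Hypothetical sketch: the values gl_FragCoord.z and gl_FragCoord.w would
 * hold for a point at eye-space depth z_eye (negative in front of the
 * camera), assuming a gluPerspective-style projection with near plane n,
 * far plane f, and the default glDepthRange(0, 1). */
typedef struct { double frag_z, frag_w; } FragDepth;

FragDepth frag_depth(double z_eye, double n, double f)
{
    /* z_clip and w_clip come from the third and fourth rows of the
     * standard perspective matrix. */
    double z_clip = -(f + n) / (f - n) * z_eye - 2.0 * f * n / (f - n);
    double w_clip = -z_eye;

    FragDepth d;
    d.frag_z = 0.5 * (z_clip / w_clip) + 0.5; /* window depth in [0, 1] */
    d.frag_w = 1.0 / w_clip;                  /* gl_FragCoord.w holds 1 / w_clip */
    return d;
}
```

With n = 1 and f = 100, a point 10 units in front of the camera gives 1.0 / gl_FragCoord.w = 10 and gl_FragCoord.z / gl_FragCoord.w ≈ 9.09. Both are camera-relative depths; neither recovers the world-space x, y, z.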

Solution 3

You need to pass the World/Model matrix as a uniform to the vertex shader, and then multiply it by the vertex position and send it as a varying to the fragment shader:

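```glsl
/* Vertex Shader */
#version 330 core  // layout(location) qualifiers need GLSL 3.30

layout (location = 0) in vec3 Position;

uniform mat4 World; // model-to-world matrix
uniform mat4 WVP;   // projection * view * world

// World-space position, interpolated per fragment
out vec4 FragPos;

void main()
{
    FragPos = World * vec4(Position, 1.0);
    gl_Position = WVP * vec4(Position, 1.0);
}
```

```glsl
/* Fragment Shader */
#version 330 core

layout (location = 0) out vec4 Color;

// ...

in vec4 FragPos;

void main()
{
    // Visualize the world position as a color (values outside [0, 1]
    // are clamped in a normalized color buffer)
    Color = FragPos;
}
```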
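On the host side, the two uniforms could be built as follows. This is a minimal C sketch assuming column-major matrices (so each can be passed to glUniformMatrix4fv with transpose set to GL_FALSE); compose_wvp and the other helpers are hypothetical names, and the projection matrix used in the usage note is an arbitrary example rather than a real camera setup.

```c
/* Hypothetical host-side helpers for the World and WVP uniforms. Matrices
 * are column-major (element (row r, column c) at m[c * 4 + r]).
 * Outputs must not alias inputs. */

/* out = a * b */
void mat4_mul(const float a[16], const float b[16], float out[16])
{
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r) {
            float sum = 0.0f;
            for (int k = 0; k < 4; ++k)
                sum += a[k * 4 + r] * b[c * 4 + k];
            out[c * 4 + r] = sum;
        }
}

/* out = m * v */
void mat4_mul_vec4(const float m[16], const float v[4], float out[4])
{
    for (int r = 0; r < 4; ++r)
        out[r] = m[r] * v[0] + m[4 + r] * v[1] + m[8 + r] * v[2] + m[12 + r] * v[3];
}

/* Translation matrix (identity with x, y, z in the fourth column). */
void mat4_translation(float x, float y, float z, float out[16])
{
    for (int i = 0; i < 16; ++i)
        out[i] = (i % 5 == 0) ? 1.0f : 0.0f;
    out[12] = x;
    out[13] = y;
    out[14] = z;
}

/* WVP = Projection * View * World, so WVP * p == P * (V * (W * p)). */
void compose_wvp(const float proj[16], const float view[16],
                 const float world[16], float wvp[16])
{
    float view_world[16];
    mat4_mul(view, world, view_world);
    mat4_mul(proj, view_world, wvp);
}
```

With World = translation(1, 2, 3) and View = translation(0, 0, -5), the vertex (1, 1, 1, 1) gives FragPos = (2, 3, 4, 1) regardless of View or the projection; only gl_Position depends on them.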


Rookie

Updated on July 09, 2022

Comments

-

Rookie almost 2 years

I want to set the colors depending on each fragment's x, y, z position in the world.

I tried this in my fragment shader:

```glsl
varying vec4 verpos;

void main(){
    vec4 c;
    c.x = verpos.x;
    c.y = verpos.y;
    c.z = verpos.z;
    c.w = 1.0;
    gl_FragColor = c;
}
```

But it seems that the colors change depending on my camera angle/position. How do I make the coordinates independent of my camera position/angle?

Here's my vertex shader:

```glsl
varying vec4 verpos;

void main(){
    gl_Position = ftransform();
    verpos = gl_ModelViewMatrix*gl_Vertex;
}
```

Edit 2: changed the title; I want world coordinates, not screen coordinates!

Edit3: added my full code

-

Hannesh (over 13 years ago): Don't multiply by your gl_ModelViewMatrix.

-

Rookie (over 13 years ago): I can't get it to work; the colors still change when I change my camera position/rotation. See my current code in the edits.

-

kynnysmatto (over 13 years ago): @Rookie, sorry, I edited my answer a couple of times. Have you read and understood the latest version?

-

Rookie (over 13 years ago): "So just pass two different matrices to your vertex shader": how? Sorry, I'm quite a noob at GLSL and matrices; I have no clue how rendering works internally in OpenGL.

-

Rookie (over 13 years ago): I don't rotate my objects via glRotatef() (nor scale them, etc.). Is the model_matrix needed at all then? Damn, I thought it would be a simple function call, but it looks like I have to rewrite my whole rendering code...

-

Rookie (over 13 years ago): Once I have set the arrays, what call do I make in the fragment shader to get x, y, z?

-

Rookie (over 13 years ago): Oh well, I use glRotatef() once to rotate my world, of course, so I guess I need model_matrix too.

-

Rookie (over 13 years ago): Also, why do I have to call `glGetFloatv(GL_MODELVIEW_MATRIX, &array);` twice?

-

kynnysmatto (over 13 years ago): If you don't rotate or scale your objects but you do move them (glTranslate), you still need the model_matrix. If you did everything right, you don't need to call anything in the fragment shader; just read the variable verpos as you do in your code. The reason you call glGetFloatv twice is that the first time you get the view matrix, and the next time(s) you get the model matrix for each object. Call it every time after you have set up the transformation for the camera or an object with glTranslate, glRotate, glScale, gluLookAt, etc.

-

kynnysmatto (over 13 years ago): So if you have a camera and three objects, you would do the following: set up the camera transformation, call glGetFloatv, and save the result as the view matrix. Then call glLoadIdentity, set up the transformation for the first object, call glGetFloatv, and save the result as the model matrix. At that point the model matrix holds the model matrix for that particular object, and you can use it when rendering that object with its shaders. Then proceed to the next object and do the same thing. So glGetFloatv will be called four times in total (once for the camera and once for each object).
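The procedure in this comment, one capture for the camera and one per object, can be emulated on the CPU as a sketch. Nothing here calls OpenGL: translate() mimics glTranslatef's post-multiplication, get_matrix() stands in for glGetFloatv(GL_MODELVIEW_MATRIX, ...), and capture_frame and the other names are hypothetical.

```c
#include <string.h>

/* Hypothetical CPU stand-in for the modelview matrix (column-major, like
 * OpenGL). Nothing here calls OpenGL. */
typedef struct { float m[16]; } Mat4;

static int captures = 0; /* number of emulated glGetFloatv calls */

/* Like glLoadIdentity(). */
static void load_identity(Mat4 *cur)
{
    for (int i = 0; i < 16; ++i)
        cur->m[i] = (i % 5 == 0) ? 1.0f : 0.0f;
}

/* Like glTranslatef: current = current * T(x, y, z). */
static void translate(Mat4 *cur, float x, float y, float z)
{
    for (int r = 0; r < 4; ++r)
        cur->m[12 + r] += x * cur->m[r] + y * cur->m[4 + r] + z * cur->m[8 + r];
}

/* Like glGetFloatv(GL_MODELVIEW_MATRIX, array). */
static void get_matrix(const Mat4 *cur, float array[16])
{
    memcpy(array, cur->m, sizeof cur->m);
    ++captures;
}

/* One capture for the camera, then one per object after a reset. */
void capture_frame(const float cam[3], float obj[][3], int count,
                   float view[16], float models[][16])
{
    Mat4 cur;
    load_identity(&cur);
    translate(&cur, cam[0], cam[1], cam[2]);   /* camera transformation */
    get_matrix(&cur, view);                    /* -> view matrix */

    for (int i = 0; i < count; ++i) {
        load_identity(&cur);                   /* drop the camera part */
        translate(&cur, obj[i][0], obj[i][1], obj[i][2]);
        get_matrix(&cur, models[i]);           /* -> model matrix of object i */
        /* A real program would upload view and models[i] and draw object i here. */
    }
}
```

For a camera at z = -10 and three objects, capture_frame records four matrices, and each object's model matrix contains only that object's own translation.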

-

Rookie (over 13 years ago): So I need to do `gl_Position = gl_ProjectionMatrix * view_matrix * model_matrix * gl_Vertex;` and then again `verpos = gl_ProjectionMatrix * view_matrix * model_matrix * gl_Vertex;`?

-

kynnysmatto (over 13 years ago): Almost. That would give you the position of the vertex in clip coordinates (homogeneous coordinates that, after the perspective divide, give its 2D location on the screen). model_matrix transforms model coordinates to world coordinates. In turn, view_matrix transforms world coordinates to a coordinate system where the camera is at the origin. Furthermore, gl_ProjectionMatrix transforms camera coordinates to clip coordinates, which the perspective divide turns into normalized device coordinates. So to transform the model coordinates only as far as world coordinates, just use

`verpos = model_matrix * gl_Vertex;`

-

kynnysmatto (over 13 years ago): And you're right that you need to rewrite quite a lot of things, but it's actually good in the long run: having separate view and model matrices gives you a lot more freedom to do things more easily if you decide to write more shaders in the future.

-

kynnysmatto (over 13 years ago): Being able to write `verpos = model_matrix * gl_Vertex;` is the whole reason you wanted to separate the model matrix from the view matrix.

-

mpen (about 12 years ago): This will essentially give me the distance from the camera to a fragment? Sweet... that's exactly what I wanted.
