hadoop connection refused on port 9000

Solution 1

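Use an absolute path for this property instead of the ~/... value shown below (the NameNode log in the question shows that Hadoop does not expand ~), and make sure the hadoop user has permission to access the directory: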

<property>

<name>dfs.data.dir</name>

<value>~/hacking/hd-data/dn</value>

</property>

Also make sure you format HDFS after changing the path:

# hadoop namenode -format
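The path fix above can be sketched in shell. This is only a minimal sketch and not part of the original answer: `expand_home` is a hypothetical helper name, and it assumes the value starts with `~/` (it does not handle `~user`). It prints the absolute form of a value like the one in the question, which can then be pasted into hdfs-site.xml.

```shell
#!/bin/sh
# Hypothetical helper (not part of Hadoop): print an absolute path for a
# config value that starts with "~/", since Hadoop treats "~" literally.
expand_home() {
  case "$1" in
    "~")   printf '%s\n' "$HOME" ;;
    "~/"*) printf '%s\n' "$HOME/${1#??}" ;;   # drop the leading "~/" (2 chars)
    *)     printf '%s\n' "$1" ;;              # anything else is left unchanged
  esac
}

# Example with the value from the question; the result depends on $HOME.
dn_dir=$(expand_home '~/hacking/hd-data/dn')
echo "use this value in hdfs-site.xml: $dn_dir"
```

Creating the directory up front with `mkdir -p "$dn_dir"` and checking its owner would cover the permissions half of the advice.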

Solution 2

Modify core-site.xml, changing the value from

hdfs://localhost:9000

to

hdfs://YOUR_REAL_MASTER_IP_ADDRESS:9000

e.g.

hdfs://192.168.111.10:9000

Works for me!
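For reference, the property being edited can be generated as follows. This is a minimal sketch under stated assumptions: `core_site_property` is a hypothetical helper name, the property name `fs.default.name` is taken from the config posted in the question, and the address is the example from this answer.

```shell
#!/bin/sh
# Hypothetical helper: print the core-site.xml property that points
# clients at the NameNode on the given host and port.
core_site_property() {
  host=$1
  port=$2
  printf '<property>\n'
  printf '  <name>fs.default.name</name>\n'
  printf '  <value>hdfs://%s:%s</value>\n' "$host" "$port"
  printf '</property>\n'
}

# Example address from the answer above; substitute your master's IP.
core_site_property 192.168.111.10 9000
```

The printed element belongs inside the `<configuration>` element of core-site.xml; the daemons would need a restart to pick up the change.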

Solution 3

The short and sweet answer is that your NameNode service is not running. Simply run:

$HADOOP_HOME/bin/hdfs
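To confirm which daemon is missing before starting anything, a `jps` listing like the one posted in the comments can be checked. This is a minimal sketch: `has_daemon` is a hypothetical helper that reads `jps`-style "PID Name" lines on standard input, and the sample listing is illustrative only.

```shell
#!/bin/sh
# Hypothetical helper: read jps-style lines ("<pid> <name>") on stdin and
# succeed only if a process with exactly the given name is listed.
has_daemon() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Sample listing in the format jps prints (PIDs are illustrative).
sample='6634 DataNode
6866 SecondaryNameNode
8827 Jps'

if printf '%s\n' "$sample" | has_daemon NameNode; then
  echo "NameNode is running"
else
  echo "NameNode is not running"
fi
```

On a live system the same check would be `jps | has_daemon NameNode`, assuming the JDK's `jps` tool is on the PATH. The exact-name match keeps SecondaryNameNode from being mistaken for NameNode.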

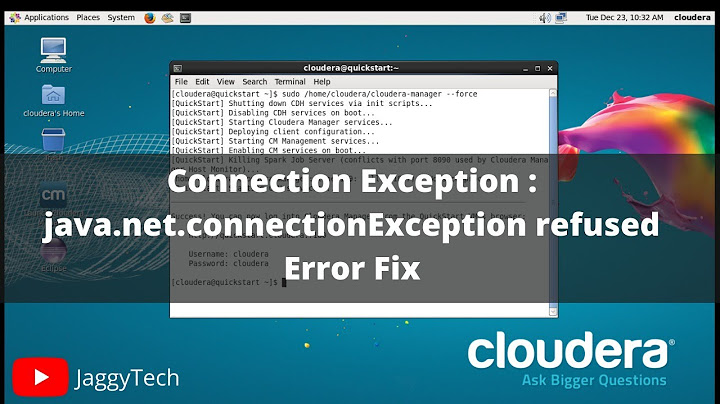

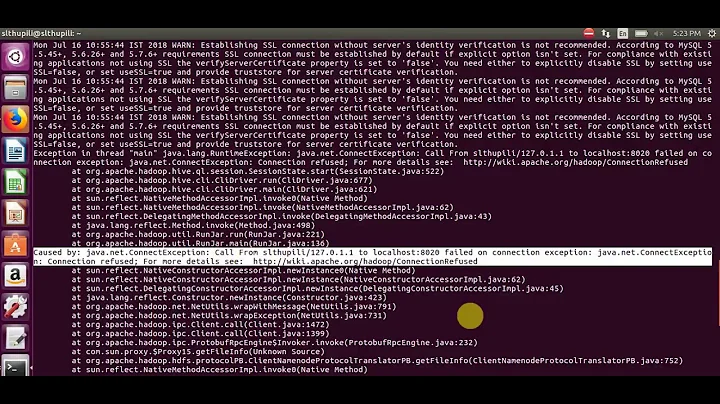

Related videos on Youtube

semaph0r

B.Sc in computer vision. Working as Backend Dev and DevOps Engineer. Experienced in robotics, real-time rendering, 3D computer graphics, image recognition, 3D reconstruction. Programming languages I prefer: C++, Python, C#, Go, Java

Updated on July 31, 2022

Comments

-

semaph0r almost 2 years

I want to set up a Hadoop cluster in pseudo-distributed mode for development. Trying to start the cluster fails because the connection on port 9000 is refused.

These are my configs (pretty standard):

core-site.xml:

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://localhost:9000</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>~/hacking/hd-data/tmp</value>
      </property>
      <property>
        <name>fs.checkpoint.dir</name>
        <value>~/hacking/hd-data/snn</value>
      </property>
    </configuration>

hdfs-site.xml:

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.name.dir</name>
        <value>~/hacking/hd-data/nn</value>
      </property>
      <property>
        <name>dfs.data.dir</name>
        <value>~/hacking/hd-data/dn</value>
      </property>
      <property>
        <name>dfs.permissions.supergroup</name>
        <value>hadoop</value>
      </property>
    </configuration>

hadoop-env.sh - here I changed the config to IPv4 mode only (see last line):

    # Set Hadoop-specific environment variables here.

    # The only required environment variable is JAVA_HOME. All others are
    # optional. When running a distributed configuration it is best to
    # set JAVA_HOME in this file, so that it is correctly defined on
    # remote nodes.

    # The java implementation to use. Required.
    export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64

    # Extra Java CLASSPATH elements. Optional.
    # export HADOOP_CLASSPATH=

    # The maximum amount of heap to use, in MB. Default is 1000.
    # export HADOOP_HEAPSIZE=2000

    # Extra Java runtime options. Empty by default.
    # export HADOOP_OPTS=-server

    # Command specific options appended to HADOOP_OPTS when specified
    export HADOOP_NAMENODE_OPTS="-Dcom.sun.management.jmxremote $HADOOP_NAMENODE_OPTS"
    export HADOOP_SECONDARYNAMENODE_OPTS="-Dcom.sun.management.jmxremote $HADOOP_SECONDARYNAMENODE_OPTS"
    export HADOOP_DATANODE_OPTS="-Dcom.sun.management.jmxremote $HADOOP_DATANODE_OPTS"
    export HADOOP_BALANCER_OPTS="-Dcom.sun.management.jmxremote $HADOOP_BALANCER_OPTS"
    export HADOOP_JOBTRACKER_OPTS="-Dcom.sun.management.jmxremote $HADOOP_JOBTRACKER_OPTS"
    # export HADOOP_TASKTRACKER_OPTS=
    # The following applies to multiple commands (fs, dfs, fsck, distcp etc)
    # export HADOOP_CLIENT_OPTS

    # Extra ssh options. Empty by default.
    # export HADOOP_SSH_OPTS="-o ConnectTimeout=1 -o SendEnv=HADOOP_CONF_DIR"

    # Where log files are stored. $HADOOP_HOME/logs by default.
    # export HADOOP_LOG_DIR=${HADOOP_HOME}/logs

    # File naming remote slave hosts. $HADOOP_HOME/conf/slaves by default.
    # export HADOOP_SLAVES=${HADOOP_HOME}/conf/slaves

    # host:path where hadoop code should be rsync'd from. Unset by default.
    # export HADOOP_MASTER=master:/home/$USER/src/hadoop

    # Seconds to sleep between slave commands. Unset by default. This
    # can be useful in large clusters, where, e.g., slave rsyncs can
    # otherwise arrive faster than the master can service them.
    # export HADOOP_SLAVE_SLEEP=0.1

    # The directory where pid files are stored. /tmp by default.
    # export HADOOP_PID_DIR=/var/hadoop/pids

    # A string representing this instance of hadoop. $USER by default.
    # export HADOOP_IDENT_STRING=$USER

    # The scheduling priority for daemon processes. See 'man nice'.
    # export HADOOP_NICENESS=10

    # Disabling IPv6 for HADOOP
    export HADOOP_OPTS=-Djava.net.preferIPv4Stack=true

/etc/hosts:

    127.0.0.1 localhost zaphod

    # The following lines are desirable for IPv6 capable hosts
    ::1 ip6-localhost ip6-loopback
    fe00::0 ip6-localnet
    ff00::0 ip6-mcastprefix
    ff02::1 ip6-allnodes
    ff02::2 ip6-allrouters

But at the beginning, after calling

    ./start-dfs.sh

the following lines are in the log files:

hadoop-pschmidt-datanode-zaphod.log:

    2013-08-19 21:21:59,430 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: STARTUP_MSG:
    /************************************************************
    STARTUP_MSG: Starting DataNode
    STARTUP_MSG: host = zaphod/127.0.1.1
    STARTUP_MSG: args = []
    STARTUP_MSG: version = 0.20.204.0
    STARTUP_MSG: build = git://hrt8n35.cc1.ygridcore.net/ on branch branch-0.20-security-204 -r 65e258bf0813ac2b15bb4c954660eaf9e8fba141; compiled by 'hortonow' on Thu Aug 25 23:25:52 UTC 2011
    ************************************************************/
    2013-08-19 21:22:03,950 INFO org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties
    2013-08-19 21:22:04,052 INFO org.apache.hadoop.metrics2.impl.MetricsSourceAdapter: MBean for source MetricsSystem,sub=Stats registered.
    2013-08-19 21:22:04,064 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).
    2013-08-19 21:22:04,065 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: DataNode metrics system started
    2013-08-19 21:22:07,054 INFO org.apache.hadoop.metrics2.impl.MetricsSourceAdapter: MBean for source ugi registered.
    2013-08-19 21:22:07,060 WARN org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Source name ugi already exists!
    2013-08-19 21:22:08,709 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 0 time(s).
    2013-08-19 21:22:09,710 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 1 time(s).
    2013-08-19 21:22:10,711 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 2 time(s).
    2013-08-19 21:22:11,712 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 3 time(s).
    2013-08-19 21:22:12,712 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 4 time(s).
    2013-08-19 21:22:13,713 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 5 time(s).
    2013-08-19 21:22:14,714 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 6 time(s).
    2013-08-19 21:22:15,714 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 7 time(s).
    2013-08-19 21:22:16,715 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 8 time(s).
    2013-08-19 21:22:17,716 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 9 time(s).
    2013-08-19 21:22:17,717 INFO org.apache.hadoop.ipc.RPC: Server at localhost/127.0.0.1:9000 not available yet, Zzzzz...

hadoop-pschmidt-namenode-zaphod.log:

    2013-08-19 21:21:59,443 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: STARTUP_MSG:
    /************************************************************
    STARTUP_MSG: Starting NameNode
    STARTUP_MSG: host = zaphod/127.0.1.1
    STARTUP_MSG: args = []
    STARTUP_MSG: version = 0.20.204.0
    STARTUP_MSG: build = git://hrt8n35.cc1.ygridcore.net/ on branch branch-0.20-security-204 -r 65e258bf0813ac2b15bb4c954660eaf9e8fba141; compiled by 'hortonow' on Thu Aug 25 23:25:52 UTC 2011
    ************************************************************/
    2013-08-19 21:22:03,950 INFO org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties
    2013-08-19 21:22:04,052 INFO org.apache.hadoop.metrics2.impl.MetricsSourceAdapter: MBean for source MetricsSystem,sub=Stats registered.
    2013-08-19 21:22:04,064 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).
    2013-08-19 21:22:04,064 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system started
    2013-08-19 21:22:06,050 INFO org.apache.hadoop.metrics2.impl.MetricsSourceAdapter: MBean for source ugi registered.
    2013-08-19 21:22:06,056 WARN org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Source name ugi already exists!
    2013-08-19 21:22:06,095 INFO org.apache.hadoop.metrics2.impl.MetricsSourceAdapter: MBean for source jvm registered.
    2013-08-19 21:22:06,097 INFO org.apache.hadoop.metrics2.impl.MetricsSourceAdapter: MBean for source NameNode registered.
    2013-08-19 21:22:06,232 INFO org.apache.hadoop.hdfs.util.GSet: VM type = 64-bit
    2013-08-19 21:22:06,234 INFO org.apache.hadoop.hdfs.util.GSet: 2% max memory = 17.77875 MB
    2013-08-19 21:22:06,235 INFO org.apache.hadoop.hdfs.util.GSet: capacity = 2^21 = 2097152 entries
    2013-08-19 21:22:06,235 INFO org.apache.hadoop.hdfs.util.GSet: recommended=2097152, actual=2097152
    2013-08-19 21:22:06,748 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: fsOwner=pschmidt
    2013-08-19 21:22:06,748 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: supergroup=hadoop
    2013-08-19 21:22:06,748 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: isPermissionEnabled=true
    2013-08-19 21:22:06,754 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: dfs.block.invalidate.limit=100
    2013-08-19 21:22:06,768 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)
    2013-08-19 21:22:07,262 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Registered FSNamesystemStateMBean and NameNodeMXBean
    2013-08-19 21:22:07,322 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: Caching file names occuring more than 10 times
    2013-08-19 21:22:07,326 INFO org.apache.hadoop.hdfs.server.common.Storage: Storage directory /home/pschmidt/hacking/hadoop-0.20.204.0/~/hacking/hd-data/nn does not exist.
    2013-08-19 21:22:07,329 ERROR org.apache.hadoop.hdfs.server.namenode.FSNamesystem: FSNamesystem initialization failed.
    org.apache.hadoop.hdfs.server.common.InconsistentFSStateException: Directory /home/pschmidt/hacking/hadoop-0.20.204.0/~/hacking/hd-data/nn is in an inconsistent state: storage directory does not exist or is not accessible.
        at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:291)
        at org.apache.hadoop.hdfs.server.namenode.FSDirectory.loadFSImage(FSDirectory.java:97)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.initialize(FSNamesystem.java:379)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.<init>(FSNamesystem.java:353)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:254)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:434)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1153)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1162)
    2013-08-19 21:22:07,331 ERROR org.apache.hadoop.hdfs.server.namenode.NameNode: org.apache.hadoop.hdfs.server.common.InconsistentFSStateException: Directory /home/pschmidt/hacking/hadoop-0.20.204.0/~/hacking/hd-data/nn is in an inconsistent state: storage directory does not exist or is not accessible.
        at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:291)
        at org.apache.hadoop.hdfs.server.namenode.FSDirectory.loadFSImage(FSDirectory.java:97)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.initialize(FSNamesystem.java:379)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.<init>(FSNamesystem.java:353)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:254)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:434)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1153)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1162)
    2013-08-19 21:22:07,332 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at zaphod/127.0.1.1
    ************************************************************/

After reformatting HDFS, the following output is displayed:

    13/08/19 21:50:21 INFO namenode.NameNode: STARTUP_MSG:
    /************************************************************
    STARTUP_MSG: Starting NameNode
    STARTUP_MSG: host = zaphod/127.0.0.1
    STARTUP_MSG: args = [-format]
    STARTUP_MSG: version = 0.20.204.0
    STARTUP_MSG: build = git://hrt8n35.cc1.ygridcore.net/ on branch branch-0.20-security-204 -r 65e258bf0813ac2b15bb4c954660eaf9e8fba141; compiled by 'hortonow' on Thu Aug 25 23:25:52 UTC 2011
    ************************************************************/
    Re-format filesystem in ~/hacking/hd-data/nn ? (Y or N) Y
    13/08/19 21:50:26 INFO util.GSet: VM type = 64-bit
    13/08/19 21:50:26 INFO util.GSet: 2% max memory = 17.77875 MB
    13/08/19 21:50:26 INFO util.GSet: capacity = 2^21 = 2097152 entries
    13/08/19 21:50:26 INFO util.GSet: recommended=2097152, actual=2097152
    13/08/19 21:50:27 INFO namenode.FSNamesystem: fsOwner=pschmidt
    13/08/19 21:50:27 INFO namenode.FSNamesystem: supergroup=hadoop
    13/08/19 21:50:27 INFO namenode.FSNamesystem: isPermissionEnabled=true
    13/08/19 21:50:27 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100
    13/08/19 21:50:27 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)
    13/08/19 21:50:27 INFO namenode.NameNode: Caching file names occuring more than 10 times
    13/08/19 21:50:27 INFO common.Storage: Image file of size 110 saved in 0 seconds.
    13/08/19 21:50:28 INFO common.Storage: Storage directory ~/hacking/hd-data/nn has been successfully formatted.
    13/08/19 21:50:28 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at zaphod/127.0.0.1
    ************************************************************/

Using

    netstat -lpten | grep java

gives:

    tcp 0 0 0.0.0.0:50301 0.0.0.0:* LISTEN 1000 50995 9875/java
    tcp 0 0 0.0.0.0:35471 0.0.0.0:* LISTEN 1000 51775 9639/java
    tcp6 0 0 :::2181 :::* LISTEN 1000 20841 2659/java
    tcp6 0 0 :::36743 :::* LISTEN 1000 20524 2659/java

Using

    netstat -lpten | grep 9000

returns nothing, so I assume that no application is bound to this designated port after all.

What else can I look for to get my HDFS up and running? Don't hesitate to ask for further logs and config files.

Thanks in advance.

-

Andrew Martin over 10 years

Have you modified your mapred-site.xml as well?

-

Andrew Martin over 10 years

Also, do you have appropriate permissions to access Hadoop?

-

erencan over 10 years

What is the result of the jps command on the system?

-

semaph0r over 10 years

    7211 TaskTracker
    6979 JobTracker
    6634 DataNode
    6404 NameNode
    8827 Jps
    6866 SecondaryNameNode
    7886 HRegionServer
    2775 QuorumPeerMain

-

semaph0r over 10 years

Works for me! I think the relative path caused the problems. Hadoop seems to be somewhat fragile in some cases... Thanks!

-

Yongwei Wu over 7 years

I had a similar problem, and I had already done the format some time ago. The weird thing is that I found I needed to format it again today!

-

Mooncrater about 5 years

telnet localhost 9000 still refuses connection.

-

Luchao Qi over 4 years

BTW, I'm working on Ubuntu 18.04.2 LTS / Hadoop 3.1.2. After struggling for 2 days, it finally works.