Hive / S3 error: "No FileSystem for scheme: s3"

The problem is discussed here:

https://github.com/ramhiser/spark-kubernetes/issues/3

You need to add the hadoop-aws and AWS SDK jars to the Hive library path. That way Hive can recognize the s3, s3n, and s3a file schemes.

Hope it helps.

EDIT1:

hadoop-aws-2.7.4 contains the implementations for interacting with those file systems. I inspected the jar, and it has all the classes needed to handle those schemes.

Hadoop maps each URI scheme to a file system implementation class under org.apache.hadoop.fs.

The following packages are implemented in that jar:

org.apache.hadoop.fs.[s3|s3a|s3native]

The only thing still missing is that the library is not being added to the Hive library path. Is there any way you can verify that the jar is actually on the Hive library path?
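One way to check this is to look for the jar by name on the classpath. The sketch below is only an illustration: the function names expand_classpath and find_on_classpath are made up for this example, and it assumes a POSIX shell inside the container. It splits a colon-separated classpath, expands Java-style "dir/*" entries, and prints the entries that match a pattern.

```shell
# Print one classpath entry per line, expanding Java-style "dir/*" entries
# into the jar files found in that directory.
# $1 = colon-separated classpath
expand_classpath() {
  printf '%s\n' "$1" | tr ':' '\n' | while IFS= read -r entry; do
    case $entry in
      */\*)
        dir=${entry%/\*}            # strip the trailing "/*"
        for jar in "$dir"/*.jar; do
          if [ -e "$jar" ]; then
            printf '%s\n' "$jar"
          fi
        done
        ;;
      ?*)
        printf '%s\n' "$entry"      # plain jar or directory entry
        ;;
    esac
  done
}

# Print the classpath entries that match a pattern.
# Exit status is non-zero when nothing matches.
# $1 = pattern (for example hadoop-aws), $2 = colon-separated classpath
find_on_classpath() {
  expand_classpath "$2" | grep -- "$1"
}
```

For example, running find_on_classpath hadoop-aws "$(hadoop classpath)" inside the Hive container should print the hadoop-aws jar if Hadoop can see it. No output means the jar is not on that classpath. Note that this only checks the Hadoop classpath; a jar added with ADD JAR is only visible to the current Hive session.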

EDIT2:

Reference for setting the library path:

How can I access S3/S3n from a local Hadoop 2.6 installation?
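A minimal sketch of that kind of setting, under some assumptions: the helper name jars_csv is hypothetical, /opt/hadoop is only a placeholder default for HADOOP_HOME, and the jars are assumed to sit in share/hadoop/tools/lib as they do in a stock Hadoop 2.7 distribution. hadoop-aws 2.7.x was built against aws-java-sdk 1.7.4, so that jar has to be picked up as well. The result is exported as HIVE_AUX_JARS_PATH, which the Hive launcher script reads at startup as far as I know.

```shell
# Build a comma-separated list of the jars in a directory whose file names
# start with one of the given prefixes.
# $1 = directory, remaining arguments = file name prefixes
jars_csv() {
  dir=$1
  shift
  out=
  for prefix in "$@"; do
    for jar in "$dir"/"$prefix"*.jar; do
      if [ -e "$jar" ]; then
        out=${out:+$out,}$jar       # append with a comma separator
      fi
    done
  done
  printf '%s\n' "$out"
}

# Assumption: /opt/hadoop is a placeholder, adjust it to the real install path.
TOOLS_LIB=${HADOOP_HOME:-/opt/hadoop}/share/hadoop/tools/lib

# Make the S3 file system classes and the AWS SDK visible to Hive.
HIVE_AUX_JARS_PATH=$(jars_csv "$TOOLS_LIB" hadoop-aws aws-java-sdk)
export HIVE_AUX_JARS_PATH
```

The Hive services have to be restarted after changing this. Since the original error is a MetaException, the metastore process most likely needs the jars too, not just the Hive client session.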

Xaphanius

Updated on June 04, 2022

Comments

-

Xaphanius almost 2 years

I am running Hive from a container (this image: https://hub.docker.com/r/bde2020/hive/) on my local computer.

I am trying to create a Hive table stored as a CSV in S3 with the following command:

CREATE EXTERNAL TABLE local_test (name STRING)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' LINES TERMINATED BY '\n'
STORED AS TEXTFILE
LOCATION 's3://mybucket/local_test/';

However, I am getting the following error:

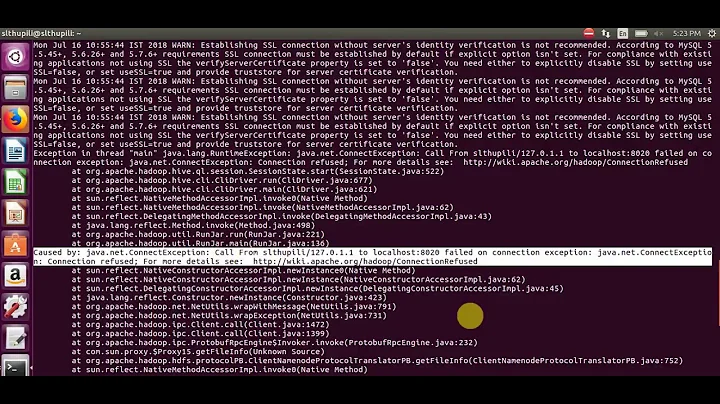

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:Got exception: java.io.IOException No FileSystem for scheme: s3)

What is causing it? Do I need to set up something else?

Note: I am able to run

aws s3 ls mybucket

and also to create Hive tables in another directory, like /tmp/.

-

Xaphanius about 6 years

I am not using Spark, but I will try to reference this AWS SDK jar in the Hive library path. Could you explain a little bit more about it? I downloaded hadoop-aws-2.7.4.jar and added it to Hive using ADD JAR /path_to_jar, but it still doesn't work...

-

Usman Azhar about 6 years

Did you try changing it to s3a instead of s3, i.e. s3a://mybucket/local_test/?

-

Xaphanius about 6 years

Trying with s3a gives

FAILED: SemanticException java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found

-

Kannaiyan about 6 years

Added a reference on how to add the path to Hadoop.

-

Xaphanius about 6 years

Thanks for your help, but I am still having the same problem. I tried to add the jar directly in Hive, but it didn't work. However, I am able to run

hadoop fs -ls "s3n://ACCESS_KEY:SECRET_KEY@mybucket/"