How and when to use /dev/shm for efficiency?

You don't use /dev/shm. It exists so that the POSIX C library can provide shared memory support through the POSIX API (shm_open() and related calls), not so that you can poke at stuff in there.
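To illustrate going through that API instead of touching /dev/shm directly, here is a minimal sketch in Python. It assumes Linux and CPython 3.8+, where `multiprocessing.shared_memory.SharedMemory` is built on `shm_open()`/`shm_unlink()`; with glibc the segment appears as a file under /dev/shm while it exists. The helper name `roundtrip` is hypothetical, and the second handle attached by name stands in for another process.

```python
from multiprocessing import shared_memory


def roundtrip(payload: bytes) -> bytes:
    """Create a POSIX shared memory segment, write `payload` into it, and read it
    back through a second handle attached by name, as another process would.
    `payload` must be non-empty (a zero-size segment is rejected)."""
    # create=True opens a new segment (roughly shm_open with O_CREAT | O_EXCL)
    owner = shared_memory.SharedMemory(create=True, size=len(payload))
    try:
        owner.buf[:len(payload)] = payload
        # Another process would only need owner.name to attach to the same memory
        reader = shared_memory.SharedMemory(name=owner.name)
        try:
            return bytes(reader.buf[:len(payload)])
        finally:
            reader.close()
    finally:
        owner.close()
        owner.unlink()  # shm_unlink: removes the name, i.e. the /dev/shm entry on Linux
```

In a real program the creator would pass `owner.name` to the other process, which attaches with `SharedMemory(name=...)`; both map the same pages, so the data is not duplicated per process.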

If you want an in-memory filesystem of your very own, you can mount one wherever you want it.

For example: `mount -t tmpfs tmpfs /mnt/tmp`

A Linux tmpfs is a temporary filesystem that exists only in RAM. It is implemented as file cache with no disk storage behind it. Under memory pressure, its contents can be written out to swap. If you don't want it to use swap, you can use a ramfs instead, although a ramfs has no size limit, so writing too much to it can exhaust RAM.
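To check whether a given directory (such as /dev/shm, or a tmpfs you mounted yourself) really lives on tmpfs, you can look it up in /proc/mounts. The following is a minimal, Linux-specific sketch; the function name `fs_type` and its optional `mounts_text` parameter (added so it can be tested without a real system) are hypothetical.

```python
import os
import re
from typing import Optional


def _unescape(field: str) -> str:
    # /proc/mounts encodes space, tab, newline and backslash as octal escapes (e.g. \040)
    return re.sub(r"\\([0-7]{3})", lambda m: chr(int(m.group(1), 8)), field)


def fs_type(path: str, mounts_text: Optional[str] = None) -> Optional[str]:
    """Return the filesystem type (e.g. 'tmpfs', 'ext4') of the mount containing `path`.
    Symlinks are not resolved; call os.path.realpath() on `path` first if that matters."""
    if mounts_text is None:
        with open("/proc/mounts") as f:  # Linux-specific
            mounts_text = f.read()
    target = os.path.abspath(path)
    best_point, best_type = "", None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        point, fstype = _unescape(fields[1]), fields[2]
        prefix = point.rstrip("/") + "/"
        inside = target == point or target.startswith(prefix)
        # Prefer the longest mount point; on ties the later (stacked) mount wins
        if inside and len(point) >= len(best_point):
            best_point, best_type = point, fstype
    return best_type
```

On a typical Linux system `fs_type("/dev/shm")` reports `tmpfs`, while a directory under /home usually reports a disk filesystem such as `ext4`.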

I don't know where you got the idea of using /dev/shm for efficiency in reading files, because that isn't what it does at all.

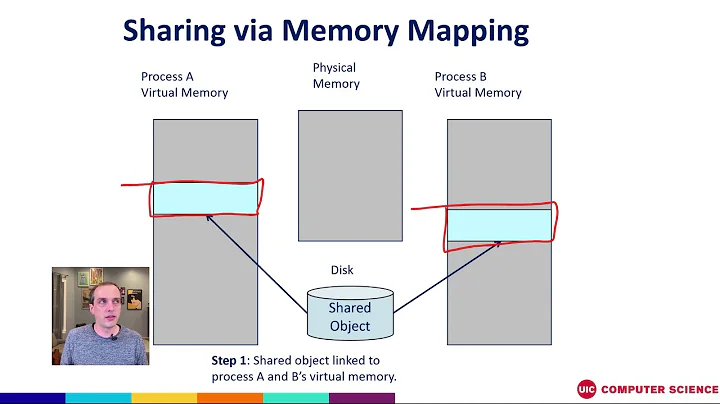

Maybe you were thinking of using memory mapping, via the mmap system call?
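A minimal Python sketch of the mmap approach, assuming a Linux/POSIX system and a file on an ordinary filesystem. The function name `read_random_chunks` and its parameters (`count`, `workers`, `seed`) are hypothetical; the 1.5MB chunk size is taken from the question. Mapping a file does not load it into RAM: pages are read in on demand through the page cache, and processes that map the same file share those cached pages, so on a 64-bit system a file larger than RAM can still be mapped.

```python
import mmap
import random
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

CHUNK = 1_500_000  # ~1.5 MB, the chunk size mentioned in the question


def read_random_chunks(path: str, count: int, chunk: int = CHUNK,
                       workers: int = 8, seed: int = 0) -> List[Tuple[int, bytes]]:
    """Map `path` read-only once, then let worker threads copy `count` random
    chunks out of the shared mapping. Returns (offset, data) pairs."""
    rng = random.Random(seed)
    with open(path, "rb") as f:
        # Length 0 maps the whole file; raises ValueError if the file is empty
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            size = len(mm)
            # Pick offsets so each chunk fits in the file (or starts at 0 if the file is small)
            offsets = [rng.randrange(max(size - chunk, 0) + 1) for _ in range(count)]

            def grab(off: int) -> Tuple[int, bytes]:
                # Slicing copies the bytes out; pages not yet cached are faulted in on demand
                return off, mm[off:off + chunk]

            with ThreadPoolExecutor(max_workers=workers) as pool:
                return list(pool.map(grab, offsets))
```

One caveat for this Python version: in CPython the copy out of the mapping happens with the GIL held, so a page fault in one thread stalls the other Python threads while it is serviced.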

Read this answer, which covers a lot of tmpfs information: https://superuser.com/a/1030777/4642


ilija139


Updated on June 04, 2022

Comments


ilija139 almost 2 years

How is /dev/shm more efficient than writing the file on the regular file system? As far as I know, /dev/shm is also a space on the HDD, so the read/write speeds are the same.

My problem is that I have a 96GB file and only 64GB of RAM (+ 64GB swap). Multiple threads from the same process need to read small random chunks of the file (about 1.5MB each).

Is /dev/shm a good use case for this?

Will it be faster than opening the file in read-only mode from /home and then passing it to the threads so they can read the required random chunks?
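The approach described in the question (open the file read-only once and let the threads read chunks) can be sketched with `os.pread`, which reads at an explicit offset and therefore does not race on a shared file position. This is a minimal sketch assuming a POSIX system; the helper names `pread_exact` and `read_chunks` are hypothetical.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import List


def pread_exact(fd: int, length: int, offset: int) -> bytes:
    """Read up to `length` bytes at `offset`, looping because pread may return
    fewer bytes than requested. Stops early at end of file."""
    parts = []
    while length > 0:
        buf = os.pread(fd, length, offset)
        if not buf:  # end of file
            break
        parts.append(buf)
        offset += len(buf)
        length -= len(buf)
    return b"".join(parts)


def read_chunks(path: str, offsets: List[int], chunk: int = 1_500_000,
                workers: int = 8) -> List[bytes]:
    """Open the file once, read-only, and share the descriptor across threads.
    Each pread carries its own offset, so threads never contend for a file position."""
    fd = os.open(path, os.O_RDONLY)
    try:
        with ThreadPoolExecutor(max_workers=workers) as pool:
            return list(pool.map(lambda off: pread_exact(fd, chunk, off), offsets))
    finally:
        os.close(fd)
```

In CPython, `os.pread` releases the GIL while the system call runs, so several threads can have reads in flight at once. Chunks that are already in the page cache are served from memory either way.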

Barmar about 7 years

/dev/shm is not on the hard disk. It's a virtual filesystem implemented in memory; that's why it's faster.

ilija139 about 7 years

@Barmar Right, just realised that. Then it makes sense to use it, especially since it can be shared by multiple processes, so it won't take 96GB per process that wants to use it.
