How big should batch size and number of epochs be when fitting a model in Keras?

Solution 1

Since you have a pretty small dataset (~ 1000 samples), you would probably be safe using a batch size of 32, which is pretty standard. It won't make a huge difference for your problem unless you're training on hundreds of thousands or millions of observations.

To answer your questions on Batch Size and Epochs:

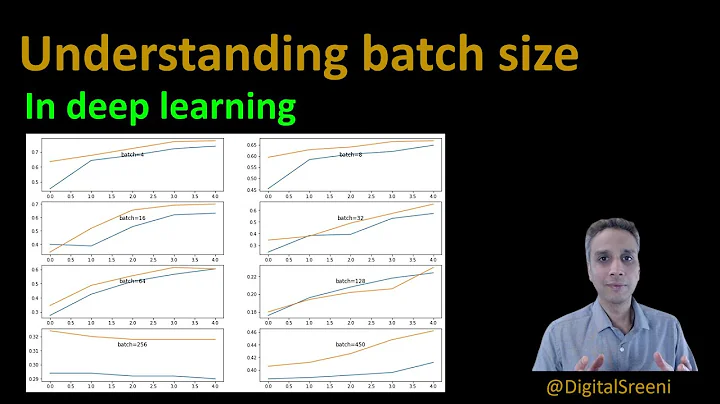

In general, larger batch sizes result in faster progress through each epoch of training, but don't always converge as fast. Smaller batch sizes train more slowly, but can converge in fewer epochs. It's definitely problem dependent.
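To make the trade-off concrete, here is a minimal sketch that counts weight updates per epoch for a few batch sizes. It assumes the 970 training samples quoted in the question and that the last, partial batch is kept; the helper name `steps_per_epoch` is made up for illustration.

```python
import math


def steps_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of weight updates in one full pass over the data.

    Assumes the final, smaller batch is still used (hence the ceiling).
    """
    return math.ceil(num_samples / batch_size)


NUM_TRAIN = 970  # training-set size quoted in the question

# Fewer, larger batches mean fewer updates per epoch; a batch size equal
# to the dataset length gives exactly one update per epoch.
for batch_size in (1, 32, 128, NUM_TRAIN):
    print(f"batch_size={batch_size:>4}: {steps_per_epoch(NUM_TRAIN, batch_size)} updates per epoch")
```

Whatever the batch size, every epoch still covers every sample once; only the number of updates changes.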

In general, the models improve with more epochs of training, to a point. They'll start to plateau in accuracy as they converge. Try something like 50 and plot number of epochs (x axis) vs. accuracy (y axis). You'll see where it levels out.
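If you would rather not judge the plateau by eye, a rough sketch like the one below can find it from the per-epoch accuracies. The function name, the `min_gain` and `window` thresholds, and the sample numbers are all assumptions chosen for illustration. In Keras the list would typically come from `history.history["val_accuracy"]` (or `"val_acc"` in older versions), where `history` is what `model.fit` returns.

```python
def plateau_epoch(accuracies, min_gain=0.001, window=5):
    """Return the 1-based epoch from which accuracy improves by less than
    `min_gain` over the next `window` epochs, or None if it never levels out.
    """
    for i in range(len(accuracies) - window):
        if accuracies[i + window] - accuracies[i] < min_gain:
            return i + 1
    return None


# Made-up validation accuracies, one value per epoch.
val_acc = [0.60, 0.70, 0.78, 0.83, 0.86, 0.88, 0.89,
           0.895, 0.896, 0.896, 0.896, 0.896, 0.896, 0.896]

print(plateau_epoch(val_acc, min_gain=0.005, window=3))
```

The thresholds are a judgment call: a noisy validation curve needs a wider window than a smooth one.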

What is the type and/or shape of your data? Are these images, or just tabular data? This is an important detail.

Solution 2

Great answers above. Everyone gave good input.

Ideally, this is the sequence of the batch sizes that should be used:

{1, 2, 4, 8, 16} - slow

{ [32, 64], [128, 256] } - good starters

[32, 64] - CPU

[128, 256] - GPU for more boost

Solution 3

I use Keras to perform non-linear regression on speech data. Each of my speech files gives me features in a text file of 25000 rows, with each row containing 257 real-valued numbers. I use a batch size of 100 and 50 epochs to train a Sequential model in Keras with 1 hidden layer. After 50 epochs of training, it converges quite well to a low val_loss.

Solution 4

I used Keras to perform non-linear regression for market mix modelling. I got the best results with a batch size of 32 and epochs = 100 while training a Sequential model in Keras with 3 hidden layers. Generally, a batch size of 32 or 25 is good, with epochs = 100, unless you have a large dataset. In the case of a large dataset, you can go with a batch size of 10 and between 50 and 100 epochs. Again, these figures have worked fine for me.

Solution 5

tf.keras.callbacks.EarlyStopping

With Keras you can make use of tf.keras.callbacks.EarlyStopping, which automatically stops training once the monitored loss has stopped improving. The patience parameter sets how many epochs with no improvement are allowed before training stops.

It helps to find the plateau, from which you can go on to refine the number of epochs, or it may even suffice to reach your goal without having to deal with epochs at all.
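As a rough illustration of the patience idea, the first function below is a simplified pure-Python model of patience-based stopping; it is not Keras's actual implementation. The second function sketches how the real callback might be wired into `model.fit`. The names `stopped_epoch` and `fit_with_early_stopping`, and the values `patience=10`, `validation_split=0.2`, `batch_size=32` and `max_epochs=500`, are assumptions chosen for the example.

```python
def stopped_epoch(val_losses, patience=3, min_delta=0.0):
    """Simplified model of patience-based early stopping.

    Returns the 1-based epoch at which training would stop, or None if
    the loss improves often enough that every epoch is used.
    """
    best = float("inf")
    wait = 0  # consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


def fit_with_early_stopping(model, x, y, max_epochs=500):
    """Hypothetical wrapper; `model` must be an already compiled tf.keras model."""
    import tensorflow as tf  # imported lazily so stopped_epoch runs without TensorFlow

    early_stop = tf.keras.callbacks.EarlyStopping(
        monitor="val_loss",         # quantity to watch
        patience=10,                # epochs without improvement to tolerate
        restore_best_weights=True,  # roll back to the best epoch seen
    )
    return model.fit(
        x, y,
        validation_split=0.2,       # hold out data so val_loss exists
        epochs=max_epochs,          # generous upper bound; the callback ends training earlier
        batch_size=32,
        callbacks=[early_stop],
    )


# Made-up validation losses, one value per epoch.
losses = [1.0, 0.8, 0.7, 0.65, 0.66, 0.64, 0.65, 0.66, 0.67, 0.60]
print(stopped_epoch(losses, patience=3))
```

With this setup the epoch count becomes an upper bound rather than a value to tune, which is the point the answer makes.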

Comments

-

pr338 about 2 years

I am training on 970 samples and validating on 243 samples.

How big should batch size and number of epochs be when fitting a model in Keras to optimize the val_acc? Is there any sort of rule of thumb to use based on data input size?

-

daniel451 over 8 years: I would say this highly depends on your data. If you are just playing around with some simple task, like XOR classifiers, a few hundred epochs with a batch size of 1 is enough to get something like 99.9% accuracy. For MNIST I mostly got reasonable results with a batch size of around 10 to 100 and fewer than 100 epochs. Without details about your problem, your architecture, your learning rules / cost functions, your data and so on, one cannot answer this accurately.

kRazzy R almost 6 years: Is there a way to include all the data in every training epoch?

Wickkiey about 5 years: @kRazzyR Actually, every training epoch already uses all the data, split into batches. If you want to include all the data in a single step, use a batch size equal to the length of the data.

-

-

BallpointBen almost 7 years: The batch size should pretty much be as large as possible without exceeding memory. The only other reason to limit batch size is that if you concurrently fetch the next batch and train the model on the current batch, you may be wasting time fetching the next batch (because it's so large and the memory allocation may take a significant amount of time) when the model has finished fitting to the current batch, in which case it might be better to fetch batches more quickly to reduce model downtime.

-

Peter over 5 years: I often see values for batch size which are a multiple of 8. Is there a formal reason for this choice?

Ian Rehwinkel about 5 years: For me, these values were very bad. I ended up using a batch size of 3000 for my model, which is way more than you proposed here.

-

Anurag Gupta about 5 years: The batch size should preferably be a power of 2. stackoverflow.com/questions/44483233/…

-

Markus almost 5 years: Hmm, is there any source for what you state here as a given fact?

Beltino Goncalves almost 5 years: Here's a cited source using these batch sizes on a CNN model. Hope this is of use to you. Cheers. arxiv.org/pdf/1606.02228.pdf#page=3&zoom=150,0,125

IanCZane about 4 years: This seems to be a gross oversimplification. Batch size will generally depend on the per-item complexity of your input set as well as the amount of memory you're working with. In my experience, I get the best results by gradually scaling my batch size. For me, I've had the best luck starting with 1 and doubling my batch size every n hours of training, with n depending on the complexity or size of the dataset, until I reach the memory limits of my machine, then continuing to train on the largest batch size possible for as long as possible.

Dom045 over 3 years: Does a larger number of epochs result in overfitting? Does having more data and fewer epochs result in underfitting?

-

Prasanjit Rath over 3 years: "For large dataset, batch size of 10...": isn't the correct understanding that the larger the batch size, the better, since gradients are averaged over a batch?

-

Recap_Hessian almost 3 years: @Peter This may be helpful: stackoverflow.com/questions/44483233/…

-

Kennet Celeste over 2 years: big ouch ......

-

J R almost 2 years: I concur with @alexanderdavide. The early stopping callback should always be used; then one doesn't have to deal with the number of epochs.