How can I cat multiple files together into one without an intermediary file?

Solution 1

If you don't need random access into the final big file (i.e., you just read it through once from start to finish), you can make your hundreds of intermediate files appear as one. Where you would normally do

$ consume big-file.txt

instead do

$ consume <(cat file1 file2 ... fileN)

This uses process substitution (a Bash feature, also available in zsh and ksh, but not in plain POSIX sh), sometimes also called "anonymous named pipes."
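A minimal runnable sketch of the same idea, assuming bash. Here `consume` is a hypothetical stand-in that counts the lines in the file named by its argument; swap in the real program. The sample files stand in for file1 ... fileN.

```shell
#!/usr/bin/env bash
# Requires bash (or zsh/ksh93): <(...) is process substitution, not POSIX sh.
set -eu

# Hypothetical stand-in for the real program: it takes a file name
# argument and reads that file once, front to back.
consume() { wc -l < "$1"; }

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir"

# Three small sample inputs standing in for file1 ... fileN.
printf 'a\nb\n' > file1
printf 'c\n'    > file2
printf 'd\ne\n' > file3

# consume receives a single readable path (e.g. /dev/fd/63) that yields
# the concatenated contents; no big-file.txt is ever written to disk.
lines=$(consume <(cat file1 file2 file3))
echo "total lines: $lines"
```

If the real program can read standard input instead of a file name, the plain pipe `cat file1 file2 ... fileN | consume` does the same job and does not need bash.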

You may also be able to save time and space by splitting your input and doing the processing at the same time; GNU Parallel has a --pipe switch that will do precisely this. It can also reassemble the outputs back into one big file, potentially using less scratch space as it only needs to keep number-of-cores pieces on disk at once. If you are literally running your hundreds of processes at the same time, Parallel will greatly improve your efficiency by letting you tune the amount of parallelism to your machine. I highly recommend it.

Solution 2

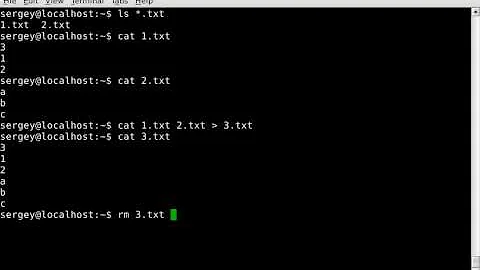

When concatenating files back together, you could delete the small files as they get appended:

for file in file1 file2 file3 ... fileN; do
    cat "$file" >> bigFile && rm "$file"
done

This would avoid needing double the space: at any moment, only the piece currently being appended exists twice on disk.
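A runnable version of the loop on throwaway sample data, assuming the pieces are named `file1` through `fileN` and must be joined in numeric order (`n=12` and the piece contents are arbitrary).

```shell
#!/usr/bin/env bash
# Sketch of the append-then-delete loop on sample data.
set -eu

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir"

n=12
for i in $(seq 1 "$n"); do
  printf 'piece %d\n' "$i" > "file$i"
done

# Iterate numerically: a glob like file* would sort file10 before file2.
for i in $(seq 1 "$n"); do
  # Delete a piece only after it has been appended successfully.
  cat "file$i" >> bigFile && rm "file$i"
done
```

Note that a failed `cat` does not stop this loop, so later pieces would still be appended after the gap; appending `|| exit 1` to the `cat ... && rm ...` line would stop at the first failure instead.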

There is no way to make files concatenate magically; the filesystem API simply doesn't have a function that does that.

Solution 3

Maybe dd would be faster, since you can give it a large block size with bs=, and the first file is simply renamed with mv rather than copied. Something like:

mv file1 newBigFile

dd if=file2 of=newBigFile bs=1M oflag=append conv=notrunc
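A runnable sketch of the `mv` + `dd` approach, looping over all remaining pieces and deleting each one once it has been appended. It assumes GNU coreutils: `oflag=append` and `status=none` are GNU `dd` options (`status=none` needs coreutils 8.20 or later). The sample data is made up.

```shell
#!/usr/bin/env bash
# Sketch of mv + dd concatenation on sample data (GNU dd assumed).
set -eu

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir"

n=5
for i in $(seq 1 "$n"); do
  seq $(( (i - 1) * 1000 + 1 )) $(( i * 1000 )) > "file$i"
done
seq 1 $(( n * 1000 )) > expected   # what plain `cat` would have produced

# The first piece becomes the big file by renaming: no data is copied.
mv file1 newBigFile

for i in $(seq 2 "$n"); do
  # oflag=append + conv=notrunc: write at the current end of newBigFile
  # instead of truncating it; bs=1M keeps the number of syscalls low.
  dd if="file$i" of=newBigFile bs=1M oflag=append conv=notrunc status=none
  rm "file$i"
done
```

`oflag=append` avoids computing an offset at all. An offset-based form such as `seek=$(stat -c %s newBigFile)` would also need `oflag=seek_bytes` with GNU dd, because `seek=` otherwise counts in output blocks (512 bytes by default), not bytes.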

Solution 4

Is it possible for you to simply not split the file? Instead, process the file in chunks by setting the file pointer in each of your parallel workers. If the file needs to be processed in a line-oriented way, that makes it trickier, but it can still be done. Each worker (except the first, which starts at offset 0) needs to understand that rather than starting at the offset you give it, it must first scan forward byte by byte to the next newline and start just after it. Each worker must also understand that it does not process exactly the number of bytes you allocate to it, but must keep going up to the first newline after the end of its allocated range.

The actual allocation and setting of the file pointer is pretty straightforward. If there are n workers, each one processes file_size/n bytes, and the file pointer for worker k (counting from 0) starts at k * file_size/n.
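A shell sketch of the same scheme, under a few assumptions: `consume` is a hypothetical stand-in for the real line-oriented worker (the asker's code is Perl, where `seek` would play the role of `tail -c`), `start_of` is a made-up helper name, and `n=4` and the sample file are arbitrary. `tail -c +M` starts reading at a byte offset of the shared file, so no split files are written.

```shell
#!/usr/bin/env bash
# Sketch: n workers share one input file, each reading only its own
# byte range, with every range boundary snapped to a line boundary.
set -eu

# Hypothetical stand-in for the real line-oriented processing.
consume() { tr a-z A-Z; }

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
input="$workdir/big-file.txt"
seq 1 5000 | sed 's/^/line /' > "$input"   # sample data

n=4                                  # number of workers (arbitrary)
size=$(( $(wc -c < "$input") ))      # file size in bytes

# start_of K: byte offset where worker K begins reading. Worker 0 starts
# at 0; worker K > 0 starts just past the first newline at or after its
# nominal offset K * size / n. Worker K ends where worker K+1 begins.
start_of() {
  local k=$1 e skip
  if (( k == 0 )); then echo 0; return; fi
  e=$(( k * size / n ))
  if (( e >= size )); then echo "$size"; return; fi
  # Length of the partial line from offset e through its newline
  # (tail -c +M counts from 1, so +M starts at offset M-1).
  skip=$(tail -c +$(( e + 1 )) "$input" | head -n 1 | wc -c)
  echo $(( e + skip ))
}

for (( k = 0; k < n; k++ )); do
  from=$(start_of "$k")
  to=$(start_of $(( k + 1 )))
  # Background job k reads only bytes [from, to) of the shared file.
  tail -c +$(( from + 1 )) "$input" | head -c $(( to - from )) \
    | consume > "$workdir/out.$k" &
done
wait

# Reassemble the per-worker outputs in worker order.
for (( k = 0; k < n; k++ )); do
  cat "$workdir/out.$k"
done > "$workdir/result.txt"
```

Because both neighbours agree that a boundary sits just past the first newline at or after the nominal offset, each line is processed by exactly one worker. A bare `wait` does not report failures of the background jobs; a real version should record each job's PID and `wait` on it individually.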

Is there some reason that kind of plan is not sufficient?

Solution 5

all I really need is for the hundreds of files to reappear as 1 file...

The reason it isn't practical to just join files that way at the filesystem level is that text files don't usually fill their last disk block exactly, so the data in subsequent files would have to be moved up to fill in the gaps, causing a bunch of reads/writes anyway.
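A worked version of that arithmetic, assuming a 4096-byte block (common, but not universal). A file's last block is usually only partly used, and that unused tail is the gap a filesystem-level join would have to close by shifting every later byte. `slack_bytes` is a hypothetical helper, and the file sizes are arbitrary examples.

```shell
#!/usr/bin/env bash
# Sketch: unused bytes at the end of a file's last block. Any non-zero
# slack is a gap that a filesystem-level "join" would have to close.
set -eu

# slack_bytes SIZE BLOCK: unused bytes in the final block of a file.
slack_bytes() {
  local size=$1 block=$2 tail_bytes
  tail_bytes=$(( size % block ))
  if (( tail_bytes == 0 )); then
    echo 0
  else
    echo $(( block - tail_bytes ))
  fi
}

for size in 10000 8192 123456789; do
  echo "$size-byte file: $(slack_bytes "$size" 4096) bytes of slack"
done
```

On Linux, GNU `stat -f -c %S .` prints the fundamental block size of the filesystem holding the current directory, if you want to plug in the real value.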


Wing

Updated on August 03, 2020

Comments

-

Wing almost 4 years

Here is the problem I'm facing:

- I am string processing a text file ~100G in size.

- I'm trying to improve the runtime by splitting the file into many hundreds of smaller files and processing them in parallel.

- In the end I cat the resulting files back together in order.

The file read/write time itself takes hours, so I would like to find a way to improve the following:

cat file1 file2 file3 ... fileN >> newBigFile

This requires double the disk space, as file1...fileN takes up 100G, then newBigFile takes another 100G, and only then does file1...fileN get removed.

The data is already in file1...fileN; doing the cat >> incurs read and write time when all I really need is for the hundreds of files to reappear as 1 file...

-

Matt Ball over 13 years

It sounds like you should be using something with a bit more muscle than a Unix shell.

-

Wing over 13 years

Thanks, but unfortunately I can't change the company's file servers and hardware...

-

Andrew Flanagan over 13 years

Of course your circumstances may prohibit this, but if it's presented to management as an ADDITION to an existing server's disk storage (rather than a replacement), it may be considered. If you can have an SSD that's used only for this task and it saves 2 hours of processing time each day, I think they'd be convinced of the cost savings.

-

Robie Basak over 13 years

Instead of modifying the workers, the shell could supply the workers with an stdin that is already just the segment that it should work on, for example using sed to select a line range. If the output needs to be coordinated, GNU Parallel could help with this.

-

Wing over 13 years

This whole thing is done in Perl, where the original script does string manipulation serially over the whole 100G file. Right now I have it splitting the file and processing the chunks via fork(), but now the read/write time is the bottleneck. I suppose I don't have to do the initial split, like you said, but I still have to write out the processed chunks and then put them back together in 1 file, right?

-

frankc over 13 years

I definitely think that dd, combined with deleting the files as you copy them as Robie Basak suggested, will make for the most efficient recombining solution, short of implementing a custom cp/unlink command with mmap. I am convinced nothing would be more efficient than eliminating the splitting entirely, however.

-

Wing over 13 years

If I do not split the file and have each child process read the original 100G file, working on different lines, will I get bottlenecked by 200 processes trying to read the same file?

-

frankc over 13 years

@wing, it is possible that you will have I/O contention, but it's hard to predict because it depends on how the file is fragmented, what kind of storage you have, etc. A more sophisticated approach would be to have each worker read from a memory queue that a master I/O reading process dispatches work to, so that I/O reads are sequential. However, how any of this performs can only be determined experimentally, because we can't easily predict the impact of OS file caching etc.

-

Michael about 12 years

I haven't tested this, but it sounds like the most useful suggestion.

-

dfrankow about 12 years

Process substitution looks awesome because it doesn't put things on disk. So you can do "consume <(cmd1 file1) <(cmd2 file2) <(cmd3 file3)". However, here it's equivalent to the more traditional "cat file1 file2 ... | consume".

-

Kieveli almost 7 years

Nice =) That worked peachy. Needed an echo; before the done.
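A sketch of Robie Basak's sed suggestion, assuming a line-oriented worker that reads standard input. `worker` is a hypothetical stand-in for the asker's Perl script, and the sample file and `n=4` are arbitrary. Each job receives only its line range from `sed`, and the outputs are joined in order at the end.

```shell
#!/usr/bin/env bash
# Sketch: leave the big file whole and give each worker a stdin that
# contains only its line range, cut with sed.
set -eu

# Hypothetical stand-in for one worker process.
worker() { tr a-z A-Z; }

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
input="$workdir/big-file.txt"
seq 1 1000 | sed 's/^/line /' > "$input"   # sample data

n=4
total=$(( $(wc -l < "$input") ))
per=$(( (total + n - 1) / n ))      # lines per worker, rounded up

for (( k = 0; k < n; k++ )); do
  first=$(( k * per + 1 ))
  last=$(( (k + 1) * per ))
  # Print lines first..last, then quit so sed stops reading early.
  sed -n "${first},${last}p;${last}q" "$input" | worker > "$workdir/out.$k" &
done
wait

# Join the per-worker outputs in order.
for (( k = 0; k < n; k++ )); do
  cat "$workdir/out.$k"
done > "$workdir/result.txt"
```

One trade-off versus seeking to byte offsets: every `sed` has to read (though not print) all the lines before its range, so later workers scan more of the file before doing useful work; the `q` command at least stops each one right after its last line.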