How can I count files with a particular extension, and the directories they are in?

Solution 1

I haven't examined the output with symlinks but:

find . -type f -iname '*.c' -printf '%h\0' |

sort -z |

uniq -zc |

sed -zr 's/([0-9]) .*/\1 1/' |

tr '\0' '\n' |

awk '{f += $1; d += $2} END {print f, d}'

- The find command prints the directory name of each .c file it finds.
- sort | uniq -c gives us how many files are in each directory (the sort might be unnecessary here, not sure).
- With sed, I replace the directory name with 1, thus eliminating all possible weird characters, with just the count and 1 remaining,
- enabling me to convert to newline-separated output with tr,
- which I then sum up with awk, to get the total number of files and the number of directories that contained those files. Note that d here is essentially the same as NR. I could have omitted inserting 1 in the sed command, and just printed NR here, but I think this is slightly clearer.

Up until the tr, the data is NUL-delimited, safe against all valid filenames.
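For readers who prefer to see the counting stage outside the shell, here is a minimal Python sketch of what sort -z | uniq -zc | awk compute together. It is an illustration, not part of the original answer: the function name summarize and the sample bytes are made up for this example, and the input is assumed to have the shape produced by find's -printf '%h\0'.

```python
from collections import Counter


def summarize(nul_records):
    """Return (file_count, dir_count) from NUL-terminated directory names.

    nul_records is a bytes object holding one directory name per matching
    file, each terminated by a NUL byte (an assumption of this sketch).
    """
    # Splitting on NUL leaves an empty piece after the final terminator.
    names = [name for name in nul_records.split(b"\0") if name]
    per_dir = Counter(names)  # same role as sort -z | uniq -zc
    return sum(per_dir.values()), len(per_dir)


# Hypothetical sample: two files in ./a and one in a directory whose
# name contains a newline. The newline is just another byte here.
print(*summarize(b"./a\0./a\0./b\nc\0"))
```

Because records are split only on NUL, a directory name containing a newline still counts as one directory. To use it on real data, the same function could be fed sys.stdin.buffer.read() at the end of the find command.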

With zsh and bash, you can use printf %q to get a quoted string, which would not have newlines in it. So, you might be able to do something like:

shopt -s globstar dotglob nocaseglob

printf "%q\n" **/*.c | awk -F/ '{NF--; f++} !c[$0]++{d++} END {print f, d}'

However, even though ** is not supposed to expand for symlinks to directories, I could not get the desired output on bash 4.4.18(1) (Ubuntu 16.04).

$ shopt -s globstar dotglob nocaseglob

$ printf "%q\n" ./**/*.c | awk -F/ '{NF--; f++} !c[$0]++{d++} END {print f, d}'

34 15

$ echo $BASH_VERSION

4.4.18(1)-release

But zsh worked fine, and the command can be simplified:

$ printf "%q\n" ./**/*.c(D.:h) | awk '!c[$0]++ {d++} END {print NR, d}'

29 7

D enables this glob to select dot files, . selects regular files (so, not symlinks), and :h prints only the directory path and not the filename (like find's %h) (See sections on Filename Generation and Modifiers). So with the awk command we just need to count the number of unique directories appearing, and the number of lines is the file count.

Solution 2

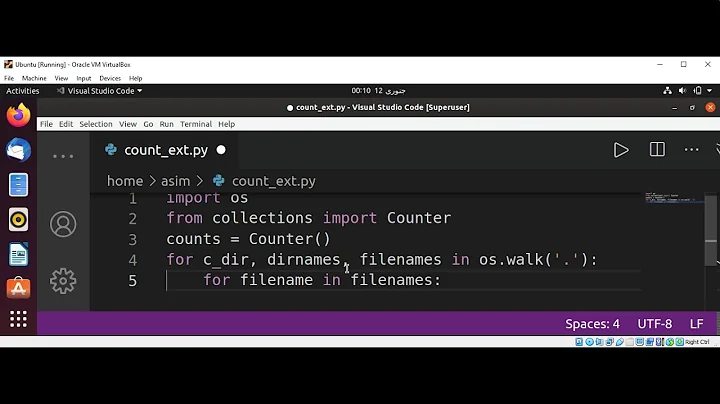

Python has os.walk, which makes tasks like this easy, intuitive, and automatically robust even in the face of weird filenames such as those that contain newline characters. This Python 3 script, which I had originally posted in chat, is intended to be run in the current directory (but it doesn't have to be located in the current directory, and you can change what path it passes to os.walk):

#!/usr/bin/env python3

import os

dc = fc = 0
for _, _, fs in os.walk('.'):
    c = sum(f.endswith('.c') for f in fs)
    if c:
        dc += 1
        fc += c
print(dc, fc)

That prints the count of directories that directly contain at least one file whose name ends in .c, followed by a space, followed by the count of files whose names end in .c. "Hidden" files--that is, files whose names start with .--are included, and hidden directories are similarly traversed.

os.walk recursively traverses a directory hierarchy. It enumerates all the directories that are recursively accessible from the starting point you give it, yielding information about each of them as a tuple of three values, root, dirs, files. For each directory it traverses to (including the first one whose name you give it):

- root holds the pathname of that directory. Note that this is totally unrelated to the system's "root directory" / (and also unrelated to /root), though it would go to those if you start there. In this case, root starts at the path . (i.e., the current directory) and goes everywhere below it.
- dirs holds a list of the names (without any path components) of all the subdirectories of the directory whose name is currently held in root.
- files holds a list of the names (again without path components) of all the files that reside in the directory whose name is currently held in root but that are not themselves directories. Note that this includes other kinds of files than regular files, including symbolic links, but it sounds like you don't expect any such entries to end in .c and are interested in seeing any that do.

In this case, I only need to examine the third element of the tuple, files (which I call fs in the script). Like the find command, Python's os.walk traverses into subdirectories for me; the only thing I have to inspect myself is the names of the files each of them contains. Unlike the find command, though, os.walk automatically provides me a list of those filenames.

That script does not follow symbolic links. You very probably don't want symlinks followed for such an operation, because they could form cycles, and because even if there are no cycles, the same files and directories may be traversed and counted multiple times if they are accessible through different symlinks.

If you ever did want os.walk to follow symlinks--which you usually wouldn't--then you can pass followlinks=True to it. That is, instead of writing os.walk('.') you could write os.walk('.', followlinks=True). I reiterate that you would rarely want that, especially for a task like this where you are recursively enumerating an entire directory structure, no matter how big it is, and counting all the files in it that meet some requirement.
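The script above counts every non-directory entry whose name ends in .c, symlinks included. If only regular files should be counted, one possible variant is sketched below. The function name count_regular_c_files is hypothetical, and the use of os.lstat with stat.S_ISREG is my assumption about how to make the regular-file check explicit; it is not part of the original script.

```python
import os
import stat


def count_regular_c_files(top="."):
    """Return (dir_count, file_count) for regular files ending in .c under top."""
    dir_count = file_count = 0
    # followlinks defaults to False, so symlinked directories are not entered.
    for root, _dirs, names in os.walk(top):
        matches = 0
        for name in names:
            if not name.endswith(".c"):
                continue
            # lstat examines the entry itself rather than its target, so a
            # symlink named x.c is seen as a link, not as a regular file.
            mode = os.lstat(os.path.join(root, name)).st_mode
            if stat.S_ISREG(mode):
                matches += 1
        if matches:
            dir_count += 1
            file_count += matches
    return dir_count, file_count
```

Calling print(*count_regular_c_files('.')) prints the directory count followed by the file count, in the same order as the script above. Like that script, the match is case-sensitive, unlike find's -iname.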

Solution 3

Find + Perl:

$ find . -type f -iname '*.c' -printf '%h\0' |

perl -0 -ne '$k{$_}++; }{ print scalar keys %k, " $.\n" '

7 29

Explanation

The find command will find any regular files (so no symlinks or directories) and then print the name of the directory they are in (%h) followed by \0.

- perl -0 -ne: read the input line by line (-n) and apply the script given by -e to each line. The -0 sets the input line separator to \0 so we can read null-delimited input.
- $k{$_}++: $_ is a special variable that takes the value of the current line. This is used as a key to the hash %k, whose values are the number of times each input line (directory name) was seen.
- }{: this is a shorthand way of writing END{}. Any commands after the }{ will be executed once, after all input has been processed.
- print scalar keys %k, " $.\n": keys %k returns an array of the keys in the hash %k. scalar keys %k gives the number of elements in that array, the number of directories seen. This is printed along with the current value of $., a special variable that holds the current input line number. Since this is run at the end, the current input line number will be the number of the last line, so the number of lines seen so far.

You could expand the perl command to this, for clarity:

find . -type f -iname '*.c' -printf '%h\0' |

perl -0 -e 'while($line = <STDIN>){

$dirs{$line}++;

$tot++;

}

$count = scalar keys %dirs;

print "$count $tot\n" '

Solution 4

Small shellscript

I suggest a small bash shellscript with two main command lines (and a variable filetype to make it easy to switch in order to look for other file types).

It counts only regular files: it neither counts symlinks nor descends into symlinked directories.

#!/bin/bash

filetype=c

#filetype=pdf

# count the 'filetype' files

find -type f -name "*.$filetype" -ls|sed 's#.* \./##'|wc -l | tr '\n' ' '

# count directories containing 'filetype' files

find -type d -exec bash -c "ls -AF '{}'|grep -e '\.'${filetype}$ -e '\.'${filetype}'\*'$ > /dev/null && echo '{} contains file(s)'" \;|grep 'contains file(s)$'|wc -l

Verbose shellscript

This is a more verbose version that also considers symbolic links:

#!/bin/bash

filetype=c

#filetype=pdf

# counting the 'filetype' files

echo -n "number of $filetype files in the current directory tree: "

find -type f -name "*.$filetype" -ls|sed 's#.* \./##'|wc -l

echo -n "number of $filetype symbolic links in the current directory tree: "

find -type l -name "*.$filetype" -ls|sed 's#.* \./##'|wc -l

echo -n "number of $filetype normal files in the current directory tree: "

find -type f -name "*.$filetype" -ls|sed 's#.* \./##'|wc -l

echo -n "number of $filetype symbolic links in the current directory tree including linked directories: "

find -L -type f -name "*.$filetype" -ls 2> /tmp/c-counter |sed 's#.* \./##' | wc -l; cat /tmp/c-counter; rm /tmp/c-counter

# list directories with and without 'filetype' files (good for manual checking; comment away after test)

echo '---------- list directories:'

find -type d -exec bash -c "ls -AF '{}'|grep -e '\.'${filetype}$ -e '\.'${filetype}'\*'$ > /dev/null && echo '{} contains file(s)' || echo '{} empty'" \;

echo ''

#find -L -type d -exec bash -c "ls -AF '{}'|grep -e '\.'${filetype}$ -e '\.'${filetype}'\*'$ > /dev/null && echo '{} contains file(s)' || echo '{} empty'" \;

# count directories containing 'filetype' files

echo -n "number of directories with $filetype files: "

find -type d -exec bash -c "ls -AF '{}'|grep -e '\.'${filetype}$ -e '\.'${filetype}'\*'$ > /dev/null && echo '{} contains file(s)'" \;|grep 'contains file(s)$'|wc -l

# list and count directories including symbolic links, containing 'filetype' files

echo '---------- list all directories including symbolic links:'

find -L -type d -exec bash -c "ls -AF '{}' |grep -e '\.'${filetype}$ -e '\.'${filetype}'\*'$ > /dev/null && echo '{} contains file(s)' || echo '{} empty'" \;

echo ''

echo -n "number of directories (including symbolic links) with $filetype files: "

find -L -type d -exec bash -c "ls -AF '{}'|grep -e '\.'${filetype}$ -e '\.'${filetype}'\*'$ > /dev/null && echo '{} contains file(s)'" \; 2>/dev/null |grep 'contains file(s)$'|wc -l

# count directories without 'filetype' files (good for checking; comment away after test)

echo -n "number of directories without $filetype files: "

find -type d -exec bash -c "ls -AF '{}'|grep -e '\.'${filetype}$ -e '\.'${filetype}'\*'$ > /dev/null || echo '{} empty'" \;|grep 'empty$'|wc -l

Test output

From short shellscript:

$ ./ccntr

29 7

From verbose shellscript:

$ LANG=C ./c-counter

number of c files in the current directory tree: 29

number of c symbolic links in the current directory tree: 1

number of c normal files in the current directory tree: 29

number of c symbolic links in the current directory tree including linked directories: 42

find: './cfiles/2/2': Too many levels of symbolic links

find: './cfiles/dirlink/2': Too many levels of symbolic links

---------- list directories:

. empty

./cfiles contains file(s)

./cfiles/2 contains file(s)

./cfiles/2/b contains file(s)

./cfiles/2/a contains file(s)

./cfiles/3 empty

./cfiles/3/b contains file(s)

./cfiles/3/a empty

./cfiles/1 contains file(s)

./cfiles/1/b empty

./cfiles/1/a empty

./cfiles/space d contains file(s)

number of directories with c files: 7

---------- list all directories including symbolic links:

. empty

./cfiles contains file(s)

./cfiles/2 contains file(s)

find: './cfiles/2/2': Too many levels of symbolic links

./cfiles/2/b contains file(s)

./cfiles/2/a contains file(s)

./cfiles/3 empty

./cfiles/3/b contains file(s)

./cfiles/3/a empty

./cfiles/dirlink empty

find: './cfiles/dirlink/2': Too many levels of symbolic links

./cfiles/dirlink/b contains file(s)

./cfiles/dirlink/a contains file(s)

./cfiles/1 contains file(s)

./cfiles/1/b empty

./cfiles/1/a empty

./cfiles/space d contains file(s)

number of directories (including symbolic links) with c files: 9

number of directories without c files: 5

$

Solution 5

Here's my suggestion:

#!/bin/bash

tempfile=$(mktemp)

find -type f -name "*.c" -prune >$tempfile

grep -c / $tempfile

sed 's_[^/]*$__' $tempfile | sort -u | grep -c /

This short script creates a tempfile, finds every file in and under the current directory ending in .c and writes the list to the tempfile. grep is then used to count the files (following How can I get a count of files in a directory using the command line?) twice: The second time, directories that are listed multiple times are removed using sort -u after stripping filenames from each line using sed.

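This also works properly with newlines in filenames: grep -c / counts only lines with a slash and therefore considers only the first line of a multi-line filename in the list. The same is not true of a newline in a directory name: there, both halves of the split path contain a slash, so both counts come out too high.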
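To see how the line-based counting behaves with newlines, the two grep -c / steps can be mimicked on a plain string. This is only a sketch for experimenting: the function name count_from_listing and both sample listings are invented for the illustration, and the regular expression is a direct transcription of the sed expression above.

```python
import re


def count_from_listing(listing):
    """Mimic grep -c / and sed 's_[^/]*$__' | sort -u | grep -c /.

    listing is newline-delimited text such as plain find output.
    Returns (file_count, dir_count) as the line-based tools would see them.
    """
    lines = listing.split("\n")
    file_count = sum("/" in line for line in lines)
    # Strip everything after the last slash on each line, then deduplicate.
    stripped = {re.sub(r"[^/]*$", "", line) for line in lines}
    dir_count = sum("/" in d for d in stripped)
    return file_count, dir_count


# Newline inside a file name: the second half has no slash and is ignored.
print(count_from_listing("./d/ok.c\n./d/terrible\n.c\n"))  # (2, 1)
# Newline inside a directory name: both halves contain a slash.
print(count_from_listing("./bad\ndir/x.c\n"))  # (2, 2)
```

The first listing describes two files in one directory and is counted correctly. The second describes a single file in a single directory, yet both counts come out as 2, which is why NUL-delimited output is the safer choice when directory names are untrusted.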

Output

$ tree
.
├── 1
│   ├── 1
│   │   ├── test2.c
│   │   └── test.c
│   └── 2
│       └── test.c
└── 2
    ├── 1
    │   └── test.c
    └── 2

$ tempfile=$(mktemp);find -type f -name "*.c" -prune >$tempfile;grep -c / $tempfile;sed 's_[^/]*$__' $tempfile | sort -u | grep -c /

4

3


Zanna

Updated on September 18, 2022

Comments

-

Zanna over 1 year

I want to know how many regular files have the extension .c in a large complex directory structure, and also how many directories these files are spread across. The output I want is just those two numbers.

I've seen this question about how to get the number of files, but I need to know the number of directories the files are in too.

- My filenames (including directories) might have any characters; they may start with . or - and have spaces or newlines.
- I might have some symlinks whose names end with .c, and symlinks to directories. I don't want symlinks to be followed or counted, or I at least want to know if and when they are being counted.
- The directory structure has many levels and the top level directory (the working directory) has at least one .c file in it.

I hastily wrote some commands in the (Bash) shell to count them myself, but I don't think the result is accurate...

shopt -s dotglob
shopt -s globstar
mkdir out
for d in **/; do
    find "$d" -maxdepth 1 -type f -name "*.c" >> out/$(basename "$d")
done
ls -1Aq out | wc -l
cat out/* | wc -l

This outputs complaints about ambiguous redirects, misses files in the current directory, and trips up on special characters (for example, redirected find output prints newlines in filenames) and writes a whole bunch of empty files (oops).

How can I reliably enumerate my .c files and their containing directories?

In case it helps, here are some commands to create a test structure with bad names and symlinks:

mkdir -p cfiles/{1..3}/{a..b} && cd cfiles
mkdir space\ d
touch -- i.c -.c bad\ .c 'terrible .c' not-c .hidden.c
for d in space\ d 1 2 2/{a..b} 3/b; do cp -t "$d" -- *.c; done
ln -s 2 dirlink
ln -s 3/b/i.c filelink.c

In the resulting structure, 7 directories contain .c files, and 29 regular files end with .c (if dotglob is off when the commands are run) (if I've miscounted, please let me know). These are the numbers I want.

Please feel free not to use this particular test.

N.B.: Answers in any shell or other language will be tested & appreciated by me. If I have to install new packages, no problem. If you know a GUI solution, I encourage you to share (but I might not go so far as to install a whole DE to test it) :) I use Ubuntu MATE 17.10.

-

WinEunuuchs2Unix about 6 years

Writing a program to deal with bad programming habits turned out to be quite challenging ;)

-

Zanna about 6 years

That's awesome. Uses exactly what's needed and no more. Thank you for teaching :)

-

muru about 6 years

@Zanna if you post some commands to recreate a directory structure with symlinks, and the expected output with symlinks, I might be able to fix this accordingly.

-

Zanna about 6 years

I have added some commands to make a (needlessly complicated as usual) test structure with symlinks.

-

muru about 6 years

@Zanna I think this command doesn't need any adjustments to get 29 7. If I add -L to find, that goes up to 41 10. Which output do you need?

-

Zanna about 6 years

29 7 is what I want & I will edit to make that clearer. No need to do anything. I only mentioned the symlinks (as Eliah Kagan suggested in chat) in case anyone who answered wanted to talk about how their method treated symlinks, so anyone with a similar task would be in no doubt about whether they were being counted.

-

muru about 6 years

Added a zsh + awk method. There's probably some way to get zsh itself to print the count for me, but no idea how.

-

terdon about 6 years

This doesn't count the files in a specific directory. As you point out, it counts all files (or directories, or any other type of file) matching .c (note that it will break if there's a file named -.c in the current directory since you're not quoting *.c) and then it will print all directories in the system, irrespective of whether they contain .c files.

-

WinEunuuchs2Unix about 6 years

@terdon You can pass a directory ~/my_c_progs/*.c. It's counting 638 directories with .c programs, the total directories is shown later as 286,705. I'll revise the answer to double quote "*.c". Thanks for the tip.

-

terdon about 6 years

Yes, you can use something like locate -r "/path/to/dir/.*\.c$", but that isn't mentioned anywhere in your answer. You only give a link to another answer that mentions this but with no explanation of how to adapt it to answer the question being asked here. Your entire answer is focused on how to count the total number of files and directories on the system, which isn't relevant to the question asked which was "how can I count the number of .c files, and the number of directories containing .c files in a specific directory". Also, your numbers are wrong, try it on the example in the OP.

-

WinEunuuchs2Unix about 6 years

@terdon Thanks for your input. I've improved the answer with your suggestions and an answer you posted on other SE site for $PWD variable: unix.stackexchange.com/a/188191/200094

-

muru about 6 years

Now you have to ensure that $PWD doesn't contain characters that may be special in a regex

-

WinEunuuchs2Unix about 6 years

@muru I'm starting to hate regex. In my testing it didn't change if it was used or not, ie same .c file count and directory count. Mind you I don't have special characters in file names like Zanna has. I do have - in directory names, ie linux-headers-4.4.0-98 and that works fine. Are you suggesting there might be NL new line character or something? Trying to perceive a problem is infinitely more difficult than solving a known one.

-

muru about 6 years

And of course you have to ensure that the relevant folder is being indexed by locate.

-

WinEunuuchs2Unix about 6 years

@muru On my system locate indexes everything in /etc/fstab which is two drives, 5 partitions. It excludes cell phone and USB sticks.

-

muru about 6 years

Who knows what you have done to your system? So you won't fix the answer to account for PWD containing special characters or for it not being indexed by locate?

-

WinEunuuchs2Unix about 6 years

@muru I read earlier putting $PWD outside the "*.c" spares it from regex special character handling. Also I used mkdir "Directory with space" and copied a C program into it and it worked correctly. Suggesting it's true compared to: apple.stackexchange.com/questions/52459/… Further I've read sudo updatedb handles indices properly. Perhaps Zanna can compare our two answers on her data and let us know if counts differ.

-

muru about 6 years

Space is not a regex special character. The quotes are removed by your shell and never seen by locate.

-

WinEunuuchs2Unix about 6 years

@muru It appears to be working now except I get 30 files where everyone else gets 29 files.

-

sudodus about 6 years

You count cfiles/filelink.c, which is a symlink, and it is not counted, when we count only regular files.

-

WinEunuuchs2Unix about 6 years

@sudodus Thanks for catching that. I'll fine-tune it after work.

-

sudodus about 6 years

... or provide both a method for only regular files and a method that includes symlinks.

-

WinEunuuchs2Unix about 6 years

...before leaving for work I was experimenting with test ! -h so ! (not operand) could be omitted for second scenario (if test command works as expected).