How can I get a list of all indexed pages for my domain?

Solution 1

I have used Screaming Frog, today in fact, and I really love that tool. You get a lot of information in a short amount of time. You'll get the metadata in a CSV that you can easily manipulate in Excel. Export everything, then filter on the content-type column so that only text/html is shown, not images or CSS files.
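If you'd rather script the filtering step than do it in Excel, here is a minimal sketch. It assumes the export has an "Address" column holding the URL and a "Content Type" column. Those column names are assumptions, so check the header row of your own export, and drop any extra line above the header first.

```python
import csv
import io

def html_urls(csv_text, url_col="Address", type_col="Content Type"):
    """Return the URLs whose content type is text/html.

    Column names are assumptions; adjust them to match your export's header row.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row[url_col]
        for row in reader
        # Content types often carry a charset suffix, e.g. "text/html; charset=UTF-8".
        if (row.get(type_col) or "").strip().lower().startswith("text/html")
    ]

# Small inline sample; for a real export, read the file with
# open(path, newline="", encoding="utf-8") and pass its contents instead.
sample = """Address,Content Type,Status Code
https://example.com/,text/html; charset=UTF-8,200
https://example.com/style.css,text/css,200
https://example.com/logo.png,image/png,200
https://example.com/product?id=42,text/html,200
"""

print(html_urls(sample))
```

Matching on the `text/html` prefix keeps pages regardless of the charset suffix, while dropping CSS, images and other assets.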

I am doing exactly that for a site migration right now, and I used it for one in the past. How many pages are you talking about? Here's a comparison of Xenu vs. Screaming Frog (SF) on Moz.

Solution 2

You could also search Google for site:mydomain.com to list the pages it has indexed from your domain, including subdomains. Keep in mind that Google will not return more than 1,000 results for a single search.

Solution 3

As Google never returns more than 1,000 results, my approach was a standalone Perl script that queries several segments of site:myweb.xxx (with the help of Lynx --accept-cookies), using URLs of this form:

https://www.google.es/search?q=site:www.955170000.com+%2B+"AA"&num=50&filter=0

The script generates the search string. It starts with "AA", then moves to "AB", and so on up to "ZZ". You can choose your own set, including numbers and other characters.
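The answer's script was written in Perl. As a rough Python equivalent of just the segment-generation step, the sketch below builds the "AA" to "ZZ" strings and the query URLs in the form shown above. It only constructs URLs and does not fetch anything. The function names are hypothetical, and `num=50` and `filter=0` are copied from the answer's example URL.

```python
import itertools
import string
from urllib.parse import urlencode

def search_segments(alphabet=string.ascii_uppercase, length=2):
    """Yield "AA", "AB", ..., "ZZ" for the default alphabet and length.

    Pass a different alphabet (e.g. letters plus digits) to change the segments.
    """
    for combo in itertools.product(alphabet, repeat=length):
        yield "".join(combo)

def query_url(site, segment, num=50):
    # Mirrors the answer's URL: q=site:<domain> + "<segment>", num=50, filter=0.
    params = {"q": f'site:{site} + "{segment}"', "num": num, "filter": 0}
    return "https://www.google.es/search?" + urlencode(params)

segments = list(search_segments())
print(len(segments), segments[0], segments[-1])
print(query_url("www.example.com", segments[0]))
```

With 26 letters and two positions this yields 26 × 26 = 676 segments. Widening the alphabet is the knob the answer mentions for adding numbers or other characters.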

Then each page of search results (in my case, only 50 results are returned per query) is parsed to extract the link to each indexed page. All of them are logged to a file, which then has to be passed through | sort | uniq to remove repeated links. I added a pause of up to 120 seconds between queries; otherwise Google will decide you are a robot.
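Here is a minimal sketch of the link-extraction step, using Python's standard HTML parser instead of the answer's Perl/Lynx pipeline. It makes two assumptions. First, result links may appear either directly or wrapped as `/url?q=<target>&...`. Second, "your domain" means the domain itself plus any subdomain. Google's result markup changes over time, so treat the unwrapping rule as an assumption to verify against a saved page.

```python
from html.parser import HTMLParser
from urllib.parse import parse_qs, urlparse

class LinkCollector(HTMLParser):
    """Collect the href attribute of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)

def links_for_domain(html, domain):
    """Return links on the page that point at `domain` or one of its subdomains."""
    parser = LinkCollector()
    parser.feed(html)
    found = []
    for href in parser.hrefs:
        # Assumption: some result links are wrapped as /url?q=<target>&...
        if href.startswith("/url?"):
            target = parse_qs(urlparse(href).query).get("q", [""])[0]
        else:
            target = href
        host = urlparse(target).netloc.lower()
        if host == domain or host.endswith("." + domain):
            found.append(target)
    return found

page = (
    '<a href="/url?q=https://www.example.com/a&sa=U">A</a>'
    '<a href="https://other.org/x">X</a>'
    '<a href="https://shop.example.com/b">B</a>'
)
print(links_for_domain(page, "example.com"))
```

Each call returns the matching links for one results page. Appending them line by line to a log file gives the input for the sort/uniq step.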

That means that with 100 results per page and a 120-second pause between queries, I can collect up to 78K indexed pages in 26 hours of processing. That is about 780 queries from a single IP, but you can use two or more machines with different IPs to save time.

If you need to collect more than 78K pages, remember that a single query returns at most 100 results, while Google allows up to 1,000 results per search string. You can therefore page through up to 1,000 results for each search string. In theory the same search strings could then cover ten times as many pages (about 780K), at the cost of ten times as many queries.
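The throughput figures can be checked with a quick calculation. This assumes exactly one query per 120-second pause and that every query succeeds and returns a full page of results; real runs will collect fewer.

```python
RESULTS_PER_QUERY = 100        # one page of results at num=100
MAX_RESULTS_PER_SEARCH = 1000  # Google's cap for a single search string

def queries_in(hours, delay_seconds):
    """Number of queries that fit in `hours` with a fixed pause between them."""
    return hours * 3600 // delay_seconds

def queries_per_string(max_results=MAX_RESULTS_PER_SEARCH, per_query=RESULTS_PER_QUERY):
    """Paged queries needed to reach the per-string cap (ceiling division)."""
    return -(-max_results // per_query)

q = queries_in(26, 120)
print(q, q * RESULTS_PER_QUERY)  # 780 78000
# Paging every one of those 780 strings to 1,000 results:
print(q * MAX_RESULTS_PER_SEARCH, q * queries_per_string())  # 780000 7800
```

The per-hour yield stays the same, because each query still returns at most 100 results. Going deeper per string only helps when you run out of search strings.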

Note that many results will be duplicates. Once you have fetched all possible results from Google, you need to sort them and keep only the unique ones (I used the *nix sort and uniq commands for that).
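For anyone without the *nix tools at hand, here is a rough Python stand-in for `sort | uniq` on the collected log. Unlike the shell commands, it strips surrounding whitespace and drops blank lines. Python's default sort is by code point, which matches `LC_ALL=C sort` rather than a locale-aware sort. The log file name in the comment is hypothetical.

```python
def sort_uniq(lines):
    """Mimic `sort | uniq`: drop blank lines and duplicates, return sorted."""
    return sorted({line.strip() for line in lines if line.strip()})

# For a real log: with open("links.log", encoding="utf-8") as fh: urls = sort_uniq(fh)
collected = [
    "https://www.example.com/b\n",
    "https://www.example.com/a\n",
    "https://www.example.com/b\n",
    "\n",
]
print(sort_uniq(collected))  # ['https://www.example.com/a', 'https://www.example.com/b']
```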

The next steps, such as detecting duplicate content or other issues, are now easy. You can also feed all the collected URLs into another script that requests URL removals in GWT (again limited to around 1,000 per day), or resubmit them for re-indexing by uploading them (limited to around 30K links by Google).
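If "uploading to re-index" means submitting an XML sitemap, the sketch below turns the deduplicated URL list into one or more sitemap files. The sitemaps.org protocol caps a single file at 50,000 URLs. `max_urls` is a parameter so you can lower it to whatever limit you are working against, such as the ~30K figure above. The function name is hypothetical.

```python
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def sitemap_chunks(urls, max_urls=50000):
    """Split URLs into sitemap XML documents of at most `max_urls` entries each."""
    docs = []
    for start in range(0, len(urls), max_urls):
        chunk = urls[start:start + max_urls]
        # escape() turns &, < and > into entities, e.g. "?a=1&b=2" -> "?a=1&amp;b=2".
        entries = "\n".join(f"  <url><loc>{escape(u)}</loc></url>" for u in chunk)
        docs.append(
            '<?xml version="1.0" encoding="UTF-8"?>\n'
            f'<urlset xmlns="{SITEMAP_NS}">\n{entries}\n</urlset>\n'
        )
    return docs

urls = ["https://example.com/a?x=1&y=2", "https://example.com/b", "https://example.com/c"]
for i, doc in enumerate(sitemap_chunks(urls, max_urls=2)):
    print(f"sitemap-{i}.xml:\n{doc}")
```

Escaping matters here because old GET-style URLs often contain `&`, which must be written as `&amp;` inside XML.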

Solution 4

Whatever outdated e-commerce cart you are using now, if you can programmatically generate all the product and category URLs of the old platform, you don't need 301 redirects. You can use the same URLs in Magento (by updating the core_url_rewrite table). This is a special feature of Magento.

I used to do SEO for a Magento website, which earlier used an outdated e-commerce cart just like yours. The migration was done to Magento keeping the same old URLs.

A word of caution about Magento: there is too much hype around it. Although it uses an MVC architecture, it is one of the worst e-commerce platforms. This is especially true for large catalogs, which applies to you, since you say you have tens of thousands of products and categories. Unless you are using the Enterprise Edition, which uses advanced caching to speed things up (and is very expensive), the Community Edition will not help your cause.

They say Magento is SEO-friendly. Nothing could be further from the truth. Magento's own URLs (for products and product reviews) are a complete mess as far as SEO is concerned. It generates multiple URLs (paths) for the same product if that product is assigned to multiple categories. In that case you need to use Catalog URL Rewrite Management anyway, which can be a headache.

Magento's URL problems alone did a lot of damage to the business of the website I am talking about, because they hurt its search engine rankings.

Anyway, you shouldn't worry too much about which pages are in Google's index (which is multi-layered). Assume that all of them are indexed, and 301 redirect all of them if you decide to use 301 redirects.
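One way to act on "assume all of them are indexed" is to run every crawled old URL through the redirect mapping and list the ones with no target before going live. This is a minimal sketch. It assumes old URLs pass bare IDs via GET, as the asker describes in the comments. The script names `product.php` and `category.php` and the lookup tables are hypothetical placeholders for your real ID-to-URL-key data.

```python
from urllib.parse import parse_qs, urlparse

# Hypothetical lookup tables: old numeric IDs -> new Magento paths.
PRODUCT_URLS = {"123": "/blue-widget.html"}
CATEGORY_URLS = {"7": "/widgets.html"}

def redirect_target(old_url):
    """Return the new path for an old URL, or None if no mapping exists."""
    parsed = urlparse(old_url)
    # Assumption: old URLs look like /product.php?id=123 and /category.php?id=7.
    if parsed.path.endswith("/product.php"):
        table = PRODUCT_URLS
    elif parsed.path.endswith("/category.php"):
        table = CATEGORY_URLS
    else:
        return None
    old_id = parse_qs(parsed.query).get("id", [None])[0]
    return table.get(old_id)

def unmapped(urls):
    """Old URLs that would not get a 301 target."""
    return [u for u in urls if redirect_target(u) is None]

crawled = [
    "https://shop.example.com/product.php?id=123",
    "https://shop.example.com/category.php?id=7",
    "https://shop.example.com/product.php?id=999",
    "https://shop.example.com/about.html",
]
print(unmapped(crawled))
```

Anything this reports needs either a mapping or a deliberate decision (for example, redirecting to the parent category) before launch.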

Thanks,

Satyabrata Das

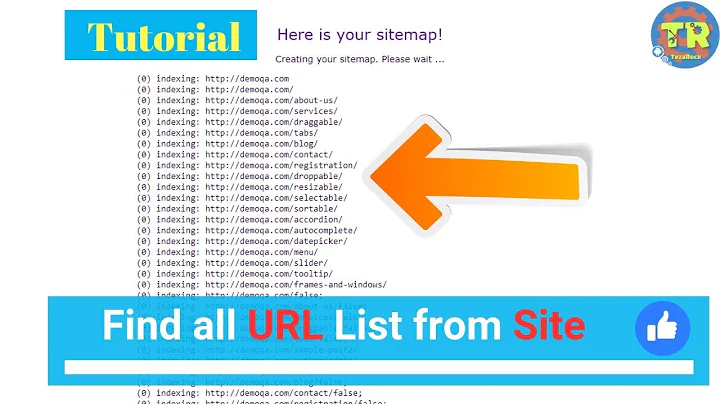


JR.XYZA

Updated on September 18, 2022

Comments

-

JR.XYZA over 1 year

We're in the process of migrating an outdated e-commerce cart to Magento. Part of this process will entail configuring proper 301 redirects. I'm aiming to get a list of all indexed pages to throw our solution against and resolve as many potential issues as possible before going live.

Ideally I'd just need a CSV containing the URIs indexed by the search engines for our domain.

Looking at a similar question here, it seems there is no simple way to export this data from either Google's or Bing's Webmaster Tools, given that this cart has tens of thousands of products (and thus tens of thousands of indexed pages).

I've come across a few third-party utilities, such as Screaming Frog and web-based ones like searchenginegenie and internetmarketingninjas. However, I've never used any of them and am hesitant to start throwing additional traffic at our site unless I know we'll get what we need out of it.

Has anyone out there used these tools to do something similar, or found some way to retrieve more than the top 1000 records from GWT (or something similar from Bing)?

-

zigojacko almost 11 years

To add to this, Screaming Frog and Xenu won't tell you which URLs from your website are indexed in search engines. They are still great tools for crawling your website and outputting a list of URLs.

-

Duarte Patrício almost 11 years

Magento is easily made SEO-friendly in the settings. You don't need to use redirects to fix multiple product URLs. Just set the URL paths not to use category URLs, or, if you do want the category included in the URL, use the canonical settings.

-

MrWhite almost 11 years

"Download chart data" does not download a list of indexed URLs; it simply downloads the chart data, which is a list of dates and numbers.

-

John Conde almost 11 years

Two posts, two endorsements of Screaming Frog and a link to moz.com. Do you have any relationship with that product and site?

-

JR.XYZA almost 11 years

GWT reports ~80k indexed pages. While getting the exact list of what's indexed on Google and other search engines would be nice, it's my understanding that this isn't possible for more than 1k pages. I'll take a closer look at SF, thanks!

-

JR.XYZA almost 11 years

Our particular problem is that the old platform passes bare product/category IDs in the URL via GET. Obviously we want to use friendlier URLs in Magento, hence the 301s. Thanks for sharing your opinions on working with Magento from an SEO perspective; these are all issues we're aware of and have plans for.

-

JR.XYZA almost 11 years

I've spent some time with the trial version of Screaming Frog, and it looks like the full version will do what we need quite nicely. Thanks for the recommendation and the helpful link!

-

Revious over 8 years

I get an error in Excel...