How do CUDA blocks/warps/threads map onto CUDA cores?

Solution 1

Two of the best references are

I'll try to answer each of your questions.

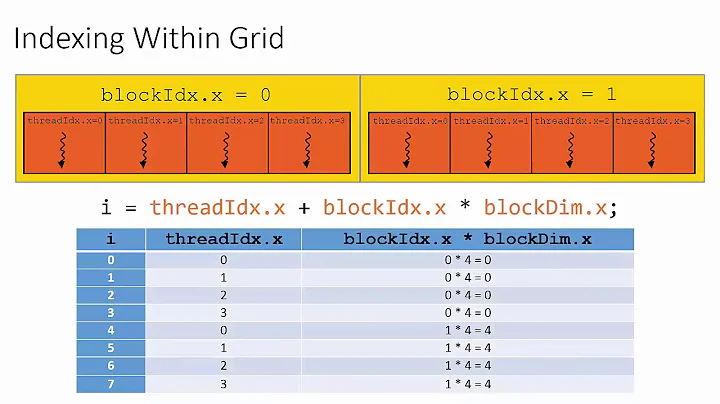

The programmer divides work into threads, threads into thread blocks, and thread blocks into grids. The compute work distributor allocates thread blocks to Streaming Multiprocessors (SMs). Once a thread block is distributed to a SM the resources for the thread block are allocated (warps and shared memory) and threads are divided into groups of 32 threads called warps. Once a warp is allocated it is called an active warp. The two warp schedulers pick two active warps per cycle and dispatch warps to execution units. For more details on execution units and instruction dispatch see 1 p.7-10 and 2.

4'. There is a mapping between laneid (threads index in a warp) and a core.

5'. If a warp contains less than 32 threads it will in most cases be executed the same as if it has 32 threads. Warps can have less than 32 active threads for several reasons: number of threads per block is not divisible by 32, the program execute a divergent block so threads that did not take the current path are marked inactive, or a thread in the warp exited.

6'. A thread block will be divided into WarpsPerBlock = (ThreadsPerBlock + WarpSize - 1) / WarpSize There is no requirement for the warp schedulers to select two warps from the same thread block.

7'. An execution unit will not stall on a memory operation. If a resource is not available when an instruction is ready to be dispatched the instruction will be dispatched again in the future when the resource is available. Warps can stall at barriers, on memory operations, texture operations, data dependencies, ... A stalled warp is ineligible to be selected by the warp scheduler. On Fermi it is useful to have at least 2 eligible warps per cycle so that the warp scheduler can issue an instruction.

See reference 2 for differences between a GTX480 and GTX560.

If you read the reference material (few minutes) I think you will find that your goal does not make sense. I'll try to respond to your points.

1'. If you launch kernel<<<8, 48>>> you will get 8 blocks each with 2 warps of 32 and 16 threads. There is no guarantee that these 8 blocks will be assigned to different SMs. If 2 blocks are allocated to a SM then it is possible that each warp scheduler can select a warp and execute the warp. You will only use 32 of the 48 cores.

2'. There is a big difference between 8 blocks of 48 threads and 64 blocks of 6 threads. Let's assume that your kernel has no divergence and each thread executes 10 instructions.

- 8 blocks with 48 threads = 16 warps * 10 instructions = 160 instructions

- 64 blocks with 6 threads = 64 warps * 10 instructions = 640 instructions

In order to get optimal efficiency the division of work should be in multiples of 32 threads. The hardware will not coalesce threads from different warps.

3'. A GTX560 can have 8 SM * 8 blocks = 64 blocks at a time or 8 SM * 48 warps = 512 warps if the kernel does not max out registers or shared memory. At any given time on a portion of the work will be active on SMs. Each SM has multiple execution units (more than CUDA cores). Which resources are in use at any given time is dependent on the warp schedulers and instruction mix of the application. If you don't do TEX operations then the TEX units will be idle. If you don't do a special floating point operation the SUFU units will idle.

4'. Parallel Nsight and the Visual Profiler show

a. executed IPC

b. issued IPC

c. active warps per active cycle

d. eligible warps per active cycle (Nsight only)

e. warp stall reasons (Nsight only)

f. active threads per instruction executed

The profiler do not show the utilization percentage of any of the execution units. For GTX560 a rough estimate would be IssuedIPC / MaxIPC. For MaxIPC assume GF100 (GTX480) is 2 GF10x (GTX560) is 4 but target is 3 is a better target.

Solution 2

"E. If a warp contains 20 threads, but currently there are only 16 cores available, the warp will not run."

is incorrect. You are confusing cores in their usual sense (also used in CPUs) - the number of "multiprocessors" in a GPU, with cores in nVIDIA marketing speak ("our card has thousands of CUDA cores").

A warp itself can only be scheduled on a single core (= multiprocessor), and can run up to 32 threads at the same time; it cannot use more than a single core.

The number "48 warps" is the maximum number of active warps (warps which may be chosen to be scheduled for work in the next cycle, at any given cycle) per multiprocessor, on nVIDIA GPUs with Compute Capability 2.x; and this number corresponds to 1536 = 48 x 32 threads.

Answer based on this webinar

Related videos on Youtube

Daedalus

Updated on August 23, 2020Comments

-

Daedalus almost 4 years

I have been using CUDA for a few weeks, but I have some doubts about the allocation of blocks/warps/thread. I am studying the architecture from a didactic point of view (university project), so reaching peak performance is not my concern.

First of all, I would like to understand if I got these facts straight:

The programmer writes a kernel, and organize its execution in a grid of thread blocks.

Each block is assigned to a Streaming Multiprocessor (SM). Once assigned it cannot migrate to another SM.

Each SM splits its own blocks into Warps (currently with a maximum size of 32 threads). All the threads in a warp executes concurrently on the resources of the SM.

The actual execution of a thread is performed by the CUDA Cores contained in the SM. There is no specific mapping between threads and cores.

If a warp contains 20 thread, but currently there are only 16 cores available, the warp will not run.

On the other hand if a block contains 48 threads, it will be split into 2 warps and they will execute in parallel provided that enough memory is available.

If a thread starts on a core, then it is stalled for memory access or for a long floating point operation, its execution could resume on a different core.

Are they correct?

Now, I have a GeForce 560 Ti so according to the specifications it is equipped with 8 SM, each containing 48 CUDA cores (384 cores in total).

My goal is to make sure that every core of the architecture executes the SAME instructions. Assuming that my code will not require more register than the ones available in each SM, I imagined different approaches:

I create 8 blocks of 48 threads each, so that each SM has 1 block to execute. In this case will the 48 threads execute in parallel in the SM (exploiting all the 48 cores available for them)?

Is there any difference if I launch 64 blocks of 6 threads? (Assuming that they will be mapped evenly among the SMs)

If I "submerge" the GPU in scheduled work (creating 1024 blocks of 1024 thread each, for example) is it reasonable to assume that all the cores will be used at a certain point, and will perform the same computations (assuming that the threads never stall)?

Is there any way to check these situations using the profiler?

Is there any reference for this stuff? I read the CUDA Programming guide and the chapters dedicated to hardware architecture in "Programming Massively Parallel Processors" and "CUDA Application design and development"; but I could not get a precise answer.

-

Konstantin Burlachenko about 6 yearsI would like append as a comment what is "CUDA core". "CUDA core" or "Execution unit" is fully pipelined integer ALU and FPU that executes one arithmetic instruction instruction per clock cycle in one cuda thread.

Konstantin Burlachenko about 6 yearsI would like append as a comment what is "CUDA core". "CUDA core" or "Execution unit" is fully pipelined integer ALU and FPU that executes one arithmetic instruction instruction per clock cycle in one cuda thread.

-

Daedalus about 12 yearsThank you for your answer. I read the references, but there are a few things that I don't understand in your answer. In the following questions I am assuming that we are using a Fermi architecture with 48 cores (16 cores * 3 "core groups"): 1. You mentioned a mapping between cores and laneid. What kind of mapping is it? 2. From the references I got that each "core group" execute at most an half-warp (16 threads) per clock cycle. So in theory if we have 48 threads in the same block, they will be organized into 3 half-warps and execute in parallel on the 48 cores. Am I right?

-

Greg Smith about 12 yearsCUDA cores are the number of single precision FP units. Thinking of execution in terms of CUDA cores is not correct. Each warp has 32 threads. These threads will be issued to a group of execution units (e.g. 16 cuda cores). In order to issue to all 48 cores in a single clock one of the two warp schedulers needs to select a warp that meets the req of a superscalar pair and both instructions need to be of a type executed by CUDA cores. In addition the other warp scheduler has to pick a warp whose next instruction will be executed by CUDA cores.

-

Greg Smith about 12 yearsThere is no requirement that warps be in the same block or that warps in a block have the same program counter.

-

Daedalus about 12 yearsLet's reason at a single SM scope. I create a block with 48 threads. Each thread executes just a 32bit integer addition between two registers. There are three half-warps. The first scheduler picks the two of them and sends them to the core group #1 and #2. The second scheduler picks the remaining half-warp and sends it to the core group #3. The three half-warps execute the sum in parallel. Is that right?

-

Greg Smith about 12 yearsIn you example each scheduler is picking a warp and issuing 1 instruction. In this case only 2 groups of execution units will be used. In order to use more execution units 1 of the schedulers has to dual-issue. As indicated in the references there are multiple types of execution units (not just what is coined cuda cores) and there are instruction pairing rules (not well documented) that must be met for the schedulers to dual-issue.

-

Greg K. over 8 years@GregSmith i'm searching all over the web to find out where this 8 active blocks per SM in Fermi architecture comes from. Its not even mentioned in the fermi whitepaper. Do you have any more reference about that?

-

Greg Smith over 8 years@GregKasapidis See the CUDA Programming Guide (docs.nvidia.com/cuda/cuda-c-programming-guide/…) Section G. Compute Capabilities Table 13. Technical Specifications per Compute Capability row "Maximum number of resident blocks per multiprocessor".

-

einpoklum about 7 years@GregSmith: Edited the answer to address this. It's fine that you were patient with it, but - it's been five years...

-

Adarsh over 6 yearssingle core (= multiprocessor) ? I think question assumes terminology single core = processor and not multiprocessor. With your terminology your answer is correct.

-

Gumby The Green over 4 years@Greg Smith You said it backwards. The grid is divided into blocks, which are divided into threads - not the other way around. This had me confused for a few minutes.

Gumby The Green over 4 years@Greg Smith You said it backwards. The grid is divided into blocks, which are divided into threads - not the other way around. This had me confused for a few minutes. -

Greg Smith over 4 yearsFrom a hardware perspective the work is executed as a grid is rasterized into blocks; blocks are rasterized into threads. From a developer perspective of breaking down a problem you often start from what does a single thread/work-item have to do then you group work-items into block then blocks into grids. It is the opposite perspective. If this is confusing I can update the answer.

-

Steven Lu about 4 yearsGTX 560 is GF114? Not GF104?

Steven Lu about 4 yearsGTX 560 is GF114? Not GF104? -

OOM about 3 yearsAs I understood good, to find the max wraps allowed be multiprocessor I can use the Cuda Occupency calculator and read it from the line "Max Warps per Multiprocessor" Then, I have a GTX 770 (SDK 3.0), I determinate the best call like this:

OOM about 3 yearsAs I understood good, to find the max wraps allowed be multiprocessor I can use the Cuda Occupency calculator and read it from the line "Max Warps per Multiprocessor" Then, I have a GTX 770 (SDK 3.0), I determinate the best call like this:MaxWrapPerMultiprocessor/WarpAllocationGranuality = BlockPerSm; TotalBlock=BlockPerSm*NbrSm; TotalThreadPerBlock= WarpAllocationGranuality * ThreadPerWarp;Then:64/4=16;TotalBlock=16*8=128;TotalThreadPerBlock=4*32=128;Then call my cuda function like this:myfunc<<<128,128>>>(); -

Nikola Smiljanić over 2 years@GregSmith

8 SM * 48 warps = 512 warpsShouldn't this be 384?