How do I access a google cloud storage bucket using a service account from the command line?

Solution 1

I had the same problem. The default key file that the Google Developers Console gave me was a .json file with the key material in a JSON field. I revoked the service account with `gcloud auth revoke`, generated a new key in the Developers Console, and downloaded it as a .p12 file. This time, after activating the service account, it worked.
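To tell which kind of key file you actually downloaded, a quick check along these lines may help. This is only a sketch: `key_format` is a hypothetical helper name, and it assumes a JSON key carries a `"private_key"` field while a .p12 file is binary data with no PEM header.

```shell
# Hypothetical helper: guess the format of a downloaded service account key.
# Prints "json", "pem", or "binary" (a .p12 file is binary PKCS#12 data).
key_format() {
  key_file=$1
  if [ ! -r "$key_file" ]; then
    echo "cannot read $key_file" >&2
    return 1
  fi
  # Check for JSON first: a JSON key also embeds a PEM header inside its
  # "private_key" field, so the order of these tests matters.
  if grep -q '"private_key"' "$key_file"; then
    echo json
  elif grep -q -e '-----BEGIN' "$key_file"; then
    echo pem
  else
    echo binary
  fi
}
```

Calling `key_format <path-to-keyfile>` before activating the account shows whether the file is the JSON variant described above.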

Solution 2

For the record, I saw the same error message today while trying to enable a service account on a GCE instance with a generated JSON key file. The gcloud SDK preinstalled on the instance was too old and couldn't handle JSON keys properly. The tool also gave no feedback when I activated the account this way; only later, when I tried to use the account for authorization, did it fail with the error from the question.

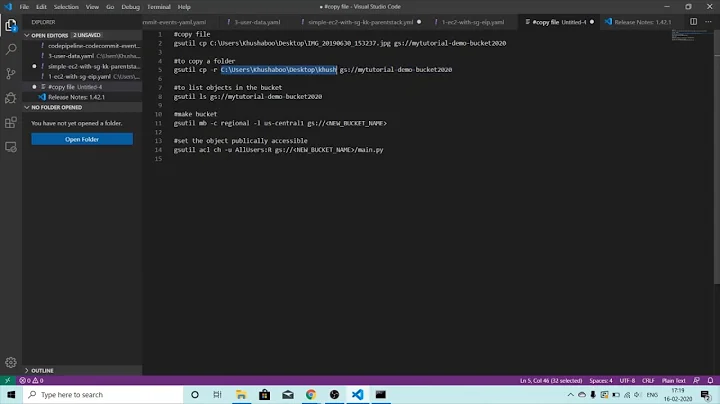

After I installed the current version, I was able to enable my service account with `gcloud auth activate-service-account --key-file /key.json`, and then `gsutil cp file gs://testbucket/` worked correctly.
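The two commands from this answer can be wrapped into one step, roughly like this. It is a minimal sketch: `push_with_service_account` is a hypothetical helper name, and it assumes a sufficiently recent `gcloud` and `gsutil` are on the `PATH`.

```shell
# Hypothetical helper: activate a service account, then copy one file.
# Usage: push_with_service_account <key-file> <source> <destination>
push_with_service_account() {
  key_file=$1
  src=$2
  dest=$3
  # Fail early with a clear message instead of a late authorization error.
  if [ ! -r "$key_file" ]; then
    echo "key file not readable: $key_file" >&2
    return 1
  fi
  gcloud auth activate-service-account --key-file "$key_file" || return 1
  gsutil cp "$src" "$dest"
}
```

With the paths from the answer, the call would be `push_with_service_account /key.json file gs://testbucket/`.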


bjoseru

Updated on September 18, 2022

Comments

-

bjoseru over 1 year

I thought it would be pretty straightforward to do this, but I can't get it to work:

I'm trying to push files from a server (GCE) to a Google Cloud Storage bucket. To avoid granting the `gsutil` command on the server too many rights, I have created a "Service Account" in the credentials section of my Google project.

To the bucket `gs://mybucket` I have added the email address of that service account with OWNER permissions as a USER.

On the server I activated the service account like this:

    $ gcloud auth activate-service-account --key-file <path-to-keyfile> myservice
    $ gcloud auth list
    Credentialed accounts:
     - [email protected]
     - myservice (active)

    To set the active account, run:
     $ gcloud config set account <account>

So everything seems fine so far. However, accessing the bucket fails:

    $ gsutil cp tempfile gs://mybucket
    CommandException: Error retrieving destination bucket gs://mybucket/: [('PEM routines', 'PEM_read_bio', 'no start line')]
    $ gsutil cp tempfile gs://mybucket/tempfile
    Failure: [('PEM routines', 'PEM_read_bio', 'no start line')].

Of course, I did verify that the ACLs of the bucket show the service account as OWNER. I also tried this on a different machine with a different OS, with the same result. Needless to say, I can't make sense of the error messages myself. I would appreciate any suggestions. Detailed error log in this gist.

Update:

After removing `~/.config`, where `gcloud` stores its authorization data, use of the deprecated command `gsutil config -e` will generate `~/.boto` with the service account as intended. Subsequent access to `gs://mybucket` does work.

However, I'm not sure this is the path I'm supposed to follow. How do I get this to work using `gcloud auth`?

-

bjoseru almost 10 years

Thank you for pointing this out. Apparently the combination of the .p12 key file AND naming the account exactly like the email address of the account did it for me. (And I thought I had read somewhere that one could use any name for the account.)

-

Joe Bowbeer over 7 years

By the way, `openssl pkcs12 -export -nocerts` will convert the private key (PEM) extracted from the JSON file to a .p12 file, in case this is easier than downloading a new key.
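The conversion mentioned in this comment might look roughly like the sketch below. It rests on several assumptions: `json_key_to_p12` is a hypothetical helper name, `jq` and `openssl` are installed, the JSON key stores the PEM-encoded key in its `private_key` field, and the export password is one you choose yourself.

```shell
# Hypothetical helper: convert a JSON service account key to a .p12 file.
# Usage: json_key_to_p12 <key.json> <out.p12> <export-password>
json_key_to_p12() {
  json_key=$1
  p12_out=$2
  password=$3
  if [ ! -r "$json_key" ]; then
    echo "cannot read $json_key" >&2
    return 1
  fi
  pem_tmp=$(mktemp) || return 1
  # Extract the PEM private key from the JSON field, then export it
  # without certificates into a PKCS#12 container.
  jq -r '.private_key' "$json_key" > "$pem_tmp" &&
    openssl pkcs12 -export -nocerts -inkey "$pem_tmp" \
      -out "$p12_out" -passout "pass:$password"
  status=$?
  # Never leave the extracted private key lying around.
  rm -f "$pem_tmp"
  return $status
}
```

The temporary PEM file is removed whether or not the conversion succeeds, so the unencrypted key does not stay on disk.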