How do I ensure that the text encoded in a form is UTF-8?

Solution 1

- How does a browser determine which encodings to use when a user is typing into a text box?

By default, it uses the encoding the page was decoded with. According to the spec, you should be able to override this with the accept-charset attribute of the <form> element, but IE is buggy, so you shouldn't rely on this (I've seen several different sources describe several different bugs, and I don't have all the relevant versions of IE in front of me to test, so I'll leave it at that).

- How can javascript determine the encoding of a string value in an html text box?

All strings in JavaScript are encoded in UTF-16. The browser maps everything into UTF-16 for JavaScript, and converts from UTF-16 into whatever encoding the page uses when the form is submitted.

UTF-16 is an encoding that grew out of UCS-2. Originally, it was thought that 65,536 code points would be enough for all of Unicode, and so a 16-bit character encoding would be sufficient. It turned out that this is not the case, and so the code space was expanded to 1,114,112 code points. In order to maintain backwards compatibility, two previously unused ranges of 16-bit values (high surrogates U+D800 to U+DBFF and low surrogates U+DC00 to U+DFFF) were set aside for surrogate pairs, in which two 16-bit code units are used to encode a single character. Read up on UTF-16 and UCS-2 on Wikipedia for details.
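To make the surrogate mechanism concrete, here is a minimal JavaScript sketch of the arithmetic. The function names are hypothetical helpers, not a library API. It subtracts 0x10000 from a supplementary code point and splits the remaining 20 bits across the two surrogate ranges:

```javascript
// Split a supplementary code point (U+10000..U+10FFFF) into a UTF-16 surrogate pair.
function toSurrogatePair(codePoint) {
  if (codePoint < 0x10000 || codePoint > 0x10ffff) {
    throw new RangeError("expected a code point in U+10000..U+10FFFF");
  }
  const offset = codePoint - 0x10000;    // a 20-bit value
  const high = 0xd800 + (offset >> 10);  // top 10 bits -> D800..DBFF
  const low = 0xdc00 + (offset & 0x3ff); // bottom 10 bits -> DC00..DFFF
  return [high, low];
}

// Inverse: recombine a high and a low surrogate into one code point.
function fromSurrogatePair(high, low) {
  return (high - 0xd800) * 0x400 + (low - 0xdc00) + 0x10000;
}

// U+10900 is PHOENICIAN LETTER ALF.
const [hi, lo] = toSurrogatePair(0x10900);
console.log(hi.toString(16), lo.toString(16)); // d802 dd00
console.log(String.fromCharCode(hi, lo) === String.fromCodePoint(0x10900)); // true
```

The same pair of values is what charCodeAt(0) and charCodeAt(1) report for that character.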

The upshot is that when you have a string str in JavaScript, str.length does not give you the number of characters; it gives you the number of code units, and two code units are used to encode a single character if that character is outside the Basic Multilingual Plane. For instance, "abc".length gives you 3, but "𐤀𐤁𐤂".length gives you 6; and "𐤀𐤁𐤂".substring(0,1) gives what looks like an empty string, since half of a surrogate pair cannot be displayed, but the string still contains that lone surrogate (I will not guarantee this works cross-browser; I believe it is acceptable to drop broken characters). To get the first complete character, you must use "𐤀𐤁𐤂".substring(0,2).
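A short sketch of that behaviour. It assumes a modern engine (ES2015 or later) for the \u{...} escapes and for string iteration, neither of which was available when this answer was written:

```javascript
// The three Phoenician letters "𐤀𐤁𐤂" (U+10900..U+10902), all outside the BMP.
const phoenician = "\u{10900}\u{10901}\u{10902}";

console.log(phoenician.length);      // 6: UTF-16 code units, not characters
console.log([...phoenician].length); // 3: string iteration walks by code point

// substring works in code units, so (0, 1) keeps only a lone high surrogate.
const half = phoenician.substring(0, 1);
console.log(half.length, half.charCodeAt(0).toString(16)); // 1 d802

// (0, 2) keeps the whole surrogate pair, i.e. the first full character.
console.log(phoenician.substring(0, 2) === [...phoenician][0]); // true
```

Iterating a string (spread, Array.from, for...of) is usually what "count the characters" means here; length and substring still operate on code units.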

- Can I force the browser to only use UTF-8 encoding?

The best way to do this is to deliver your page in UTF-8. Ensure that your web server is sending the appropriate Content-Type: text/html; charset=UTF-8 header. You may also want to embed a <meta charset="UTF-8"> element in your <head> element, for cases in which the Content-Type header does not get set properly (such as when your page is loaded from the local disk).
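A minimal sketch of serving a page this way, assuming Node.js and its built-in http module. The page markup, the handler name, and the port are illustrative, not part of the original answer. It sets both the header and the <meta> fallback:

```javascript
const http = require("http");

// Illustrative page: because it is served and declared as UTF-8,
// the browser will submit the form's text as UTF-8 by default.
const PAGE = `<!DOCTYPE html>
<html>
<head>
<meta charset="UTF-8">
<title>Comment form</title>
</head>
<body>
<form method="post" action="/submit">
<input type="text" name="comment">
<button type="submit">Send</button>
</form>
</body>
</html>`;

// Hypothetical request handler: the charset parameter on Content-Type tells
// the browser how to decode the page; the <meta> tag is a fallback for when
// no header is present (e.g. the file is opened from disk).
function handler(req, res) {
  res.setHeader("Content-Type", "text/html; charset=UTF-8");
  res.end(PAGE, "utf8");
}

// Not called here; start it with startServer(8080) to try it out.
function startServer(port) {
  return http.createServer(handler).listen(port);
}
```

With the server running, opening http://localhost:8080/ should show the page decoded as UTF-8, so the form submits UTF-8 without any accept-charset attribute.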

- How can I convert text in arbitrary encodings to UTF-8? I assume there is a JavaScript library for this?

There isn't much need in JavaScript to encode text in particular encodings. If you are simply writing to the DOM, or reading or filling in form controls, you should just use JavaScript strings, which are treated as sequences of UTF-16 code units. XMLHttpRequest, when used to send(data) via POST, will use UTF-8 (if you pass it a document with a different encoding declared in the <?xml ...> declaration, it may or may not convert that to UTF-8, so for compatibility you generally shouldn't use anything other than UTF-8).
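To see what "will use UTF-8" means in bytes, the standard TextEncoder (a later addition, assumed available in a modern browser or Node.js) can report how large a string becomes once serialised. This is a sketch for inspection only; you do not need it in order to send data:

```javascript
// TextEncoder always encodes to UTF-8, the same encoding XMLHttpRequest
// uses when send() is given a string.
const encoder = new TextEncoder();

// Number of bytes a string occupies once serialised as UTF-8.
function utf8ByteLength(str) {
  return encoder.encode(str).length;
}

for (const s of ["abc", "é", "€", "\u{10900}"]) {
  // s.length counts UTF-16 code units; utf8ByteLength counts bytes on the wire.
  console.log(s.length, utf8ByteLength(s));
}
// 3 3
// 1 2
// 1 3
// 2 4
```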

Solution 2

I would like to ensure all text entered in the box is either encoded in UTF-8

Text in an HTML DOM, including input fields, has no intrinsic byte encoding; it is stored as Unicode characters (specifically, at a DOM and ECMAScript standard level, UTF-16 code units; in the rare case you use characters outside the Basic Multilingual Plane it is possible to see the difference, e.g. '𝅘𝅥𝅯'.length is 2).

It is only when the form is sent that the text is serialised into bytes using a particular encoding, by default the same encoding as was used to parse the page. So you should serve your page containing the form as UTF-8 (via the Content-Type header charset parameter and/or the equivalent <meta> tag).
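As an illustration of that serialisation step, here is a sketch using URLSearchParams, which always percent-encodes as UTF-8 and so mirrors what a browser sends for a form on a UTF-8 page. The field name comment is hypothetical:

```javascript
// URLSearchParams serialises as application/x-www-form-urlencoded using UTF-8,
// the same bytes a browser sends for a form on a UTF-8 page.
const params = new URLSearchParams();
params.append("comment", "café €"); // hypothetical field name and value
console.log(params.toString()); // comment=caf%C3%A9+%E2%82%AC

// Parsing goes the other way: percent-decoded bytes are read as UTF-8.
console.log(new URLSearchParams("comment=caf%C3%A9").get("comment")); // café
```

On a page decoded as windows-1252, the same é would instead be sent as %E9, which is why the page encoding matters.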

Whilst in principle there is an override for this in the accept-charset attribute of the <form> element, it doesn't work correctly (and is actively harmful in many cases) in IE. So avoid that one.

There are no explicit encoding-handling functions available in JavaScript itself. You can hack together a Unicode-to-UTF-8-bytes encoder by chaining unescape(encodeURIComponent(str)) (and the other way round with decodeURIComponent(escape(bytes))), but that's about it.
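A sketch of that hack and its inverse. The wrapper names are hypothetical. The intermediate value is a "binary string" holding one character per UTF-8 byte:

```javascript
// encodeURIComponent percent-encodes the string's UTF-8 bytes ("é" -> "%C3%A9");
// unescape then turns each %XX into the single character U+00XX.
function toUtf8BinaryString(str) {
  return unescape(encodeURIComponent(str));
}

// Inverse: escape re-percent-encodes each byte-character, and
// decodeURIComponent interprets the resulting %XX sequences as UTF-8.
function fromUtf8BinaryString(binary) {
  return decodeURIComponent(escape(binary));
}

const binary = toUtf8BinaryString("é");
console.log(binary.length, binary.charCodeAt(0).toString(16), binary.charCodeAt(1).toString(16)); // 2 c3 a9
console.log(fromUtf8BinaryString(binary)); // é
```

One caveat: encodeURIComponent throws a URIError if the input contains a lone surrogate, so malformed strings fail loudly rather than being silently mangled.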

Solution 3

The text in a text box is not encoded in any way; it is "text", an abstract series of characters. In almost every contemporary application, that text is expressed as a sequence of Unicode code points, which are integers mapped to particular abstract characters. Text doesn't get "encoded" until it is turned into a sequence of bytes, as when submitting the form. At that time, the encoding is determined by the encoding of the HTML page in which the form appears, or by the accept-charset attribute of the form element.
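To illustrate that the same text only becomes bytes once an encoding is chosen, here is a small Node.js sketch (Buffer is Node-specific; in a browser, TextEncoder covers the UTF-8 case):

```javascript
// Node.js only: Buffer.from(string, encoding) serialises the same text
// into different byte sequences depending on the encoding chosen.
const text = "café";

console.log(Buffer.from(text, "utf8"));    // <Buffer 63 61 66 c3 a9>
console.log(Buffer.from(text, "latin1"));  // <Buffer 63 61 66 e9>
console.log(Buffer.from(text, "utf16le")); // <Buffer 63 00 61 00 66 00 e9 00>
```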

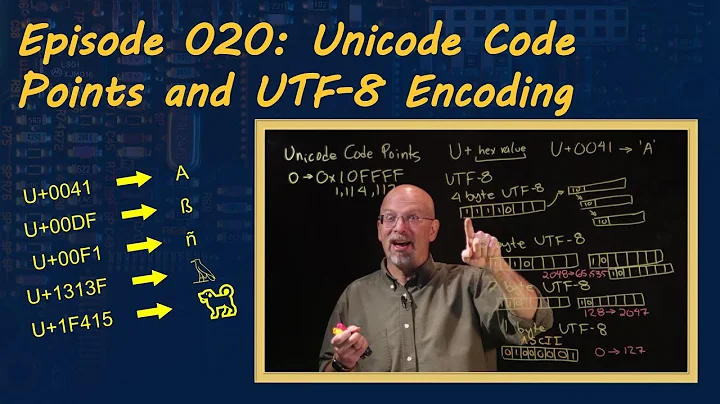

Related videos on Youtube

Ethan Heilman

Security Hobbyist, Cryptography Researcher, Software Engineer Github: https://github.com/EthanHeilman Hackernews: http://news.ycombinator.com/user?id=EthanHeilman Twitter: https://twitter.com/Ethan_Heilman Tumblr: http://ethanheilman.tumblr.com/ ResearchGate: http://www.researchgate.net/profile/Ethan_Heilman/ Play FlipIt: http://ethanheilman.github.com/flipIt/playable_with_instructions.html Blog entries: A Look at Security Through Obesity. Castle meet Cannon: What to do after you lose? FlipIt: An Interesting Game.

Updated on April 17, 2022

Comments

-

Ethan Heilman about 2 years

I have an HTML box in which users may enter text. I would like to ensure all text entered in the box is either encoded in UTF-8 or converted to UTF-8 when a user finishes typing. Furthermore, I don't quite understand how the various UTF encodings are chosen when text is entered into a text box.

Generally I'm curious about the following:

- How does a browser determine which encodings to use when a user is typing into a text box?

- How can JavaScript determine the encoding of a string value in an HTML text box?

- Can I force the browser to only use UTF-8 encoding?

- How can I convert text in arbitrary encodings to UTF-8? I assume there is a JavaScript library for this?

** Edit **

Removed some questions unnecessary to my goals.

This tutorial helped me understand JavaScript character codes better, but it is buggy and does not actually translate character codes to UTF-8 in all cases: http://www.webtoolkit.info/javascript-base64.html
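One common way to make base64 safe for arbitrary text is to base64 the UTF-8 bytes rather than the UTF-16 code units. This sketch assumes a modern environment with TextEncoder, TextDecoder, btoa, and atob (current browsers, or Node.js 16+); the helper names are hypothetical:

```javascript
// base64 over the UTF-8 bytes of a string. btoa() only accepts characters
// U+0000..U+00FF, so the bytes are first turned into one character per byte.
function base64EncodeUtf8(str) {
  const bytes = new TextEncoder().encode(str);
  let binary = "";
  for (const b of bytes) binary += String.fromCharCode(b);
  return btoa(binary);
}

// Inverse: base64 -> bytes -> string, reading the bytes as UTF-8.
function base64DecodeUtf8(b64) {
  const binary = atob(b64);
  const bytes = Uint8Array.from(binary, (c) => c.charCodeAt(0));
  return new TextDecoder().decode(bytes);
}

console.log(base64EncodeUtf8("é"));    // w6k=
console.log(base64DecodeUtf8("w6k=")); // é
```

Calling btoa directly on text only works for characters up to U+00FF; btoa("€") throws.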

-

Mark Byers over 14 years

That's a lot of questions! Do we have to answer them all to post an answer?

-

Ethan Heilman over 14 years

@Mark Byers Not at all; I feel they are related to the problem I'm trying to solve. Answers to the first 4 questions put me closer to my solution.

-

Ethan Heilman over 14 years

So what if I want to convert the value of that form to its hexadecimal equivalent in string form? What encoding does ECMAScript see?
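A sketch of both views of a form value in hex. The helper names are hypothetical; in a page the input would come from something like an input element's value property. ECMAScript itself sees UTF-16 code units, while a UTF-8 submission carries UTF-8 bytes:

```javascript
// Zero-padded, upper-case hex for a number.
const hex = (n, width) => n.toString(16).toUpperCase().padStart(width, "0");

// What ECMAScript itself sees: one 16-bit code unit per string index.
function utf16Hex(str) {
  const units = [];
  for (let i = 0; i < str.length; i++) {
    units.push(hex(str.charCodeAt(i), 4));
  }
  return units.join(" ");
}

// What a UTF-8 form submission carries: the string's UTF-8 bytes.
function utf8Hex(str) {
  return Array.from(new TextEncoder().encode(str), (b) => hex(b, 2)).join(" ");
}

// Hypothetical form value.
const value = "é\u{10900}";
console.log(utf16Hex(value)); // 00E9 D802 DD00
console.log(utf8Hex(value));  // C3 A9 F0 90 A4 80
```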

-

Ethan Heilman over 14 years

I've seen unescape(encodeURIComponent(str)) before, but I worried it may not work in all cases.

-

Brian Campbell over 14 years

@e5 As I said in my answer, strings in JavaScript appear as sequences of UTF-16 code units. If you access a string character by character, or check its length, you will see surrogate code points if you have characters beyond the BMP.

-

Ethan Heilman over 14 years

@Brian Campbell, thanks for the quick response. What are surrogate code points? What is the relationship between the hex values for a UTF-16 character and the char codes that JavaScript gives you?

-

Amit Patil over 14 years

It's solid, and pretty much the only thing escape/unescape should ever be used for (even then, it's pretty rare that you ever need it).

-

Amit Patil over 14 years

e5: They're the same. Both JavaScript (ECMAScript standard) and the DOM (W3C DOM Level 1 Core) specify UTF-16 code units as the basic character type. A surrogate code unit is part of a ‘surrogate pair’ that encodes one Unicode character (code point) in two UTF-16 code units. This ugliness proved necessary because after a few versions of Unicode it became clear that 65536 characters just weren't enough. Many systems use UTF-16 code units in their basic string type, including Java and Windows. Others such as Linux and Python can support a wider string type that doesn't need the surrogates.

-

Amit Patil over 14 years

It's widely accepted that web browsers think ISO-8859-1 is cp1252, and this isn't the reason accept-charset is broken. What IE actually does is treat accept-charset as only a backup charset, used when the charset taken from the page itself cannot hold the content of the form field. That means when your form is submitted you can't know whether IE used the page encoding or the accept-charset encoding to encode a form field (in fact you are likely to have a mixture across the form). This makes it impossible to recover the original characters.

-

Brian Campbell over 14 years

OK, I have removed the reference to accept-charset; after some research, I've seen several sources describe the bugs differently, I don't have all the relevant versions of IE in front of me to test, and it's not necessary anyhow if you set your character encoding on the whole page to UTF-8.

-

Frank Farmer over 14 years

Excellent answer. Additionally, the server accepting the POST is ultimately responsible for validating and filtering POSTed content, because you can't guarantee that the client submitting the POST actually ran your JavaScript.
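Following on from that point, here is a minimal server-side check, assuming Node.js (or any runtime with the WHATWG TextDecoder). Decoding with fatal: true rejects malformed UTF-8 instead of quietly replacing it with U+FFFD. The function name is hypothetical:

```javascript
// Strict decoder: throws a TypeError on malformed UTF-8 instead of
// substituting U+FFFD for the bad bytes.
const strictUtf8 = new TextDecoder("utf-8", { fatal: true });

// Returns the decoded string, or null if the bytes are not valid UTF-8.
function decodeUtf8OrNull(bytes) {
  try {
    return strictUtf8.decode(bytes);
  } catch (e) {
    if (e instanceof TypeError) return null; // not valid UTF-8
    throw e;
  }
}

console.log(decodeUtf8OrNull(new Uint8Array([0x63, 0x61, 0x66, 0xc3, 0xa9]))); // café
console.log(decodeUtf8OrNull(new Uint8Array([0x63, 0x61, 0x66, 0xe9])));       // null
```

The second input is "café" as a windows-1252 page would send it; the lone 0xE9 byte is not valid UTF-8, so the check rejects it rather than letting mis-encoded text through.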

-

asciimo over 6 years

-

Amit Patil over 6 years

@asciimo you can't use decodeURI for this purpose, which has nothing to do with URIs. Blanket-replacing unescape with decodeURI[Component] isn't a good idea, unless you're sure that it was used in error when URI-decoding was meant, and you're sure you don't have escape data that could get mangled by the change. These functions are now in the “web browser legacy features” annex, but that does not mean they are deprecated or likely to disappear soon. The new-world replacement for this specific purpose is the Encoding API, but support is too poor today.
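The Encoding API is now broadly supported (TextDecoder and TextEncoder in current browsers and Node.js). Here is a sketch of converting bytes from a legacy encoding to UTF-8 with it, assuming the runtime supports the windows-1252 label (official Node.js builds ship with full ICU, which does). The function name is hypothetical:

```javascript
// Convert bytes in some legacy encoding into a JS string, then into UTF-8 bytes.
function transcodeToUtf8(bytes, sourceEncoding) {
  const text = new TextDecoder(sourceEncoding).decode(bytes); // legacy bytes -> string
  return new TextEncoder().encode(text);                      // string -> UTF-8 bytes
}

// "café €" in windows-1252: é is 0xE9 and € is 0x80 in that encoding.
const cp1252 = new Uint8Array([0x63, 0x61, 0x66, 0xe9, 0x20, 0x80]);
const utf8 = transcodeToUtf8(cp1252, "windows-1252");
console.log(Array.from(utf8, (b) => b.toString(16)).join(" ")); // 63 61 66 c3 a9 20 e2 82 ac
```

Under the WHATWG Encoding Standard the label "iso-8859-1" also maps to windows-1252, consistent with the earlier comment about browsers treating ISO-8859-1 as cp1252.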