How does X-server calculate DPI?

Solution 1

As far as I know, starting with version 1.7, Xorg defaults to 96 DPI. It doesn't calculate anything unless you specify DisplaySize in an Xorg config file. Also, don't rely on the output of xdpyinfo.

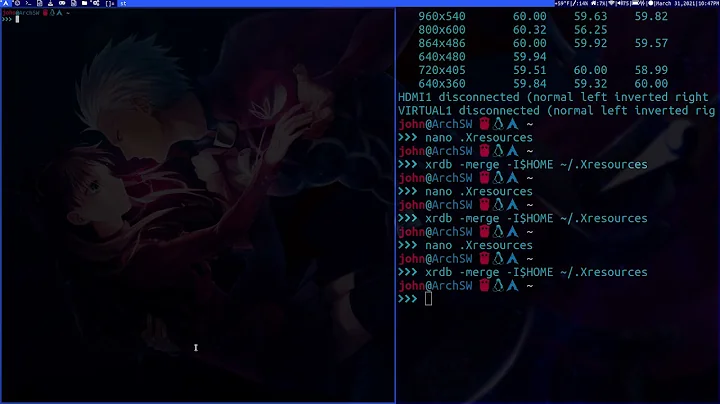

My laptop runs on Intel Sandy Bridge. Here is an excerpt from my Xorg.0.log on a fresh Arch Linux install:

(==) intel(0): DPI set to (96, 96)

Running

xdpyinfo | grep -E 'dimensions|resolution'

returns:

dimensions: 1600x900 pixels (423x238 millimeters)

resolution: 96x96 dots per inch

which is far from true. My screen actually measures 344x193 mm, so xdpyinfo evidently derives the physical size from the pixel resolution (1600x900) and the default 96 DPI. If I add

........

DisplaySize 344 193

........

in /etc/X11/xorg.conf.d/monitor.conf and restart, Xorg.0.log correctly reports:

(**) intel(0): Display dimensions: (344, 193) mm

(**) intel(0): DPI set to (118, 118)
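Both sets of numbers follow from the same pixels/millimeters/25.4 relationship, which a short Python sketch can check. It assumes the reported sizes and DPI values are simply rounded to the nearest integer (Xorg's exact internal rounding is an assumption here), and the helper names are hypothetical:

```python
MM_PER_INCH = 25.4  # millimeters per inch


def size_mm_from_dpi(pixels: int, dpi: float) -> int:
    """Physical size (mm) implied by a pixel count at a given DPI.

    Rounding to the nearest millimeter is an assumption of this sketch.
    """
    return round(pixels / dpi * MM_PER_INCH)


def dpi_from_size(pixels: int, size_mm: float) -> int:
    """DPI implied by a pixel count spread over a physical size in mm.

    Rounding to the nearest whole DPI is an assumption of this sketch.
    """
    return round(pixels / size_mm * MM_PER_INCH)


# Size xdpyinfo reports for a 1600x900 screen at the 96 DPI default
print(size_mm_from_dpi(1600, 96), size_mm_from_dpi(900, 96))  # 423 238
# DPI Xorg derives from "DisplaySize 344 193"
print(dpi_from_size(1600, 344), dpi_from_size(900, 193))  # 118 118
```

The first line reproduces the bogus 423x238 mm that xdpyinfo shows; the second reproduces the 118 DPI from the log.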

However, xdpyinfo | grep -E 'dimensions|resolution' always returns:

dimensions: 1600x900 pixels (423x238 millimeters)

resolution: 96x96 dots per inch

Still, there is no visual change, because I'm using GNOME and 96 DPI is also hard-coded in gnome-settings-daemon. After patching gnome-settings-daemon, I can enjoy my native 118 DPI. But even after all that, xdpyinfo still returns:

dimensions: 1600x900 pixels (423x238 millimeters)

resolution: 96x96 dots per inch

Solution 2

How does X-server calculate DPI?

The DPI of the X server is determined in the following manner:

- The -dpi command line option has highest priority.
- If this is not used, the DisplaySize setting in the X config file is used to derive the DPI, given the screen resolution.
- If no DisplaySize is given, the monitor size values from DDC are used to derive the DPI, given the screen resolution.
- If DDC does not specify a size, 75 DPI is used by default.
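The precedence list above can be modeled as a small Python function. This is a hypothetical sketch of the described rules, not code from the X server: the function and parameter names are invented for illustration, and treating a 0x0 size as "not specified" is an assumption. The fallback is a parameter because the other answer reports that Xorg 1.7 and later default to 96 DPI instead:

```python
from typing import Optional, Tuple

MM_PER_INCH = 25.4
Size = Tuple[float, float]  # (width, height)


def resolve_dpi(
    resolution_px: Tuple[int, int],
    cmdline_dpi: Optional[float] = None,     # hypothetical stand-in for "-dpi N"
    display_size_mm: Optional[Size] = None,  # DisplaySize from the X config file
    ddc_size_mm: Optional[Size] = None,      # monitor size reported over DDC
    fallback_dpi: float = 75.0,              # default when no size is known
) -> Size:
    """Hypothetical model of the DPI precedence described above (not Xorg source)."""
    # 1. The -dpi command line option has the highest priority.
    if cmdline_dpi is not None:
        return (cmdline_dpi, cmdline_dpi)
    # 2. DisplaySize, then 3. the DDC size. A 0x0 size counts as
    #    "not specified" (an assumption of this sketch).
    width_px, height_px = resolution_px
    for size_mm in (display_size_mm, ddc_size_mm):
        if size_mm is not None and size_mm[0] > 0 and size_mm[1] > 0:
            width_mm, height_mm = size_mm
            return (width_px / width_mm * MM_PER_INCH,
                    height_px / height_mm * MM_PER_INCH)
    # 4. No usable size at all: use the fixed default.
    return (fallback_dpi, fallback_dpi)


x, y = resolve_dpi((1366, 768), ddc_size_mm=(361, 203))
print(round(x), round(y))  # 96 96
```

Passing both display_size_mm and ddc_size_mm shows the config value winning, which mirrors the second rule taking precedence over the third.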

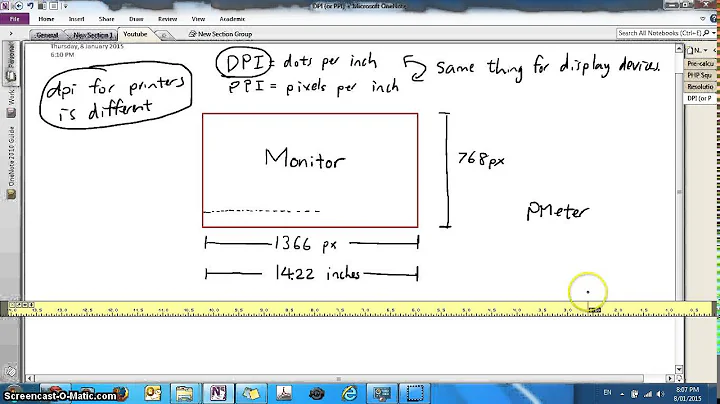

It may know how many pixels I have on my display, but is that enough?

No. It knows not only the virtual screen size in pixels but (usually) also the physical display size in millimeters. You can check your display dimensions by running the following in a terminal window:

~ $ xdpyinfo | grep dimension

dimensions: 1366x768 pixels (361x203 millimeters)

Your X server performs the following calculation:

- 1366 pixels divided by 361 millimeters, multiplied by 25.4 millimeters per inch = 96.11191136 dots per inch (DPI).

- 768 pixels divided by 203 millimeters, multiplied by 25.4 millimeters per inch = 96.09458128 dots per inch.
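The same two calculations in Python, as a minimal sketch (the helper name dpi is hypothetical):

```python
MM_PER_INCH = 25.4  # millimeters per inch


def dpi(pixels: int, millimeters: float) -> float:
    """Pixels divided by millimeters, multiplied by 25.4 mm per inch."""
    return pixels / millimeters * MM_PER_INCH


print(f"{dpi(1366, 361):.8f}")  # 96.11191136 (horizontal)
print(f"{dpi(768, 203):.8f}")   # 96.09458128 (vertical)
```

Rounded to whole numbers, both values give the 96x96 reported below.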

You can check what DPI your X server has calculated using the following command:

~ $ xdpyinfo | grep resolution

resolution: 96x96 dots per inch

Looks good, doesn't it?
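To reproduce the resolution line from the dimensions line yourself, a small parser is enough. This sketch assumes the dimensions line has exactly the format shown above; the function name is hypothetical, and rounding to whole DPI is an assumption based on the "96x96 dots per inch" output:

```python
import re
from typing import Tuple

# Matches e.g. "dimensions:    1366x768 pixels (361x203 millimeters)"
DIMENSIONS_RE = re.compile(
    r"dimensions:\s+(\d+)x(\d+) pixels \((\d+)x(\d+) millimeters\)"
)


def resolution_from_xdpyinfo(text: str) -> Tuple[int, int]:
    """Derive (x_dpi, y_dpi) from the 'dimensions' line of xdpyinfo output."""
    match = DIMENSIONS_RE.search(text)
    if match is None:
        raise ValueError("no 'dimensions' line found")
    w_px, h_px, w_mm, h_mm = map(int, match.groups())
    # pixels / millimeters * 25.4, rounded to the nearest whole DPI
    return round(w_px * 25.4 / w_mm), round(h_px * 25.4 / h_mm)


sample = "  dimensions:    1366x768 pixels (361x203 millimeters)\n"
print(resolution_from_xdpyinfo(sample))  # (96, 96)
```

On a running X session, the input could come from subprocess.run(["xdpyinfo"], capture_output=True, text=True).stdout instead of the hard-coded sample.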

For further reading:

- http://scanline.ca/dpi/

- https://wiki.archlinux.org/index.php/Xorg#Display_Size_and_DPI

- http://www.cyberciti.biz/faq/how-do-i-find-out-screen-resolution-of-my-linux-desktop/


yrajabi


Updated on September 18, 2022

Comments

-

yrajabi over 1 year

From Xfce Docs:

In case you want to override the DPI (dots per inch) value calculated by the X-server, you can select the checkbox and use the spin box to specify the resolution to use when your screen renders fonts.

But how does X-server do its calculation? What assumptions are made in the process and can some of the parameters be overridden?

It may know how many pixels I have on my display, but is that enough?

-

Tushar Nallan almost 11 years

@alois-mahdal I guess I misunderstood the question at first. I've improved the answer by adding the display dimensions used by the X server.

-

CMCDragonkai about 8 years

X doesn't seem to allow per-monitor DPI settings for a single screen; the DPI is shared across all monitors in the same screen. I also found that the millimeters reported by xdpyinfo don't add up with the millimeters from xrandr --query.
