How to convert bigint to datetime in Hive?


Solution 1

I've had this problem. My approach was to create the Hive table first, mapping each Teradata data type to its equivalent in your Hive version. After that, you can use the Sqoop argument `--hive-table <table-name>` to insert into that table.
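As a rough illustration of creating the table first, the Hive DDL might look like the sketch below. The table and column names (`orders`, `order_id`, `order_date`) are hypothetical, and the right Hive type for each Teradata column depends on your Hive version. Whether the data Sqoop writes then reads back cleanly as DATE also depends on the file format used for the import, so treat this as a starting point rather than a verified recipe.

```sql
-- Hypothetical target table; names and types are assumptions for illustration.
CREATE TABLE IF NOT EXISTS orders (
  order_id   BIGINT,
  order_date DATE   -- the DATE type is available in Hive 0.12.0 and later
);
```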

Solution 2

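`select to_date(from_unixtime(<your timestamp>));`

Example:

`select to_date(from_unixtime(1490985000));`

Output: 2017-04-01 (the instant is 2017-03-31 18:30:00 UTC, so the date shown depends on the time zone Hive applies)

I hope this works. Please let me know if I am wrong.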
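The sample value given in the comments, 1450051200000, has 13 digits, which suggests milliseconds since the epoch, while `from_unixtime` expects seconds. A minimal sketch of the adjustment, assuming the value really is in milliseconds:

```sql
-- Divide by 1000 to get seconds; "/" yields a DOUBLE in Hive, so cast back to BIGINT.
SELECT to_date(from_unixtime(CAST(1450051200000 / 1000 AS BIGINT)));
```

1450051200 seconds corresponds to 2015-12-14 00:00:00 UTC, so the date returned may be 2015-12-13 or 2015-12-14 depending on the time zone Hive applies.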

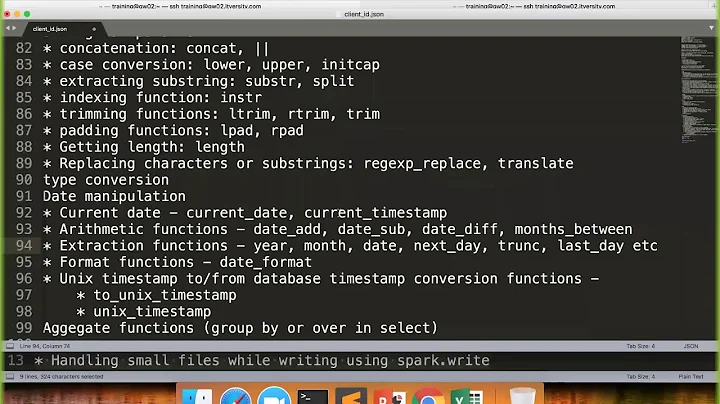

Related videos on Youtube

Author by

Stack 777

Updated on June 04, 2022

Comments

-

Stack 777 almost 2 years

I imported the data from Teradata into Hive using the sqoop import command.

One of the tables in Teradata has a date field. After the import, that date field appears as a timestamp with the bigint datatype.

But I need the field to have the date datatype in the Hive table. Can anyone please suggest how to achieve this?

-

Andrew (almost 7 years ago): What does a timestamp with bigint datatype look like? Add some samples to your post please.

-

shaine (almost 7 years ago): I came across this last year. The only way around it that I found was to cast the field as a varchar as part of the sqoop export.

-

Stack 777 (almost 7 years ago): My date field in Hue is a 13-digit timestamp of bigint type, which looks like this: 1450051200000. But I'm expecting the date type instead of bigint in Hue after sqooping.

-

Stack 777 (almost 7 years ago): I've tried to cast the bigint into a string field using the to_char function. But instead of a string, is there any way to convert directly from bigint to a datetime type?

-

sumitya (almost 7 years ago): Why don't you use the Teradata function `cast(column_name as date)` in the sqoop query?

-

Stack 777 (almost 7 years ago): I finally resolved my issue. Using the sqoop import command below, I'm able to convert the bigint value into a timestamp. `$ sqoop import ... --map-column-java id=String,value=Integer`

-

-

Stack 777 (almost 7 years ago): The format you mentioned works as expected. But it is just a read, right? What I'm expecting is that, after the sqoop import, the date field in Hive should be of type datetime.

-

Stack 777 (almost 7 years ago): Can you please post the sqoop import command with the equivalence between the Teradata datatypes and my Hive version's datatypes?

-

danielsepulvedab (almost 7 years ago): @Stack777 It would be just a `CREATE TABLE` statement in Hive, not an extra argument in sqoop. That way you can define your table schema just as you want it.
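
To get a table whose column really is of type DATE, rather than converting on every read, one option is to materialise the conversion once with a `CREATE TABLE ... AS SELECT`. The sketch below assumes the Sqoop import landed in a hypothetical staging table `orders_staging` with a BIGINT column `order_date_ms` holding epoch milliseconds; all table and column names here are invented for illustration.

```sql
-- orders_staging, orders_typed, order_id, and order_date_ms are hypothetical names.
CREATE TABLE orders_typed AS
SELECT
  order_id,
  -- milliseconds -> seconds -> 'yyyy-MM-dd' -> DATE
  CAST(to_date(from_unixtime(CAST(order_date_ms / 1000 AS BIGINT))) AS DATE) AS order_date
FROM orders_staging;
```

As with any `from_unixtime` call, the resulting date depends on the time zone Hive applies to the conversion.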