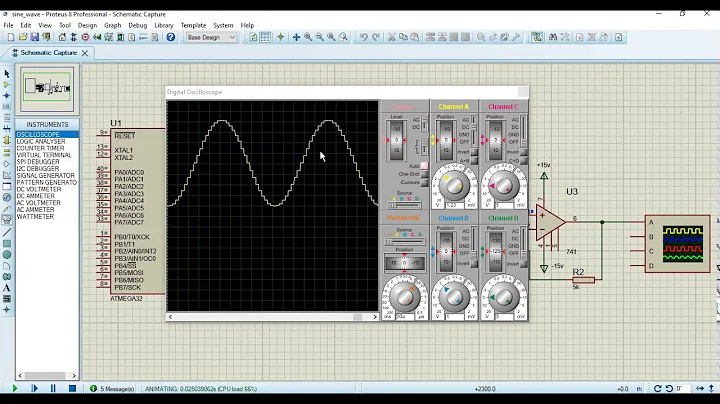

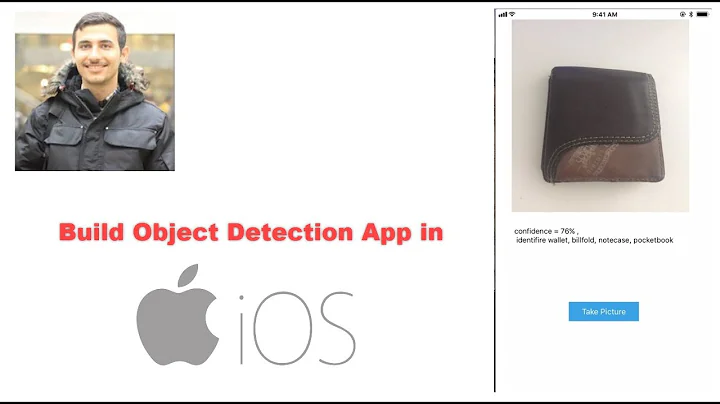

How to correctly read decoded PCM samples on iOS using AVAssetReader -- currently incorrect decoding

Solution 1

I am currently working on a project that also involves extracting audio samples from the iTunes library and feeding them into an Audio Unit.

The Audio Unit render callback is included below for reference. The input format is set to SInt16StereoStreamFormat.

I used Michael Tyson's circular buffer implementation, TPCircularBuffer, as the buffer storage. It is very easy to use and understand. Thanks, Michael!

    - (void) loadBuffer:(NSURL *)assetURL_
    {
        if (nil != self.iPodAssetReader) {
            [iTunesOperationQueue cancelAllOperations];
            [self cleanUpBuffer];
        }

        NSDictionary *outputSettings = [NSDictionary dictionaryWithObjectsAndKeys:
                                        [NSNumber numberWithInt:kAudioFormatLinearPCM], AVFormatIDKey,
                                        [NSNumber numberWithFloat:44100.0], AVSampleRateKey,
                                        [NSNumber numberWithInt:16], AVLinearPCMBitDepthKey,
                                        [NSNumber numberWithBool:NO], AVLinearPCMIsNonInterleaved,
                                        [NSNumber numberWithBool:NO], AVLinearPCMIsFloatKey,
                                        [NSNumber numberWithBool:NO], AVLinearPCMIsBigEndianKey,
                                        nil];

        AVURLAsset *asset = [AVURLAsset URLAssetWithURL:assetURL_ options:nil];
        if (asset == nil) {
            NSLog(@"asset is not defined!");
            return;
        }

        NSLog(@"Total Asset Duration: %f", CMTimeGetSeconds(asset.duration));

        NSError *assetError = nil;
        self.iPodAssetReader = [AVAssetReader assetReaderWithAsset:asset error:&assetError];
        if (assetError) {
            NSLog (@"error: %@", assetError);
            return;
        }

        AVAssetReaderOutput *readerOutput = [AVAssetReaderAudioMixOutput assetReaderAudioMixOutputWithAudioTracks:asset.tracks audioSettings:outputSettings];

        if (! [iPodAssetReader canAddOutput: readerOutput]) {
            NSLog (@"can't add reader output... die!");
            return;
        }

        // add output reader to reader
        [iPodAssetReader addOutput: readerOutput];

        if (! [iPodAssetReader startReading]) {
            NSLog(@"Unable to start reading!");
            return;
        }

        // Init circular buffer
        TPCircularBufferInit(&playbackState.circularBuffer, kTotalBufferSize);

        __block NSBlockOperation * feediPodBufferOperation = [NSBlockOperation blockOperationWithBlock:^{
            while (![feediPodBufferOperation isCancelled] && iPodAssetReader.status != AVAssetReaderStatusCompleted) {
                if (iPodAssetReader.status == AVAssetReaderStatusReading) {
                    // Check if the available buffer space is enough to hold at least one cycle of the sample data
                    if (kTotalBufferSize - playbackState.circularBuffer.fillCount >= 32768) {
                        CMSampleBufferRef nextBuffer = [readerOutput copyNextSampleBuffer];

                        if (nextBuffer) {
                            AudioBufferList abl;
                            CMBlockBufferRef blockBuffer;
                            CMSampleBufferGetAudioBufferListWithRetainedBlockBuffer(nextBuffer, NULL, &abl, sizeof(abl), NULL, NULL, kCMSampleBufferFlag_AudioBufferList_Assure16ByteAlignment, &blockBuffer);
                            UInt64 size = CMSampleBufferGetTotalSampleSize(nextBuffer);

                            int bytesCopied = TPCircularBufferProduceBytes(&playbackState.circularBuffer, abl.mBuffers[0].mData, size);

                            if (!playbackState.bufferIsReady && bytesCopied > 0) {
                                playbackState.bufferIsReady = YES;
                            }

                            CFRelease(nextBuffer);
                            CFRelease(blockBuffer);
                        }
                        else {
                            break;
                        }
                    }
                }
            }
            NSLog(@"iPod Buffer Reading Finished");
        }];

        [iTunesOperationQueue addOperation:feediPodBufferOperation];
    }

    static OSStatus ipodRenderCallback (
        void                       *inRefCon,       // A pointer to a struct containing the complete audio data
                                                    // to play, as well as state information such as the
                                                    // first sample to play on this invocation of the callback.
        AudioUnitRenderActionFlags *ioActionFlags,  // Unused here. When generating audio, use ioActionFlags to indicate silence
                                                    // between sounds; for silence, also memset the ioData buffers to 0.
        const AudioTimeStamp       *inTimeStamp,    // Unused here.
        UInt32                      inBusNumber,    // The mixer unit input bus that is requesting some new
                                                    // frames of audio data to play.
        UInt32                      inNumberFrames, // The number of frames of audio to provide to the buffer(s)
                                                    // pointed to by the ioData parameter.
        AudioBufferList            *ioData          // On output, the audio data to play. The callback's primary
                                                    // responsibility is to fill the buffer(s) in the
                                                    // AudioBufferList.
    )
    {
        Audio* audioObject = (Audio*)inRefCon;

        AudioSampleType *outSample = (AudioSampleType *)ioData->mBuffers[0].mData;

        // Zero-out all the output samples first
        memset(outSample, 0, inNumberFrames * kUnitSize * 2);

        if ( audioObject.playingiPod && audioObject.bufferIsReady) {
            // Pull audio from circular buffer
            int32_t availableBytes;

            AudioSampleType *bufferTail = TPCircularBufferTail(&audioObject.circularBuffer, &availableBytes);

            memcpy(outSample, bufferTail, MIN(availableBytes, inNumberFrames * kUnitSize * 2) );
            TPCircularBufferConsume(&audioObject.circularBuffer, MIN(availableBytes, inNumberFrames * kUnitSize * 2) );
            audioObject.currentSampleNum += MIN(availableBytes / (kUnitSize * 2), inNumberFrames);

            if (availableBytes <= inNumberFrames * kUnitSize * 2) {
                // Buffer is running out or playback is finished
                audioObject.bufferIsReady = NO;
                audioObject.playingiPod = NO;
                audioObject.currentSampleNum = 0;

                if ([[audioObject delegate] respondsToSelector:@selector(playbackDidFinish)]) {
                    [[audioObject delegate] performSelector:@selector(playbackDidFinish)];
                }
            }
        }

        return noErr;
    }
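The pattern in the render callback above (zero the output, copy whatever the circular buffer holds, then consume it) can be sketched in plain C. This is only an illustration under stated assumptions: `Ring`, `ring_produce`, and `ring_render` are hypothetical names, not TPCircularBuffer's API, and unlike TPCircularBuffer this sketch makes no attempt to be safe across a producer thread and a render thread.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical fixed-size byte ring buffer; NOT TPCircularBuffer's API. */
#define RING_CAPACITY 64

typedef struct {
    uint8_t  data[RING_CAPACITY];
    uint32_t head;   /* next write position */
    uint32_t tail;   /* next read position */
    uint32_t fill;   /* bytes currently stored */
} Ring;

static void ring_init(Ring *r) { memset(r, 0, sizeof *r); }

/* Producer side: copy len bytes in, or refuse if there is not enough room. */
static int ring_produce(Ring *r, const void *src, uint32_t len) {
    if (len > RING_CAPACITY - r->fill) return 0;
    const uint8_t *bytes = src;
    for (uint32_t i = 0; i < len; i++) {
        r->data[r->head] = bytes[i];
        r->head = (r->head + 1) % RING_CAPACITY;
    }
    r->fill += len;
    return 1;
}

/* Consumer side: zero the output first, then copy as much as is available.
   Returns the number of bytes of real audio copied; the rest stays silent. */
static uint32_t ring_render(Ring *r, void *dst, uint32_t wanted) {
    assert(dst != NULL);
    memset(dst, 0, wanted);
    uint32_t n = wanted < r->fill ? wanted : r->fill;
    uint8_t *out = dst;
    for (uint32_t i = 0; i < n; i++) {
        out[i] = r->data[r->tail];
        r->tail = (r->tail + 1) % RING_CAPACITY;
    }
    r->fill -= n;
    return n;
}
```

Zeroing before copying means an underrun produces silence for the missing tail instead of stale data.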

    - (void) setupSInt16StereoStreamFormat {

        // AudioSampleType is used for the sample data here. This obtains the
        // byte size of the type for use in filling in the ASBD.
        size_t bytesPerSample = sizeof (AudioSampleType);

        // Fill the application audio format struct's fields to define a linear PCM,
        // stereo, interleaved stream at the hardware sample rate.
        SInt16StereoStreamFormat.mFormatID          = kAudioFormatLinearPCM;
        SInt16StereoStreamFormat.mFormatFlags       = kAudioFormatFlagsCanonical;
        SInt16StereoStreamFormat.mBytesPerPacket    = 2 * bytesPerSample;   // *** kAudioFormatFlagsCanonical <- implicit interleaved data => (left sample + right sample) per Packet
        SInt16StereoStreamFormat.mFramesPerPacket   = 1;
        SInt16StereoStreamFormat.mBytesPerFrame     = SInt16StereoStreamFormat.mBytesPerPacket * SInt16StereoStreamFormat.mFramesPerPacket;
        SInt16StereoStreamFormat.mChannelsPerFrame  = 2;                    // 2 indicates stereo
        SInt16StereoStreamFormat.mBitsPerChannel    = 8 * bytesPerSample;
        SInt16StereoStreamFormat.mSampleRate        = graphSampleRate;

        NSLog (@"The stereo stream format for the \"iPod\" mixer input bus:");
        [self printASBD: SInt16StereoStreamFormat];
    }
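The size fields set above follow from two numbers, the channel count and the bytes per sample. A small sketch of that arithmetic in plain C is given here; `PCMLayout`, `interleaved_layout`, and `bytes_for_frames` are hypothetical names that only mirror the size-related fields of AudioStreamBasicDescription, and the sketch assumes interleaved, uncompressed PCM.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for the size-related fields of AudioStreamBasicDescription. */
typedef struct {
    uint32_t bytesPerPacket;
    uint32_t framesPerPacket;
    uint32_t bytesPerFrame;
    uint32_t channelsPerFrame;
    uint32_t bitsPerChannel;
} PCMLayout;

/* Interleaved linear PCM: one frame holds one sample for every channel. */
static PCMLayout interleaved_layout(uint32_t channels, uint32_t bytesPerSample) {
    assert(channels > 0 && bytesPerSample > 0);
    PCMLayout l;
    l.channelsPerFrame = channels;
    l.bitsPerChannel   = 8 * bytesPerSample;
    l.bytesPerFrame    = channels * bytesPerSample;
    l.framesPerPacket  = 1;   /* uncompressed audio: one frame per packet */
    l.bytesPerPacket   = l.bytesPerFrame * l.framesPerPacket;
    return l;
}

/* Bytes a render callback has to fill for a given number of frames. */
static uint32_t bytes_for_frames(const PCMLayout *l, uint32_t frames) {
    return frames * l->bytesPerFrame;
}
```

With two channels and 2-byte samples, `bytes_for_frames` gives the same quantity the render callback writes as `inNumberFrames * kUnitSize * 2`.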

Solution 2

I guess it is kind of late, but you could try this library:

https://bitbucket.org/artgillespie/tslibraryimport

After using this to save the audio into a file, you could process the data with render callbacks from MixerHost.

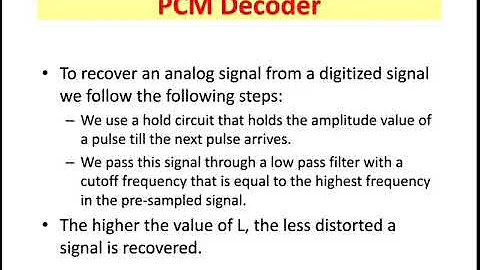

Related videos on Youtube

Peter

Updated on June 04, 2022

Comments

-

Peter almost 2 years

I am currently working on an application as part of my Bachelor in Computer Science. The application will correlate data from the iPhone hardware (accelerometer, gps) and music that is being played.

The project is still in its infancy; I have worked on it for only 2 months.

Where I am stuck right now, and where I need help, is reading PCM samples from songs in the iTunes library and playing them back using an audio unit. The implementation I would like to get working does the following: it chooses a random song from iTunes, reads samples from it when required, and stores them in a buffer, let's call it sampleBuffer. Later on, in the consumer model, the audio unit (which has a mixer and a RemoteIO output) has a callback where I simply copy the required number of samples from sampleBuffer into the buffer specified in the callback.

What I then hear through the speakers is not quite what I expect: I can recognize that it is playing the song, but it seems to be incorrectly decoded and has a lot of noise. I attached an image which shows the first ~half a second (24576 samples @ 44.1kHz), and it does not resemble normal-looking output.

Before I get into the listing: I have checked that the file is not corrupted, and I have written test cases for the buffer (so I know the buffer does not alter the samples). Although this might not be the best way to do it (some would argue for the audio queue route), I want to perform various manipulations on the samples, as well as change the song before it is finished, rearrange which song is played, and so on. There may also be some incorrect settings in the audio unit; however, the graph that displays the samples (which shows that they are decoded incorrectly) is taken straight from the buffer, so for now I am only trying to find out why reading from disk and decoding does not work correctly. Right now I simply want to get a play-through working.

I can't post images because I am new to Stack Overflow, so here is the link to the image: http://i.stack.imgur.com/RHjlv.jpg

Listing:

This is where I set up the audioReadSettings, which will be used for the AVAssetReaderAudioMixOutput:

    // Set the read settings
    audioReadSettings = [[NSMutableDictionary alloc] init];
    [audioReadSettings setValue:[NSNumber numberWithInt:kAudioFormatLinearPCM] forKey:AVFormatIDKey];
    [audioReadSettings setValue:[NSNumber numberWithInt:16] forKey:AVLinearPCMBitDepthKey];
    [audioReadSettings setValue:[NSNumber numberWithBool:NO] forKey:AVLinearPCMIsBigEndianKey];
    [audioReadSettings setValue:[NSNumber numberWithBool:NO] forKey:AVLinearPCMIsFloatKey];
    [audioReadSettings setValue:[NSNumber numberWithBool:NO] forKey:AVLinearPCMIsNonInterleaved];
    [audioReadSettings setValue:[NSNumber numberWithFloat:44100.0] forKey:AVSampleRateKey];

The following listing is a method that receives an NSString with the persistent ID of the song:

    -(BOOL)setNextSongID:(NSString*)persistand_id {
        assert(persistand_id != nil);

        MPMediaItem *song = [self getMediaItemForPersistantID:persistand_id];
        NSURL *assetUrl = [song valueForProperty:MPMediaItemPropertyAssetURL];
        AVURLAsset *songAsset = [AVURLAsset URLAssetWithURL:assetUrl
                                                    options:[NSDictionary dictionaryWithObject:[NSNumber numberWithBool:YES]
                                                                                        forKey:AVURLAssetPreferPreciseDurationAndTimingKey]];

        NSError *assetError = nil;
        assetReader = [[AVAssetReader assetReaderWithAsset:songAsset error:&assetError] retain];
        if (assetError) {
            NSLog(@"error: %@", assetError);
            return NO;
        }

        CMTimeRange timeRange = CMTimeRangeMake(kCMTimeZero, songAsset.duration);
        [assetReader setTimeRange:timeRange];

        track = [[songAsset tracksWithMediaType:AVMediaTypeAudio] objectAtIndex:0];
        assetReaderOutput = [AVAssetReaderAudioMixOutput assetReaderAudioMixOutputWithAudioTracks:[NSArray arrayWithObject:track]
                                                                                     audioSettings:audioReadSettings];
        if (![assetReader canAddOutput:assetReaderOutput]) {
            NSLog(@"cant add reader output... die!");
            return NO;
        }
        [assetReader addOutput:assetReaderOutput];
        [assetReader startReading];

        // just getting some basic information about the track to print
        NSArray *formatDesc = ((AVAssetTrack*)[[assetReaderOutput audioTracks] objectAtIndex:0]).formatDescriptions;
        for (unsigned int i = 0; i < [formatDesc count]; ++i) {
            CMAudioFormatDescriptionRef item = (CMAudioFormatDescriptionRef)[formatDesc objectAtIndex:i];
            const CAStreamBasicDescription *asDesc = (CAStreamBasicDescription*)CMAudioFormatDescriptionGetStreamBasicDescription(item);
            if (asDesc) {
                // get data
                numChannels = asDesc->mChannelsPerFrame;
                sampleRate = asDesc->mSampleRate;
                asDesc->Print();
            }
        }

        [self copyEnoughSamplesToBufferForLength:24000];
        return YES;
    }

The following presents the function -(void)copyEnoughSamplesToBufferForLength:

    -(void)copyEnoughSamplesToBufferForLength:(UInt32)samples_count {
        [w_lock lock];
        int stillToCopy = 0;
        if (sampleBuffer->numSamples() < samples_count) {
            stillToCopy = samples_count;
        }

        NSAutoreleasePool *apool = [[NSAutoreleasePool alloc] init];
        CMSampleBufferRef sampleBufferRef;
        SInt16 *dataBuffer = (SInt16*)malloc(8192 * sizeof(SInt16));

        int a = 0;
        while (stillToCopy > 0) {
            sampleBufferRef = [assetReaderOutput copyNextSampleBuffer];
            if (!sampleBufferRef) {
                // end of song or no more samples
                return;
            }

            CMBlockBufferRef blockBuffer = CMSampleBufferGetDataBuffer(sampleBufferRef);
            CMItemCount numSamplesInBuffer = CMSampleBufferGetNumSamples(sampleBufferRef);
            AudioBufferList audioBufferList;
            CMSampleBufferGetAudioBufferListWithRetainedBlockBuffer(sampleBufferRef, NULL, &audioBufferList, sizeof(audioBufferList), NULL, NULL, 0, &blockBuffer);

            int data_length = floorf(numSamplesInBuffer * 1.0f);
            int j = 0;
            for (int bufferCount=0; bufferCount < audioBufferList.mNumberBuffers; bufferCount++) {
                SInt16* samples = (SInt16 *)audioBufferList.mBuffers[bufferCount].mData;
                for (int i=0; i < numSamplesInBuffer; i++) {
                    dataBuffer[j] = samples[i];
                    j++;
                }
            }

            CFRelease(sampleBufferRef);
            sampleBuffer->putSamples(dataBuffer, j);
            stillToCopy = stillToCopy - data_length;
        }

        free(dataBuffer);
        [w_lock unlock];
        [apool release];
    }

Now the sampleBuffer will have incorrectly decoded samples. Can anyone help me understand why this is so? This happens for different files in my iTunes library (mp3, aac, wav, etc.). Any help would be greatly appreciated. If you need any other listing of my code, or perhaps a sample of what the output sounds like, I will attach it on request. I have been sitting on this for the past week trying to debug it and have found no help online. Everyone seems to be doing it my way, yet it seems that only I have this issue.

Thanks for any help at all!

Peter
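One thing that may be worth checking in the listing above, offered as a hypothesis rather than a confirmed diagnosis: for PCM audio, CMSampleBufferGetNumSamples counts frames, while an interleaved stereo buffer holds two SInt16 values per frame. If that is the case here, the inner loop copies only the first half of every buffer. The plain C sketch below sizes the copy from the byte count instead (the role played by `mDataByteSize` in an AudioBuffer); `copy_interleaved` and `frames_in` are hypothetical helper names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Copies one interleaved buffer into dst. byteSize plays the role of
   AudioBuffer.mDataByteSize; dstCapacity is counted in 16-bit values.
   Returns the number of 16-bit values copied (frames * channels). */
static size_t copy_interleaved(const int16_t *src, size_t byteSize,
                               int16_t *dst, size_t dstCapacity) {
    assert(src != NULL && dst != NULL);
    size_t values = byteSize / sizeof(int16_t);
    if (values > dstCapacity) values = dstCapacity;   /* never overrun dst */
    for (size_t i = 0; i < values; i++) dst[i] = src[i];
    return values;
}

/* Frames represented by a number of interleaved values. */
static size_t frames_in(size_t values, size_t channels) {
    assert(channels > 0);
    return values / channels;
}
```

Under the same assumption, a destination sized for 8192 SInt16 values would hold only 4096 stereo frames, so the capacity check matters as much as the loop bound.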

-

Peter about 12 years

AudioFilePlayer only allows me to specify a single file to play, and it cannot be from iTunes. ExtAudioFileRef also uses Audio Sessions, which do not allow access to iTunes content (or at least I can't get it to work). Has anyone implemented something similar that could help me? Please.

-

dubbeat about 12 years

I don't have much experience with the iTunes library, I'm afraid. Does this help, though? subfurther.com/blog/2010/12/13/…

-

Peter about 12 years

Thanks a lot! Really helpful!

-

the-a-train almost 12 years

What is kUnitSize? And what is kTotalBufferSize?

-

infiniteloop almost 12 years

@smartfaceweb: In my case, I used the following settings:

    #define kUnitSize sizeof(AudioSampleType)
    #define kBufferUnit 655360
    #define kTotalBufferSize kBufferUnit * kUnitSize

-
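To get a feel for what these settings amount to, the arithmetic can be written out in plain C. This is a sketch under assumptions: `UNIT_SIZE` is written as 2 on the assumption that AudioSampleType is a 16-bit integer, the macro names are renamed stand-ins for the ones quoted above, and `chunks_that_fit` and `seconds_buffered` are hypothetical helpers.

```c
#include <assert.h>
#include <stdint.h>

/* Values from the settings quoted above; UNIT_SIZE is written as 2 on the
   assumption that AudioSampleType is a 16-bit integer. */
#define UNIT_SIZE          2u
#define BUFFER_UNIT        655360u
#define TOTAL_BUFFER_SIZE  (BUFFER_UNIT * UNIT_SIZE)   /* parenthesized on purpose */

/* How many whole chunks of chunkBytes fit in the buffer. */
static uint32_t chunks_that_fit(uint32_t chunkBytes) {
    assert(chunkBytes > 0);
    return TOTAL_BUFFER_SIZE / chunkBytes;
}

/* Seconds of interleaved audio the buffer can hold. */
static double seconds_buffered(double sampleRate, uint32_t channels) {
    assert(sampleRate > 0 && channels > 0);
    return TOTAL_BUFFER_SIZE / (sampleRate * channels * UNIT_SIZE);
}
```

Under those assumptions the buffer is 1310720 bytes, which holds forty 32768-byte chunks (the free-space threshold used in the answer's loadBuffer) or roughly 7.4 seconds of 44.1 kHz stereo audio. The parentheses around the product keep the macro correct if it is ever used on the right-hand side of a division.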

abbood over 11 years

@infiniteloop Can you please let us know if this code works on iOS as well? Based on my limited research into audio units so far, it seems that iOS has far fewer audio unit features than its OS X counterpart.

-

infiniteloop over 11 years

@www.fossfactory.org It works on iOS 4.3 through 5.1.1, but I have not tried it on iOS 6 yet.

-

abbood over 11 years

@infiniteloop I was wondering about one thing. Suppose I have the TPCircularBuffer declared as a public ivar: how do I declare the property? Is it atomic or nonatomic? The author mentioned something about its atomicity in the GitHub repo: github.com/michaeltyson/TPCircularBuffer

-

infiniteloop over 11 years

@www.fossfactory.org Actually, I am wrapping the TPCircularBuffer struct inside

    typedef struct {
        AudioLibrary *audio;
        AudioUnit ioUnit;
        AudioUnit mixerUnit;
        BOOL playingiPod;
        BOOL bufferIsReady;
        TPCircularBuffer circularBuffer;
        Float64 samplingRate;
    } PlaybackState, *PlaybackStatePtr;

and declaring the

    @property (assign) PlaybackState playbackState;

in the class.

-

dizy almost 10 years

You check that the circular buffer has at least 32768 bytes available before filling, and that is the same number CMSampleBufferGetTotalSampleSize returns, but I wonder if that size might ever be different for whatever reason. Is there any particular meaning to it being what it is?
