How to deal with batches with variable-length sequences in TensorFlow?

Solution 1

You can use the ideas of bucketing and padding which are described in:

Also, the rnn function, which creates the RNN network, accepts a sequence_length parameter.

As an example, you can create buckets of sentences of the same size and pad them with the necessary number of zeros (or placeholder ids that stand for a "zero word"). Then feed them along with seq_length set to the true, unpadded length of each sentence.

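import tensorflow as tf
from tensorflow.python.ops import rnn  # TF 0.x; in TF 1.x use tf.contrib.rnn.static_rnn

# cell, inputs, initial_state and batch_size are assumed to be defined already.
# One entry per example: the true (unpadded) length of each sequence.
seq_length = tf.placeholder(tf.int32, [None])

outputs, states = rnn.rnn(cell, inputs, initial_state=initial_state, sequence_length=seq_length)

sess = tf.Session()
sess.run(tf.initialize_all_variables())  # tf.global_variables_initializer() in newer TF
feed = {
    seq_length: [20] * batch_size,
    # other feeds
}
sess.run(outputs, feed_dict=feed)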
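The bucketing-and-padding step itself can be sketched in plain Python. This is a minimal sketch, not the code from the TensorFlow tutorial: it assumes each sentence is already a list of integer word ids and that id 0 (the hypothetical PAD_ID) is reserved for the padding "zero word". The lengths list of each bucket is what would be fed to seq_length.

```python
from collections import defaultdict

PAD_ID = 0  # hypothetical id reserved for the padding ("zero") word


def bucket_and_pad(sentences, bucket_sizes):
    """Group token-id sentences into buckets, pad each one to its bucket size
    with PAD_ID, and record every sentence's true (unpadded) length."""
    sizes = sorted(bucket_sizes)
    buckets = defaultdict(lambda: {"inputs": [], "lengths": []})
    for sent in sentences:
        # smallest bucket that can hold the whole sentence
        size = next((b for b in sizes if len(sent) <= b), None)
        if size is None:
            continue  # longer than the largest bucket: skipped in this sketch
        buckets[size]["inputs"].append(sent + [PAD_ID] * (size - len(sent)))
        buckets[size]["lengths"].append(len(sent))  # value to feed as seq_length
    return dict(buckets)


batches = bucket_and_pad([[4, 7, 9], [5, 2], [8, 3, 6, 1, 2, 9]], bucket_sizes=[3, 6])
print(batches[3])  # {'inputs': [[4, 7, 9], [5, 2, 0]], 'lengths': [3, 2]}
print(batches[6])  # {'inputs': [[8, 3, 6, 1, 2, 9]], 'lengths': [6]}
```

Sentences longer than the largest bucket are simply dropped here; truncating them to the largest bucket is another option.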

Take a look at this reddit thread as well:

Tensorflow basic RNN example with 'variable length' sequences

Solution 2

You can use dynamic_rnn instead and specify the length of every sequence, even within one batch, by passing an array to the sequence_length parameter.

An example is below:

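import tensorflow as tf

def length(sequence):
    # A time step counts as "used" if any feature in its frame is non-zero.
    # Assumes padding frames are all zeros and real frames never are.
    used = tf.sign(tf.reduce_max(tf.abs(sequence), reduction_indices=2))
    # Number of used frames per example -> shape [batch_size]
    length = tf.reduce_sum(used, reduction_indices=1)
    length = tf.cast(length, tf.int32)
    return length

GRUCell = tf.nn.rnn_cell.GRUCell  # tf.contrib.rnn.GRUCell in some TF 1.x releases

max_length = 100
frame_size = 64
num_hidden = 200

sequence = tf.placeholder(tf.float32, [None, max_length, frame_size])
output, state = tf.nn.dynamic_rnn(
    GRUCell(num_hidden),
    sequence,
    dtype=tf.float32,
    sequence_length=length(sequence),
)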

The code is taken from an excellent article on the topic; please check it as well.

Update: you can find another great post on dynamic_rnn vs. rnn
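To see what the length() helper computes, here is a plain-Python sketch of the same logic on nested lists, where each sequence is a list of frames standing in for the [batch, max_length, frame_size] tensor. It is an illustration, not the article's code, and it also shows the limitation that a genuine all-zero frame is not counted.

```python
def sequence_lengths(batch):
    """Pure-Python version of the length() helper: count the frames in each
    sequence that have at least one non-zero element."""
    lengths = []
    for seq in batch:
        # sign(max(|frame|)) is 1 for a frame with any non-zero value, else 0
        used = [1 if max(abs(v) for v in frame) > 0 else 0 for frame in seq]
        lengths.append(sum(used))  # reduce_sum over the time axis
    return lengths


batch = [
    [[0.5, -1.0], [2.0, 0.0], [0.0, 0.0]],  # 2 real frames + 1 padding frame
    [[1.0, 1.0], [0.0, 0.0], [0.0, 0.0]],   # 1 real frame + 2 padding frames
]
print(sequence_lengths(batch))  # [2, 1]

# Caveat: a genuine all-zero frame inside the data is not counted,
# so this 3-frame sequence is reported as length 2.
print(sequence_lengths([[[1.0, 1.0], [0.0, 0.0], [3.0, 3.0]]]))  # [2]
```

If real data can contain all-zero frames, it is safer to record the lengths when padding rather than infer them afterwards.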

Solution 3

As in Solution 1, you can bucket and pad the sentences, then pass their true lengths to rnn.rnn through its sequence_length parameter.

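The most important point: if you want to use the state obtained from one sentence as the initial state for the next one while providing sequence_length (let's say 20, with the padded sentence being 50 steps long), you want the state obtained at the 20th time step, not at the 50th. For that, stack the per-step states into one tensor:

# assumes states is the per-time-step list of states returned by early versions of rnn.rnn
packed_states = tf.pack(states)  # [num_steps, batch_size, state_size]; tf.stack in TF >= 1.0

After that, call:

for i in range(len(sentences)):
    # x, y and early_stop (the true length, e.g. 20) belong to the current sentence
    state_mat = session.run(packed_states, {
        m.input_data: x, m.targets: y, m.initial_state: state, m.early_stop: early_stop})
    # keep the state from the last real (unpadded) step as the next initial state
    state = state_mat[early_stop - 1, :, :]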
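Indexing with a single early_stop assumes every example in the batch has the same length. If the lengths differ, each example needs the state from its own last step. A plain-Python sketch, assuming the stacked states have been evaluated into a nested list indexed [step][example]; last_relevant_states is a hypothetical helper name:

```python
def last_relevant_states(stacked_states, lengths):
    """Pick, for each example, the state at its last real time step.

    stacked_states: nested list shaped [num_steps][batch_size][state_size],
        e.g. the evaluated result of the stacked states.
    lengths: true (unpadded) length of each example in the batch.
    """
    num_steps = len(stacked_states)
    picked = []
    for b, length in enumerate(lengths):
        if not 1 <= length <= num_steps:
            raise ValueError(f"length {length} outside 1..{num_steps}")
        picked.append(stacked_states[length - 1][b])  # step index is length - 1
    return picked


# 3 time steps, batch of 2, state size 1
states = [
    [[0.1], [0.4]],  # step 0
    [[0.2], [0.5]],  # step 1
    [[0.3], [0.6]],  # step 2
]
print(last_relevant_states(states, [2, 3]))  # [[0.2], [0.6]]
```

Passing lengths=[early_stop] * batch_size gives the same result as state_mat[early_stop - 1].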

Solution 4

You can limit the maximum length of your input sequences, pad the shorter ones to that length, record the length of each sequence, and use tf.nn.dynamic_rnn. It processes input sequences as usual, but after the last element of a sequence, indicated by seq_length, it just copies the cell state through and outputs a zero tensor.
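The behaviour described above can be illustrated without TensorFlow. The sketch below uses a hypothetical one-unit cell (toy_cell, with made-up weights) and only mimics the described semantics for a single sequence; it is not dynamic_rnn's implementation. It shows that the final state for a padded input equals the final state for the unpadded one, while the padding steps emit zeros.

```python
import math


def toy_cell(state, x, w=0.5, u=0.8):
    """Hypothetical scalar RNN cell: new_state = tanh(w * state + u * x)."""
    new_state = math.tanh(w * state + u * x)
    return new_state, new_state  # (output, new_state)


def run_with_length(inputs, seq_len, initial_state=0.0):
    """Run toy_cell over padded inputs, mimicking the behaviour described for
    dynamic_rnn: past seq_len the state is copied through and the output is 0."""
    state = initial_state
    outputs = []
    for t, x in enumerate(inputs):
        if t < seq_len:
            out, state = toy_cell(state, x)
        else:
            out = 0.0  # state is left unchanged
        outputs.append(out)
    return outputs, state


padded = [1.0, -0.5, 0.0, 0.0]  # true length 2, padded to 4
outs, final = run_with_length(padded, seq_len=2)
_, unpadded_final = run_with_length([1.0, -0.5], seq_len=2)
print(outs[2:])                # [0.0, 0.0]
print(final == unpadded_final)  # True
# Without the length, the padding steps keep changing the state:
print(run_with_length(padded, seq_len=4)[1] == final)  # False
```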

Solution 5

Sorry to post on a dead issue, but I just submitted a PR for a better solution. dynamic_rnn is extremely flexible but abysmally slow. It works if it is your only option, but CuDNN is much faster. This PR adds support for variable lengths to CuDNNLSTM, so you will hopefully be able to use that soon.

You need to sort the sequences by descending length. Then you can call pack_sequence, run your RNNs, and then call unpack_sequence.
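pack_sequence and unpack_sequence come from the PR and are not reproduced here. The sort and un-sort bookkeeping around them can be sketched in plain Python; sort_by_length_desc and unsort are hypothetical helper names, and upper-casing strings stands in for running the RNN.

```python
def sort_by_length_desc(batch, lengths):
    """Reorder a batch by descending length and return the permutation
    needed to restore the original order afterwards."""
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    sorted_batch = [batch[i] for i in order]
    sorted_lengths = [lengths[i] for i in order]
    # inverse[i] = position of original example i in the sorted batch
    inverse = [0] * len(order)
    for pos, i in enumerate(order):
        inverse[i] = pos
    return sorted_batch, sorted_lengths, inverse


def unsort(sorted_results, inverse):
    """Put per-example results back into the original batch order."""
    return [sorted_results[inverse[i]] for i in range(len(inverse))]


batch = ["ab", "abcd", "a"]
lengths = [2, 4, 1]
sorted_batch, sorted_lengths, inverse = sort_by_length_desc(batch, lengths)
print(sorted_batch, sorted_lengths)  # ['abcd', 'ab', 'a'] [4, 2, 1]
results = [s.upper() for s in sorted_batch]  # stand-in for running the RNN
print(unsort(results, inverse))  # ['AB', 'ABCD', 'A']
```

Keeping the inverse permutation lets the outputs line up with the original labels without re-sorting them.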

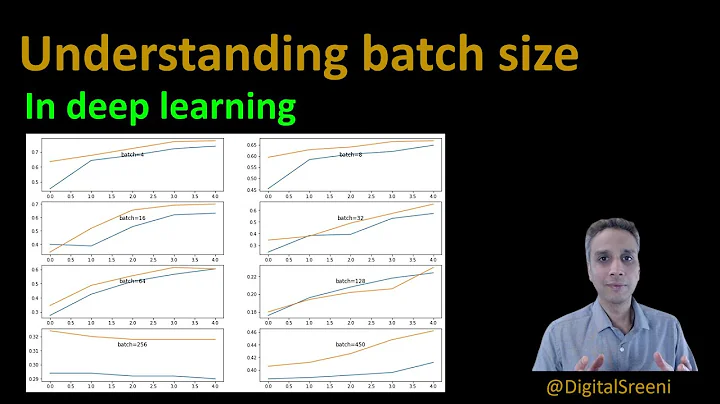


Seja Nair

Updated on February 07, 2020


Seja Nair about 4 years

I was trying to use an RNN (specifically, LSTM) for sequence prediction. However, I ran into an issue with variable sequence lengths. For example,

sent_1 = "I am flying to Dubai"
sent_2 = "I was traveling from US to Dubai"

I am trying to predict the next word after the current one with a simple RNN based on this Benchmark for building a PTB LSTM model.

However, the num_steps parameter (used for unrolling to the previous hidden states) should remain the same in each TensorFlow epoch. Basically, batching sentences is not possible, as the sentences vary in length.

# inputs = [tf.squeeze(input_, [1])
#           for input_ in tf.split(1, num_steps, inputs)]
# outputs, states = rnn.rnn(cell, inputs, initial_state=self._initial_state)

Here, num_steps needs to be changed for every sentence in my case. I have tried several hacks, but nothing seems to work.

clearlight over 7 years: Link requires a Google account to read.


Sonal Gupta over 7 years: Is it possible to infer on sentences that are longer than the max sequence length during inference?

Seja Nair over 7 years: @SonalGupta Can you please be more specific?

tnq177 over 7 years: @SonalGupta Yes. During inference, just feed in one time-step input at a time, i.e. you unroll the RNN for only one time step.

Sonal Gupta over 7 years: @Seja Nair Sorry, there is a typo in my question: "is it possible to infer on sentences that are more than max sequence length during training?". More specifically: stackoverflow.com/questions/39881639/…

Sonal Gupta over 7 years: @tnq177 Wouldn't that defeat the point of it being a sequential model?

Shamane Siriwardhana almost 7 years: Here, what happens when we get seq2seq sequences of different sizes? Does the LSTM get padded up to the largest one?

Datalker almost 7 years: In this case no padding happens, because we explicitly pass the length of each sequence to the function.

Mike Khan over 6 years: Do you think padding sentences (or larger blocks of text) with zeros could cause a vanishing gradient problem? For example, if our longest sentence has 1000 words and most others only have about 100, do you think a large number of zeros in the input could cause the gradient to vanish?

seeiespi about 6 years: @MikeKhan, that is a legitimate concern. One way around it is to bucket your data into batches of uniform length, since the timesteps parameter need not be uniform across batches.

ARAT over 5 years: This function only works if the sequence does not contain frames with all elements being zero.