How to design deep convolutional neural networks?

Short answer: if there are design rules, we haven't discovered them yet.

Note that there are comparable questions in computing. For instance, there is only a handful of basic electronic logic units: the gates that drive your manufacturing technology. All computing devices use the same Boolean logic; some have specialised additions, such as photoelectric input or mechanical output.

How do you decide how to design your computing device?

Much the same applies here: the design depends on the purpose of the CNN. Input characteristics, accuracy, training speed, scoring speed, adaptation, computing resources, ... all of these affect the design. There is no generalized solution, even for a given problem (yet).

For instance, consider the ImageNet classification problem. Note the structural differences between the winners and contenders so far: AlexNet, GoogLeNet, ResNet, VGG, etc. If you change inputs (say, to MNIST), then these are overkill. If you change the paradigm, they may be useless. GoogLeNet may be a prince of image processing, but it's horrid for translating spoken French to written English. If you want to track a hockey puck in real time on your video screen, forget these implementations entirely.

So far, we're doing this the empirical way: a lot of people try a lot of different things to see what works. We get feelings for what will improve accuracy, or training time, or whatever factor we want to tune. We find what works well with total CPU time, or what we can do in parallel. We change algorithms to take advantage of vector math in lengths that are powers of 2. We change problems slightly and see how the learning adapts elsewhere. We change domains (say, image processing to written text), and start all over -- but with a vague feeling of what might tune a particular bottleneck, once we get down to considering certain types of layers.
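To make the "try a lot of different things" step a bit more concrete, here is a minimal sketch (not from the original answer) of how you might enumerate a few candidate conv+pool stacks and check their output size and receptive field before training anything. The function names, the candidate depths and kernel sizes, and the 28-pixel input are all hypothetical choices for illustration; the arithmetic is the standard output-size formula for convolution and pooling.

```python
from itertools import product


def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after one convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def summarize_stack(input_size, layers):
    """Walk a list of (kernel, stride, padding) tuples.

    Returns (output_size, receptive_field), or None if some layer's
    kernel is larger than its padded input.
    """
    size, receptive_field, jump = input_size, 1, 1
    for kernel, stride, padding in layers:
        if size + 2 * padding < kernel:
            return None
        size = conv_output_size(size, kernel, stride, padding)
        # Each layer widens the receptive field by (kernel - 1) input-space steps.
        receptive_field += (kernel - 1) * jump
        jump *= stride
    return size, receptive_field


def candidate_stacks(input_size, depths=(2, 3, 4), kernels=(3, 5)):
    """Enumerate hypothetical stacks of [padded conv, 2x2 pool] blocks."""
    results = []
    for depth, kernel in product(depths, kernels):
        layers = []
        for _ in range(depth):
            layers.append((kernel, 1, kernel // 2))  # conv that keeps the size
            layers.append((2, 2, 0))                 # 2x2 pooling, stride 2
        summary = summarize_stack(input_size, layers)
        if summary is not None:
            results.append((depth, kernel, summary[0], summary[1]))
    return results


# Assumed input: 28x28 images (MNIST-sized); change to match your data.
for depth, kernel, out_size, rf in candidate_stacks(28):
    print(f"blocks={depth} kernel={kernel} output={out_size} receptive_field={rf}")
```

This only prunes configurations that cannot work geometrically and shows how much of the input each output unit can see; deciding which of the survivors is actually best still takes training and measurement.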

Remember, CNNs really haven't been popular for that long, barely 6 years. For the most part, we're still trying to learn what the important questions might be. Welcome to the research team.

malreddysid

Updated on April 12, 2021

Comments

malreddysid about 3 years

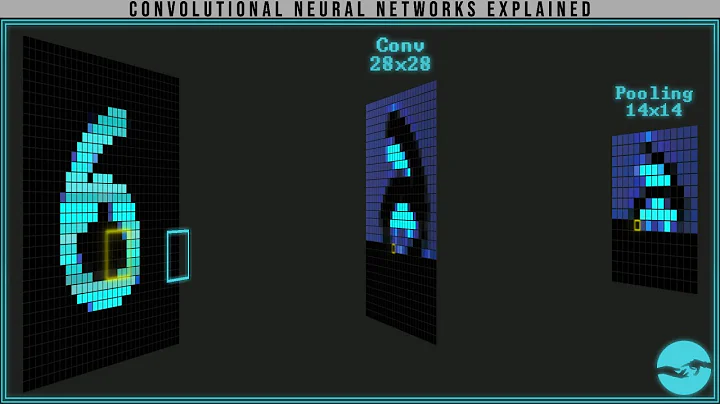

As I understand it, all CNNs are quite similar. They all have convolutional layers followed by pooling and ReLU layers. Some, like FlowNet and SegNet, have specialised layers. My question is how we should decide how many layers to use, and how to set the kernel size for each layer in the network. I have searched for an answer but couldn't find a concrete one. Is the network designed by trial and error, or are there some specific rules that I am not aware of? If you could clarify this, I would be very grateful.

Qazi almost 8 years

I also have the same question. Although you are right that an empirical approach is used, I still don't understand whether there is at least some design process for starting to build a model. It would be too random to just stack layers on top of one another and expect a reasonably accurate result. There should be some basic guidelines on how to start, after which empirical methods can be used to fine-tune the model.

Prune almost 8 years

@Qazi At the level you're asking the question, there are no such guidelines. It sounds as if you're asking for practical, applicable guidelines for "how do I make a model?" Until you classify the model according to its general characteristics, we can't even say that a CNN might be a good solution. For most modelling situations, a neural network is a waste of computing resources.

Prune almost 8 years

@Qazi You are correct that it's not practical to just start slapping layers together. Rather, you need to analyze your input texture, consider your desired modelling purpose and performance, determine what features you could derive from the input that may lead to the output you want, and then experiment with network topologies that embody those features. A relatively small change in the input texture often results in a large change in the model topology.

Luc about 6 years

Thank you for your complete answer. However, it is now almost 2 years later. Is there an update worth mentioning? I have the same question for RNNs and LSTMs as well.

Prune about 6 years

Yes, an update is absolutely worth mentioning! If you have something to add, please do. You prompted me to add a link I found around the turn of the year. Your question on RNN and LSTM is perfectly valid; please post as a separate question, linking to this one for reference.

C-3PO about 3 years

Thanks for the complete answer, it was very helpful. Does somebody know an alternative URL? The link mentioned was changed by Kaggle.