How to display graphs of loss and accuracy in PyTorch using matplotlib


What you need to do is: average the loss over all the batches of an epoch, append that average to a list after every epoch, and then plot the list. An implementation would look something like this:

    import matplotlib.pyplot as plt
    import numpy as np  # needed for np.linspace below

    def my_plot(epochs, loss):
        plt.plot(epochs, loss)
        plt.xlabel("epoch")
        plt.ylabel("loss")
        plt.show()

    def train(num_epochs, optimizer, criterion, model):
        loss_vals = []  # one averaged loss value per epoch
        for epoch in range(num_epochs):
            epoch_loss = []  # per-batch losses of the current epoch
            for i, (images, labels) in enumerate(trainloader):
                # rest of the code
                loss.backward()
                epoch_loss.append(loss.item())
                # rest of the code
            # rest of the code
            loss_vals.append(sum(epoch_loss) / len(epoch_loss))
            # rest of the code

        # plotting
        my_plot(np.linspace(1, num_epochs, num_epochs).astype(int), loss_vals)

    # example call with made-up values
    my_plot([1, 2, 3, 4, 5], [100, 90, 60, 30, 10])

You can do a similar calculation for accuracy.
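As a rough sketch of what that could look like for accuracy: count the correct predictions and the samples batch by batch, then append one percentage per epoch to a list. The helper names `count_correct` and `epoch_accuracy` and the toy tensors are made up for illustration; in a real training loop `outputs` would come from `model(images)`.

```python
import torch

def count_correct(outputs, labels):
    """Return (correct predictions, number of samples) for one batch."""
    predicted = outputs.argmax(dim=1)  # index of the highest score per sample
    return (predicted == labels).sum().item(), labels.size(0)

def epoch_accuracy(batches):
    """Accuracy in percent over an iterable of (outputs, labels) batches."""
    n_correct = 0
    n_samples = 0
    for outputs, labels in batches:
        correct, total = count_correct(outputs, labels)
        n_correct += correct
        n_samples += total
    return 100.0 * n_correct / n_samples

# Toy data standing in for one epoch with two batches (hypothetical values).
outputs_a = torch.tensor([[2.0, 0.1], [0.3, 1.5], [0.9, 0.2]])
labels_a = torch.tensor([0, 1, 1])
outputs_b = torch.tensor([[0.1, 3.0]])
labels_b = torch.tensor([1])

acc_vals = []  # one accuracy value per epoch, analogous to loss_vals
acc_vals.append(epoch_accuracy([(outputs_a, labels_a), (outputs_b, labels_b)]))
```

The resulting `acc_vals` list has one entry per epoch, so it can be plotted against the epoch numbers in the same way as the loss values.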


Author: Ka_

Updated on May 30, 2022

Comments

-

Ka_ 7 months

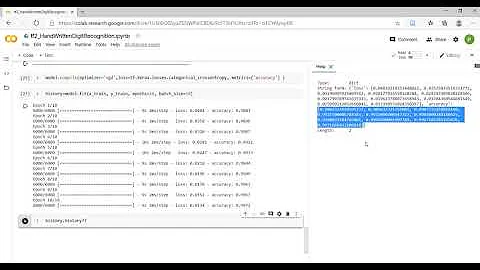

Ka_ (7 months): I am new to PyTorch, and I would like to know how to display graphs of loss and accuracy, and how exactly I should store these values, given that I am applying a CNN model for image classification on CIFAR10.

Here is my current implementation:

    def train(num_epochs,optimizer,criterion,model):
        for epoch in range(num_epochs):
            for i, (images, labels) in enumerate(trainloader):
                # origin shape: [4, 3, 32, 32] = 4, 3, 1024
                # input_layer: 3 input channels, 6 output channels, 5 kernel size
                images = images.to(device)
                labels = labels.to(device)

                # Forward pass
                outputs = model(images)
                loss = criterion(outputs, labels)

                # Backward and optimize
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()

                if (i+1) % 2000 == 0:
                    print (f'Epoch [{epoch+1}/{num_epochs}], Step [{i+1}/{n_total_steps}], Loss: {loss.item():.4f}')

        PATH = './cnn.pth'
        torch.save(model.state_dict(), PATH)

    def test ():
        with torch.no_grad():
            n_correct = 0
            n_samples = 0
            n_class_correct = [0 for i in range(10)]
            n_class_samples = [0 for i in range(10)]
            for images, labels in testloader:
                images = images.to(device)
                labels = labels.to(device)
                outputs = model(images)
                # max returns (value ,index)
                _, predicted = torch.max(outputs, 1)
                n_samples += labels.size(0)
                n_correct += (predicted == labels).sum().item()

                for i in range(batch_size):
                    label = labels[i]
                    pred = predicted[i]
                    if (label == pred):
                        n_class_correct[label] += 1
                    n_class_samples[label] += 1

            acc = 100.0 * n_correct / n_samples
            print(f'Accuracy of the network: {acc} %')

            for i in range(10):
                acc = 100.0 * n_class_correct[i] / n_class_samples[i]
                print(f'Accuracy of {classes[i]}: {acc} %')

            test_score = np.mean([100 * n_class_correct[i] / n_class_samples[i] for i in range(10)])
            print("the score test is : {0:.3f}%".format(test_score))
            return acc

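TheRajVJain (over 1 year): The current code computes an average of per-batch averages, which differs from the true per-sample mean when the batches are not all the same size (for example, a smaller final batch). Ideally you would do epoch_loss.append(loss.item() * images.shape[0]) and, at the end of the epoch, compute sum(epoch_loss) / len(training dataset).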
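To make the difference in that comment concrete, here is a small sketch in plain Python. It assumes the criterion returns the mean loss over each batch (the default `reduction='mean'` of losses such as `nn.CrossEntropyLoss`); the function names and the numbers are invented for illustration only.

```python
def mean_of_batch_means(batch_losses):
    """Average of per-batch mean losses (what sum(epoch_loss)/len(epoch_loss) does)."""
    return sum(batch_losses) / len(batch_losses)

def sample_weighted_mean(batch_losses, batch_sizes):
    """Per-sample mean: weight each batch mean by its batch size."""
    total = sum(loss * size for loss, size in zip(batch_losses, batch_sizes))
    return total / sum(batch_sizes)

# Hypothetical epoch: two full batches of 4 samples and a final batch of 2.
batch_losses = [1.0, 1.0, 4.0]  # stand-ins for loss.item() per batch
batch_sizes = [4, 4, 2]         # stand-ins for images.shape[0] per batch

naive = mean_of_batch_means(batch_losses)
weighted = sample_weighted_mean(batch_losses, batch_sizes)
print(naive, weighted)
```

Here the small final batch pulls the naive average up to 2.0, while the per-sample mean is 1.6. When every batch has the same size, the two values coincide.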
