How to do a bulk insert using Sequelize and Node.js

Solution 1

I used the cargo utility of the async library to insert up to 1000 rows at a time. The following code loads a CSV file into a database:

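var fs = require('fs'),
    async = require('async'),
    csv = require('csv');

// filename, model and doneLoadingCallback are assumed to be defined elsewhere
var input = fs.createReadStream(filename);

var parser = csv.parse({
    columns: true,
    relax: true
});

// The cargo worker receives up to 1000 queued rows per call
var inserter = async.cargo(function(tasks, inserterCallback) {
    model.bulkCreate(tasks).then(function() {
        inserterCallback();
    }).catch(inserterCallback); // pass insert errors on to the cargo
}, 1000);

parser.on('readable', function () {
    var line; // declared locally to avoid an implicit global
    while ((line = parser.read()) !== null) {
        inserter.push(line);
    }
});

parser.on('end', function () {
    // Property assignment works in async 2.x and earlier;
    // in async 3.x drain is a method: inserter.drain(function() { ... })
    inserter.drain = function() {
        doneLoadingCallback();
    };
});

input.pipe(parser);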

Solution 2

You can use Sequelize's built-in bulkCreate method to achieve this.

User.bulkCreate([
    { username: 'barfooz', isAdmin: true },
    { username: 'foo', isAdmin: true },
    { username: 'bar', isAdmin: false }
]).then(() => { // Notice: There are no arguments here, as of right now you'll have to...
    return User.findAll();
}).then(users => {
    console.log(users) // ... in order to get the array of user objects
})
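For a very large array, a single bulkCreate call can be slow, so one option is to split the array into chunks and insert them one after another. The following is a minimal sketch under that assumption, not part of the Sequelize API: `bulkCreateInChunks` and `chunkSize` are hypothetical names, and `model` is any object with a Sequelize-style `bulkCreate(rows)` method that returns a promise.

```javascript
// Sketch: insert a large array in fixed-size chunks, one bulkCreate at a time.
// bulkCreateInChunks and chunkSize are hypothetical names for this example.
async function bulkCreateInChunks(model, rows, chunkSize = 1000) {
  let inserted = 0;
  for (let start = 0; start < rows.length; start += chunkSize) {
    const chunk = rows.slice(start, start + chunkSize);
    // Wait for each chunk before sending the next, so only one
    // bulkCreate call is in flight at any time.
    await model.bulkCreate(chunk);
    inserted += chunk.length;
  }
  return inserted; // number of rows passed to bulkCreate
}
```

It could be called as `bulkCreateInChunks(User, rows, 1000)`, where `rows` is the full array. The best chunk size depends on row width and the database, so 1000 is only a starting point.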

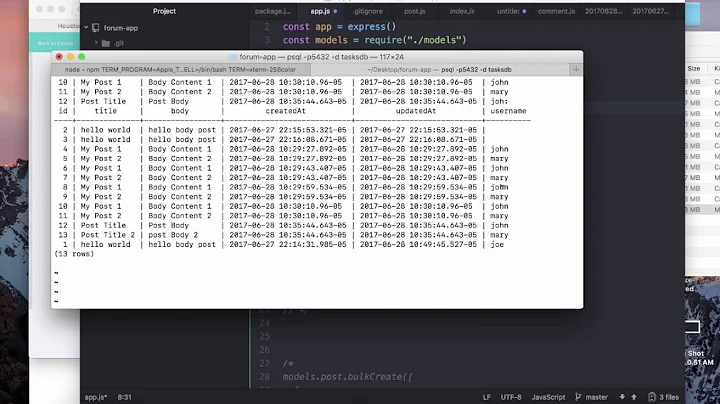

Sequelize | Bulk Create and Update

Solution 3

If you really want to use bulkInsert, then my previous answer is sufficient. However, you'll run out of memory if you have a lot of data. It really is best to use a built-in database method for this. The problem is that you're loading all the data into memory until the bulkCreate executes. If you have a million rows, you'll probably run out of memory before it even executes. Even if you queue it up using something like async.cargo, you'll still be waiting for the db to get back to you while the incoming data asynchronously consumes all your memory.

My solution was to ditch Sequelize for the loading of data, at least until they implement streaming or something similar (see their GitHub issue #2454). I ended up creating db-streamer, but it only has pg support for now. You'll want to look at streamsql for MySQL.
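If dropping Sequelize is not an option, the memory growth described above can be limited by not reading more rows until the previous batch has been written. The sketch below illustrates that idea only; it is not how db-streamer or streamsql work. `insertFromStream` and `batchSize` are hypothetical names, `rowStream` is assumed to be any async-iterable source of row objects (Node.js Readable streams in object mode qualify), and `model` is assumed to expose a promise-returning `bulkCreate(rows)`.

```javascript
// Sketch: consume a row stream in batches with backpressure.
// insertFromStream and batchSize are hypothetical names for this example.
async function insertFromStream(rowStream, model, batchSize = 1000) {
  let batch = [];
  let total = 0;
  for await (const row of rowStream) {
    batch.push(row);
    if (batch.length >= batchSize) {
      // Awaiting here stops the loop from pulling more rows
      // until the database has answered.
      await model.bulkCreate(batch);
      total += batch.length;
      batch = [];
    }
  }
  // Flush whatever is left after the stream ends.
  if (batch.length > 0) {
    await model.bulkCreate(batch);
    total += batch.length;
  }
  return total; // number of rows passed to bulkCreate
}
```

With this shape, roughly one batch plus the stream's own internal buffer is held in memory at a time, at the cost of the reader idling while each insert runs.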

Solution 4

The following question has the same answer that you need here: NodeJS, promises, streams - processing large CSV files

- use a stream to read the data in and to parse it;

- use the combination of methods stream.read and sequence from spex to read the stream and execute the queries one by one.


Uma Maheshwaraa

Updated on July 30, 2020

Comments

Uma Maheshwaraa almost 4 years

I am using Node.js + Sequelize to insert 280K rows of data from JSON. The JSON is an array of 280K items. Is there a way to do the bulk insert in chunks? I am seeing that it takes a lot of time to update the data. When I cut the data down to 40K rows, it works quickly. Am I taking the right approach? Please advise. I am using PostgreSQL as the backend.

PNs.bulkCreate(JSON_Small)
    .catch(function(err) { console.log('Error ' + err); })
    .finally(function() { console.log('FINISHED' + ' \n +++++++ \n'); });

vitaly-t over 8 years: The same question here, with an answer: stackoverflow.com/questions/33129677/…
