How to download a file from an HTTP URL?


Solution 1

This is what I did:

wget -O file.tar "http://www.ncbi.nlm.nih.gov/geo/download/?acc=GSE46130&format=file"
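The quotes around the URL matter here. Without them the shell treats `&` as "run in the background" and cuts the URL off before `&format=file`. A minimal sketch of the effect, with no network access needed; `show_args` is a hypothetical helper that just prints what it receives, and `eval` is used only to simulate typing the URL unquoted:

```shell
# Hypothetical helper (not part of wget): prints each argument on its own line.
show_args() {
    printf '%s\n' "$@"
}

url='http://www.ncbi.nlm.nih.gov/geo/download/?acc=GSE46130&format=file'

# Quoted: the whole URL, including "&format=file", arrives as one argument.
quoted=$(show_args "$url")

# Unquoted (simulated with eval): the shell splits the line at "&",
# runs show_args in the background with a truncated URL, and then
# treats "format=file" as a separate variable assignment.
unquoted=$(eval "show_args $url" 2>/dev/null; wait)

echo "quoted:   $quoted"
echo "unquoted: $unquoted"
```

The second line printed should stop at `acc=GSE46130`, which is the URL wget would actually request if the quotes were left out.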

Solution 2

Use the -O option with wget to specify where to save the downloaded file. Quote the URL so the shell does not interpret the ? and & characters. For example:

wget -O /path/to/file "http://www.ncbi.nlm.nih.gov/geo/download/?acc=GSE46130&format=file"

Solution 3

# -r  : recursive
# -nH : disable generation of host-prefixed directories
# -nd : save all files to the current directory
# -np : never ascend to the parent directory when retrieving recursively
# -R "*.html,999999-99999-1990.gz*" : don't download files matching these patterns
#      (quoted so the shell passes the patterns to wget unexpanded)

wget -r -nH -nd -np -R "*.html,999999-99999-1990.gz*" http://www1.ncdc.noaa.gov/pub/data/noaa/1990/
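To get a feel for which files the reject list would skip, the two patterns can be approximated with a shell `case` statement. This is only a sketch: `is_rejected` is a hypothetical helper, the file names are made up for illustration, and shell globbing is assumed to behave closely enough to wget's wildcard matching for these simple patterns.

```shell
# Hypothetical helper approximating the -R list with shell glob patterns.
# Returns 0 (true) if the file name matches one of the reject patterns.
is_rejected() {
    case "$1" in
        *.html|999999-99999-1990.gz*) return 0 ;;
        *) return 1 ;;
    esac
}

# Illustrative file names only; they are not taken from the real listing.
for name in index.html 999999-99999-1990.gz 010010-99999-1990.gz; do
    if is_rejected "$name"; then
        echo "skip $name"
    else
        echo "keep $name"
    fi
done
```

Only the last name falls through to "keep"; the other two match a reject pattern.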

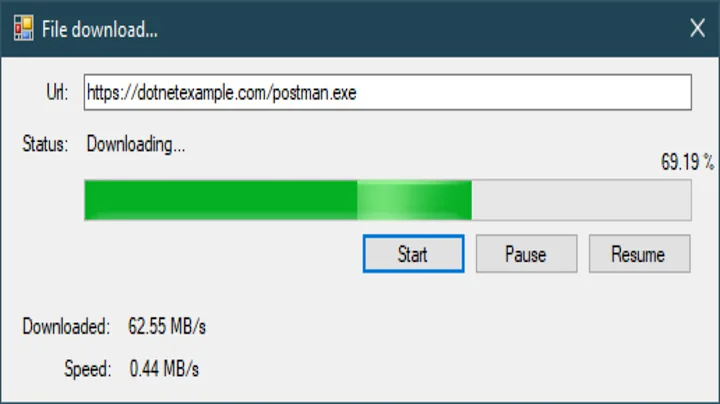

Related videos on Youtube

Author by

olala

Learning programming, computation, data analysis, so much to learn but I'm enjoying it!

Updated on July 09, 2022

Comments

-

olala almost 2 years

I know how to use wget to download from ftp but I couldn't use wget to download from the following link:

http://www.ncbi.nlm.nih.gov/geo/download/?acc=GSE46130&format=file

If you copy and paste it in the browser, it'll start to download. But I want to download it to our server directly so I don't need to move it from my desktop to the server. How do I do it?

Thanks!

-

olala over 10 years

I tried and I only got a 6.4K file, but the original file is over 50M. Why?


-

FedeCz over 10 years

Glad it was helpful. The double quotes are required to escape special characters like ? and &.

-

Noumenon almost 8 years

This code could use a little introduction to make it an answer. Like "The -nd flag will let you save the file without a prompt for the filename. Here's a script that will even handle multiple files and directories." With no intro I was wondering "Is this really an answer? The URL doesn't match and there's no problem with .gz* files in the question". But it is a good answer IMO!