How to extract phrases from corpus using gensim

I found the solution to the problem. There were two parameters I hadn't taken care of, which should be passed to the Phrases() model:

min_count: ignore all words and bigrams with a total collected count lower than this. By default its value is 5.

threshold: a threshold for forming the phrases (higher means fewer phrases). A phrase of words a and b is accepted if (cnt(a, b) - min_count) * N / (cnt(a) * cnt(b)) > threshold, where N is the total vocabulary size. By default its value is 10.0.
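To make the two rules above concrete, here is a minimal pure-Python sketch of the acceptance test as quoted from the docs. It is not gensim's code: the function names phrase_score and is_phrase are hypothetical, the min_count check is applied to the pair and to both words as the description suggests, and the counts in the usage lines are made-up numbers.

```python
def phrase_score(cnt_a: int, cnt_b: int, cnt_ab: int, min_count: int, n_vocab: int) -> float:
    """(cnt(a, b) - min_count) * N / (cnt(a) * cnt(b)), as quoted above."""
    return (cnt_ab - min_count) * n_vocab / (cnt_a * cnt_b)


def is_phrase(cnt_a: int, cnt_b: int, cnt_ab: int, n_vocab: int,
              min_count: int = 5, threshold: float = 10.0) -> bool:
    """Hypothetical helper: should the pair "a b" become the phrase a_b?"""
    # min_count: ignore words and bigrams seen fewer than min_count times.
    if min(cnt_a, cnt_b, cnt_ab) < min_count:
        return False
    # threshold: the score must be strictly greater than it.
    return phrase_score(cnt_a, cnt_b, cnt_ab, min_count, n_vocab) > threshold


# Made-up counts: "a" seen 10 times, "b" 8 times, "a b" together 7 times,
# in a corpus with 1000 distinct words (defaults min_count=5, threshold=10.0).
print(phrase_score(10, 8, 7, min_count=5, n_vocab=1000))  # 25.0
print(is_phrase(10, 8, 7, n_vocab=1000))                  # True
print(is_phrase(10, 8, 4, n_vocab=1000))                  # False: pair count below min_count
```

Raising threshold or min_count makes the test stricter, which is why higher values mean fewer phrases.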

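With my two-sentence training data above, every word and bigram occurred only once (below the default min_count of 5), so no bigram could pass the threshold. I therefore changed the training data and added those two parameters.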
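A quick way to see why the defaults reject everything on the original two-sentence data is to count the words and adjacent pairs yourself. This sketch uses only the standard library; taking N to be the number of distinct words is an assumption (the docs just say "total vocabulary size", and gensim's own count may differ).

```python
from collections import Counter

documents = ["the mayor of new york was there",
             "machine learning can be useful sometimes"]
sentences = [doc.split(" ") for doc in documents]

unigrams = Counter(w for s in sentences for w in s)
bigrams = Counter(pair for s in sentences for pair in zip(s, s[1:]))

MIN_COUNT, THRESHOLD = 5, 10.0  # the defaults quoted above
N = len(unigrams)               # assumption: N = number of distinct words

# Every word and every adjacent pair occurs exactly once...
print(max(unigrams.values()), max(bigrams.values()))  # 1 1

# ...so (cnt(a, b) - min_count) is negative and no score can beat the threshold.
score = (bigrams[("new", "york")] - MIN_COUNT) * N / (unigrams["new"] * unigrams["york"])
print(score, score > THRESHOLD)  # -52.0 False
```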

My new code

from gensim.models import Phrases

documents = ["the mayor of new york was there", "machine learning can be useful sometimes","new york mayor was present"]

sentence_stream = [doc.split(" ") for doc in documents]

bigram = Phrases(sentence_stream, min_count=1, threshold=2)

sent = [u'the', u'mayor', u'of', u'new', u'york', u'was', u'there']

print(bigram[sent])

Output

[u'the', u'mayor', u'of', u'new_york', u'was', u'there']
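For intuition about what bigram[sent] does once a pair has been learned, here is a simplified stand-in in plain Python: it walks the sentence left to right and joins any adjacent pair found in a set of accepted phrases. The function name apply_phrases and the hard-coded accepted set are hypothetical; gensim's own implementation differs in detail.

```python
def apply_phrases(tokens, accepted, delimiter="_"):
    """Greedily join adjacent tokens whose pair is in `accepted`."""
    out, i = [], 0
    while i < len(tokens):
        pair = tuple(tokens[i:i + 2])
        if len(pair) == 2 and pair in accepted:
            out.append(delimiter.join(pair))
            i += 2  # both tokens are consumed by the phrase
        else:
            out.append(tokens[i])
            i += 1
    return out


accepted = {("new", "york")}  # the pair learned in the answer above
sent = ['the', 'mayor', 'of', 'new', 'york', 'was', 'there']
print(apply_phrases(sent, accepted))
# ['the', 'mayor', 'of', 'new_york', 'was', 'there']
```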

Gensim is really awesome :)

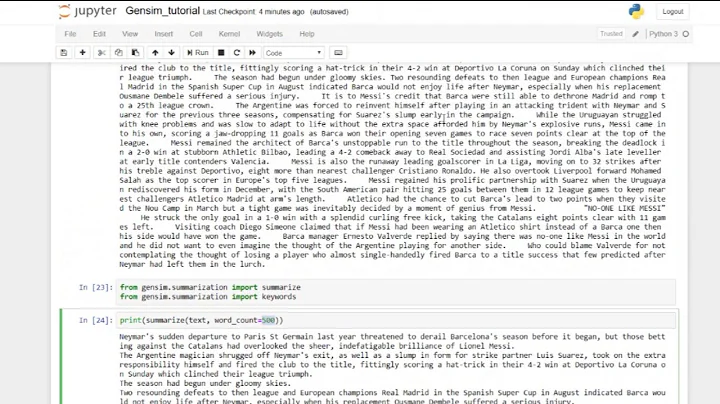

Related videos on Youtube

Prashant Puri

Updated on July 09, 2022

Comments

Prashant Puri almost 2 years

To preprocess the corpus, I was planning to extract common phrases from it. For this I tried the Phrases model in gensim with the code below, but it's not giving me the desired output.

My code

from gensim.models import Phrases

documents = ["the mayor of new york was there", "machine learning can be useful sometimes"]

sentence_stream = [doc.split(" ") for doc in documents]

bigram = Phrases(sentence_stream)

sent = [u'the', u'mayor', u'of', u'new', u'york', u'was', u'there']

print(bigram[sent])

Output

[u'the', u'mayor', u'of', u'new', u'york', u'was', u'there']

But it should come out as

[u'the', u'mayor', u'of', u'new_york', u'was', u'there']

When I print the vocab of the training data I can see the bigram, but it isn't applied to the test data. Where am I going wrong?

print(bigram.vocab)

defaultdict(<type 'int'>, {'useful': 1, 'was_there': 1, 'learning_can': 1, 'learning': 1, 'of_new': 1, 'can_be': 1, 'mayor': 1, 'there': 1, 'machine': 1, 'new': 1, 'was': 1, 'useful_sometimes': 1, 'be': 1, 'mayor_of': 1, 'york_was': 1, 'york': 1, 'machine_learning': 1, 'the_mayor': 1, 'new_york': 1, 'of': 1, 'sometimes': 1, 'can': 1, 'be_useful': 1, 'the': 1})

Admin over 6 years: Thank you for the valuable answer. But in this example the bigram model does not catch "machine", "learning" as "machine_learning". Do you know why that happens?

ethanenglish over 6 years: If you add "machine learning" to the training sentences twice before training, then put it in your sent variable, you'll get "machine_learning". If the model cannot see a frequency for that pair, it's not going to know to join it.
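ethanenglish's point can be checked with the same formula. This sketch recomputes the score for machine_learning on the answer's three documents with min_count=1 and threshold=2, then again after appending two extra "machine learning" sentences. The extra sentences and the helper name score are hypothetical, and N = number of distinct words is an assumption.

```python
from collections import Counter

MIN_COUNT, THRESHOLD = 1, 2  # values used in the answer


def score(sentences, a, b):
    """(cnt(a, b) - min_count) * N / (cnt(a) * cnt(b)), N = distinct words (assumed)."""
    unigrams = Counter(w for s in sentences for w in s)
    bigrams = Counter(p for s in sentences for p in zip(s, s[1:]))
    return (bigrams[(a, b)] - MIN_COUNT) * len(unigrams) / (unigrams[a] * unigrams[b])


documents = ["the mayor of new york was there",
             "machine learning can be useful sometimes",
             "new york mayor was present"]
sentences = [doc.split(" ") for doc in documents]

before = score(sentences, "machine", "learning")
after = score(sentences + [["machine", "learning"]] * 2, "machine", "learning")

print(before, before > THRESHOLD)  # 0.0 False: a pair seen once scores 0 when min_count=1
print(after, after > THRESHOLD)    # about 3.11, True
```

With min_count=1, any pair seen only once gets (1 - 1) = 0 in the numerator, so it can never beat a positive threshold.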

Baktaawar about 6 years: @Prashant Does phrase modeling in gensim work on individual docs, or do we need to pass in the whole corpus? In your case each doc is just one sentence, but what if I have multi-sentence docs? Then I need to convert each doc to a list of sentences (each a list of token strings) and pass the combined list for all docs to phrase modeling, or can individual docs be sent too? I feel it has to be the whole corpus, since that's when it counts how often a phrase occurs across the corpus. <contd>

Baktaawar about 6 years: In that case it becomes difficult to run phrase modeling when there are thousands of docs.

Prashant Puri about 6 years: @Baktaawar Phrase modeling works on both individual docs and the whole corpus, but the whole corpus requires more computation.

Baktaawar about 6 years: No, what I meant was: to train a phrase model, one would train it on the whole corpus (all documents), right? You can run it on individual docs, but then it won't find the right phrases, because to find them it needs to count the frequency of co-occurring words and of individual words across the corpus. If you train on just one doc, two words will rarely co-occur often enough.

Prashant Puri about 6 years: @Baktaawar The phrase model should be trained on the whole corpus.
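Following the thread's conclusion, one way to handle multi-sentence documents is to flatten the whole corpus into one stream of tokenized sentences and train on that. The sketch below uses hypothetical documents, a hypothetical helper iter_sentences, and a deliberately naive regex sentence splitter. It builds the stream with a generator, so documents are processed one at a time, and shows that a pair can be frequent across the corpus while appearing only once per document.

```python
import re
from collections import Counter
from typing import Iterable, Iterator, List

# Hypothetical multi-sentence documents, for illustration only.
docs = [
    "The mayor of New York was there. Machine learning can be useful.",
    "New York mayor was present. Machine learning helps sometimes.",
]


def iter_sentences(documents: Iterable[str]) -> Iterator[List[str]]:
    """Yield one lowercase token list per sentence, across all documents."""
    for doc in documents:
        # Naive split on ., ! and ?; a real pipeline would use a proper
        # sentence tokenizer.
        for sentence in re.split(r"[.!?]+", doc):
            tokens = sentence.lower().split()
            if tokens:
                yield tokens


def bigram_counts(sentences: Iterable[List[str]]) -> Counter:
    """Count adjacent word pairs within each sentence."""
    return Counter(pair for s in sentences for pair in zip(s, s[1:]))


corpus = bigram_counts(iter_sentences(docs))
per_doc = [bigram_counts(iter_sentences([d]))[("new", "york")] for d in docs]

print(corpus[("new", "york")])  # 2 across the corpus
print(per_doc)                  # [1, 1] within each single document
```

The output of iter_sentences has the same shape as sentence_stream in the answer (a sequence of token lists); wrap it in list(...) if you need to iterate over it more than once.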

Aviad Rozenhek over 5 years: Do you know how to extract the learned bigrams? For instance, "new york" was learned as the bigram new_york. How do I extract ALL the learned phrases?

keramat almost 5 years: @Aviad Rozenhek use (after import re): [x for x in bigram[sent] if re.findall(r'\w_\w', x)]
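The regex one-liner above only finds phrases that happen to occur in the single sentence sent. To list every phrase the model would accept across the training data, one option is to score every adjacent pair in the corpus. This plain-Python sketch does that for the answer's three documents with min_count=1 and threshold=2; N = number of distinct words is an assumption, so exact scores may not match gensim's. Recent gensim versions also offer an export_phrases method on the model, but its signature has changed between releases, so check the docs for your version.

```python
from collections import Counter

documents = ["the mayor of new york was there",
             "machine learning can be useful sometimes",
             "new york mayor was present"]
sentences = [doc.split(" ") for doc in documents]

MIN_COUNT, THRESHOLD = 1, 2  # the values used in the answer

unigrams = Counter(w for s in sentences for w in s)
bigrams = Counter(pair for s in sentences for pair in zip(s, s[1:]))
N = len(unigrams)  # assumption: N = number of distinct words


def score(a, b):
    """(cnt(a, b) - min_count) * N / (cnt(a) * cnt(b))"""
    return (bigrams[(a, b)] - MIN_COUNT) * N / (unigrams[a] * unigrams[b])


# Every adjacent pair in the corpus that passes both rules, with its score.
learned = {
    f"{a}_{b}": score(a, b)
    for (a, b), count in bigrams.items()
    if count >= MIN_COUNT and score(a, b) > THRESHOLD
}
print(learned)  # {'new_york': 3.5}
```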

keramat almost 5 years: @Prashant Puri Based on the formula you mentioned, with tokens = sentence_stream[0] + sentence_stream[1] + sentence_stream[2], the expression (2-1)*len(set(tokens))/(tokens.count('new')*tokens.count('york')) equals 3.5. Setting threshold to 7 works!
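keramat's arithmetic can be reproduced directly. This sketch builds tokens exactly as in the comment and counts cnt(new, york) from the sentences rather than hard-coding the 2; N = len(set(tokens)) follows the comment's choice, which may differ from how a given gensim version sizes its vocabulary.

```python
documents = ["the mayor of new york was there",
             "machine learning can be useful sometimes",
             "new york mayor was present"]
sentence_stream = [doc.split(" ") for doc in documents]

tokens = sentence_stream[0] + sentence_stream[1] + sentence_stream[2]
min_count = 1

# cnt(new, york): occurrences of the adjacent pair inside each sentence.
cnt_new_york = sum(s[i:i + 2] == ["new", "york"]
                   for s in sentence_stream for i in range(len(s) - 1))

n_words = len(set(tokens))  # N as used in the comment
score = (cnt_new_york - min_count) * n_words / (tokens.count("new") * tokens.count("york"))

print(cnt_new_york, n_words, score)  # 2 14 3.5
print(score > 2)                     # True: passes the answer's threshold=2
```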

Jinhua Wang over 3 years: How long did it take you to train this model?