How to fetch vectors for a word list with Word2Vec?

Solution 1

Direct access via model[word] was deprecated and then removed in Gensim 4.0.0 in order to separate the training from the embedding. Use model.wv[word] instead.

Using Gensim in Python, after the vocabulary is built and the model is trained, the word counts and sampling information are available in model.wv.vocab, where model is the variable name of your Word2Vec object. (In Gensim 4.0.0 and later the vocab attribute is gone; iterate over model.wv.key_to_index instead.)

Thus, to create a dictionary object, you may:

my_dict = {}

for key in model.wv.vocab:

    my_dict[key] = model.wv[key]

    # Or my_dict[key] = model.wv.get_vector(key)

    # Or my_dict[key] = model.wv.word_vec(key, use_norm=False)

Now that you have your dictionary, you can write it to a file by whatever means you like. For example, you can use the pickle library. Alternatively, if you are using Jupyter Notebook, it has a convenient 'magic command': %store my_dict > filename.txt. Your filename.txt will look like:

{'one': array([-0.06590105, 0.01573388, 0.00682817, 0.53970253, -0.20303348,

-0.24792041, 0.08682659, -0.45504045, 0.89248925, 0.0655603 ,

......

-0.8175681 , 0.27659689, 0.22305458, 0.39095637, 0.43375066,

0.36215973, 0.4040089 , -0.72396156, 0.3385369 , -0.600869 ],

dtype=float32),

'two': array([ 0.04694849, 0.13303463, -0.12208422, 0.02010536, 0.05969441,

-0.04734801, -0.08465996, 0.10344813, 0.03990637, 0.07126121,

......

0.31673026, 0.22282903, -0.18084198, -0.07555179, 0.22873943,

-0.72985399, -0.05103955, -0.10911274, -0.27275378, 0.01439812],

dtype=float32),

'three': array([-0.21048863, 0.4945509 , -0.15050395, -0.29089224, -0.29454648,

0.3420335 , -0.3419629 , 0.87303966, 0.21656844, -0.07530259,

......

-0.80034876, 0.02006451, 0.5299498 , -0.6286509 , -0.6182588 ,

-1.0569025 , 0.4557548 , 0.4697938 , 0.8928275 , -0.7877308 ],

dtype=float32),

'four': ......

}

You may also wish to look into the native save / load methods of Gensim's word2vec.
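Since the goal is a plain text file that another program (Java, in the asker's case) can parse, one option is a line-oriented layout like the word2vec text format: a header line with the vocabulary size and the vector length, then one word per line followed by its components. The sketch below is a minimal, library-free illustration under stated assumptions: save_vectors_txt and load_vectors_txt are hypothetical helper names, words are assumed to contain no spaces, and the small dictionary stands in for the my_dict built above.

```python
import os
import tempfile


def save_vectors_txt(word_vectors, path):
    """Write {word: sequence of floats} as 'word v1 v2 ...' lines.

    The first line holds '<number of words> <vector length>'.
    """
    words = list(word_vectors)
    dim = len(word_vectors[words[0]]) if words else 0
    with open(path, "w", encoding="utf-8") as f:
        f.write(f"{len(words)} {dim}\n")
        for word in words:
            # float() also accepts NumPy scalars such as float32.
            values = " ".join(repr(float(x)) for x in word_vectors[word])
            f.write(f"{word} {values}\n")


def load_vectors_txt(path):
    """Read a file written by save_vectors_txt back into a dict of lists."""
    vectors = {}
    with open(path, encoding="utf-8") as f:
        count, dim = (int(n) for n in f.readline().split())
        for line in f:
            parts = line.rstrip("\n").split(" ")
            word, values = parts[0], [float(x) for x in parts[1:]]
            if len(values) != dim:
                raise ValueError(f"expected {dim} values for {word!r}")
            vectors[word] = values
    if len(vectors) != count:
        raise ValueError("header word count does not match file contents")
    return vectors


# Stand-in for my_dict; real vectors would be NumPy arrays from model.wv.
sample = {"one": [0.5, -0.25, 1.0], "two": [0.125, 0.0, -2.0]}
path = os.path.join(tempfile.mkdtemp(), "vectors.txt")
save_vectors_txt(sample, path)
restored = load_vectors_txt(path)
```

If you would rather not write this by hand, Gensim's model.wv.save_word2vec_format(path, binary=False) produces a text file in a similar layout.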

Solution 2

The Gensim tutorial explains it very clearly.

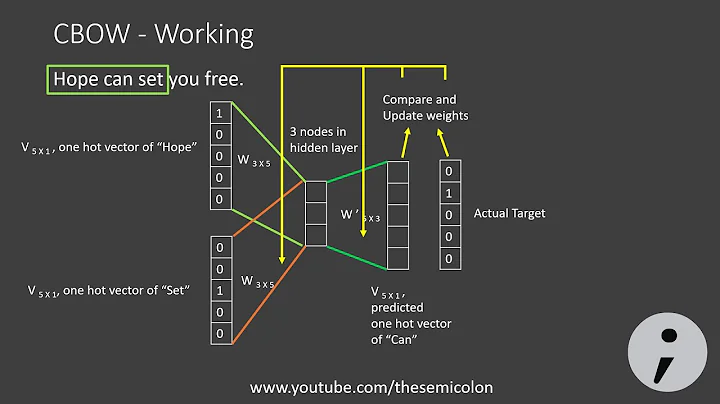

First, create a word2vec model, either by training it on text, e.g.

model = Word2Vec(sentences, size=100, window=5, min_count=5, workers=4)

(in Gensim 4.0.0 and later, size is named vector_size), or by loading a pre-trained model (you can find them here, for example).

Then iterate over all your words and check for their vectors in the model:

for word in words:

    vector = model.wv[word]

Having that, just write each word and its vector in whatever format you want.
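Note that model.wv[word] raises a KeyError for words that are not in the trained vocabulary (for example, words dropped by min_count), so a word list usually needs a membership check first. A minimal sketch, assuming only that the lookup object supports the in operator and indexing, as model.wv does; here a plain dict stands in for it, and fetch_vectors is a hypothetical helper name:

```python
def fetch_vectors(lookup, words):
    """Split words into those with a vector and those without one."""
    found, missing = {}, []
    for word in words:
        if word in lookup:
            found[word] = lookup[word]
        else:
            missing.append(word)
    return found, missing


# Stand-in for model.wv; with Gensim you would pass model.wv itself.
toy_wv = {"here": [0.1, 0.2], "is": [0.3, 0.4], "one": [0.5, 0.6]}
found, missing = fetch_vectors(toy_wv, ["here", "one", "unseen"])
print(sorted(found), missing)
```

Returning the missing words separately lets you decide later whether to skip them, log them, or substitute a default vector.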

Solution 3

If you are willing to use Python with the gensim package, then, building upon this answer and the Gensim Word2Vec documentation, you could do something like this:

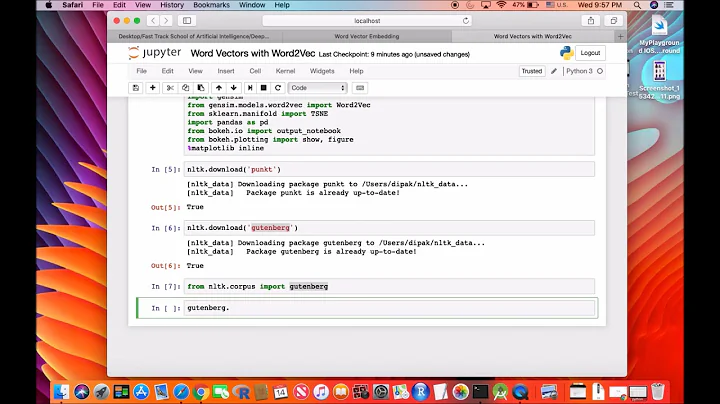

from gensim.models import Word2Vec

# Take some sample sentences

tokenized_sentences = [["here","is","one"],["and","here","is","another"]]

# Initialise model, for more information, please check the Gensim Word2vec documentation

model = Word2Vec(tokenized_sentences, size=100, window=2, min_count=0)

# Get the list of words in the vocabulary

words = list(model.wv.vocab.keys())

# Make a dictionary

we_dict = {word: model.wv[word] for word in words}

Solution 4

You can directly get the vectors through

model = Word2Vec(sentences, size=100, window=5, min_count=5, workers=4)

model.wv.vectors

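and the words through

model.wv.vocab.keys()

Note that the keys are not guaranteed to follow the row order of model.wv.vectors. The list model.wv.index2word (index_to_key in Gensim 4.0.0 and later) gives the words in row order.

Hope it helps!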

jonbon

Updated on February 22, 2022

Comments

- jonbon (about 2 years): I want to create a text file that is essentially a dictionary, with each word being paired with its vector representation through word2vec. I'm assuming the process would be to first train word2vec and then look up each word from my list and find its representation (and then save it in a new text file)?

I'm new to word2vec and I don't know how to go about doing this. I've read from several of the main sites, and several of the questions on Stack, and haven't found a good tutorial yet.

- Naman (almost 9 years): It's quite easy. I have done that in the past. Do you want to use any specific language? You can directly use the author's code (in C++) to train and extract the vectors. It's a simple 600-700 lines of optimized code. I might be able to help with the exact arguments if you require it.

- jonbon (almost 9 years): I would prefer Java, but all I really need to do is make a dictionary with any language and then load that text file into my Java program, so any language would probably work.

- Naman (almost 9 years): code.google.com/p/word2vec is the original author's code. It's very simple to train. The only thing is that it outputs the vectors into a binary file. You can easily convert it to a text file.

- patti_jane (almost 8 years): @Naman I'm trying to work with word vector output and, as you said, some of the words are just represented as numbers. I am working on the part where they assigned binary codes to words, but still couldn't decipher it fully. Any suggestion would be a great help!

- Naman (almost 8 years): @patti_jane Sure, you can look into radimrehurek.com/gensim/models/word2vec.html if you are comfortable using Python and gensim. It gives you a nice wrapper and some basic functions. If you want pure Python code, I can give you that once I am on my personal PC.

- patti_jane (almost 8 years): @Naman Thank you! I already have several word vector outputs that I trained using the original code, so if possible a method to reconstruct words from binary would be better really. Thanks again!

- Naman (almost 8 years): @patti_jane By reconstruct words, I guess you mean that you want to load a mapping from words to vectors, right? model = Word2Vec.load_word2vec_format('/tmp/vectors.bin', binary=True) should do the job. It will load the binaries and you can get the word vectors. Let me know if this helps.

- patti_jane (almost 8 years): @Naman Exactly! So do I have to use gensim, or is there any other way without using it?

- Mitali Cyrus (about 4 years): Hi, can you add what exactly words are? Whether it is vocab for model.wv.vocab or the words from your corpus.

- Mitali Cyrus (about 4 years): Using this method, the vectors do not correspond to the words obtained by taking the keys. That is, the order is not the same, not even when the keys are sorted.

- Mitali Cyrus (about 4 years): After attempting a few things, I found that model.wv[model.wv.vocab.keys()] gives the vectors in the order of the keys.

- TrickOrTreat (over 3 years): It should be list(model.wv.vocab.keys())

- E.K. (over 3 years): What is the difference between model.wv.get_vector() and model.wv.word_vec()?

- spectre (about 2 years): Your method does not preserve the order of the words. The resulting dict contains the order as: and another here is one. Is there a way to preserve the order of the sentence?

- Mitali Cyrus (about 2 years): @spectre - Python dictionaries do not retain order, so you might have to use an ordered dictionary for that. So you can import collections and define we_dict = collections.OrderedDict(). Just remember to use the loop without dictionary comprehension to save the results. Hope that helps.

- Admin (about 2 years): As it’s currently written, your answer is unclear. Please edit to add additional details that will help others understand how this addresses the question asked. You can find more information on how to write good answers in the help center.

- Aravind R (about 2 years): Hi, after getting the vector, it's not fitting to the model. Can you please help me with that? I've been stumbling since morning.