How to fix broken "/etc/sudoers" ownership on EC2?

Solution 1

While going through the Amazon documentation was helpful (as per @Ouroborus's answer), I finally figured out how to repair this mess that I got myself into.

Let's see if I can recall all the steps...

Prepare a New Instance

The easiest way to match your existing instance as closely as possible is to go under My AMIs and select the same AMI used by your problem instance.

Since this instance is only for recovery purposes, pick the free-tier eligible instance type.

This step is very important! Make sure the subnet of this recovery instance matches the subnet of your problem instance (otherwise, you won't be able to mount the problem EBS volume on your recovery instance! I found that out the hard way...). An EBS volume can only be attached to an instance in the same Availability Zone, and using the same subnet guarantees that.

You can click "Next: Add Storage", leave that page as is, and click "Next: Tag Instance".

In the Value field, type something like "Recovery" (any name will do; in my case it's just to mark the purpose of this instance).

There should be one last step / popup where it prompts you to create the inbound / outbound security group. Make sure to pick the same one you already have set up for your problem instance. That way, you can reuse the same SSH key file to log in to this instance (via PuTTY or whichever program you prefer).

Once that Recovery instance is created...

- Make sure to keep track of your EBS volume names (as you will need to mount / unmount your problem volume between this Temporary instance and back to your original instance).

- Mark down which path your problem instance uses to access the volume (ex: /dev/xvda).

- Ok, now Stop (not Terminate!!!) your problem instance.

- You may need to refresh the browser to confirm it is stopped (might take a few seconds/mins).

Now navigate to the EBS Volumes section:

Detach (unmount) the volume that is currently attached to the problem instance (you will see your instance's status marked as stopped in one of the far right columns).

- Refresh to confirm the volume is "available".

Attach (mount) the volume to your new Recovery instance (if you can't see your recovery instance in the list, you probably missed the "subnet" step I mentioned above, and you will need to redo your Recovery instance all over again to match that subnet setting).

Refresh, confirm it's now "in-use" in your recovery instance.
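If you prefer the AWS CLI over the console, the stop / detach / attach steps above could be sketched roughly like this. It is only a sketch under assumptions: the instance and volume IDs are made-up placeholders, `run` and `move_volume` are hypothetical helper names, and by default it only prints the commands (DRY_RUN=1) instead of running them. The /dev/sdf device name is also an assumption; on many Linux AMIs a volume attached as /dev/sdf shows up inside the instance as /dev/xvdf.

```shell
#!/bin/sh
# DRY_RUN=1 (the default here) only prints each command; set DRY_RUN=0 to execute.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Detach a volume, wait until it is "available", attach it to the target
# instance under the given device name, then wait until it is "in-use".
move_volume() {
    volume_id=$1
    target_instance=$2
    device=$3
    run aws ec2 detach-volume --volume-id "$volume_id"
    run aws ec2 wait volume-available --volume-ids "$volume_id"
    run aws ec2 attach-volume --volume-id "$volume_id" \
        --instance-id "$target_instance" --device "$device"
    run aws ec2 wait volume-in-use --volume-ids "$volume_id"
}

# Hypothetical placeholder IDs; substitute your own.
PROBLEM_INSTANCE=i-0123456789abcdef0
RECOVERY_INSTANCE=i-0fedcba9876543210
PROBLEM_VOLUME=vol-0123456789abcdef0

# Stop (not terminate) the problem instance, then move its volume over.
run aws ec2 stop-instances --instance-ids "$PROBLEM_INSTANCE"
run aws ec2 wait instance-stopped --instance-ids "$PROBLEM_INSTANCE"
move_volume "$PROBLEM_VOLUME" "$RECOVERY_INSTANCE" /dev/sdf
```

Once the file is fixed and the volume is unmounted inside the Recovery box, the same `move_volume` call with the original instance ID and the original device path would move the volume back, followed by `aws ec2 start-instances`.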


Now, on to the fun command line steps!

Log in / SSH into your Recovery box (you can look up your Recovery instance's IP / host address in the Instances section in AWS).

Set your current working directory to the root:

cd /

Create a directory to hold your problem EBS volume:

sudo mkdir bad

Mount it:

sudo mount /dev/xvdf /bad

NOTE: If this doesn't work, you may have encountered the same issue I had, so try the following instead (thanks to this answer: https://serverfault.com/a/632906/356372):

sudo mount /dev/xvdf1 /bad

If that goes well, you should now be able to cd into that /bad directory and see the same file structure you would normally see when it's mounted on your original (currently problematic) instance.

VERY IMPORTANT: Note in the following couple of steps how I'm using ./etc and not /etc, so that the permissions / ownership are modified on the /bad/etc/sudoers file, NOT on this Recovery EBS volume! One broken volume is enough, right?

Try:

cd /bad

ls -l ./etc/sudoers

... then follow that by:

stat --format %a ./etc/sudoers

Confirm that this file's ownership and/or chmod value is in fact incorrect.
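To make that check less of an eyeball exercise, here is a small sketch that prints the owner and mode and reports whether they match what a stock sudoers file has. The function name `check_sudoers` is made up for illustration, it assumes GNU stat (as on Amazon Linux), and the expected values root:root and 0440 are my assumption about what a healthy /etc/sudoers looks like.

```shell
#!/bin/sh
# Print owner, group and octal mode of a file, and succeed only when the
# owner is root:root and the mode is 440 (assumed "healthy" values).
# Requires GNU stat for the --format option.
check_sudoers() {
    file=$1
    owner=$(stat --format '%U:%G' "$file")
    mode=$(stat --format '%a' "$file")
    echo "$file owner=$owner mode=$mode"
    [ "$owner" = "root:root" ] && [ "$mode" = "440" ]
}

# Example (run from inside /bad):
#   check_sudoers ./etc/sudoers || echo "needs fixing"
```

The relative ./etc/sudoers path in the example matters for the same reason as in the steps above: it points at the mounted problem volume, not at the Recovery box's own file.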

To fix its chown ownership, do this:

sudo chown root:root ./etc/sudoers

To fix its chmod value, do this (a stock sudoers file is mode 0440, read-only for owner and group):

sudo chmod 0440 ./etc/sudoers
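Since touching the wrong /etc/sudoers is the one mistake you really don't want to make here, the two fixes can be wrapped in a small guarded helper. This is a sketch, not a definitive tool: `fix_mounted_sudoers` is a hypothetical name, the guard only catches an empty path or a literal "/", and the 0440 mode is an assumption based on what a stock sudoers file ships with. The chown only happens when the script runs as root.

```shell
#!/bin/sh
# Fix ownership and mode of etc/sudoers under a mounted volume root.
fix_mounted_sudoers() {
    mount_root=$1
    # Refuse an empty path or "/" so the Recovery box's own file is never touched.
    case "$mount_root" in
        ""|"/") echo "refusing to operate on '$mount_root'" >&2; return 1 ;;
    esac
    target="$mount_root/etc/sudoers"
    [ -f "$target" ] || { echo "no such file: $target" >&2; return 1; }
    if [ "$(id -u)" = "0" ]; then
        chown root:root "$target" || return 1
    else
        echo "not root: skipping chown (re-run as root)" >&2
    fi
    # 0440 is assumed here: read-only for owner and group.
    chmod 0440 "$target"
}

# Example, from a script run as root on the Recovery box:
#   fix_mounted_sudoers /bad
```

Because a shell function can't be called through sudo directly, in practice this would live in a script file that is itself run with sudo.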

Now it's just a matter of reversing the steps!

Once that's done, time to unmount:

cd /

sudo umount /bad

Back to the AWS configuration page, go to the EBS Volume section.

- Unmount the fixed volume from the Recovery instance.

- Refresh, confirm it's available.

- Mount it back on the original instance (DO NOT FORGET - use the same /dev/whatever/ volume path your original instance was using prior to all these steps).

- Refresh, confirm it's in-use.

- Now, navigate to the Instances section and Start your original instance again. (It might take a few seconds / minutes to restart.)

- If all is well, you should now be able to log in and SSH into your EC2 instance and use sudo once again!

Congrats if it worked for you too!

If not.... I'm so.... so very sorry this is happening to you :(

Solution 2

Yeah, you've broken it real good. You can't use sudo because of the file's ownership, and Amazon's instances are set up to disallow root access without sudo.

If restarting the instance didn't work then the changes you made are stored on the EBS volume you had attached. Fixing it involves starting up another, fresh instance and using that to mount and alter the EBS volume that has the borked file. Doing this is described here in Amazon's own documentation as well as summarized in the answer to the question you linked.

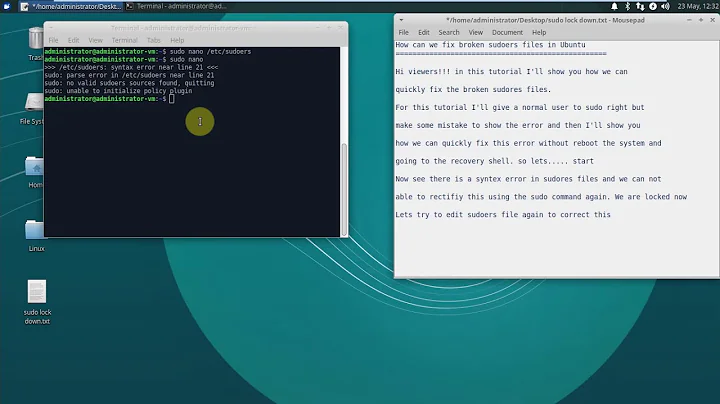

After you get that fixed but before you try to edit /etc/sudoers again, read up on visudo, a tool that puts you in an editor preloaded with /etc/sudoers and performs sanity checks before saving.


chamberlainpi

Updated on September 18, 2022

Comments

-

chamberlainpi over 1 year

Backstory: I was trying to get PHP to execute node, but ended up changing permissions / ownership on probably more files and folders than I should have.

At one point I stumbled upon someone's suggestion to change /etc/sudoers so that it sets Defaults requiretty. I tried to nano into it, and couldn't. So then I got the idea to sudo chown ec2-user /etc/sudoers and I've been stuck with this issue ever since.

I was able to go back in nano and revert my text change, but the ownership of the file is what's causing the issue now.

I think the closest matching answered question on here is this one: Broken sudo on amazon web services ec2 linux centOS (but this one relates to a parsing error, a typo in the file I'm assuming).

How can I fix this? Have I permanently messed up this EC2 instance?

-

chamberlainpi almost 8 years

Cool, I'll look into that tomorrow. I was able to access the folders I needed to move the node executable over to some subfolder of /var/www/... and after changing chmod on it my PHP script ran it fine. So no more need to modify that 'sudoers' file anymore! Just messed it up for no reason ... sigh