How to force Chrome to use integrated GPU for decoding?

Solution 1

I had this problem for a few months, and yesterday I found a solution:

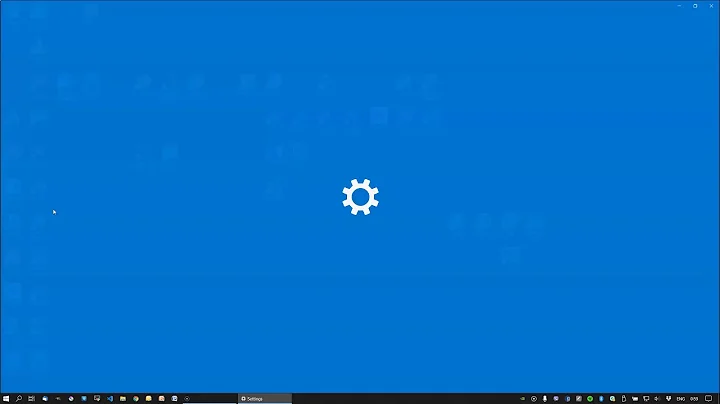

Display settings -> Graphics settings -> Choose an app to set preference: Classic app -> Browse -> Firefox.exe (chrome.exe in your case) -> click the Firefox entry that appears below the Browse button -> Options. I then chose Power saving, which makes my browser use the iGPU. High performance would make the browser use my dedicated GPU.
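The same preference can probably be set without the GUI. The sketch below rests on an assumption that is not stated in this thread: that Windows 10 stores the per-app choice as a string value under HKCU\Software\Microsoft\DirectX\UserGpuPreferences, named after the full path of the executable, with data of the form GpuPreference=N; (1 for Power saving, 2 for High performance). The function names are made up for illustration. Check the key in regedit after setting a preference through Graphics settings before relying on it.

```python
import sys

# Assumption: registry location used by the Graphics settings page (verify in regedit).
PREF_KEY = r"Software\Microsoft\DirectX\UserGpuPreferences"

# Assumed meaning of the values: 0 = let Windows decide, 1 = power saving, 2 = high performance.
LET_WINDOWS_DECIDE = 0
POWER_SAVING = 1       # integrated GPU
HIGH_PERFORMANCE = 2   # discrete GPU


def preference_value(pref: int) -> str:
    """Build the string stored for one executable, e.g. 'GpuPreference=1;'."""
    if pref not in (LET_WINDOWS_DECIDE, POWER_SAVING, HIGH_PERFORMANCE):
        raise ValueError(f"unknown GPU preference: {pref}")
    return f"GpuPreference={pref};"


def set_gpu_preference(exe_path: str, pref: int) -> None:
    """Write the preference for exe_path into the current user's registry hive."""
    if sys.platform != "win32":
        raise OSError("this sketch only works on Windows")
    import winreg  # imported here so the module still loads on other platforms

    with winreg.CreateKey(winreg.HKEY_CURRENT_USER, PREF_KEY) as key:
        # The value name is the full path to the executable.
        winreg.SetValueEx(key, exe_path, 0, winreg.REG_SZ, preference_value(pref))
```

For Chrome this would be called as set_gpu_preference(r"C:\Program Files (x86)\Google\Chrome\Application\chrome.exe", POWER_SAVING), followed by a browser restart.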

Solution 2

The simple solution is the one given by Unrul3r: go to Graphics settings in the Windows 10 Display settings, add Google Chrome or Firefox in Classic app mode, press the Options button, and select High performance. Run a WebGL benchmark and you will see the web browser using GPU-1 (or whichever number your discrete GPU has). This works for any other application too, such as Word or Excel.

Another option to improve performance in Google Chrome, especially video playback (e.g. YouTube), is to open the flags configuration page (chrome://flags) and set GPU rendering to Enabled; see the picture:

Google Chrome flags configuration

As you can see in the next image, my discrete GPU is GPU-1, an AMD Radeon R7.

Spanish description (translated): Enabling use of the discrete GPU in Google Chrome (ATI Radeon); enabling hardware video playback on YouTube.

Solution 3

If you wish to automate the task of disabling the Nvidia adapter, launching Chrome and re-enabling the adapter, you may use a batch script (.bat file) that you can put on the desktop as an icon.

Using the Microsoft command-line tool Devcon.exe:

devcon.exe disable "NVIDIA GeForce GTX 970"

start "" "C:\Program Files (x86)\Google\Chrome\Application\chrome.exe"

timeout 5

devcon.exe enable "NVIDIA GeForce GTX 970"

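Check in Device Manager, under Display adapters, that the adapter name above matches yours. If devcon reports no matching devices, note that it appears to match hardware ID or device instance ID patterns rather than display names, so you may need to pass the adapter's hardware ID instead; devcon hwids =display lists the IDs of the display adapters.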

Other utilities that can be used instead of Devcon are DevManView and MultiMonitorTool.
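One weakness of a plain batch script is that the adapter stays disabled if launching the browser fails. As a rough sketch of the same disable, launch, wait, re-enable sequence with that case covered, the Python function below wraps the launch in try/finally. The function name and its parameters are hypothetical; adapter_id stands for whatever identifier devcon accepts for your card, and the run, launch, and sleep parameters exist only so the sequence can be exercised without touching real hardware.

```python
import subprocess
import time


def launch_with_adapter_disabled(adapter_id, chrome_path, delay=5,
                                 run=subprocess.run,
                                 launch=subprocess.Popen,
                                 sleep=time.sleep):
    """Disable the adapter, start Chrome, wait, then re-enable the adapter."""
    # Must run from an elevated prompt, with devcon.exe on the PATH.
    run(["devcon.exe", "disable", adapter_id], check=True)
    try:
        launch([chrome_path])   # returns immediately; Chrome keeps running
        sleep(delay)            # give Chrome time to pick its GPU
    finally:
        # Always re-enable, even if Chrome could not be started.
        run(["devcon.exe", "enable", adapter_id], check=True)
```

The five-second default mirrors the timeout in the batch script; a slower machine may need a longer delay.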

Solution 4

Spent a few hours tracking down a working solution to this with Windows 10 October 2018 Update. As of right now, using the directions provided by Unrul3r, I have been able to successfully enable hardware VP9 decoding in Chrome powered by the iGPU.

(Link to screenshot) Setup is as follows:

AMD Radeon R9-290 dGPU -> Connected via DisplayPort to 3840x2160 (4K) primary monitor.

Intel Core i5-8400 w/UHD 630 iGPU -> Connected via motherboard HDMI to 1920x1080 secondary monitor.

(Link to screenshot) This is what you want to see in chrome://gpu to verify everything is working:

Now, here's a quick walkthrough on how to get this working correctly.

Setup requirements

- A primary and secondary monitor

- At least one monitor must be physically connected to the iGPU outputs on the motherboard

- Connect the primary monitor and any others to your dGPU

Step-by-step

- Enter BIOS and make sure the iGPU is set to "Enabled" (not "Auto"). Check to make sure your dGPU is still set as the first enabled. Save and restart.

- Boot to Windows. It should automatically download and install the Intel drivers for the iGPU since it is now enabled and in-use. Personally I grabbed the full driver package for my UHD 630 from Intel and installed it manually.

- Restart once iGPU drivers are installed.

- Once back in Windows, open Display Settings and adjust the multiple display settings and positioning if your setup has changed.

- Near the bottom of the Display Settings window, click on Graphics settings. Leave the dropdown on Classic app, then click Browse and find the executable for the program you want to force to the iGPU.

- Once added, click the new listing, select Options, then select Power Saving to always force the iGPU to handle the rendering for that app.

(Link to screenshot) What you should see in the Graphics settings window when finished:

And there we go! Testing with this 8K YouTube video of the Unigine Superposition benchmark now shows ~2-3% CPU usage and <1% dropped frames (totally smooth to the eye, no stuttering). In comparison without the hardware decoder, CPU usage would be capped out, and the result was an unwatchable mess with >50% of frames dropped.


Gepard

Updated on September 18, 2022

Comments


Gepard almost 2 years

I want Chrome to use the iGPU (Intel UHD Graphics 630 on an i7-8700K, referred to below as GT630) for video decoding, especially VP9 decoding on YouTube. My discrete GPU is a GTX 970, which cannot decode VP9 in hardware. Currently VP9 is software-decoded on my system, which loads the CPU and occasionally skips frames.

Both GPUs are detected by the system (Windows 10): GT630 as GPU0 and GTX970 as GPU1. I use 2 screens. I don't care where they need to be connected (970 or Motherboard) as long as Chrome uses iGPU and I can keep using Nvidia for gaming. One of the screens is 144Hz. The motherboard is ASUS ROG Hero.

I've tried different settings, but nothing seems to work as intended, and people usually look for a solution to the exact opposite problem...

Update (command switches):

Running chrome with

--gpu-active-vendor-id=0x8086 --gpu-active-device-id=0x3E92

or

--gpu-vendor-id=0x8086 --gpu-device-id=0x3E92

or

--gpu-testing-vendor-id=0x8086 --gpu-testing-device-id=0x3E92

results in:

GPU0 VENDOR = 0x10de, DEVICE= 0x13c2 ACTIVE

GPU1 VENDOR = 0x8086, DEVICE= 0x3e92

GL_RENDERER ANGLE (NVIDIA GeForce GTX 970 Direct3D11 vs_5_0 ps_5_0)

Vivaldi browser seems to accept

--gpu-testing-vendor-id=0x8086 --gpu-testing-device-id=0x3E92

which results in:

GPU0 VENDOR = 0x8086, DEVICE= 0x3e92 ACTIVE

GL_RENDERER ANGLE (NVIDIA GeForce GTX 970 Direct3D11 vs_5_0 ps_5_0)

However, it still uses Nvidia as the renderer and doesn't utilize the GT630 iGPU.

The only method that works so far is disabling the Nvidia card in Device Manager, launching Chrome, and re-enabling the Nvidia card. When Chrome is launched without the discrete card present in the system, it runs with the following configuration, which is the only one that uses hardware decoding from the GT630:

GPU0 VENDOR = 0x8086, DEVICE= 0x3e92 ACTIVE

GPU1 VENDOR = 0x10de, DEVICE= 0x13c2

GL_RENDERER ANGLE (Intel(R) UHD Graphics 630 Direct3D11 vs_5_0 ps_5_0)
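The Vivaldi case shows that the ACTIVE marker and the renderer can disagree, so both are worth checking. As a small sketch, the Python helpers below parse text copied from chrome://gpu and report the GPU marked active and the renderer name. They assume the lines look exactly like the ones quoted in this post (optionally with asterisks around ACTIVE); the function names and the vendor table are illustrative, not part of Chrome.

```python
import re

# Matches lines such as: "GPU0 VENDOR = 0x8086, DEVICE= 0x3e92 ACTIVE"
GPU_LINE = re.compile(
    r"GPU(?P<index>\d+)\s+VENDOR\s*=\s*(?P<vendor>0x[0-9a-fA-F]+),\s*"
    r"DEVICE\s*=\s*(?P<device>0x[0-9a-fA-F]+)(?P<active>[ \t]+\*?ACTIVE\*?)?"
)
# Greedy capture so the nested "(R)" in "Intel(R)" does not end the match early.
RENDERER_LINE = re.compile(r"GL_RENDERER\s+ANGLE\s*\((?P<name>.+)\)")

# PCI vendor IDs: the first two appear in this post.
VENDORS = {0x8086: "Intel", 0x10DE: "NVIDIA", 0x1002: "AMD"}


def active_gpu(text):
    """Return details of the GPU marked ACTIVE, or None if no line is marked."""
    for m in GPU_LINE.finditer(text):
        if m.group("active"):
            vendor_id = int(m.group("vendor"), 16)
            return {
                "index": int(m.group("index")),
                "vendor_id": vendor_id,
                "device_id": int(m.group("device"), 16),
                "vendor": VENDORS.get(vendor_id, "unknown"),
            }
    return None


def renderer_name(text):
    """Return the text inside 'GL_RENDERER ANGLE (...)', or None if absent."""
    m = RENDERER_LINE.search(text)
    return m.group("name") if m else None


def uses_intel_renderer(text):
    """True only when the renderer line itself names an Intel GPU."""
    name = renderer_name(text)
    return name is not None and "Intel" in name
```

Hardware decoding on the iGPU only showed up here when both checks pointed at Intel, so uses_intel_renderer is the stricter of the two tests.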

guest (about 6 years): Disclaimer: This is an educated guess. I welcome any disputation backed up with proof, e.g. Linux has no such limitation, as stated here.


Gepard (about 6 years): Actually, I found out I can force Chrome to use the iGPU as the renderer if I temporarily disable the Nvidia adapter in Device Manager. After re-enabling it, Chrome keeps using the iGPU, and at the same time I can use the Nvidia card for games.

Unrul3r (about 6 years): Sure, here you go: i5 6600k, R9 280x. Why does it matter? Are these options not showing up for you?


guest (about 6 years): I thought this new feature only worked on laptops. Given your input, it looks like I was wrong.


Unrul3r (about 6 years): They are from the last Windows 10 April Update.


Brian Sutherland (over 5 years): Awesome. I tried doing this a few years ago when I got my first 144 Hz monitor and figured out you could disable the dGPU, open Chrome, then re-enable the dGPU, but that was too much of a pain, so I would just turn off acceleration and watch videos at a lower resolution. This solution has worked, and Chrome now uses GPU1 instead of GPU0.


Valen (almost 5 years): Thanks a lot. I found that I had already set the preference for obs-studio but had completely forgotten about it, and I spent a lot of time figuring out why the applications weren't using the iGPU.