How to get memory usage per process with sar, sysstat?

Solution 1

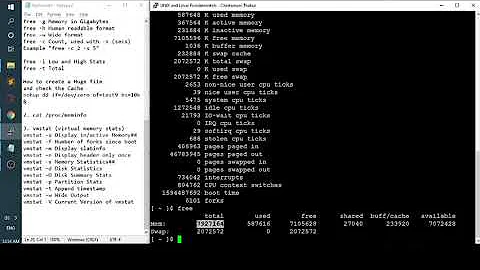

As Tensibai mentioned, you can extract this info from the /proc filesystem, but in most cases you need to determine the trending yourself. There are several places which could be of interest:

- /proc/[pid]/statm

Provides information about memory usage, measured in pages. The columns are:

* size (1): total program size (same as VmSize in /proc/[pid]/status)
* resident (2): resident set size (same as VmRSS in /proc/[pid]/status)
* shared (3): number of resident shared pages, i.e., backed by a file (same as RssFile+RssShmem in /proc/[pid]/status)
* text (4): text (code)
* lib (5): library (unused since Linux 2.6; always 0)
* data (6): data + stack
* dt (7): dirty pages (unused since Linux 2.6; always 0)

cat /proc/31520/statm

1217567 835883 84912 29 0 955887 0
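Since statm counts pages rather than bytes, a conversion step is usually needed before the numbers can be compared with the kB figures in status. A minimal sketch, assuming a shell with awk available; the function name statm_kb is made up for illustration, and the page size defaults to whatever getconf PAGESIZE reports:

```bash
# Convert the first three statm columns (size, resident, shared) from pages to kB.
# Usage: statm_kb <statm-file> [page-size-in-bytes]
# statm_kb is a hypothetical helper name, not part of any existing tool.
statm_kb() {
    local file="$1"
    local page_bytes="${2:-$(getconf PAGESIZE)}"
    awk -v pb="$page_bytes" '{
        printf "size_kb=%d resident_kb=%d shared_kb=%d\n",
               $1 * pb / 1024, $2 * pb / 1024, $3 * pb / 1024
    }' "$file"
}
```

Fed the sample line above with a 4096-byte page size, this prints size_kb=4870268 resident_kb=3343532 shared_kb=339648. On a live system it would be called as statm_kb /proc/31520/statm.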

- memory-related fields in /proc/[pid]/status (notably Vm* and Rss*), which might be preferable if you also collect other info from this file

* VmPeak: Peak virtual memory size.
* VmSize: Virtual memory size.
* VmLck: Locked memory size (see mlock(3)).
* VmPin: Pinned memory size (since Linux 3.2). These are pages that can't be moved because something needs to directly access physical memory.
* VmHWM: Peak resident set size ("high water mark").
* VmRSS: Resident set size. Note that the value here is the sum of RssAnon, RssFile, and RssShmem.
* RssAnon: Size of resident anonymous memory (since Linux 4.5).
* RssFile: Size of resident file mappings (since Linux 4.5).
* RssShmem: Size of resident shared memory (includes System V shared memory, mappings from tmpfs(5), and shared anonymous mappings) (since Linux 4.5).
* VmData, VmStk, VmExe: Size of data, stack, and text segments.
* VmLib: Shared library code size.
* VmPTE: Page table entries size (since Linux 2.6.10).
* VmPMD: Size of second-level page tables (since Linux 4.0).
* VmSwap: Swapped-out virtual memory size by anonymous private pages; shmem swap usage is not included (since Linux 2.6.34).

server:/> egrep '^(Vm|Rss)' /proc/31520/status

VmPeak: 6315376 kB

VmSize: 4870332 kB

VmLck: 0 kB

VmPin: 0 kB

VmHWM: 5009608 kB

VmRSS: 3344300 kB

VmData: 3822572 kB

VmStk: 1040 kB

VmExe: 116 kB

VmLib: 146736 kB

VmPTE: 8952 kB

VmSwap: 0 kB
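To get the trending mentioned earlier, these fields have to be sampled repeatedly and stored somewhere. The following is only a sketch of one way to do that, assuming a shell with awk; status_mem_line and log_status_mem are hypothetical helper names, and the field selection, interval and log path are arbitrary choices:

```bash
# Print selected Vm* fields of a status file as "name=value" pairs (kB) on one line.
# Usage: status_mem_line <status-file>
status_mem_line() {
    awk '/^(VmPeak|VmSize|VmHWM|VmRSS|VmSwap):/ {
        sub(":", "", $1)                  # "VmRSS:" -> "VmRSS"
        printf "%s%s=%s", sep, $1, $2     # $2 is the value in kB
        sep = " "
    }
    END { print "" }' "$1"
}

# Append a timestamped record for one PID until the process goes away.
# Usage: log_status_mem <pid> [interval-seconds] [log-file]
# The default log file name is an assumption made for this example.
log_status_mem() {
    local pid="$1"
    local interval="${2:-60}"
    local out="${3:-/tmp/mem-$1.log}"
    while [ -r "/proc/$pid/status" ]; do
        printf '%s %s\n' "$(date +%FT%T)" \
            "$(status_mem_line "/proc/$pid/status")" >> "$out"
        sleep "$interval"
    done
}
```

Running log_status_mem 31520 30 in the background would then leave one line per sample in /tmp/mem-31520.log, which can be graphed or compared later against the time of an OOM event.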

Some processes can, through their behaviour and not through their actual memory footprint, contribute to the overall system memory starvation and eventual demise. So it might also be of interest to look at the OOM Killer related information, which already takes into account some trending information:

- /proc/[pid]/oom_score

This file displays the current score that the kernel gives to this process for the purpose of selecting a process for the OOM-killer. A higher score means that the process is more likely to be selected by the OOM-killer. The basis for this score is the amount of memory used by the process, with increases (+) or decreases (-) for factors including:

* whether the process creates a lot of children using fork(2) (+);
* whether the process has been running a long time, or has used a lot of CPU time (-);
* whether the process has a low nice value (i.e., > 0) (+);
* whether the process is privileged (-); and
* whether the process is making direct hardware access (-).

The oom_score also reflects the adjustment specified by the oom_score_adj or oom_adj setting for the process.

server:/> cat /proc/31520/oom_score

103
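A single score says little on its own; it becomes more useful when compared across all processes. As a rough sketch (top_oom_scores is a hypothetical helper name, and the optional root argument exists only so the function can be pointed at a copy of /proc), the current top candidates could be listed like this:

```bash
# List the processes with the highest oom_score as "score pid name".
# Usage: top_oom_scores [proc-root] [count]
top_oom_scores() {
    local root="${1:-/proc}"
    local n="${2:-10}"
    local d pid score name
    for d in "$root"/[0-9]*; do
        [ -r "$d/oom_score" ] || continue
        pid="${d##*/}"
        # A process may exit between the glob and the read, so tolerate failures.
        score=$(cat "$d/oom_score" 2>/dev/null) || continue
        name=$(cat "$d/comm" 2>/dev/null) || name='?'
        printf '%s %s %s\n' "$score" "$pid" "$name"
    done | sort -k1,1nr -k2,2n | head -n "$n"
}
```

Calling top_oom_scores /proc 5 from cron and appending the output to a log gives a cheap history of which processes the kernel considered the most likely victims at each point in time.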

- /proc/[pid]/oom_score_adj (or its deprecated predecessor /proc/[pid]/oom_adj, if need be)

This file can be used to adjust the badness heuristic used to select which process gets killed in out-of-memory conditions.

The badness heuristic assigns a value to each candidate task ranging from 0 (never kill) to 1000 (always kill) to determine which process is targeted. The units are roughly a proportion along that range of allowed memory the process may allocate from, based on an estimation of its current memory and swap use. For example, if a task is using all allowed memory, its badness score will be 1000. If it is using half of its allowed memory, its score will be 500.

There is an additional factor included in the badness score: root processes are given 3% extra memory over other tasks.

The amount of "allowed" memory depends on the context in which the OOM-killer was called. If it is due to the memory assigned to the allocating task's cpuset being exhausted, the allowed memory represents the set of mems assigned to that cpuset (see cpuset(7)). If it is due to a mempolicy's node(s) being exhausted, the allowed memory represents the set of mempolicy nodes. If it is due to a memory limit (or swap limit) being reached, the allowed memory is that configured limit. Finally, if it is due to the entire system being out of memory, the allowed memory represents all allocatable resources.

The value of oom_score_adj is added to the badness score before it is used to determine which task to kill. Acceptable values range from -1000 (OOM_SCORE_ADJ_MIN) to +1000 (OOM_SCORE_ADJ_MAX). This allows user space to control the preference for OOM-killing, ranging from always preferring a certain task or completely disabling it from OOM killing. The lowest possible value, -1000, is equivalent to disabling OOM-killing entirely for that task, since it will always report a badness score of 0.

Consequently, it is very simple for user space to define the amount of memory to consider for each task. Setting an oom_score_adj value of +500, for example, is roughly equivalent to allowing the remainder of tasks sharing the same system, cpuset, mempolicy, or memory controller resources to use at least 50% more memory. A value of -500, on the other hand, would be roughly equivalent to discounting 50% of the task's allowed memory from being considered as scoring against the task.

For backward compatibility with previous kernels, /proc/[pid]/oom_adj can still be used to tune the badness score. Its value is scaled linearly with oom_score_adj. Writing to /proc/[pid]/oom_score_adj or /proc/[pid]/oom_adj will change the other with its scaled value.

server:/> cat /proc/31520/oom_score_adj

0
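Changing the adjustment is a plain write to the same file. The sketch below is one possible wrapper, not an established tool: set_oom_score_adj is a hypothetical name, the range check mirrors the -1000 to +1000 limits quoted above, and the optional root argument is only there to allow dry runs against a directory other than /proc. Note that lowering the value for a process normally requires elevated privileges.

```bash
# Write a validated oom_score_adj value for one process.
# Usage: set_oom_score_adj <pid> <value> [proc-root]
set_oom_score_adj() {
    local pid="$1"
    local value="$2"
    local root="${3:-/proc}"
    local file="$root/$pid/oom_score_adj"

    # Accept only an optionally negative decimal integer.
    case "$value" in
        ''|-|*[!0-9-]*|?*-*)
            echo "oom_score_adj must be an integer, got '$value'" >&2
            return 2 ;;
    esac
    # Enforce the documented range OOM_SCORE_ADJ_MIN..OOM_SCORE_ADJ_MAX.
    if [ "$value" -lt -1000 ] || [ "$value" -gt 1000 ]; then
        echo "oom_score_adj must be between -1000 and 1000" >&2
        return 2
    fi
    if [ ! -w "$file" ]; then
        echo "cannot write $file" >&2
        return 1
    fi
    printf '%s\n' "$value" > "$file"
}
```

For example, set_oom_score_adj 31520 -500 would make the process from the examples above a less attractive target, while set_oom_score_adj 31520 2000 is rejected before anything is written.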

Solution 2

Is it a hard requirement to use only sar and sysstat? If not, you might want to look at collectl or collectd. These let you study memory usage over time at per-process granularity. It's really not worth spending your time writing your own scripts to parse /proc as the other answer suggests; this is a solved problem.


kokito

Updated on September 18, 2022

Comments

- kokito almost 2 years

Can I get memory usage per process with Linux? We monitor our servers with sysstat/sar. But besides seeing that memory went through the roof at some point, we can't pinpoint which process was getting bigger and bigger. Is there a way with sar (or other tools) to get memory usage per process and look at it later on?

- kokito almost 7 years

This is an amazing response, thanks a lot. We were starting to have a bunch of OOMs but weren't sure which process(es) was causing them, since they didn't happen that often before. The purpose of this question was to look for an "easy" way to log the memory usage of the system and its evolution and correlate it with operations before and after an OOM. Anyhoo, I learned a lot from your reply, so thanks again.
