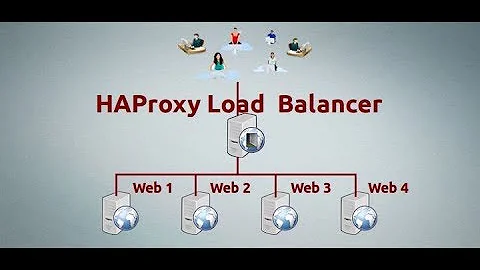

How to horizontally scale SSL termination behind HAProxy load balancing?

Firstly, this adds unnecessary complexity to your webservers.

Secondly, terminating the SSL connection at the LB means you can use keepalive on the client side, which reduces how often the expensive part of establishing the connection has to happen. Also, the most efficient use of resources is to group like workloads. Many people separate static and dynamic content; with SSL at the LB, both can come from different servers through the same connection.

Thirdly, SSL generally scales at a different rate than your web app requires. I think the lack of examples is due to the fact that a single LB pair or round-robin DNS is enough for most people. It seems to me that you may be overestimating the SSL workload.

Also I am not sure about your reasoning regarding security. In addition to the fact that the webserver is already running far more services with possible exploits, if there are any vulnerabilities in the LB then you have just introduced them onto your webservers too!
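For illustration, terminating SSL at the load balancer could look roughly like the sketch below. It assumes HAProxy 1.5 built with SSL support; the cookie name `SERVERID` and the `webA:8080` / `webB:8080` addresses are hypothetical (in the question's current set-up Tomcat only listens on loopback), and the certificate path is the placeholder used in the question. Because the LB runs in http mode here, cookie-based persistence becomes available.

```haproxy
# Sketch only, not a drop-in configuration.
# Assumed: HAProxy 1.5+ with SSL support; server addresses and cookie name are hypothetical.
frontend frontend_ssl
    mode http
    bind *:443 ssl crt /path/to/default.pem
    # keep the client-side connection alive, close the server side after each response
    option http-server-close
    default_backend backend_application

backend backend_application
    mode http
    balance roundrobin
    # insert a cookie naming the chosen server so later requests return to it
    cookie SERVERID insert indirect nocache
    server webA webA:8080 check cookie webA
    server webB webB:8080 check cookie webB
```

With this layout the certificates live on the LB, which is exactly the trade-off the question wants to avoid, so treat it as a comparison point rather than a recommendation for that set-up.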


BrionS

Updated on September 18, 2022

Comments

-

BrionS over 1 year

I've been looking around and no one seems to be trying to scale SSL termination the way I am and I'm curious as to why my approach seems so uncommon.

Here's what I want to do followed by why:

     10.0.1.1   10.0.1.2 - 10.0.1.5
    -----+--------+----+----+----+
         |        |    |    |    |
      +--+--+   +-+-++-+-++-+-++-+-+
      | LB1 |   | A || B || C || D |
      +-----+   +---++---++---++---+
    haproxy 1.5 haproxy 1.5 + tomcat
     tcp mode    http mode

Why this crazy set-up of Internet -> HAProxy (tcp mode) -> HAProxy (http mode) -> Tomcat? In two words: security and scalability.

By offloading the SSL termination to the web backends (A-D) that run HAProxy 1.5 and Tomcat listening only on the loopback interface I can guarantee that all traffic is encrypted from the client to the server with no possibility of sniffing from anything not local to the web backend.

Additionally, as SSL demand increases I can simply spin up new (cheap) backend servers behind the load balancer.

Lastly it removes the requirement of having the certs live on the external-facing LB and adds additional security by doing so since a compromised LB will not have any pems or certs on it.

My situation seems very similar to this one: "Why no examples of horizontally scalable software load balancers balancing SSL?", but I am not using file-based sessions and, if possible, I'd like to avoid balancing by IP, since clients may be coming from behind a NAT.
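For reference, the IP-based affinity I would like to avoid would look something like the sketch below. The server names and ports match my set-up; `hash-type consistent` is an optional extra shown here as an assumption, not something I have deployed. Every client behind the same NAT address hashes to the same backend, which is why this is less than ideal.

```haproxy
# Sketch: source-IP affinity in tcp mode (the approach this question hopes to avoid)
backend backend_servers
    mode tcp
    balance source          # hash the client source IP to pick a server
    hash-type consistent    # assumption: keeps most mappings stable when servers change
    server webA:8443 webA:8443 check port 8443
    server webB:8443 webB:8443 check port 8443
```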

I've tried following the HAProxy instructions in the configuration document for using the stick table with SSL ID (http://cbonte.github.com/haproxy-dconv/configuration-1.5.html#4-stick%20store-response) but that does not appear to keep my session to one backend server (reloading the A/admin?stats page that shows the node-name bounces across all my backend servers).

Clearly the round-robin load balancing is working, but sticky sessions are not.

Here's an example of my LB configuration:

    global
        log 127.0.0.1 local0 notice
        maxconn 200
        daemon
        user appserver
        group appserver
        stats socket /tmp/haproxy

    defaults
        log global
        mode tcp

        timeout client 5000ms
        timeout connect 50000ms
        timeout server 50000ms

        option contstats

    frontend frontend_http
        log global
        bind *:80
        default_backend backend_http_servers

    frontend frontend_ssl
        log global
        bind *:443
        default_backend backend_servers

    listen stats :8888
        mode http
        stats enable
        stats hide-version
        stats uri /

    #################################################################################################
    ## NOTE: Anything below this section header will be generated by the bootstrapr process and may be
    ## re-generated at any time losing manual changes
    #################################################################################################
    ## BACKENDS
    #################################################################################################
    backend backend_http_servers
        mode tcp

        #option httpchk

        server webA:8081 webA:8081 check port 8081
        server webB:8081 webB:8081 check port 8081

    # This configuration is for HTTPS affinity from frontdoor to backend
    # Learn SSL session ID from both request and response and create affinity
    backend backend_servers
        mode tcp

        balance roundrobin
        option ssl-hello-chk
        #option httpchk

        # maximum SSL session ID length is 32 bytes
        stick-table type binary len 32 size 30k expire 30m

        acl clienthello req_ssl_hello_type 1
        acl serverhello rep_ssl_hello_type 2

        # use tcp content accepts to detects ssl client and server hello
        tcp-request inspect-delay 5s
        tcp-request content accept if clienthello

        # no timeout on response inspect delay by default
        tcp-response content accept if serverhello

        # SSL session ID (SSLID) may be present on a client or server hello
        # Its length is coded on 1 byte at offset 43 and its value starts
        # at offset 44
        # Match and learn on request if client hello
        stick on payload_lv(43,1) if clienthello

        # Learn on response if server hello
        stick store-response payload_lv(43,1) if serverhello

        ############################################
        # HTTPS BACKENDS
        ############################################
        server webA:8443 webA:8443 check port 8443
        server webB:8443 webB:8443 check port 8443

An example of my backend configuration for webA looks like:

    global
        log 127.0.0.1 local0 info
        maxconn 200
        daemon

    defaults
        log global
        mode http
        option dontlognull
        option forwardfor
        option httplog
        option httpchk # checks server using HTTP OPTIONS on / and marks down if not 2xx/3xx status

        retries 3
        option redispatch
        maxconn 200

        timeout client 5000
        timeout connect 50000
        timeout server 50000

    frontend frontend_http
        log global

        # only allow connections if the backend server is alive
        monitor fail if { nbsrv(backend_application) eq 0 }

        reqadd X-Forwarded-Proto:\ http # necessary for tomcat RemoteIPValve to report the correct client IP and port
        reqadd X-Forwarded-Protocol:\ http # necessary because who knows what's actually correct?
        reqadd X-Forwarded-Port:\ 80 # also here for safety

        bind *:8081
        default_backend backend_application

    frontend frontend_ssl
        log global

        # only allow connections if the backend server is alive
        monitor fail if { nbsrv(backend_application) eq 0 }

        reqadd X-Forwarded-Proto:\ https # necessary for tomcat RemoteIPValve to report the correct client IP and port
        reqadd X-Forwarded-Protocol:\ https # necessary because who knows what's actually correct?
        reqadd X-Forwarded-Port:\ 443 # also here for safety
        reqadd X-Forwarded-SSL:\ on # also here for safety

        bind *:8443 ssl crt /path/to/default.pem crt /path/to/additional/certs crt /path/to/common/certs
        default_backend backend_application

    #################################################################################################
    # Backends
    #################################################################################################
    backend backend_haproxy
        stats enable
        stats show-node
        stats uri /haproxy
        acl acl_haproxy url_beg /haproxy
        redirect location /haproxy if !acl_haproxy

    backend backend_application
        stats enable
        stats show-node
        stats uri /haproxy
        option httpclose
        option forwardfor
        acl acl_haproxy url_beg /haproxy
        server 127.0.0.1:8080 127.0.0.1:8080 check port 8080

In this configuration an SSL (or non-SSL) connection gets routed through the LB to one of the backends in a round-robin fashion. However, when I reload the page (make a new request) it's clear I move to another backend regardless of SSL or no.

I test this by going to https://LB/haproxy, which is the URL of the backend stats page with the node name (shows webA the first time, and webB after a reload, and so on with each subsequent reload). Going to http://LB:8888 shows the stats for the LB and shows my backends all healthy.

What do I need to change to get sessions to stick to one backend when SSL is terminated on the backend?

Edit: Question: Why not bounce across backend servers and store the session in a central store (like memcached)?

Answer: Because the legacy application is extremely fragile and breaks when the session is carried across servers. As long as the user stays on the same backend the application works as expected. This will be changed eventually (re-written) but not in the near term.

-

Admin about 11 years

You really need to split this into 2 (or more) questions. "Are you doing LB right" and "how to get sticky sessions working" are separate issues.


Admin about 11 years

Well, these are together because the question is how to do sticky sessions given this LB configuration. I've seen lots of other questions and answers that address sticky sessions using IP or cookies, and using cookies requires http mode in HAProxy.


Admin about 11 years

Well, that isn't the only thing you ask, and the whole of the backend part is irrelevant to balancing SSL and just confuses matters. It's not the way Stack Exchange is supposed to work, and you are unlikely to get a sensible answer unless you split and clarify it.


Admin about 11 years

Perhaps I did not make the question clear. Setting the design aside (I only asked about it as a courtesy, expecting people would provide suggestions on design changes anyway), the question is: how do I create sticky sessions on an HAProxy LB that is in tcp mode when SSL termination occurs on the backend servers (since cookies cannot be used, IP is less than ideal, and the suggestion in the HAProxy manual doesn't seem to work)?


Admin about 11 years

That's not how Stack Exchange works, and repeating it in the comments is no help either; you have already asked a question in the same question. Rating your solution rather than a generic problem provides no benefit to the community. When you ask clear-cut questions it can help other people with the same issue. Also, without all the irrelevant info it would have been far easier to spot your specific problem. Split your 2nd question into a new question and get an answer.


Admin about 11 years

I don't think I have a question that is generic enough to your and ServerFault's liking that has not already been asked, so I will accept your answer and be done. Thank you for your input.


-

BrionS about 11 years

There's a twist I didn't mention, which is part of the reason I'm doing it this way as well. The application is a multi-tenant application and there are many tenants in a legacy (poorly written) application that will run out of memory if too many sessions are held in the Tomcat instance. Keeping users on a single backend (even though their session is stored in memcached) limits the number of sessions in any given backend at any particular moment. If we bounce around, sooner or later Tomcat is crushed with too many sessions in memory.

-

jwbensley about 11 years

As per JamesRyan's answer, SSL termination doesn't scale at the same rate. I don't think there is any real benefit to having it on the back end unless your LBs and back ends aren't actually anywhere near each other (so there are security concerns). It sounds to me (if I have understood your above comment correctly) like you need to scale up more Tomcat instances, which is straightforward with HAProxy.


Xiong Chiamiov almost 10 years

Also, it's nice to be able to use as few Varnish instances as possible to keep hit rates up: if you have to put one Varnish instance on each app, you're spreading your cache out. And you should be using Varnish.
