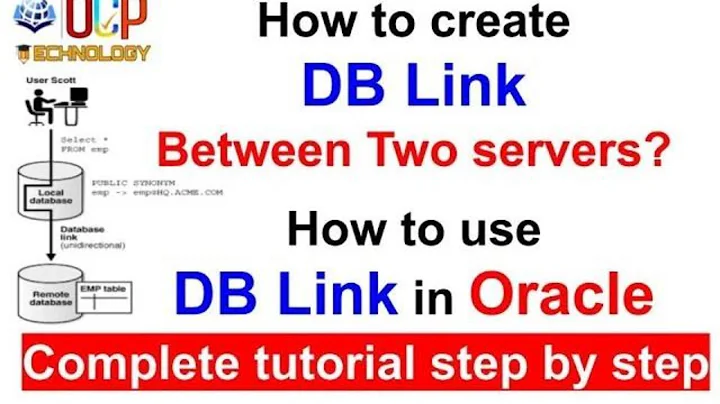

How to import schema from remote database using dblink or network link

You need to use Network import.

With network mode imports, one doesn't need any intermediate dump files. Data is exported across a database link and imported directly into the target database.

For example,

SQL> create user new_scott identified by tiger;

User created.

SQL> grant connect, resource to new_scott;

Grant succeeded.

SQL> grant read, write on directory dmpdir to new_scott;

Grant succeeded.

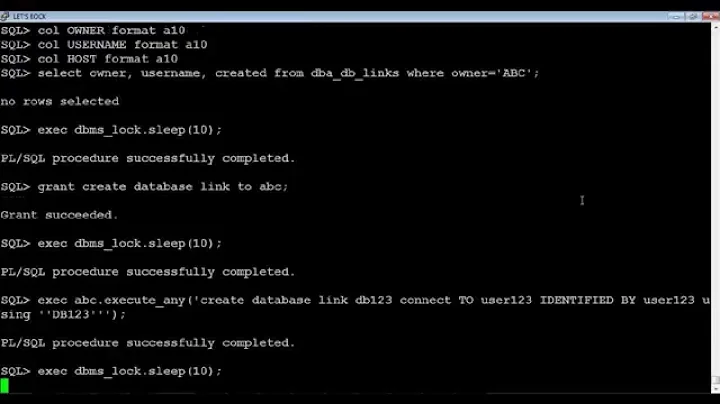

SQL> grant create database link to new_scott;

Grant succeeded.

SQL> conn new_Scott/tiger

Connected.

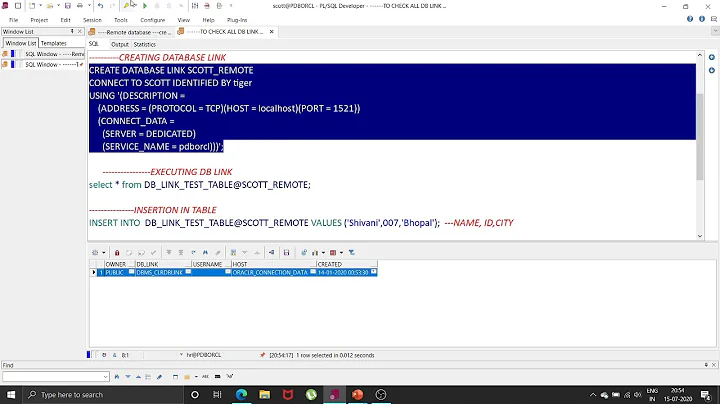

SQL> create database link old_scott connect to scott identified by tiger using 'orcl';

Database link created.

impdp new_scott/tiger DIRECTORY=dmpdir NETWORK_LINK=old_scott remap_schema=scott:new_scott

Related videos on Youtube

kittu

Worked on different stack of technologies with 6+ years of experience such as: Front-end stack: Javascript, Angular 7/8, HTML5/CSS3, Bootstrap Back-end stack: NodeJs, MongoDb with mongoose, AWS, RabbitMQ Tools: Git, VS Code, Jenkins Passionate about working on enterprise or product based companies with react, node and mongo tech stack

Updated on October 15, 2022Comments

-

kittu over 1 year

I have created a network link as follows:

CREATE PUBLIC DATABASE LINK "remote_lab2" CONNECT TO system IDENTIFIED BY itl USING '(DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP) (Host = 192.168.5.43) (Port = 1521) ) ) (CONNECT_DATA = (SID = orcl) ) )';And I am able to query on the remote database using the following query:

select * from bill@remote_lab; //fetches rows in the table 'bill'But I want to import all the schema as a DUMP file. For that I have created a directory called

mydumpin my D: drive. but I am not sure how to import all the schema as dump file from remote database to my local system?Update: After creating the network link, I am able to create a dump file in default directory

DATA_PUMP_DIRon remote system using:expdp VTMOLAP2/vtmolap2 schemas=VTMOLAP2 network_link=remote_lab directory=DATA_PUMP_DIR dumpfile=vtm.dmp logfile=vtm.log;Now I need to import the dump file in to my local system. How to I go about it?

-

kittu over 8 yearsUpdated the question. Please check

-

Lalit Kumar B over 8 years@Satyadev I see your update, and the answer is already there.

impdpcommand. -

kittu over 8 yearsSo I just change the query to:

impdp VTMOLAP2/vtmolap2 schemas=VTMOLAP2 network_link=remote_lab directory=DATA_PUMP_DIR dumpfile=vtm.dmp logfile=vtm.log;?? -

Lalit Kumar B over 8 yearsYou need to run this on the server you need to import.

-

kittu over 8 yearsThe expdp export is very slow. I have about 40gb and its been more than an hour and its still going on. Is there any way to increase the speed?

-

Lalit Kumar B over 8 yearsIt depends on your server configuration and network latency.

-

kittu over 8 yearsI guess its not only that. There are some parameters like

paralleletc are being used. Just found it on other site -

Lalit Kumar B over 8 yearsOnce again, parallel also depends on your server configuration. The number of cores, CPUs etc. See community.oracle.com/thread/894648?tstart=0

-

Welsige about 3 yearsIs it possible to import just a sample of data over dblink using impdp?

-

Lalit Kumar B about 3 years@Welsige Yes, use INCLUDE/EXCLUDE parameter. See examples here oracle-base.com/articles/10g/…