How to make JOIN query use index?

Solution 1

If you have lots of categories, this query cannot be made efficient. No single index can cover two tables at once in MySQL.

You have to do denormalization: add last_updated, has_comments and deleted into article_categories:

CREATE TABLE `article_categories` (

`article_id` int(11) NOT NULL DEFAULT '0',

`category_id` int(11) NOT NULL DEFAULT '0',

`last_updated` timestamp NOT NULL,

`has_comments` boolean NOT NULL,

`deleted` boolean NOT NULL,

PRIMARY KEY (`article_id`,`category_id`),

KEY `category_id` (`category_id`),

KEY `ix_articlecategories_category_comments_deleted_updated` (category_id, has_comments, deleted, last_updated)

) ENGINE=InnoDB DEFAULT CHARSET=utf8

and run this query:

SELECT *

FROM (

SELECT article_id

FROM article_categories

WHERE (category_id, has_comments, deleted) = (78, 1, 0)

ORDER BY

last_updated DESC

LIMIT 100, 20

) q

JOIN articles a

ON a.id = q.article_id

Of course you should update article_categories as well whenever you update relevant columns in article. This can be done in a trigger.

Note that the column has_comments is boolean: this will allow using an equality predicate to make a single range scan over the index.

Also note that the LIMIT goes into the subquery. This makes MySQL use late row lookups which it does not use by default. See this article in my blog about why do they increase performance:

If you were on SQL Server, you could make an indexable view over your query, which essentially would make a denormalized indexed copy of article_categories with the additional fields, automatically mainained by the server.

Unfortunately, MySQL does not support this and you will have to create such a table manually and write additional code to keep it in sync with the base tables.

Solution 2

Before getting to your specific query, it's important to understand how an index works.

With appropriate statistics, this query:

select * from foo where bar = 'bar'

... will use an index on foo(bar) if it's selective. That means, if bar = 'bar' amounts to selecting most of the table's rows, it'll go faster to just read the table and eliminate rows that don't apply. In contrast, if bar = 'bar' means only selecting a handful of rows, reading the index makes sense.

Suppose we now toss in an order clause and that you've indexes on each of foo(bar) and foo(baz):

select * from foo where bar = 'bar' order by baz

If bar = 'bar' is very selective, it's cheap to grab all rows that comply, and to sort them in memory. If it's not at all selective, the index on foo(baz) makes little sense because you'll fetch the entire table anyway: using it would mean going back and forth on disk pages to read the rows in order, which is very expensive.

Toss in a limit clause, however, and foo(baz) might suddenly make sense:

select * from foo where bar = 'bar' order by baz limit 10

If bar = 'bar' is very selective, it's still a good option. If it's not at all selective, you'll quickly find 10 matching rows by scanning the index on foo(baz) -- you might read 10 rows, or 50, but you'll find 10 good ones soon enough.

Suppose the latter query with indexes on foo(bar, baz) and foo(baz, bar) instead. Indexes are read from left to right. One makes very good sense for this potential query, the other might make none at all. Think of them like this:

bar baz baz bar

--------- ---------

bad aaa aaa bad

bad bbb aaa bar

bar aaa bbb bad

bar bbb bbb bar

As you can see, the index on foo(bar, baz) allows to start reading at ('bar', 'aaa') and fetching the rows in order from that point forward.

The index on foo(baz, bar), on the contrary, yields rows sorted by baz irrespective of what bar might hold. If bar = 'bar' is not at all selective as a criteria, you'll quickly run into matching rows for your query, in which case it makes sense to use it. If it's very selective, you may end up iterating gazillions of rows before finding enough that match bar = 'bar' -- it might still be a good option, but it's as optimal.

With that being addressed, let's get back to your original query...

You need to join articles with categories, to filter articles that are in a particular category, with more than one comment, that aren't deleted, and then sort them by date, and then grabbing a handful of them.

I take it that most articles are not deleted, so an index on that criteria won't be of much use -- it'll only slow down writes and query planning.

I presume most articles have a comment or more, so that won't be selective either. I.e. there's little need to index it either.

Without your category filter, index options are reasonably obvious: articles(last_updated); possibly with the comment count column to the right, and the deleted flag to the left.

With your category filter, it all depends...

If your category filter is very selective, it actually makes very good sense to select all rows that are within that category, sort them in memory, and pick the top matching rows.

If your category filter is not at all selective and yields almost all articles, the index on articles(last_update) makes sense: valid rows are all over the place, so read rows in order until you find enough that match and voilà.

In the more general case, it's just vaguely selective. To the best of my knowledge, the stats collected don't look into correlations much. Thus, the planner has no good way to estimate whether it'll find articles with the right category fast enough to be worth reading the latter index. Joining and sorting in memory will usually be cheaper, so the planner goes with that.

Anyway, you've two options to force the use of an index.

One is to acknowledge that the query planner is not perfect and to use a hint:

http://dev.mysql.com/doc/refman/5.5/en/index-hints.html

Be wary though, because sometimes the planner is actually correct in not wanting to use the index you'd like it to or vice version. Also, it may become correct in a future version of MySQL, so keep that in mind as you maintain your code over the years.

Edit: STRAIGHT_JOIN, as point out by DRap works too, with similar caveats.

The other is to maintain an extra column to tag frequently selected articles (e.g. a tinyint field, which is set to 1 when they belong to your specific category), and then add an index on e.g. articles(cat_78, last_updated). Maintain it using a trigger and you'll do fine.

Solution 3

You can use influence MySQL to use KEYS or INDEXES

For

- Ordering, or

- Grouping, or

- Join

For extra information, follow this link. I intended to use this for joining (i.e. USE INDEX FOR JOIN (My_Index) but it didn't work as expected. Removing the FOR JOIN part sped up my query significantly, from more than 3.5 hours, to 1-2 seconds. Simply because MySQL was forced to use the right index.

Solution 4

Use of a non-covering index is expensive. For each row, any uncovered columns have to be retrieved from the base table, using the primary key. So I'd first try to make the index on articles covering. That might help convince the MySQL query optimizer that the index is useful. For example:

KEY IX_Articles_last_updated (last_updated, id, title, comment_cnt, deleted),

If that doesn't help, you could play around with FORCE INDEX:

SELECT a.*

FROM article_categories AS c FORCE INDEX (IX_Articles_last_updated)

JOIN articles AS a FORCE INDEX (PRIMARY)

ON a.id = c.article_id

WHERE c.category_id = 78

AND a.comment_cnt > 0

AND a.deleted = 0

ORDER BY

a.last_updated

LIMIT 100, 20

The name of the index enforcing the primary key is always "primary".

Solution 5

First of all, I would recommend reading the article 3 ways MySQL uses indexes.

And now, when you know the basics, you can optimize this particular query.

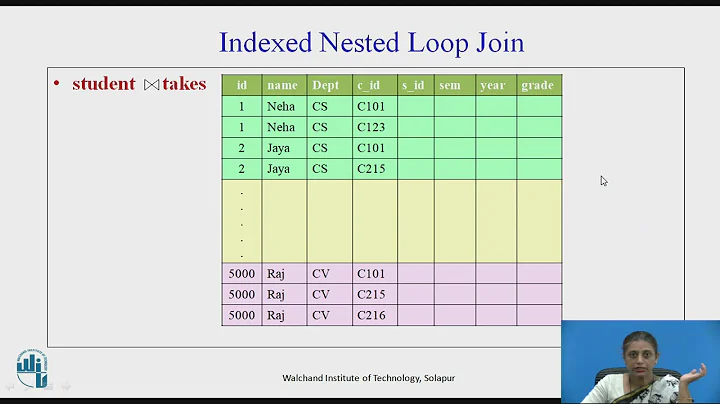

MySQL can not use index for ordering, it just can output data in an order of an index. Since MySQL uses nested loops for joining, the field you want to order by should be in the first table in the join (you see the order of join in EXPLAIN results, and can affect it by creating specific indexes and (if it does not help) by forcing required indexes).

Another important thing is that before ordering you fetch all columns for all filtered rows from a table and then skip probably most of them. It is much more effifient to get a list of required row ids and fetch only those rows.

To make this work you will need a covering index (deleted, comment_cnt, last_updated) on table a, and now you can rewrite the query as follows:

SELECT *

FROM (

SELECT a.id

FROM articles AS a,

JOIN article_categories AS c

ON a.id = c.article_id AND c.category_id = 78

WHERE a.comment_cnt > 0 AND a.deleted = 0

ORDER BY a.last_updated

LIMIT 100, 20

) as ids

JOIN articles USING (id);

P.S. Your table definition for table a does not contain comment_cnt column ;)

Related videos on Youtube

Silver Light

PHP developer. As hobby also a Python/Django programmer, guitarist, marshal art and bicycle enthusiast.

Updated on July 09, 2022Comments

-

Silver Light almost 2 years

I have two tables:

CREATE TABLE `articles` ( `id` int(11) NOT NULL AUTO_INCREMENT, `title` varchar(1000) DEFAULT NULL, `last_updated` datetime DEFAULT NULL, PRIMARY KEY (`id`), KEY `last_updated` (`last_updated`), ) ENGINE=InnoDB AUTO_INCREMENT=799681 DEFAULT CHARSET=utf8 CREATE TABLE `article_categories` ( `article_id` int(11) NOT NULL DEFAULT '0', `category_id` int(11) NOT NULL DEFAULT '0', PRIMARY KEY (`article_id`,`category_id`), KEY `category_id` (`category_id`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8 |This is my query:

SELECT a.* FROM articles AS a, article_categories AS c WHERE a.id = c.article_id AND c.category_id = 78 AND a.comment_cnt > 0 AND a.deleted = 0 ORDER BY a.last_updated LIMIT 100, 20And an

EXPLAINfor it:*************************** 1. row *************************** id: 1 select_type: SIMPLE table: a type: index possible_keys: PRIMARY key: last_updated key_len: 9 ref: NULL rows: 2040 Extra: Using where *************************** 2. row *************************** id: 1 select_type: SIMPLE table: c type: eq_ref possible_keys: PRIMARY,fandom_id key: PRIMARY key_len: 8 ref: db.a.id,const rows: 1 Extra: Using indexIt uses a full index scan of

last_updatedon the first table for sorting, but does not use an y index for join (type: indexin explain). This is very bad for performance and kills the whole database server, since this is a very frequent query.I've tried reversing table order with

STRAIGHT_JOIN, but this givesfilesort, using_temporary, which is even worse.Is there any way to make mysql use index for join and for sorting at the same time?

=== update ===

I'm really desparate in this. Maybe some kind of denormalization can help here?

-

Jodrell almost 11 yearsyour shcema doesn't match your query, or plan. sqlfiddle.com/#!9/bccd7/2.

-

Jodrell almost 11 yearsstart by being specific sqlfiddle.com/#!9/bccd7/5

-

Jodrell almost 11 yearsmore possibly useful fiddle sqlfiddle.com/#!9/bccd7/7

-

-

siride almost 11 yearsIt's too bad MySQL doesn't have included, but non-indexed columns like SQL serve does.

-

Silver Light almost 11 yearsThanks, I've just tried experimanting with covering indexes and

force indexbut with no luck. As long as there is an "ORDER BY", mysql won't use index for any join, even if forced... -

Silver Light almost 11 yearsThis looks good! Now I have query type

ref, withrows: 409896(half of the table filtered out bydeletedfield). also, I've noticed, that the same result can be achieved if I ommitcomment_cntfrom an index (it is not used). Is there any way of includingidinto the index? -

DRapp almost 11 years@SilverLight, adding the ID to the index is not important if it is the primary table (as in this) JOINing to the second table (child), and you are already looking at every column in the table articles table, its there for the join. You COULD add it, but I would just add as the last column and keep the deleted status up front.

DRapp almost 11 years@SilverLight, adding the ID to the index is not important if it is the primary table (as in this) JOINing to the second table (child), and you are already looking at every column in the table articles table, its there for the join. You COULD add it, but I would just add as the last column and keep the deleted status up front. -

DRapp almost 11 years@SilverLight Another option... you have 409k articles not deleted. I would question how many articles are associated with category ID = 78. You might consider reversing the query to get category 78 FIRST, then join to articles (say only 38k articles are 78 regardless of deleted status, you are now 1/10th the record result set to further consider.

DRapp almost 11 years@SilverLight Another option... you have 409k articles not deleted. I would question how many articles are associated with category ID = 78. You might consider reversing the query to get category 78 FIRST, then join to articles (say only 38k articles are 78 regardless of deleted status, you are now 1/10th the record result set to further consider. -

DRapp almost 11 yearsSo, what is your actual solution for the question. Personally, I've always disliked the "foo" "bar" examples that people provide, but that's just me.

DRapp almost 11 yearsSo, what is your actual solution for the question. Personally, I've always disliked the "foo" "bar" examples that people provide, but that's just me. -

Denis de Bernardy almost 11 yearsIf you frequently run that particular query, I'd suggest that you add a

cat_78field as discussed in my conclusion, and index(cat_78, last_updated), and get rid of the join altogether. If (per your discussion with DRap) deleted is selective, index that too:(deleted, cat_78, last_updated). Either will give you better results that the doing the actual join for that particular query. -

Denis de Bernardy almost 11 yearsIn contrast, if you run similar queries with all categories, the best you can do is to either rely on the planner, or force the join order/the use of your choice of index, knowing that in some cases you'll be incorrect in doing so. (Lastly, and fwiw, Postgres' planner frequently yields more optimal plans, because it collects better stats...)

-

Denis de Bernardy almost 11 yearsOh, and... last point... I take it you've run analyze on your tables before asking, right? If not: dev.mysql.com/doc/refman/5.5/en/analyze-table.html

-

Silver Light almost 11 yearsThis looks very promising, I'm brenchmarking this now! If "limit" is out of range I get a warning

Impossible WHERE noticed after reading const tables, is it safe to ignore it? -

Quassnoi almost 11 years@SilverLight: sure, it just sees that you are trying to select beyond the limit

-

flaschenpost almost 11 yearsUsing the index itself is not always a win, the query should also get faster in query-time and less ressource-hungry for the database.

-

Silver Light almost 11 yearsThis solution was best, performance wise. Ofcourse, I had to go through pain of changing schema writing trigers, but now my query uses index, no temporary table, no filesort, avarage time is 0.00. Thank you.

-

Chinoto Vokro over 7 yearsFlexviews, similar to the SQL Server feature mentioned, might be of use: mariadb.com/kb/en/mariadb/flexviews

Chinoto Vokro over 7 yearsFlexviews, similar to the SQL Server feature mentioned, might be of use: mariadb.com/kb/en/mariadb/flexviews -

phil294 about 7 yearsthis is absolutely amazing and can boost up speed by multiples.