How to make sure only one instance of a bash script runs?

Solution 1

Almost like nsg's answer: use a lock directory. Directory creation is atomic under Linux, Unix, the *BSDs, and many other OSes.

    if mkdir -- "$LOCKDIR"
    then
        # Do important, exclusive stuff
        if rmdir -- "$LOCKDIR"
        then
            echo "Victory is mine"
        else
            echo "Could not remove lock dir" >&2
        fi
    else
        # Handle error condition
        ...
    fi

You can put the PID of the locking shell into a file in the lock directory for debugging purposes, but don't fall into the trap of thinking you can check that PID to see if the locking process is still running. Lots of race conditions lie down that path.
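As a rough sketch of the "PID for debugging only" idea: the function names and the default lock path below are hypothetical, chosen just for illustration. The point is that mkdir alone decides who holds the lock, while the recorded PID is only ever printed, never acted on.

```shell
#!/bin/sh
# Hypothetical lock path (an assumption); any fixed path on a local filesystem would do.
: "${LOCKDIR:=${TMPDIR:-/tmp}/myscript.lock}"

acquire_lock() {
    # mkdir is the only thing that decides who holds the lock.
    mkdir -- "$LOCKDIR" 2>/dev/null || return 1
    # The PID file is informational, for a human inspecting the lock directory.
    printf '%s\n' "$$" > "$LOCKDIR/pid"
}

release_lock() {
    rm -f -- "$LOCKDIR/pid"
    rmdir -- "$LOCKDIR"
}

report_holder() {
    # Log the recorded PID; do not use it to decide whether the lock is stale.
    holder=$(cat -- "$LOCKDIR/pid" 2>/dev/null)
    echo "Lock '$LOCKDIR' is held, recorded PID: ${holder:-unknown}" >&2
}

# Possible usage:
#   acquire_lock || { report_holder; exit 1; }
#   ... exclusive work ...
#   release_lock
```

Because the lock directory now contains a file, the file has to be removed before rmdir can succeed, which is why release_lock does both.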

Solution 2

To add to Bruce Ediger's answer, and inspired by this answer, you should also add more smarts to the cleanup to guard against script termination:

    # Remove the lock directory
    function cleanup {
        if rmdir -- "$LOCKDIR"; then
            echo "Finished"
        else
            echo "Failed to remove lock directory '$LOCKDIR'" >&2
            exit 1
        fi
    }

    if mkdir -- "$LOCKDIR"; then
        # Ensure that if we "grabbed a lock", we release it.
        # In bash, the EXIT trap also runs on SIGTERM and SIGINT (Ctrl-C).
        trap "cleanup" EXIT

        echo "Acquired lock, running"

        # Processing starts here
    else
        echo "Could not create lock directory '$LOCKDIR'" >&2
        exit 1
    fi
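The note about SIGTERM and SIGINT relies on bash running the EXIT trap when the script is ended by one of those signals. Other sh implementations do not necessarily do that, so a more portable sketch could turn the signals into a normal exit first. The run_locked helper and the default lock path are hypothetical names used only for illustration, not part of the answer above.

```shell
#!/bin/sh
# Hypothetical lock path (an assumption); override LOCKDIR in the environment if needed.
: "${LOCKDIR:=${TMPDIR:-/tmp}/myscript.lock}"

cleanup() {
    rmdir -- "$LOCKDIR" 2>/dev/null
}

# run_locked COMMAND [ARGS...]: run COMMAND while holding the lock directory.
run_locked() {
    if ! mkdir -- "$LOCKDIR" 2>/dev/null; then
        echo "Could not create lock directory '$LOCKDIR'" >&2
        return 1
    fi
    # Release the lock whenever the shell exits ...
    trap cleanup EXIT
    # ... and make INT/TERM go through a regular exit so the EXIT trap fires.
    trap 'exit 130' INT
    trap 'exit 143' TERM
    "$@"
}

# Possible usage:
#   run_locked ./do_the_work.sh || exit 1
```

The traps are only installed after mkdir succeeds, so an instance that failed to get the lock never removes a directory it does not own.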

Solution 3

Another way to make sure only a single instance of a bash script runs:

    #! /bin/bash -

    # Check if another instance of script is running
    if pidof -o %PPID -x -- "$0" >/dev/null; then
        printf >&2 '%s\n' "ERROR: Script $0 already running"
        exit 1
    fi
    ...

pidof -o %PPID -x -- "$0" prints the PID of the existing script¹ if it's already running, or exits with status 1 if no other instance is running.

¹ Well, any process with the same name...
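To make the exit-status behaviour easier to reuse, the check could be wrapped in a small function. This is only a sketch: other_instances is a hypothetical name, and it inherits the caveat from the footnote that matching is by process name.

```shell
#!/bin/sh
# other_instances NAME: print the PIDs of other processes called NAME.
# Returns pidof's own status: 0 if at least one was found, non-zero otherwise.
other_instances() {
    # -x also matches scripts run through an interpreter;
    # -o %PPID omits the shell that is calling pidof (this script).
    pidof -o %PPID -x -- "$1"
}

# Possible usage:
#   if other_instances "$0" >/dev/null; then
#       printf >&2 '%s\n' "ERROR: Script $0 already running"
#       exit 1
#   fi
```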

Solution 4

This may be too simplistic, please correct me if I'm wrong. Isn't a simple ps enough?

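    #!/bin/bash
    me="$(basename "$0")"
    # Count processes with our name, leaving out our own PID (-w: whole-word match).
    # The command substitution's own subshell is typically counted too, hence "1" below.
    running=$(ps h -C "$me" | grep -wv "$$" | wc -l)
    # Compare numerically; '>' inside [[ ]] compares strings
    [[ $running -gt 1 ]] && exit

    # do stuff below this comment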

Solution 5

Although you've asked for a solution without additional tools, this is my favourite way using flock:

    #!/bin/sh
    [ "${FLOCKER}" != "$0" ] && exec env FLOCKER="$0" flock -en "$0" "$0" "$@" || :
    echo "servus!"
    sleep 10

This comes from the examples section of man flock, which further explains:

This is useful boilerplate code for shell scripts. Put it at the top of the shell script you want to lock and it'll automatically lock itself on the first run. If the env var $FLOCKER is not set to the shell script that is being run, then execute flock and grab an exclusive non-blocking lock (using the script itself as the lock file) before re-execing itself with the right arguments. It also sets the FLOCKER env var to the right value so it doesn't run again.
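The one-liner is dense, so here is the same logic spelled out with an if statement. Wrapping it in a function called lock_self is an assumption made for illustration (the man page puts the line directly at the top of the script); the flock and env invocation is unchanged.

```shell
#!/bin/sh
# lock_self "$@": re-execute this script under flock unless that already happened.
lock_self() {
    if [ "${FLOCKER:-}" != "$0" ]; then
        # First pass: FLOCKER is not set to this script, so replace the shell with
        # flock, which takes an exclusive (-e), non-blocking (-n) lock on the script
        # file itself and then runs the script again with the same arguments.
        exec env FLOCKER="$0" flock -en "$0" "$0" "$@"
    fi
    # Second pass: FLOCKER matches $0, the wrapping flock holds the lock; carry on.
}

# Possible usage, as the first command of the script:
#   lock_self "$@"
```

If another instance already holds the lock, flock gives up immediately because of -n and the script body never runs.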

Points to consider:

- Requires flock; the example script terminates with an error if it can't be found

- Needs no extra lock file

- May not work if the script is on NFS (see https://serverfault.com/questions/66919/file-locks-on-an-nfs)

Update: If your script may get called through different paths (e.g. through its absolute or relative path), in other words if $0 differs between parallel invocations, then you need to use realpath as well. Note that $0 must not be single-quoted inside the command substitution, otherwise realpath receives the literal string $0:

    [ "${FLOCKER}" != "$(realpath "$0")" ] && exec env FLOCKER="$(realpath "$0")" flock -en "$0" "$0" "$@" || :

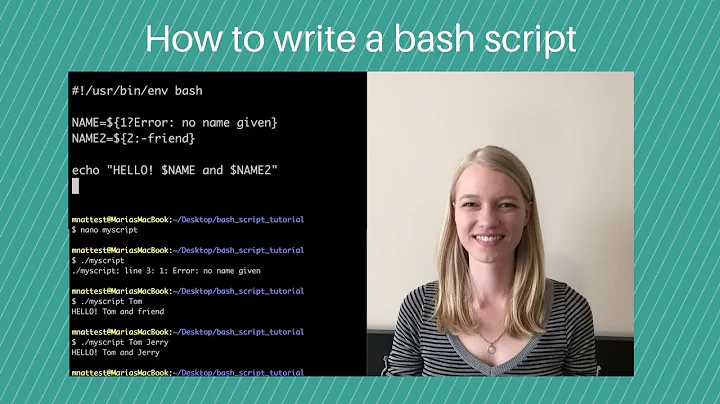

Related videos on Youtube

Comments

-

Tobias Kienzler over 1 year

A solution that does not require additional tools would be preferred.

-

Tobias Kienzler over 11 years

(I think you forgot to create the lock file) What about race conditions?

-

Tobias Kienzler over 11 years

Thanks for the link, but could you include the essential parts in your answer? It's common policy at SE to prevent link rot... But something like [[(lsof $0 | wc -l) > 2]] && exit might actually be enough, or is this also prone to race conditions?

-

nsg over 11 years

Oops :) Yes, race conditions are a problem in my example. I usually write hourly or daily cron jobs, and race conditions are rare.

-

Tobias Kienzler over 11 years

They shouldn't be relevant in my case either, but it's something one should keep in mind. Maybe using lsof $0 isn't bad, either?

-

manatwork over 11 years

You can diminish the race condition by writing your $$ in the lock file. Then sleep for a short interval and read it back. If the PID is still yours, you successfully acquired the lock. Needs absolutely no additional tools.

-

nsg over 11 years

I have never used lsof for this purpose, but I think it should work. Note that lsof is really slow on my system (1-2 sec), so there is most likely a lot of time for race conditions.

-

nsg over 11 years

manatwork: nice idea, I will update my answer.

-

Tobias Kienzler over 11 years

Here's a lockfile answer at SO. Maybe in combination with the mkdir answer, race conditions and staleness checks can be included...

-

user1146332 over 11 years

You are right, the essential part of my answer was missing, and only posting links is pretty lame. I added my own suggestion to the answer.

-

Tobias Kienzler over 11 years

I'd consider using the stored PID to check whether the locking instance is still alive. However, here's a claim that mkdir is not atomic on NFS (which is not the case for me, but I guess one should mention that, if true)

-

Praveen Felix over 11 years

Does it make sense to add a trap for SIGINT, SIGTERM etc. to make sure your lock file gets cleaned up in case somebody kills the current run (explicitly with Ctrl-C or during shutdown)?

-

Admin over 11 years

Yes, by all means use the stored PID to see if the locking process still executes, but don't attempt to do anything other than log a message. The work of checking the stored pid, creating a new PID file, etc, leaves a big window for races.

-

nsg over 11 years

Axel: Sure, sounds like a good idea. I also recommend Bruce Ediger's mkdir based answer.

-

Tobias Kienzler over 11 years

Ok, as Ihunath stated, the lockdir would most likely be in /tmp which is usually not NFS shared, so that should be fine.

-

chepner over 11 years

I would use rm -rf to remove the lock directory. rmdir will fail if someone (not necessarily you) managed to add a file to the directory.

-

dhag over 6 years

> A solution that does not require additional tools would be preferred.

-

Naktibalda about 6 years

I've used this condition for a week, and on 2 occasions it didn't prevent a new process from starting. I figured out what the problem is: the new PID is a substring of the old one and gets hidden by grep -v $$. Real examples: old - 14532, new - 1453; old - 28858, new - 858.

-

Naktibalda about 6 years

I fixed it by changing grep -v $$ to grep -v "^${$} "

-

terdon about 6 years

@Naktibalda good catch, thanks! You could also fix it with grep -wv "^$$" (see edit).

-

Kusalananda about 6 years

Alternatively, if ! mkdir "$LOCKDIR"; then handle failure to lock and exit; fi trap and do processing after if-statement.

-

Naktibalda about 6 years

Thanks for that update. My pattern occasionally failed because shorter pids were left padded with spaces.

-

Pro Backup over 5 years

For busybox reduced ash shell, another ps | awk | grep solution is available at stackoverflow.com/questions/52141287/…

-

Scott - Слава Україні over 5 years

You should always quote all shell variable references unless you have a good reason not to, and you’re sure you know what you’re doing. So you should be doing exec 6< "$SCRIPT".

-

John Doe over 5 years

@Scott I've changed the code according to your suggestions. Many thanks.

-

Scott - Слава Україні almost 5 years

Please explain exactly how hardcoding the checksum is a good idea.

-

arputra almost 5 years

It's not hardcoding a checksum; it only creates an identity key for your script. When another instance runs, it checks the other shell script processes and cats the file first; if your identity key is in that file, it means your instance is already running.

-

Scott - Слава Україні almost 5 years

OK; please edit your answer to explain that. And, in the future, please don’t post multiple 30-line long blocks of code that look like they’re (almost) identical without saying and explaining how they’re different. And don’t say things like “you can hardcoded [sic] cksum inside your script”, and don’t continue to use variable names mysum and fsum, when you’re not talking about a checksum any more.

-

Tobias Kienzler almost 5 years

Looks interesting, thanks! And welcome to unix.stackexchange :)

-

flagg19 over 4 years

With this solution, if two instances of the same script are started at the same time, there's a chance that they will "see" each other and both will terminate. It may not be a problem, but it also may be, just be aware of it.

-

EdwardTeach almost 4 years

I suggest using lower-case variable names here, e.g. script=.... It reduces the risk of colliding with built-in shell variables such as $PATH. Which I've never done... (cough)

-

John Doe almost 4 years

@EdwardTeach Good point. I've changed it

-

harleygolfguy over 3 years

I prefer the simplicity of this solution. “Simplicity is the ultimate sophistication.” -- Leonardo da Vinci

-

Shah Zain over 3 years

It's worth pointing out that the trap definition must remain at the global scope of the script. Moving that mkdir block inside a function will result in cleanup: command not found. (I learned this the hard way)

-

h q about 3 years

This is, by far, the most elegant. Thank you.

-

Admin almost 2 years

That doesn't work if the script is run as ./thatscript the first time and /path/to/thatscript the second time. It's generally a bad idea to rely on process names as those can be arbitrarily set to any value by anyone.

-

Admin almost 2 years

@StéphaneChazelas Regarding process name changing - noted! Could be avoided by using basename $0 instead of $0. Still can never be safe, as you mentioned, but at least that function can be called from different paths.

-

Admin almost 2 years

@lonix rather "$(basename -- "$0")" (assuming $0 doesn't end in newline characters) or "${0##*/}" (or $0:t in zsh). Remember expansions must be quoted in sh/bash.
