How to make wget download recursive combining --accept with --exclude-directories?

Rather than trying to do this with wget, I'd suggest a tool better suited to downloading complex "sets" of files with filters.

With httrack you can either download entire directories of files (essentially mirroring everything from a site) or give it a filter with specific file extensions, for example to download only .pdf files.

You can read more about httrack's filter capability, which is what you'd need if you were only interested in downloading files named in a specific way.

Here are some examples of the wildcard capability:

- `*[file]` or `*[name]` - any filename or name, e.g. not /, ? and ; characters
- `*[path]` - any path (and filename), e.g. not ? and ; characters
- `*[a,z,e,r,t,y]` - any letters among a,z,e,r,t,y
- `*[a-z]` - any letters
- `*[0-9,a,z,e,r,t,y]` - any characters among 0..9 and a,z,e,r,t,y

Example

$ httrack http://url.com/files/ "-*" "+1_[a-z].doc" -O /dir/to/output

The switches are as follows:

- `-*` - remove everything from the list of things to download
- `+1_[a-z].doc` - download files named 1_a.doc, 1_b.doc, etc.
- `-O /dir/to/output` - write results here
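If you would rather stay with wget, one thing worth trying is to give `--exclude-directories` the directory's full path from the server root instead of its bare name. The wget manual's own examples for `-X` use absolute paths (such as `-X /cgi-bin` or `-I/pub -X/pub/worthless`), so a bare `tmp` may simply never match `/pub/somedir/tmp`. This is a sketch under that assumption, not a verified fix; the `<host>` placeholder and the `/pub/somedir/` layout are taken from the question. The command is built into the positional parameters and printed first, so it can be checked before anything is downloaded.

```shell
#!/bin/sh
# Sketch only: exclude tmp by its full server path rather than its bare name.
# <host> and /pub/somedir/ are the placeholders used in the question.
BASE_URL='http://<host>/pub/somedir/'
EXCLUDE_DIR='/pub/somedir/tmp'   # assumption: path as seen from the server root

# Build the command as positional parameters so it can be inspected first.
set -- wget -nH --cut-dirs=2 -r --no-parent \
    --accept=txt,jpg \
    --exclude-directories="$EXCLUDE_DIR" \
    "$BASE_URL"

# Dry run: print the command. To actually download, run "$@" instead.
printf '%s\n' "$*"
```

If directories named `tmp` can appear at several levels, a wildcard entry may be needed as well, since the manual says the list elements may contain wildcards; the exact pattern is something to test against your own server.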

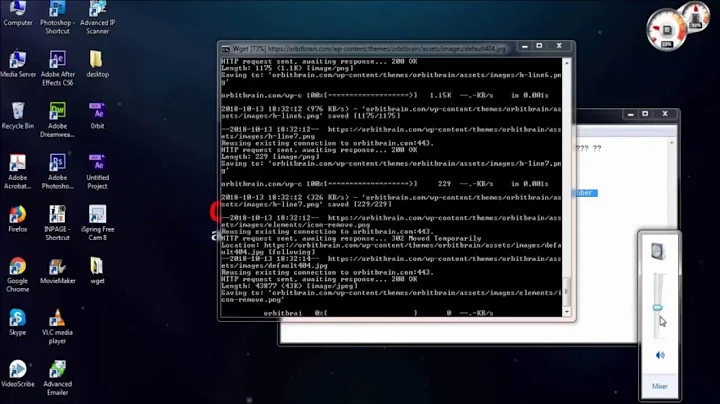


Twisted89

I'm Elias Dorneles: programmer, musician and addicted to learning things. Brazilian living in France. In 2017 I attended Recurse Center, a retreat for programmers in NYC. I contribute to BeeWare. I like open source software, Python, Bash, Javascript, GNU/Linux/Ubuntu, Wikipedia, fingerstyle guitar and reading everything that I can.

Updated on September 18, 2022

Comments

- Twisted89 4 months

I'm trying to download some directories from an Apache server, but I need to ignore some directories that have huge files I don't care about.

The dir structure in the server is somewhat like this (simplified):

    somedir/
    ├── atxt.txt
    ├── big_file.pdf
    ├── image.jpg
    └── tmp
        └── tempfile.txt

So, I want to get all the `.txt` and `.jpg` files, but I DON'T want the `.pdf` files nor anything that is in a `tmp` directory.

I've tried using `--exclude-directories` together with `--accept` and then with `--reject`, but in both attempts it keeps downloading the `tmp` dir and its contents.

These are the commands I've tried:

    # with --reject
    wget -nH --cut-dirs=2 -r --reject=pdf --exclude-directories=tmp \
        --no-parent http://<host>/pub/somedir/

    # with --accept
    wget -nH --cut-dirs=2 -r --accept=txt,jpg --exclude-directories=tmp \
        --no-parent http://<host>/pub/somedir/

Is there a way to do this?

How exactly is `--exclude-directories` supposed to work?

- S edwards almost 9 years: httrack is definitely a better way.

- Admin almost 9 years: `httrack -W` always recommended.

- slm almost 9 years: @elias - in the man page it says it takes wildcards, so perhaps you need to define the "directories" using something like `*/tmp/*`.

- Stéphane Gourichon about 8 years: httrack does not support custom headers (needed for authentication). Wget does.

- MattBianco about 8 years: There is also cURL (curl.haxx.se), which is very powerful.