How to migrate to LVM?

You're only using around 7.4GB on / and have about 79GB free in the datvg volume group, so, yes, you can create a new LV for / (and another one for /var) and copy the files from / and /var to them. I recommend using rsync for the copies.
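Here is a minimal sketch of that first step. It assumes the LVs are named `root` and `var`, that each is 20G (plenty for the ~7.4GB in use, and well within the 79GB free), and that both use ext4. All of these are hypothetical choices. `run` and `DRY_RUN` are helpers made up for this sketch. By default the script only prints the commands.

```bash
#!/usr/bin/env bash
# Sketch: create LVs for the new / and /var in datvg, format them and mount
# them at /target and /target/var.
# HYPOTHETICAL choices: LV names "root"/"var", 20G each, ext4.
set -euo pipefail

# Print each command; only execute it when DRY_RUN=0 (default: print only).
run() {
    echo "+ $*"
    if [[ "${DRY_RUN:-1}" == 0 ]]; then "$@"; fi
}

create_target_lvs() {
    local vg=${1:-datvg}
    run lvcreate -L 20G -n root "$vg"   # new / (about 7.4GB in use today)
    run lvcreate -L 20G -n var "$vg"    # new /var
    run mkfs.ext4 "/dev/$vg/root"
    run mkfs.ext4 "/dev/$vg/var"
    run mkdir -p /target
    run mount "/dev/$vg/root" /target
    run mkdir -p /target/var            # mount point inside the new /
    run mount "/dev/$vg/var" /target/var
}
```

To use it, add `create_target_lvs` as the last line and run the script once to review the printed commands. Then run it again as root with `DRY_RUN=0`.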

e.g. with the new / and /var mounted as /target and /target/var:

    rsync --archive --sparse --one-file-system --delete-during --delete-excluded \
        --force --numeric-ids --hard-links / /var /target/

Optionally, add these options too:

    --human-readable --human-readable --verbose --stats --progress
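You'll be rerunning that copy, so it may help to keep the options in one place. This sketch uses exactly the options above. The function name and the `VERBOSE`/`DRY_RUN` switches are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: the rsync above as a rerunnable function.
# VERBOSE=1 adds the optional progress/summary options.
set -euo pipefail

# Print each command; only execute it when DRY_RUN=0 (default: print only).
run() {
    echo "+ $*"
    if [[ "${DRY_RUN:-1}" == 0 ]]; then "$@"; fi
}

sync_to_target() {
    local opts=(--archive --sparse --one-file-system --delete-during
                --delete-excluded --force --numeric-ids --hard-links)
    if [[ "${VERBOSE:-0}" == 1 ]]; then
        opts+=(--human-readable --human-readable --verbose --stats --progress)
    fi
    # --one-file-system keeps rsync from descending into other mounted
    # filesystems (e.g. /proc, /sys, /boot, /var/foobar*); "/var" without a
    # trailing slash ends up as /target/var.
    run rsync "${opts[@]}" / /var /target/
}
```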

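You can repeat this as often as you like until you have time to reboot into single-user mode and complete the procedure, which is:

- reboot into single-user mode
- remount / and /boot read-write if they're not already RW (e.g. `mount -o remount,rw /`)
- mount /target and /target/var as above
- run one final rsync as above
- `for i in proc dev sys dev/pts run boot; do mount -o bind /$i /target/$i; done` (leave out `run` if there is no /run, as on RHEL 6)
- `chroot /target`
- edit /etc/fstab and change the device/UUID/label for / and /var (this machine has no /var entry yet, so add one before the /var/foobar* lines)
- run `update-grub` (RHEL 6 uses GRUB legacy and has no `update-grub`; instead, point `root=` on the kernel line in /boot/grub/grub.conf at the new root LV and remove `rd_NO_LVM` if it's there)
- `exit`
- `for i in run proc sys dev/pts dev boot var /; do umount /target/$i; done` (again without `run` if you didn't mount it)
- reboot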
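The fstab edit is the easiest step to get wrong, so here is a sketch of it, to be run inside the chroot. It assumes the hypothetical devices `/dev/mapper/datvg-root` and `/dev/mapper/datvg-var`, both ext4. `mount -a` mounts filesystems in fstab order, so the new /var line goes directly after /. Otherwise it would be mounted over /var/foobar* and hide them.

```bash
#!/usr/bin/env bash
# Sketch: point / at the new root LV and add (or update) a /var entry.
# HYPOTHETICAL devices: /dev/mapper/datvg-root and /dev/mapper/datvg-var (ext4).
set -euo pipefail

rewrite_fstab() {
    local fstab=$1 root_dev=$2 var_dev=$3 tmp
    tmp=$(mktemp)
    # The file is read twice: pass 1 only checks for an existing /var entry.
    awk -v root="$root_dev" -v var="$var_dev" '
        NR == FNR { if ($1 !~ /^#/ && $2 == "/var") has_var = 1; next }
        /^[ \t]*#/ || NF == 0 { print; next }   # keep comments and blank lines
        $2 == "/var" { $1 = var }                # existing /var: swap device
        $2 == "/" {
            $1 = root; print                     # new device for /
            # No /var entry yet: add one right after /, so that it is
            # mounted before /var/foobar* (mount -a follows fstab order).
            if (!has_var) print var, "/var", "ext4", "defaults", 1, 2
            next
        }
        { print }
    ' "$fstab" "$fstab" > "$tmp"
    cp -p "$fstab" "$fstab.bak"   # keep a backup of the original
    cat "$tmp" > "$fstab"         # rewrite in place, keeping owner and mode
    rm -f "$tmp"
}
```

For example, run `rewrite_fstab /etc/fstab /dev/mapper/datvg-root /dev/mapper/datvg-var` inside the chroot, then check the result by eye before rebooting. The original stays in /etc/fstab.bak.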

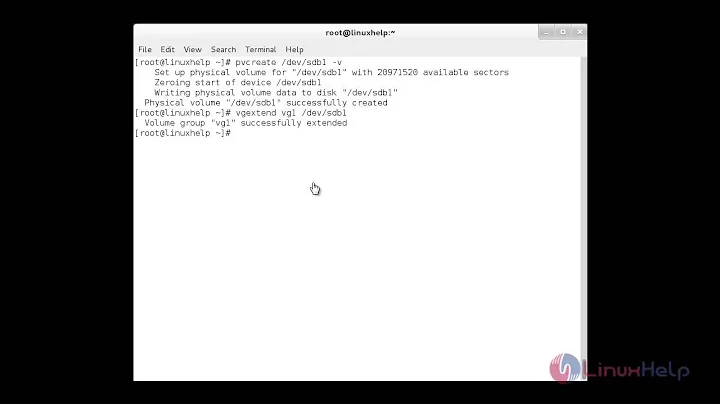

If everything's working fine after the reboot, you can add /dev/sda2 (the old root partition) to the LVM VG datvg.
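Adding the old root partition means making it a physical volume and extending the VG with it. Here is a sketch. The mounted-partition check is an extra safety net added here, and `run`/`DRY_RUN` are hypothetical helpers as before. pvcreate destroys the old filesystem, so only do this once the machine boots from the new LV.

```bash
#!/usr/bin/env bash
# Sketch: turn the old root partition into a PV and add it to datvg.
set -euo pipefail

# Print each command; only execute it when DRY_RUN=0 (default: print only).
run() {
    echo "+ $*"
    if [[ "${DRY_RUN:-1}" == 0 ]]; then "$@"; fi
}

add_old_root_to_vg() {
    local dev=${1:-/dev/sda2} vg=${2:-datvg}
    # Safety net: refuse to touch a partition that is still mounted.
    if grep -q "^$dev " /proc/mounts 2>/dev/null; then
        echo "$dev is still mounted, refusing" >&2
        return 1
    fi
    run pvcreate "$dev"          # wipes the old filesystem on $dev
    run vgextend "$vg" "$dev"
}
```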

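If you wanted to, you could also create an LV for /boot (mounted as /target/boot) and rsync it along with / and /var. In that case, remove boot from the `mount -o bind` for loop, because you don't want the original /boot bind-mounted over /target/boot. This needs a boot loader that can read LVM, such as GRUB 2. The GRUB legacy shipped with RHEL 6 can't, so there /boot has to stay on /dev/sda1.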

Then create an LV for swap too, and you can add the entire /dev/sda to the LVM VG (delete all partitions on sda, create /dev/sda1 and add that).
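Here is a sketch of the swap part. It assumes a hypothetical 4G LV named `swap` (the current swap partition, /dev/sda3, is 3.8G). It enables the new swap before releasing the old partition, then prints the fstab line to replace the old swap entry with.

```bash
#!/usr/bin/env bash
# Sketch: move swap from /dev/sda3 to a new LV. Name and size are HYPOTHETICAL.
set -euo pipefail

# Print each command; only execute it when DRY_RUN=0 (default: print only).
run() {
    echo "+ $*"
    if [[ "${DRY_RUN:-1}" == 0 ]]; then "$@"; fi
}

create_swap_lv() {
    local vg=${1:-datvg} size=${2:-4G} old=${3:-/dev/sda3}
    run lvcreate -L "$size" -n swap "$vg"
    run mkswap "/dev/$vg/swap"
    run swapon "/dev/$vg/swap"   # enable the new swap first...
    run swapoff "$old"           # ...then release the old partition
    # fstab line to replace the old swap entry with:
    echo "/dev/mapper/$vg-swap swap swap defaults 0 0"
}
```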

An Alternative: Clonezilla is great!

BTW, another way to do this is to boot from a Clonezilla CD or USB stick, create the LVs, and use Clonezilla to clone / and /boot to them. It's been a while since I last did this, so I can't remember exactly. You'll probably still have to mount /target (and /target/var, /target/boot), do the bind mounts, edit fstab and run update-grub.

In fact, the for loops above are copied, with slight modifications, from aliases created by the customisation script for my TFTP-bootable Clonezilla image. So it's a pretty good bet that they're needed.

It boots up with aliases like this available to the root shell:

alias prepare-chroot-target='for i in proc dev sys dev/pts ; do mount -o bind /$i /target/$i ; done'
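That alias sets up the mounts but never undoes them. Here is a sketch of the same thing as a pair of shell functions; the function names and the `run`/`DRY_RUN` helpers are hypothetical. The cleanup unmounts in reverse order, because dev/pts is mounted on top of dev and has to go first.

```bash
#!/usr/bin/env bash
# Sketch: bind-mount / unmount the pseudo-filesystems for a chroot.
set -euo pipefail

# Print each command; only execute it when DRY_RUN=0 (default: print only).
run() {
    echo "+ $*"
    if [[ "${DRY_RUN:-1}" == 0 ]]; then "$@"; fi
}

# Same list and order as the alias.
prepare_chroot_target() {
    local target=${1:-/target} d
    for d in proc dev sys dev/pts; do
        run mount -o bind "/$d" "$target/$d"
    done
}

# Reverse order: dev/pts sits on top of dev, so it is unmounted first.
cleanup_chroot_target() {
    local target=${1:-/target} d
    for d in dev/pts sys dev proc; do
        run umount "$target/$d"
    done
}
```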

Optional Extra Reading (BTRFS Propaganda)

If you don't need LVM for creating block devices for VMs (partitions and most other block devices are faster than .qcow2 or raw image files for VMs), I recommend using btrfs instead of LVM. It's a lot more flexible (and, IMO, easier to use) than LVM; e.g. it's trivial to grow (or shrink) the allocations for sub-volumes.

btrfs has the very useful feature of being able to perform an in-place conversion from ext3 or ext4 to btrfs.

Unfortunately, the btrfs wiki page about that conversion now has a warning:

Warning: As of 4.0 kernels this feature is not often used or well tested anymore, and there have been some reports that the conversion doesn't work reliably. Feel free to try it out, but make sure you have backups.

You could do an in-place conversion of /, migrate your /var/foobar* directories to btrfs, and then add half of sdb to btrfs / (as a RAID-1 mirror) and use the other half for extra storage (also btrfs) or for LVM. It's a shame you don't have a pair of same-sized disks.
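Adding a second device to an existing btrfs filesystem and converting it to RAID-1 takes two commands. Here is a sketch. The device passed in (e.g. a new partition covering half of sdb) is hypothetical, and so are the `run`/`DRY_RUN` helpers.

```bash
#!/usr/bin/env bash
# Sketch: add a device to a mounted btrfs filesystem and mirror it (RAID-1).
set -euo pipefail

# Print each command; only execute it when DRY_RUN=0 (default: print only).
run() {
    echo "+ $*"
    if [[ "${DRY_RUN:-1}" == 0 ]]; then "$@"; fi
}

mirror_btrfs() {
    local new_dev=$1 mnt=${2:-/}
    run btrfs device add "$new_dev" "$mnt"
    # Rewrite existing data (-d) and metadata (-m) chunks as RAID-1.
    run btrfs balance start -dconvert=raid1 -mconvert=raid1 "$mnt"
}
```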

If you choose not to do the in-place conversion (probably wise), the procedure would be similar to the rsync method above. The difference is that, instead of creating LVs for /target/{,boot,var}, you create a btrfs volume for /target and sub-volumes for /target/boot and /target/var. You'd need a separate swap partition. Alternatively, forget about disk swap and use zram, the mainline kernel's compressed-RAM block device, or add more RAM so you don't swap, or both.
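Here is a sketch of creating that btrfs target. The device is a hypothetical argument, and so are the `run`/`DRY_RUN` helpers. As with /boot on an LV, a /boot sub-volume needs GRUB 2, because GRUB legacy can't read btrfs.

```bash
#!/usr/bin/env bash
# Sketch: a btrfs volume at /target with sub-volumes for boot and var.
set -euo pipefail

# Print each command; only execute it when DRY_RUN=0 (default: print only).
run() {
    echo "+ $*"
    if [[ "${DRY_RUN:-1}" == 0 ]]; then "$@"; fi
}

create_btrfs_target() {
    local dev=$1 target=${2:-/target}
    run mkfs.btrfs "$dev"
    run mkdir -p "$target"
    run mount "$dev" "$target"
    # Sub-volumes created inside the mounted volume appear at these paths
    # directly, so the rsync can copy straight into them.
    run btrfs subvolume create "$target/boot"
    run btrfs subvolume create "$target/var"
}
```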

But it would be easier to back up your data and config files, boot your distro's installer from CD or USB, reinstall from scratch (after carefully planning the partition layout), and then restore selected parts of your backup (/home, /usr/local, some config files in /etc).

More Optional Extra Reading (ZFS Propaganda)

Given your mismatched drives, btrfs is probably a better choice for you but I find it impossible to mention btrfs without also mentioning ZFS:

If you need to give block-devices to VMs AND want the flexibility of something like btrfs AND don't mind installing a non-mainline kernel module, use ZFS instead of btrfs.

It does almost everything that btrfs does, plus a whole lot more. The exceptions are rebalancing, unfortunately, and the fact that, at the time of writing, you can only add disks to a pool, never remove them or change its layout. You can also create ZVOLs (block devices using storage from your pool) as well as sub-volumes (datasets, in ZFS terms).
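For example, creating a ZVOL for a VM disk is one command. On Linux, the ZVOL then appears under /dev/zvol/. Here is a sketch; the pool and volume names are hypothetical arguments, as are the `run`/`DRY_RUN` helpers.

```bash
#!/usr/bin/env bash
# Sketch: create a ZVOL to use as a VM disk.
set -euo pipefail

# Print each command; only execute it when DRY_RUN=0 (default: print only).
run() {
    echo "+ $*"
    if [[ "${DRY_RUN:-1}" == 0 ]]; then "$@"; fi
}

create_vm_zvol() {
    local pool=$1 name=$2 size=$3
    run zfs create -V "$size" "$pool/$name"
    # Block device path to hand to the VM.
    echo "/dev/zvol/$pool/$name"
}
```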

Installing ZFS on Debian, Ubuntu and several other distros is easy these days. The distros provide packages, including spl-dkms and zfs-dkms to auto-build the modules for your kernels. Building the DKMS modules takes an annoyingly long time, but otherwise it's as easy and straightforward as installing any other set of packages.

Unfortunately, converting to ZFS wouldn't be as easy as the procedure above. Converting the rootfs to ZFS is a moderately difficult procedure in itself. As with btrfs, it would be easier to back up your data and config files etc. and rebuild the machine from scratch, using a distro with good ZFS support (Ubuntu is probably the best choice for ZFS on Linux at the moment).

Worse, a ZFS vdev is only as large as the smallest partition or disk in it, so you could only usefully add sda and approximately half of sdb to the zpool. The rest of sdb could be btrfs, ext4, xfs, or even LVM (which is kind of pointless, since you can make ZVOLs with ZFS).
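The arithmetic for a two-way mirror is simple. Usable space equals the smaller device, and whatever is left on the larger disk can go elsewhere. Here is a sketch using the disk sizes from lsblk. The function name is hypothetical, and it ignores the space sda already needs for /boot and swap.

```bash
#!/usr/bin/env bash
# Sketch: capacity of a two-way mirror vdev built from unequal devices (GB).
set -euo pipefail

mirror_plan() {
    local a=$1 b=$2 small big
    if (( a <= b )); then small=$a big=$b; else small=$b big=$a; fi
    echo "usable in the mirror vdev: ${small}G"
    echo "left over on the larger device: $(( big - small ))G"
}
```

With this machine's disks, `mirror_plan 128 256` reports 128G usable and 128G left over on sdb.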

I use ZFS on Debian, and am very pleased that ZFS has finally made it into the distribution itself so I can use native Debian packages. I've been using it for several years now (since 2011, at least). Highly recommended. I just wish it had btrfs's rebalancing feature. That would be very useful in itself AND open up the possibility of removing a vdev from a pool (impossible with ZFS at the time of writing), or maybe even converting from RAID-1 to RAIDZ-1.

btrfs's ability to do online conversions (e.g. from RAID-1 to RAID-5, RAID-6 or RAID-10) is kind of cool too, but not something I'd be likely to use. I've pretty much given up on all forms of RAID-5/RAID-6, including ZFS's RAID-Z; IMO, the performance cost AND the scrub or resync time just aren't worth it. I prefer RAID-1 or RAID-10. I can add as many RAID-1 vdevs to a pool as I like, whenever I like. That effectively converts RAID-1 to RAID-10, or just adds more mirrored pairs to an existing RAID-10.
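Growing a pool of mirrors that way is just `zpool add` with another mirror vdev. Here is a sketch; the pool and device names are hypothetical arguments, as are the `run`/`DRY_RUN` helpers.

```bash
#!/usr/bin/env bash
# Sketch: a pool of mirrored pairs, grown one pair at a time.
set -euo pipefail

# Print each command; only execute it when DRY_RUN=0 (default: print only).
run() {
    echo "+ $*"
    if [[ "${DRY_RUN:-1}" == 0 ]]; then "$@"; fi
}

# Start the pool with one mirrored pair (RAID-1).
create_mirror_pool() {
    local pool=$1 a=$2 b=$3
    run zpool create "$pool" mirror "$a" "$b"
}

# Add another mirrored pair; ZFS stripes across the vdevs (RAID-10 style).
add_mirror_pair() {
    local pool=$1 a=$2 b=$3
    run zpool add "$pool" mirror "$a" "$b"
}
```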

I make extensive use of ZVOLs for VMs, anyway, so btrfs isn't an option for me.


Peter84753

Updated on September 18, 2022

Comments

Peter84753:

There is a machine:

    [root@SERVER ~]# df -mP
    Filesystem            1048576-blocks   Used Available Capacity Mounted on
    /dev/sda2                     124685   7462    110897       7% /
    tmpfs                          12016      0     12016       0% /dev/shm
    /dev/sda1                        485    102       358      23% /boot
    /dev/sdb1                      32131     48     30444       1% /var/foobar1
    /dev/sdb2                      16009    420     14770       3% /var/foobar2
    /dev/sdb3                        988      6       930       1% /var/foobar3
    /dev/sdb5                        988      2       935       1% /var/foobar4
    /dev/sdb6                        988     17       919       2% /var/foobar5
    /dev/mapper/datvg-FOO         125864  81801     37663      69% /var/FOOBAR6
    1.2.3.4:/var/FOOBAR7          193524 128878     54816      71% /var/FOOBAR7
    [root@SERVER ~]# vgs
      VG    #PV #LV #SN Attr   VSize   VFree
      datvg   1   1   0 wz--n- 204.94g 79.94g
    [root@SERVER ~]# lvs
      LV   VG    Attr       LSize   Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
      FOO  datvg -wi-ao---- 125.00g
    [root@SERVER ~]# lsblk
    NAME                 MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
    sr0                   11:0    1  1024M  0 rom
    sda                    8:0    0   128G  0 disk
    ├─sda1                 8:1    0   500M  0 part /boot
    ├─sda2                 8:2    0 123.7G  0 part /
    └─sda3                 8:3    0   3.8G  0 part [SWAP]
    sdb                    8:16   0   256G  0 disk
    ├─sdb1                 8:17   0    32G  0 part /var/foobar1
    ├─sdb2                 8:18   0    16G  0 part /var/foobar2
    ├─sdb3                 8:19   0     1G  0 part /var/foobar3
    ├─sdb4                 8:20   0     1K  0 part
    ├─sdb5                 8:21   0     1G  0 part /var/foobar4
    ├─sdb6                 8:22   0     1G  0 part /var/foobar5
    └─sdb7                 8:23   0   205G  0 part
      └─datvg-FOO (dm-0) 253:0    0   125G  0 lvm  /var/FOOBAR6
    [root@SERVER ~]#
    [root@SERVER ~]# grep ^Red /etc/issue
    Red Hat Enterprise Linux Server release 6.8 (Santiago)
    [root@SERVER ~]#

Question: how can we migrate it to use LVM for /, /var, etc. too? Is it just a matter of creating the same "partitions" as LVs and copying the files from the old filesystems? How will the machine boot? /boot could stay on /dev/sda1.

Alessio: BTW, two `--human-readable` options isn't a mistake. It makes the output even more human-readable (for even humaner humans, I suppose :)

Alessio: The `--numeric-ids` option isn't absolutely essential. I use it out of habit because I sometimes have to rsync partitions like this to remote systems on my LAN. I don't use LDAP or NIS etc., so the UIDs and GIDs may differ between machines.