How to move or copy a logical volume (LV) to another volume group (VG)?

Solution 1

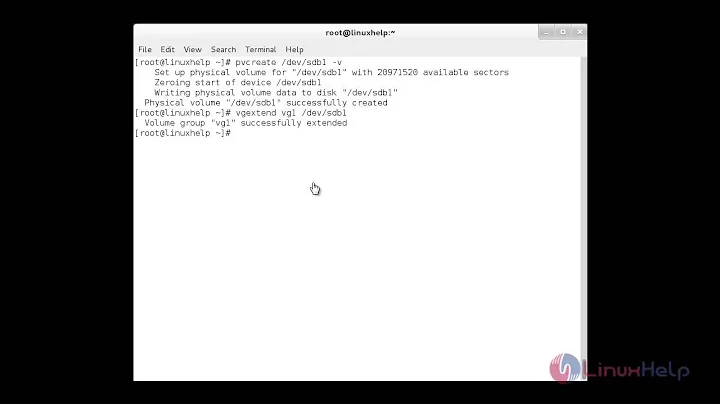

vgmerge lets you merge two VGs. You can then use pvmove to move data between the physical volumes of the merged VG, and vgsplit if you want to go back to multiple VGs.

Solution 2

There is no reason to copy it to a .img file first. Just create the snapshot and the new LV (at least as large as the source) with lvcreate, then copy the snapshot directly into the new LV:

lvcreate --snapshot --name <the-name-of-the-snapshot> --size <the size> /dev/volume-group/logical-volume

lvcreate --name <logical-volume-name> --size <size> the-new-volume-group-name

dd if=/dev/volume-group/snapshot-name of=/dev/new-volume-group/new-logical-volume
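The three commands above can be collected into a helper that prints the whole sequence for review before anything touches the disks. This is a minimal sketch under assumptions: `lv_copy_plan` and its arguments are hypothetical names, the new LV is sized from the source with `blockdev --getsize64` (LVM rounds the size up to a whole extent), and the final `lvremove` of the snapshot is an added cleanup step. The function only prints commands; it executes nothing.

```shell
# Sketch: print (do not run) the commands for the snapshot + direct dd copy.
# Usage: lv_copy_plan SRC_VG SRC_LV DST_VG DST_LV SNAP_NAME SNAP_SIZE
# All names are placeholders (hypothetical); review the output before running it.
lv_copy_plan() {
    src_vg=$1 src_lv=$2 dst_vg=$3 dst_lv=$4 snap=$5 snap_size=$6

    # 1. Point-in-time snapshot of the source LV.
    printf 'lvcreate --snapshot --name %s --size %s /dev/%s/%s\n' \
        "$snap" "$snap_size" "$src_vg" "$src_lv"

    # 2. Target LV with the source size in bytes. The $(...) is printed
    #    literally and only evaluated when the saved plan is executed.
    printf 'lvcreate --name %s --size "$(blockdev --getsize64 /dev/%s/%s)b" %s\n' \
        "$dst_lv" "$src_vg" "$src_lv" "$dst_vg"

    # 3. Copy the snapshot straight into the new LV; fsync flushes at the end.
    printf 'dd if=/dev/%s/%s of=/dev/%s/%s bs=4M conv=fsync status=progress\n' \
        "$src_vg" "$snap" "$dst_vg" "$dst_lv"

    # 4. Drop the snapshot once the copy has finished.
    printf 'lvremove -y /dev/%s/%s\n' "$src_vg" "$snap"
}
```

For example, `lv_copy_plan volume-group logical-volume new-volume-group new-logical-volume lv-snap 1G > plan.sh` writes the plan to a file; after reading it, run `sh plan.sh` as root. Running it from a file instead of piping it into `sh` keeps standard input free for any confirmation prompt LVM may show.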

Solution 3

Okay, I was able to handle the situation in my own way. Here are the steps I took:

1) Take a snapshot of the logical volume you want to copy.

lvcreate --snapshot --name <the-name-of-the-snapshot> --size <the size> /dev/volume-group/logical-volume

Note: The snapshot can be as large or as small as you wish. What matters is that it has enough space to hold all changes made to the original volume while the snapshot exists; if it fills up, the snapshot becomes invalid.
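Since a full snapshot is lost, it can help to watch how full it gets while dd runs. A minimal sketch, assuming the snapshot fill level is read with `lvs -o lv_name,data_percent` (the column name is an assumption here; older LVM releases may call it `snap_percent`); `snap_usage_check` is a hypothetical helper that only parses that output.

```shell
# Sketch: warn when a classic (non-thin) snapshot is filling up.
# Reads "name percent" pairs on stdin, e.g. from (column name assumed):
#   lvs --noheadings -o lv_name,data_percent VG
# Prints every LV at or above THRESHOLD percent and returns 1 if there is any.
snap_usage_check() {
    threshold=$1
    awk -v limit="$threshold" '
        # LVs without a fill percentage (plain LVs) have only one field.
        NF == 2 && $2 + 0 >= limit + 0 { print $1 " is " $2 "% full"; hit = 1 }
        END { exit hit }
    '
}
```

For example, `lvs --noheadings -o lv_name,data_percent volume-group | snap_usage_check 80` run from another terminal during the copy; if it starts reporting, extend the snapshot with lvextend or start over with a larger one.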

2) Create an image copy of the snapshot content using dd

dd if=/dev/volume-group/snapshot-name of=/tmp/backup.img

3) Create a new logical volume, at least as large as the original, in the target (new) volume group.

lvcreate --name <logical-volume-name> --size <size> the-new-volume-group-name

4) Write data to the new logical volume from the image backup using dd

dd if=/tmp/backup.img of=/dev/new-volume-group/new-logical-volume

5) Delete the snapshot and the image backup using lvremove and rm, respectively.
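Before step 5 deletes anything, it is worth confirming that the new LV really holds the image. A minimal sketch, assuming GNU cmp (for `-n`) and `blockdev` from util-linux; `verify_copy` is a hypothetical helper name. Only the first N bytes of the destination are compared, because lvcreate rounds the new LV up to whole extents, so it can be larger than the image.

```shell
# Sketch: check that DEST starts with exactly the bytes of SOURCE.
# Usage: verify_copy SOURCE DEST   (regular files or block devices)
verify_copy() {
    src=$1 dst=$2
    if [ -b "$src" ]; then
        size=$(blockdev --getsize64 "$src")        # block device: ask the kernel
    else
        size=$(wc -c < "$src" | tr -d ' ')         # regular file: count bytes
    fi
    # GNU cmp: -n limits the comparison to the first $size bytes.
    # Exit status is 0 if identical, 1 if different, 2 on error.
    cmp -n "$size" "$src" "$dst"
}
```

Applied to the steps above: `verify_copy /tmp/backup.img /dev/new-volume-group/new-logical-volume && lvremove /dev/volume-group/snapshot-name && rm /tmp/backup.img`, so the snapshot and the image are only removed when the comparison succeeds.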

That's all, folks... Hope this helps someone :)

Solution 4

As of the LVM in Debian stretch (9.0), namely 2.02.168-2, it's possible to do a copy of a logical volume across volume groups using a combination of vgmerge, lvconvert, and vgsplit. Since a move is a combination of a copy and a delete, this will also work for a move.

Alternatively, you can use pvmove to just move the volume.

A complete self-contained example session using loop devices and lvconvert follows.

Summary: we create volume group vg1 with logical volume lv1, and vg2 with lv2, and make a copy of lv1 in vg2.

Create files.

truncate pv1 --size 100MB

truncate pv2 --size 100MB

Set up loop devices on files.

losetup /dev/loop1 pv1

losetup /dev/loop2 pv2

Create physical volumes on loop devices (initialize loop devices for use by LVM).

pvcreate /dev/loop1 /dev/loop2

Create volume groups vg1 and vg2 on /dev/loop1 and /dev/loop2 respectively.

vgcreate vg1 /dev/loop1

vgcreate vg2 /dev/loop2

Create logical volumes lv1 and lv2 on vg1 and vg2 respectively.

lvcreate -L 10M -n lv1 vg1

lvcreate -L 10M -n lv2 vg2

Create ext4 filesystems on lv1 and lv2.

mkfs.ext4 -j /dev/vg1/lv1

mkfs.ext4 -j /dev/vg2/lv2

Optionally, write something to lv1 so you can later check that the copy was created correctly. Then make vg1 inactive.

vgchange -a n vg1

Run the merge command in test mode. This merges vg1 into vg2.

vgmerge -A y -l -t -v <<destination-vg>> <<source-vg>>

vgmerge -A y -l -t -v vg2 vg1

And then for real.

vgmerge -A y -l -v vg2 vg1

Then create a RAID 1 mirror pair from lv1 using lvconvert. The <<dest-pv>> argument tells lvconvert to place the mirror copy, which becomes lv1_copy after the split, on /dev/loop2.

lvconvert --type raid1 --mirrors 1 <<source-lv>> <<dest-pv>>

lvconvert --type raid1 --mirrors 1 /dev/vg2/lv1 /dev/loop2

Then split the mirror. The new LV is now lv1_copy.

lvconvert --splitmirrors 1 --name <<source-lv-copy>> <<source-lv>>

lvconvert --splitmirrors 1 --name lv1_copy /dev/vg2/lv1

Make vg2 inactive.

vgchange -a n vg2

Then split vg1 back out of vg2, moving /dev/loop1 and the original lv1 with it (test mode first):

vgsplit -t -v <<source-vg>> <<destination-vg>> <<moved-to-pv>>

vgsplit -t -v /dev/vg2 /dev/vg1 /dev/loop1

For real

vgsplit -v /dev/vg2 /dev/vg1 /dev/loop1

Resulting output:

lvs

[...]

lv1 vg1 -wi-a----- 12.00m

lv1_copy vg2 -wi-a----- 12.00m

lv2 vg2 -wi-a----- 12.00m

NOTES:

1) Most of these commands will need to be run as root.

2) If any logical volume names are duplicated across the two volume groups, vgmerge will refuse to proceed.

3) On merge, the logical volumes in `vg1` must be inactive; on split, the logical volume `vg2/lv1` must be inactive.
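To actually check the copy and then clean up the lab, the session can end with a comparison and a teardown. This is a sketch that assumes the names used in the session above; `run`, `lab_verify`, `lab_teardown` and the `DRY_RUN` variable are hypothetical helpers, and with `DRY_RUN=1` the commands are only printed instead of executed.

```shell
# Sketch: compare lv1 with lv1_copy, then remove the loop-device lab.
# Names (vg1, vg2, lv1, lv1_copy, /dev/loop1, /dev/loop2, pv1, pv2) come
# from the session above. Set DRY_RUN=1 to print instead of execute.

# run CMD...: execute the command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Activate both VGs and compare; cmp is silent and returns 0 if identical.
lab_verify() {
    run vgchange -a y vg1 vg2
    run cmp /dev/vg1/lv1 /dev/vg2/lv1_copy
}

# Undo the session in reverse order: VGs, PVs, loop devices, backing files.
lab_teardown() {
    run vgchange -a n vg1 vg2
    run vgremove -f vg1 vg2
    run pvremove /dev/loop1 /dev/loop2
    run losetup -d /dev/loop1 /dev/loop2
    run rm -f pv1 pv2
}
```

Run as root from the directory holding pv1 and pv2: `lab_verify && lab_teardown`. Because `lab_verify` returns the exit status of `cmp`, the teardown only runs when the two LVs are identical.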

Solution 5

I will offer my own:

umount /somedir/

lvdisplay /dev/vgsource/lv0 --units b

lvcreate -L 12345b -n lv0 vgtarget

dd if=/dev/vgsource/lv0 of=/dev/vgtarget/lv0 bs=1024K conv=noerror,sync status=progress

mount /dev/vgtarget/lv0 /somedir/

If everything looks good, remove the source:

lvremove vgsource/lv0
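Instead of copying the byte count from lvdisplay into `-L 12345b` by hand, it can be extracted in a script. A sketch, assuming that with `--units b` lvdisplay prints a line of the form `LV Size <bytes> B`; `lv_size_bytes` is a hypothetical helper that only parses that text.

```shell
# Sketch: print the LV size in bytes from "lvdisplay --units b" output on stdin.
lv_size_bytes() {
    # Field 3 of "LV Size <n> B" is the byte count; a trailing "B" is
    # stripped in case the unit is printed without a space.
    awk '$1 == "LV" && $2 == "Size" { sub(/[Bb]$/, "", $3); print $3; exit }'
}

# Example (as root; names from the answer above):
#   bytes=$(lvdisplay /dev/vgsource/lv0 --units b | lv_size_bytes)
#   lvcreate -L "${bytes}b" -n lv0 vgtarget
```

`lvs --noheadings --nosuffix --units b -o lv_size /dev/vgsource/lv0` should give the same number in a form meant for scripts (with leading spaces). Separately, `conv=noerror,sync` in the dd line above makes dd continue past read errors and pad the unreadable blocks with zeros, so a failing source disk can leave a damaged copy without dd stopping.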

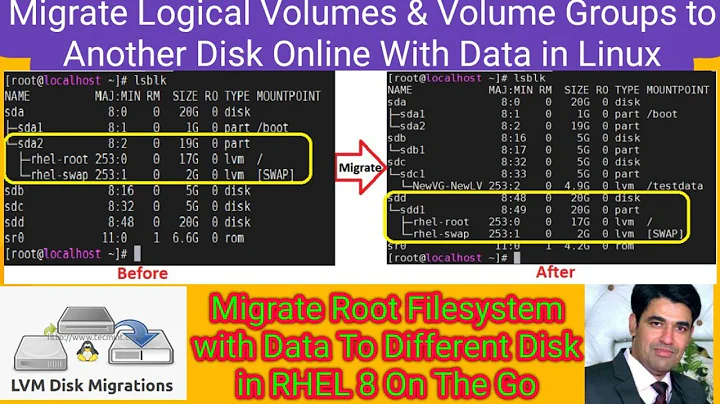

Related videos on Youtube

tonix

Updated on September 18, 2022

Comments

tonix over 1 year: Basically, I want to move or copy several logical volumes (LVs) into a new volume group (VG). The new volume group resides on a new set of physical volumes. Does anyone know how to do that safely, without damaging the data inside those logical volumes?

tonix over 12 years: If someone has a better option or method, let me know as well :-)

gorn about 8 years: This is a little too brief. It does not say what exactly the mentioned sizes are; for example, <the size> can be very small, as it only has to hold the snapshot differences.

Tobias J over 7 years: @gorn a valid point, but he was replying to nobody's answer below, which was first at the time. Read that for additional context.

user189142 over 6 years: It is just pointless. You have to unmount filesystems, deactivate volumes, etc. You may as well just unmount the directory and copy the data.

Znik over 4 years: One note: to do this, you need a temporary device to transfer the online LV to another VG. Of course, after the transfer you should update /etc/fstab and any other affected configuration, plan some downtime for a reboot, and possibly make further configuration updates. If you touch the root or boot filesystem, keep a Linux live distribution at hand to recover the main system.

Znik over 4 years: Using backup.img to temporarily store the backup is completely unnecessary. You can dd directly from the source snapshot to the destination LV while it is not mounted.

Znik over 4 years: First, as user189142 said, it is pointless. Second, it only applies when we can stop the services using the moved volume. That is a problem for services running 24/7 on a very large volume holding terabytes of data, because this procedure needs a very long maintenance window. It is much easier to create a new volume, rsync online, then do a short offline rsync to catch up, remount, and bring the system back online. Of course, it is a very good idea to wipe unneeded data from the source volume first, or temporarily move it somewhere else.

Znik over 4 years: What if the source volume is very big and the service or system shouldn't be stopped?