How to recode to UTF-8 conditionally?

Solution 1

This script, adapted from harrymc's idea, which recodes one file conditionally (based on existence of certain UTF-8 encoded Scandinavian characters), seems to work for me tolerably well.

$ cat recode-to-utf8.sh

#!/bin/sh
# Recodes specified file to UTF-8, except if it seems to be UTF-8 already

# Count lines containing UTF-8 encoded Scandinavian characters
result=$(grep -c '[åäöÅÄÖ]' "$1")

if [ "$result" -eq 0 ]
then
    echo "Recoding $1 from ISO-8859-1 to UTF-8"
    recode ISO-8859-1..UTF-8 "$1" # overwrites file
else
    echo "$1 was already UTF-8 (probably); skipping it"
fi

(Batch processing files is of course a simple matter of e.g. for f in *.txt; do recode-to-utf8.sh "$f"; done.)

NB: this totally depends on the script file itself being UTF-8. And as this is obviously a very limited solution, suited to the kind of files I happen to have, feel free to add better answers which solve the problem in a more generic way.
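One way to avoid depending on the script file's own encoding is to search for explicit UTF-8 byte sequences instead of literal characters. The following is only a sketch of the same heuristic in Python; the names `MARKERS`, `seems_utf8_already` and `recode_if_needed` are made up for illustration, and the character set simply mirrors the grep pattern above.

```python
# Sketch of the Solution 1 heuristic (hypothetical helper names).
# Unicode escapes are used so the result does not depend on how this
# source file itself is encoded.
MARKERS = [ch.encode("utf-8") for ch in "\u00e5\u00e4\u00f6\u00c5\u00c4\u00d6"]  # å ä ö Å Ä Ö


def seems_utf8_already(data: bytes) -> bool:
    """True if any of the marker characters appears in UTF-8 form."""
    return any(marker in data for marker in MARKERS)


def recode_if_needed(data: bytes) -> bytes:
    """Recode from ISO-8859-1 to UTF-8 unless the data already looks like UTF-8."""
    if seems_utf8_already(data):
        return data
    return data.decode("iso-8859-1").encode("utf-8")
```

Like the shell version, this is a heuristic for one particular set of files, not a general encoding detector.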

Solution 2

This question is quite old, but I think I can contribute to this problem:

First create a script named recodeifneeded:

#!/bin/bash
# Usage: recodeifneeded <target-encoding> <file>

# Find the current encoding of the file
encoding=$(file -i "$2" | sed "s/.*charset=\(.*\)$/\1/")

if [ "$1" != "${encoding}" ]
then
    # Encodings differ, we have to recode
    echo "recoding from ${encoding} to $1 file : $2"
    recode "${encoding}..$1" "$2"
fi

You can use it this way:

recodeifneeded utf-8 file.txt

So, if you want to run it recursively and change the encoding of all *.txt files to (let's say) utf-8:

find . -name "*.txt" -exec recodeifneeded utf-8 {} \;

I hope this helps.
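For anyone who prefers to drive this from Python, here is a rough sketch of the same decision logic. It only parses a line of `file -i` output and compares charsets; the function names and the sample line are hypothetical, and the case-insensitive comparison is my own assumption (the shell script above compares the strings exactly).

```python
import re
from typing import Optional


def charset_from_file_output(line: str) -> Optional[str]:
    """Extract the value after 'charset=' from a line of `file -i` output."""
    match = re.search(r"charset=(\S+)", line)
    return match.group(1) if match else None


def should_recode(target: str, detected: Optional[str]) -> bool:
    """Recode only when a charset was detected and it differs from the target."""
    if detected is None:
        return False
    # Assumption: treat 'UTF-8' and 'utf-8' as the same encoding name.
    return detected.lower() != target.lower()


sample = "file.txt: text/plain; charset=iso-8859-1"  # illustrative line only
print(should_recode("utf-8", charset_from_file_output(sample)))
```

The actual conversion would still be handed to recode or a similar tool, and only if the detected charset is one that tool understands.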

Solution 3

UTF-8 has strict rules about which byte sequences are valid. This means that if data could be UTF-8, you'll rarely get false positives if you assume that it is.

So you can do something like this (in Python):

def convert_to_utf8(data):
    # data is a byte string (bytes in Python 3)
    try:
        data.decode('UTF-8')
        return data  # was already UTF-8
    except UnicodeError:
        return data.decode('ISO-8859-1').encode('UTF-8')

In a shell script, you can use iconv to perform the conversion, but you'll need a means of detecting UTF-8. One way is to use iconv with UTF-8 as both the source and destination encodings. If the file was valid UTF-8, the output will be the same as the input.
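To apply the validity check to files on disk, something along these lines might work. It is a sketch under stated assumptions: the names `is_valid_utf8` and `recode_file_to_utf8` are mine, the fallback source encoding is assumed to be ISO-8859-1, and the file is overwritten in place (as recode does), so try it on copies first.

```python
from pathlib import Path


def is_valid_utf8(data: bytes) -> bool:
    """Return True if the bytes decode cleanly as UTF-8."""
    try:
        data.decode("utf-8")
        return True
    except UnicodeDecodeError:
        return False


def recode_file_to_utf8(path: Path, fallback: str = "iso-8859-1") -> bool:
    """Rewrite the file as UTF-8 if it is not valid UTF-8 already.

    Returns True if the file was rewritten, False if it was left alone.
    """
    data = path.read_bytes()
    if is_valid_utf8(data):
        return False
    path.write_bytes(data.decode(fallback).encode("utf-8"))
    return True


if __name__ == "__main__":
    # Batch usage: convert every *.txt file below the current directory.
    for txt in Path(".").rglob("*.txt"):
        if recode_file_to_utf8(txt):
            print(f"recoded {txt}")
```

Running it a second time over the same files should change nothing, since everything is valid UTF-8 by then.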

Solution 4

I'm a bit late, but I've struggled with this same question again and again... Now that I've found a great way to do it, I can't help but share it :)

Despite being an emacs user, I'll recommend that you use vim today.

This simple command recodes your file to the desired encoding, no matter what's inside:

vim +'set nobomb | set fenc=utf8 | x' <filename>

I have never found anything that gives me better results than this.

I hope it will help some others.

Solution 5

ISO-8859-1 and UTF-8 are identical for the first 128 characters (the ASCII range), so your problem is really how to detect files that contain funny characters, meaning characters encoded with byte values of 128 and above.

If the number of funny characters is not excessive, you could use egrep to scan and find out which files need recoding.
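The scan for funny characters could also be done in Python instead of egrep. This is just a sketch with made-up function names; it only tells you which data contains bytes of 128 or above, not which encoding those bytes are in.

```python
def has_non_ascii(data: bytes) -> bool:
    """True if any byte is 0x80 (128) or above, i.e. outside the ASCII range."""
    return any(byte >= 0x80 for byte in data)


def count_non_ascii(data: bytes) -> int:
    """Number of bytes outside the ASCII range."""
    return sum(1 for byte in data if byte >= 0x80)


print(has_non_ascii(b"plain text"))      # pure ASCII: same bytes in both encodings
print(has_non_ascii(b"p\xe4iv\xe4"))     # ISO-8859-1 text containing 'ä'
```

Files for which `has_non_ascii` is false are byte-for-byte identical in ISO-8859-1 and UTF-8, so they need no recoding at all.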

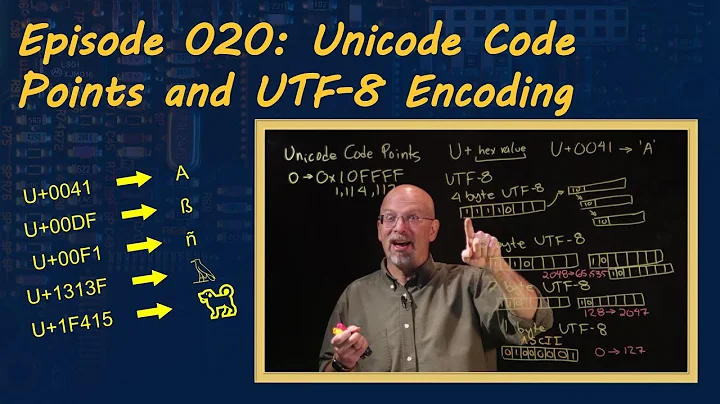

Related videos on Youtube

Updated on September 17, 2022

Comments

-

Assaf Levy almost 2 years

I'm unifying the encoding of a large bunch of text files, gathered over time on different computers. I'm mainly going from ISO-8859-1 to UTF-8. This nicely converts one file:

recode ISO-8859-1..UTF-8 file.txt

I of course want to do automated batch processing for all the files, and simply running the above for each file has the problem that files that are already encoded in UTF-8 will have their encoding broken. (For instance, the character 'ä' originally in ISO-8859-1 will appear like this, viewed as UTF-8, if the above recode is done twice:

� -> ä -> Ã¤)

My question is, what kind of script would run recode only if needed, i.e. only for files that weren't already in the target encoding (UTF-8 in my case)?

From looking at recode man page, I couldn't figure out how to do something like this. So I guess this boils down to how to easily check the encoding of a file, or at least if it's UTF-8 or not. This answer implies you could recognise valid UTF-8 files with recode, but how? Any other tool would be fine too, as long as I could use the result in a conditional in a bash script...
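The breakage from recoding twice can be reproduced in a few lines of Python. This is only an illustration of the problem described above; `recode_latin1_to_utf8` is a hypothetical stand-in for what `recode ISO-8859-1..UTF-8` does to a file's bytes.

```python
def recode_latin1_to_utf8(data: bytes) -> bytes:
    """Blindly treat the input as ISO-8859-1 and re-encode it as UTF-8."""
    return data.decode("iso-8859-1").encode("utf-8")


latin1 = "\u00e4".encode("iso-8859-1")   # 'ä' as the single byte 0xE4
once = recode_latin1_to_utf8(latin1)     # correct UTF-8: bytes C3 A4
twice = recode_latin1_to_utf8(once)      # double-encoded: bytes C3 83 C2 A4

print(once.decode("utf-8"))    # ä
print(twice.decode("utf-8"))   # Ã¤
```

The second pass reads each of the two UTF-8 bytes as a separate ISO-8859-1 character, which is why one character turns into two.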

-

Assaf Levy over 14 years

Note: I've looked at questions like superuser.com/questions/27060/… and they do not provide an answer for this particular question.

-

Assaf Levy over 14 years

Indeed, in my case the "funny characters" are mostly just åäö (+ uppercase) used in Finnish. It's not quite that simple, but I could adapt this idea... I'm using a UTF-8 terminal, and grepping for e.g. 'ä' finds it only in files that are already UTF-8 (i.e. the very files I want to skip)! So I should do the opposite: recode files where grep finds none of [äÄöÖåÅ]. Sure, for some of these files (pure ASCII) recoding's not necessary, but it doesn't matter either. Anyway, this way I'd perhaps get all files to be UTF-8 without breaking those that already were. I'll test this some more...

-

Assaf Levy almost 14 years

Thanks, seems useful - I'll try this the next time I'm batch converting text files

-

Bruno da Cunha about 9 years

Only solution that works regardless of the original encoding.

-

mwfearnley over 4 years

Some encodings are just detected as data.