How To Reduce Python Script Memory Usage

Solution 1

Organizing:

Your Python script does seem to be huge, so consider reorganizing the code first by splitting it into several modules or packages. That will probably make profiling and optimization easier.

You may want to have a look there:

And possibly:

- SO: Python: What is the common header format?

- How do you organize Python modules?

- The Hitchhiker's Guide to Packaging

Optimizing:

There are a lot of things that can be done to optimize your code...

For instance, regarding your data structures: if you make heavy use of lists or list comprehensions, try to figure out where you really need lists and where they could be replaced by immutable data structures like tuples, or by lazy containers like generator expressions, which produce items on demand instead of storing them all.

See:

- SO: Are tuples more efficient than lists in Python?

- SO: Generator Expressions vs. List Comprehension

- PEP 255 - Simple Generators and PEP 289 - Generator Expressions
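As a rough illustration of the list, tuple and generator point above, the sketch below compares container sizes with `sys.getsizeof`. The sample size `n` and the variable names are made up for the example, and the exact byte counts depend on the Python version and platform, so treat the numbers as indicative only.

```python
import sys

n = 100_000  # arbitrary sample size, chosen only for illustration

squares_list = [i * i for i in range(n)]   # materializes every element
squares_tuple = tuple(squares_list)        # immutable, sized exactly
squares_gen = (i * i for i in range(n))    # lazy: produces items on demand

# getsizeof reports the container itself, not the integers it references.
print("list :", sys.getsizeof(squares_list))
print("tuple:", sys.getsizeof(squares_tuple))
print("gen  :", sys.getsizeof(squares_gen))

# A generator can feed an aggregate without ever building the full list.
total = sum(i * i for i in range(n))
```

The generator stays small whatever `n` is, but it can only be iterated once, so it fits best where the data is consumed in a single pass.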

On these pages, you could find some useful information and tips:

- http://wiki.python.org/moin/PythonSpeed

- http://wiki.python.org/moin/PythonSpeed/PerformanceTips

- http://wiki.python.org/moin/TimeComplexity

- http://scipy.org/PerformancePython

Also, look at how you do things and ask whether there is a less greedy way, one that is better suited to Python (you will find some tips in the tag pythonic). That matters especially in Python because there is often one "obvious" way to do something that is better than the others (see The Zen of Python); that way is said to be pythonic. This is not only about the shape of your code but also, and above all, about performance. Unlike many languages, which promote the idea that there should be many ways to do anything, Python prefers to focus on the best way only. There are of course many ways to do something, but often one really is better.

You should also check that you are using the best algorithms for the job, because writing pythonic code won't fix your algorithms for you.

In the end, it depends on your code, and it is difficult to answer without having seen it.

Also, make sure to take into account the comments made by eumiro and Amr.

Solution 2

This video might give you some good ideas: http://pyvideo.org/video/451/pycon-2011---quot-dude--where--39-s-my-ram--quot-

Solution 3

The advice on generator expressions and making use of modules is good. Premature optimization causes problems, but you should always spend a few minutes thinking about your design before sitting down to write code, particularly if that code is meant to be reused.

Incidentally, you mention that you have a lot of data structures defined at the top of your script, which implies that they're all loaded into memory at the start. If this is a very large dataset, consider moving specific datasets to separate files and loading them only as needed (using the csv module, numpy.loadtxt(), etc.).
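A minimal sketch of the "load only as needed" idea, using the standard `csv` module. The helper name `iter_rows` and the throwaway data file are assumptions made for this example, not something from the original script.

```python
import csv
import os
import tempfile

def iter_rows(path):
    """Yield one parsed CSV row at a time instead of loading the whole file."""
    with open(path, newline="") as handle:
        for row in csv.reader(handle):
            yield row

# Build a small throwaway file so the sketch is self-contained.
with tempfile.NamedTemporaryFile("w", suffix=".csv", delete=False, newline="") as tmp:
    csv.writer(tmp).writerows([[34, 78], [43, 12], [99, 1]])
    data_path = tmp.name

# Each row is read from disk only when the loop asks for it.
row_sums = [sum(int(cell) for cell in row) for row in iter_rows(data_path)]
os.remove(data_path)
print(row_sums)
```

With a real dataset the file would already exist on disk, and the script would call something like `iter_rows` only in the code paths that actually need that data.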

Apart from using less memory, also look into ways to use memory more efficiently. For example, for large sets of numeric data, numpy arrays are a way of storing information that will provide better performance in your calculations. There is some slightly dated advice at http://wiki.python.org/moin/PythonSpeed/PerformanceTips
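To give a feel for why typed arrays are more compact than lists, here is a dependency-free sketch. Note the substitution: it uses the standard library's `array` module as a stand-in for numpy, which is a third-party package. The storage idea is similar (one typed buffer instead of a list of separate Python objects), but `array` does not offer numpy's vectorized operations. The size `n` and the variable names are illustrative.

```python
import sys
from array import array

n = 100_000  # arbitrary size for illustration

values_list = [float(i) for i in range(n)]
values_array = array("d", values_list)   # "d" = C double, 8 bytes per item

# A list stores pointers to separate float objects, so count both.
list_bytes = sys.getsizeof(values_list) + sum(sys.getsizeof(v) for v in values_list)
array_bytes = sys.getsizeof(values_array)

print("list of floats:", list_bytes)
print("array('d')    :", array_bytes)
```

The exact figures vary by interpreter, so measure on your own data before restructuring anything.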

Solution 4

Moving functions around won't change your memory usage. As soon as you import that other module, it will define all the functions in the module. But functions don't take up much memory. Are they extremely repetitive? If so, perhaps you can reduce the amount of code by refactoring them.

@eumiro's question is right: are you sure your script uses too much memory? How much memory does it use, and why is it too much?

Solution 5

If you're taking advantage of OOP and have some objects, say:

    class foo:
        def __init__(self, lorem, ipsum):
            self.lorem = lorem
            self.ipsum = ipsum

        # some happy little methods

You can have the object take up less memory by putting in:

__slots__ = ("lorem", "ipsum")

right before the __init__ function, as shown:

    class foo:
        __slots__ = ("lorem", "ipsum")

        def __init__(self, lorem, ipsum):
            self.lorem = lorem
            self.ipsum = ipsum

        # some happy little methods

Of course, "premature optimization is the root of all evil". Also, profile memory usage before and after the addition to see whether it actually makes a difference. Beware that this can (shockingly) break code, for example code that sets attributes not listed in __slots__, so go in with the understanding that it might end up not working.
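One possible way to do that before-and-after check is the standard `tracemalloc` module. This is only a sketch: the class names `Plain` and `Slotted`, the helper `allocated_bytes`, and the instance count are all invented for the example, and the size of the saving depends on the Python version.

```python
import tracemalloc

class Plain:
    def __init__(self, lorem, ipsum):
        self.lorem = lorem
        self.ipsum = ipsum

class Slotted:
    __slots__ = ("lorem", "ipsum")

    def __init__(self, lorem, ipsum):
        self.lorem = lorem
        self.ipsum = ipsum

def allocated_bytes(cls, count=50_000):
    """Return bytes traced while building `count` instances of `cls`."""
    tracemalloc.start()
    before, _ = tracemalloc.get_traced_memory()
    instances = [cls(i, i) for i in range(count)]  # kept alive until measured
    after, _ = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return after - before

plain_bytes = allocated_bytes(Plain)
slotted_bytes = allocated_bytes(Slotted)
print("without __slots__:", plain_bytes)
print("with __slots__   :", slotted_bytes)
```

If the two numbers are close for your real classes, the change is probably not worth the loss of flexibility.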

Coo Jinx

Updated on July 09, 2022

Comments

-

Coo Jinx almost 2 years

I have a very large python script, 200K, that I would like to use as little memory as possible. It looks something like:

    # a lot of data structures
    r = [34, 78, 43, 12, 99]

    # a lot of functions that I use all the time
    def func1(word):
        return len(word) + 2

    # a lot of functions that I rarely use
    def func1(word):
        return len(word) + 2

    # my main loop
    while 1:
        # lots of code
        # calls functions

If I put the functions that I rarely use in a module, and import them dynamically only if necessary, I can't access the data. That's as far as I've gotten.

I'm new at python.

Can anyone put me on the right path? How can I break this large script down so that it uses less memory? Is it worth putting rarely used code in modules and only calling them when needed?

-

eumiro almost 12 years

Are you sure it uses too much memory?

-

Amr almost 12 years

Remember that "Premature optimization is the root of all evil".

-

Jeff Tratner almost 12 years

In terms of your functions issue, have you checked whether your functions are referring to global variables? If they are (and presumably the data isn't defined in that module), you could either: 1. add a parameter to each function to take in whatever global variable it needs, or 2. define all the functions within a class, pass the global variables to __init__, and rewrite the functions to access the globals as self.<variable name>.

-

martineau almost 12 years

If your script file is that large, then it sounds like either you're using extremely long variable names everywhere and have lots of comments in the code, or more likely you're doing something very wrong or at best inefficiently. Unfortunately it's doubtful that anyone will be able to give you much help based just on the vague description you've given of your code. Time to get specific (and accept some answers)!

-

-

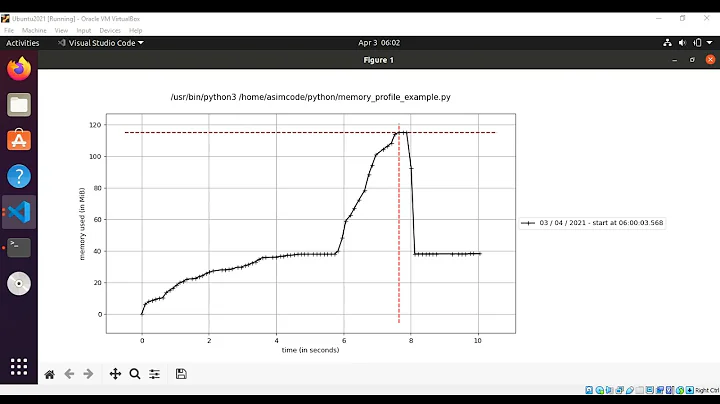

Levon almost 12 years

Do you know of any good way to determine the amount of memory some snippet of Python code takes? It's easy to use timeit for speed comparisons, so I'm looking for something that will allow me to determine/characterize memory consumption. Just curious if there's something as simple.

-

cedbeu almost 12 years

memory_profiler is pretty useful and easy to use for quick debugging. You can also try meliae (step-by-step how-to) or heapy for more complete solutions. Good discussion here and some interesting estimation methods here.

-

cedbeu almost 12 years

I think you are more looking for something like the memory_profiler module I mentioned, though.

-

Levon almost 12 years

Thanks for the information, I favorited this question so that I can come back to it and follow up on the links you mentioned. Much appreciated.