How to resolve backend conn errors on an HAProxy load balancer?

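Your backend allows only about 50 concurrent connections (`maxconn 50`; the four servers are also capped at `maxconn 12` each, 48 slots in total), so HAProxy enqueues the overflow until one of the existing connections finishes. Each time a connection closes, another one is allowed through to the back-end... but your back-end is not draining them fast enough. Requests back up at the proxy, and eventually the proxy tears them down after they have sat in the proxy queue for `timeout connect` (60s). Because no `timeout queue` is set for this backend, HAProxy uses the `timeout connect` value as the queue timeout.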
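To put rough numbers on "not fast enough": by Little's law (L = λW), a pool of N connection slots, each held for W seconds on average, can absorb at most N / W new connections per second; anything arriving faster piles up in the queue until it hits the queue timeout. The sketch below is a back-of-the-envelope check, not a model of HAProxy's scheduler. It assumes 48 slots (four servers at maxconn 12), treats every load-test request as a new TCP connection (an assumption; JMeter may reuse connections), and takes about 67 s as a hold time from the second log line in the question (Tt - Tw = 127171 - 60022 ms). The 2 s hold time is purely hypothetical, for comparison, and the function names are illustrative.

```python
def max_sustainable_rate(slots: int, mean_hold_s: float) -> float:
    """Little's law (L = lambda * W) solved for lambda: the highest rate of new
    connections that `slots` concurrent slots can absorb when each connection
    holds its slot for `mean_hold_s` seconds on average."""
    if slots <= 0 or mean_hold_s <= 0:
        raise ValueError("slots and mean_hold_s must be positive")
    return slots / mean_hold_s


def queue_growth_per_s(arrival_rate: float, slots: int, mean_hold_s: float) -> float:
    """Approximate rate at which the proxy queue grows; 0.0 if the servers keep up.
    Ignores entries removed from the queue by the queue timeout."""
    return max(0.0, arrival_rate - max_sustainable_rate(slots, mean_hold_s))


if __name__ == "__main__":
    SLOTS = 4 * 12          # assumption: four servers, maxconn 12 each
    ARRIVAL_RATE = 12.5     # assumption: ~12-13 requests/s, one new connection each
    # 2.0 s is a hypothetical short hold time; ~67 s is Tt - Tw from the
    # second log line (127171 ms - 60022 ms).
    for hold_s in (2.0, 67.0):
        capacity = max_sustainable_rate(SLOTS, hold_s)
        growth = queue_growth_per_s(ARRIVAL_RATE, SLOTS, hold_s)
        print(f"hold {hold_s:5.1f} s -> capacity {capacity:6.2f}/s, "
              f"queue grows by {growth:5.2f}/s")
```

With a 2 s hold time the four servers keep up easily; with connections pinned for about a minute, as in the second log entry, capacity drops below one new connection per second. In tcp mode a client connection keeps its server slot for its whole lifetime, so shortening how long each connection holds a slot matters as much as raising maxconn.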

The `sQ` termination state in the first log entry explains this:

s: the server-side timeout expired while waiting for the server to send or receive data.

Q: the proxy was waiting in the QUEUE for a connection slot. This can only happen when servers have a 'maxconn' parameter set.

See Session State at Disconnection in the HAProxy configuration guide.
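In tcplog output the termination state is two characters: the first says what ended the session, the second which phase it was in. A minimal Python sketch that decodes it, assuming you only care about those two characters; the tables are deliberately partial (the two codes quoted above plus a few common ones), and `FIRST_CHAR`, `SECOND_CHAR` and `decode_termination_state` are hypothetical helper names, not part of HAProxy. Check the Session State at Disconnection section for your version for the full list.

```python
from typing import Tuple

# Deliberately partial tables: the codes quoted above plus a few common ones
# from "Session State at Disconnection". Check your HAProxy version's guide
# for the complete list.
FIRST_CHAR = {
    "C": "the client unexpectedly aborted the session",
    "S": "the server unexpectedly aborted or explicitly refused the session",
    "c": "the client-side timeout expired",
    "s": "the server-side timeout expired",
    "-": "normal session completion",
}
SECOND_CHAR = {
    "Q": "waiting in the queue for a connection slot",
    "C": "waiting for the connection to the server to be established",
    "D": "in the data phase",
    "-": "normal session completion after end of data transfer",
}


def decode_termination_state(state: str) -> Tuple[str, str]:
    """Explain the two-character termination state of a tcplog line, e.g. 'sQ'."""
    if len(state) < 2:
        raise ValueError(f"expected at least two characters, got {state!r}")
    cause = FIRST_CHAR.get(state[0], f"unknown code {state[0]!r}")
    phase = SECOND_CHAR.get(state[1], f"unknown code {state[1]!r}")
    return cause, phase


if __name__ == "__main__":
    for code in ("sQ", "--"):
        cause, phase = decode_termination_state(code)
        print(f"{code}: {cause} / {phase}")
```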

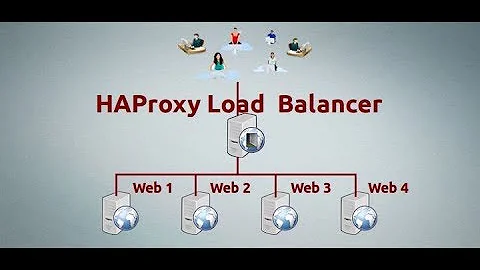


sushrut619

Updated on September 18, 2022

Comments

-

sushrut619 over 1 year

The following is my HAProxy config:

```
global
    log /dev/log local0 notice
    log /dev/log local0 debug
    log 127.0.0.1 local0
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    maxconn 5000

defaults
    log global
    mode tcp
    option tcplog
    option tcpka
    timeout connect 60s
    timeout client 1000s
    timeout server 1000s

frontend aviator-app
    option tcplog
    log /dev/log local0 debug
    bind *:4433 ssl crt /etc/haproxy/certs/{domain_name}.pem
    mode tcp
    option clitcpka
    option http-server-close
    maxconn 5000
    default_backend aviator-app-pool

    # Table definition
    stick-table type ip size 2000k expire 30s store conn_cur

    # Allow clean known IPs to bypass the filter
    tcp-request connection accept if { src -f /etc/haproxy/whitelist.lst }

    # Shut the new connection as long as the client has already 100 opened
    # tcp-request connection reject if { src_conn_cur ge 500 }
    tcp-request connection track-sc1 src

backend aviator-app-pool
    option tcplog
    log /dev/log local0 debug
    balance roundrobin
    mode tcp
    option srvtcpka
    option http-server-close
    maxconn 50

    # list each server
    server appserver1 10.0.1.205 maxconn 12
    server appserver2 10.0.1.183 maxconn 12
    server appserver3 10.0.1.75 maxconn 12
    server appserver4 10.0.1.22 maxconn 12
    # end of list

listen stats
    bind *:8000
    mode http
    log global
    maxconn 10
    clitimeout 100s
    srvtimeout 100s
    contimeout 100s
    timeout queue 100s
    stats enable
    stats hide-version
    stats refresh 30s
    stats show-node
    stats auth username:password
    stats uri /haproxy?stats
```

When I run a load test at about 12-13 HTTP requests per second, I see no errors for roughly the first hour. About 90 minutes into the test, however, a large number of requests start failing. The JMeter error message usually reads "Connection timed out: Connect" or '"domain_name:4433" failed to respond.' I also see a large number of 'conn errors' (highlighted in the image above) and some 'resp errors'. The following are some log messages from haproxy.log:

```
May 7 19:15:00 ip-10-0-0-206 haproxy[30349]: 64.112.179.79:55894 [07/May/2018:19:14:00.488] aviator-app~ aviator-app-pool/<NOSRV> 60123/-1/60122 0 sQ 719/718/717/0/0 0/672
May 7 19:15:00 ip-10-0-0-206 haproxy[30349]: 64.112.179.79:49905 [07/May/2018:19:12:53.483] aviator-app~ aviator-app-pool/appserver2 60022/1/127171 2283568 -- 719/718/716/11/0 0/666
```

I do not see any errors or stack traces on my backend servers. During the test, CPU usage stays low: under 10% on the load balancer and about 30% on each backend server. Any help debugging this issue is appreciated.
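Each log line above uses HAProxy's `option tcplog` layout: client address and port, accept date, frontend (the `~` suffix marks an SSL frontend), backend/server, the timers Tw/Tc/Tt (ms spent waiting in the queue, ms to connect to the server, total session time), bytes read, the termination state, the counters actconn/feconn/beconn/srv_conn/retries, and the queue lengths srv_queue/backend_queue. A minimal Python sketch for pulling those fields out, assuming the default tcplog layout with a syslog prefix in front (a custom `log-format` would need a different pattern); `parse_tcplog` is a hypothetical helper name.

```python
import re
from typing import Dict, Union

# Default `option tcplog` layout; a custom log-format needs a different pattern.
TCPLOG_RE = re.compile(
    r"(?P<client_ip>\S+):(?P<client_port>\d+) "
    r"\[(?P<accept_date>[^\]]+)\] "
    r"(?P<frontend>\S+) (?P<backend>[^/\s]+)/(?P<server>\S+) "
    r"(?P<Tw>-?\d+)/(?P<Tc>-?\d+)/(?P<Tt>\+?\d+) "
    r"(?P<bytes_read>\+?\d+) (?P<state>\S+) "
    r"(?P<actconn>\d+)/(?P<feconn>\d+)/(?P<beconn>\d+)/"
    r"(?P<srv_conn>\d+)/(?P<retries>\+?\d+) "
    r"(?P<srv_queue>\d+)/(?P<backend_queue>\d+)"
)

NUMERIC_FIELDS = (
    "client_port", "Tw", "Tc", "Tt", "bytes_read", "actconn", "feconn",
    "beconn", "srv_conn", "retries", "srv_queue", "backend_queue",
)


def parse_tcplog(line: str) -> Dict[str, Union[str, int]]:
    """Split one tcplog entry into named fields; timers are in milliseconds."""
    match = TCPLOG_RE.search(line)  # search() skips the syslog prefix
    if match is None:
        raise ValueError("not an HAProxy tcplog entry")
    fields: Dict[str, Union[str, int]] = dict(match.groupdict())
    for name in NUMERIC_FIELDS:
        fields[name] = int(fields[name])  # int() accepts "-1" and "+123"
    return fields


if __name__ == "__main__":
    queued = ("May 7 19:15:00 ip-10-0-0-206 haproxy[30349]: 64.112.179.79:55894 "
              "[07/May/2018:19:14:00.488] aviator-app~ aviator-app-pool/<NOSRV> "
              "60123/-1/60122 0 sQ 719/718/717/0/0 0/672")
    f = parse_tcplog(queued)
    print(f["server"], f["Tw"], f["state"], f["backend_queue"])
```

Applied to the first line, this shows a session that waited 60123 ms in the queue with a backend queue of 672, was never assigned a server (`<NOSRV>`), and ended with `sQ`. The second line waited 60022 ms, just under the 60 s limit, then held a slot on appserver2 (11 concurrent connections on that server) for about 67 s while transferring 2283568 bytes.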

-

sushrut619 almost 6 years

What would be the best way to resolve the issue? Should I increase the backend maxconn limit, increase the timeout, or add more backend server instances? According to the monitoring console, CPU usage on the individual backend servers does not exceed 30% and memory usage is not very high either, so I would prefer not to add more instances unless it is the only way.

-

Michael - sqlbot almost 6 years

It really depends on why (or whether) your servers can't handle more traffic than this. Why are you using `mode tcp` for what looks like it might be HTTPS traffic? (I'm guessing.) Why is `maxconn 50`?

-

sushrut619 almost 6 years

Yes, most of our requests are HTTPS. However, we are restricted to tcp mode because the device firmware sends some malformed HTTP requests and cannot easily be changed. Do you think switching to http mode will improve performance?

-

Michael - sqlbot almost 6 years

Usually, `mode http` is a much better option, because back-end connections can be either reused or released quickly while waiting for the client's next request. `option accept-invalid-http-request` might relax the parser enough that broken clients can still be supported... it depends on the nature of the error.